
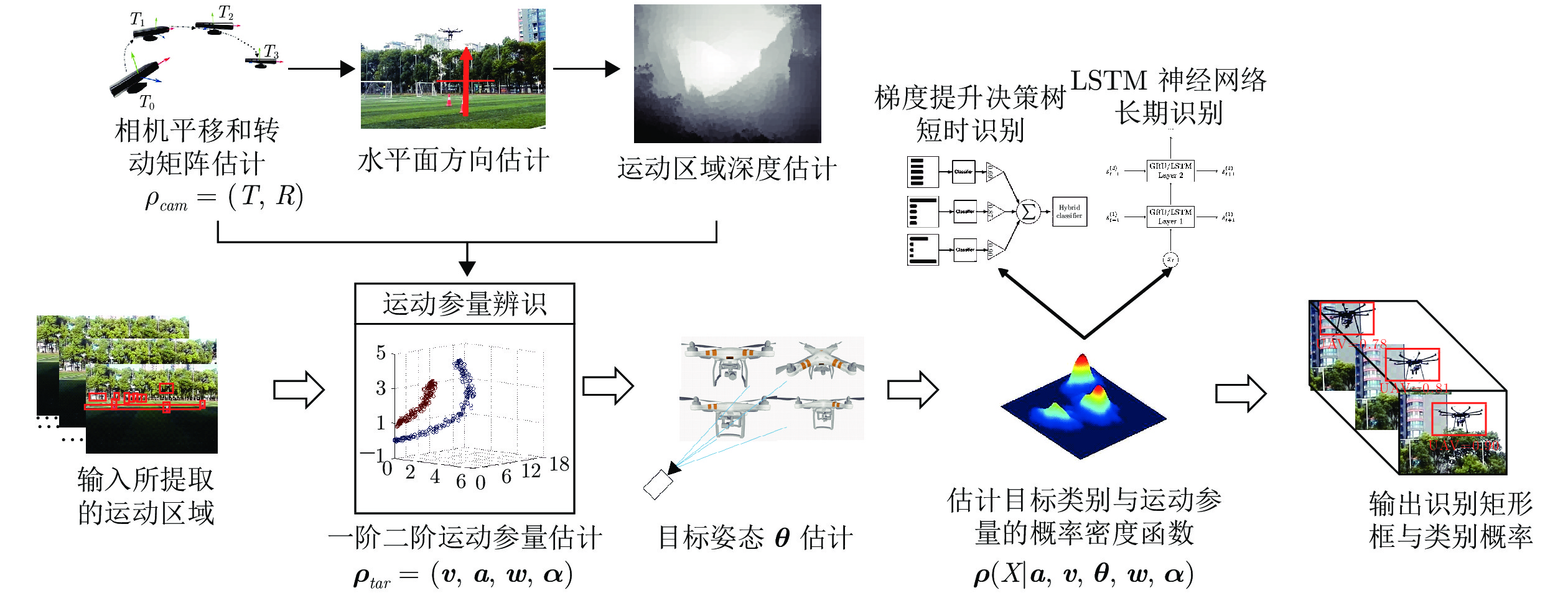

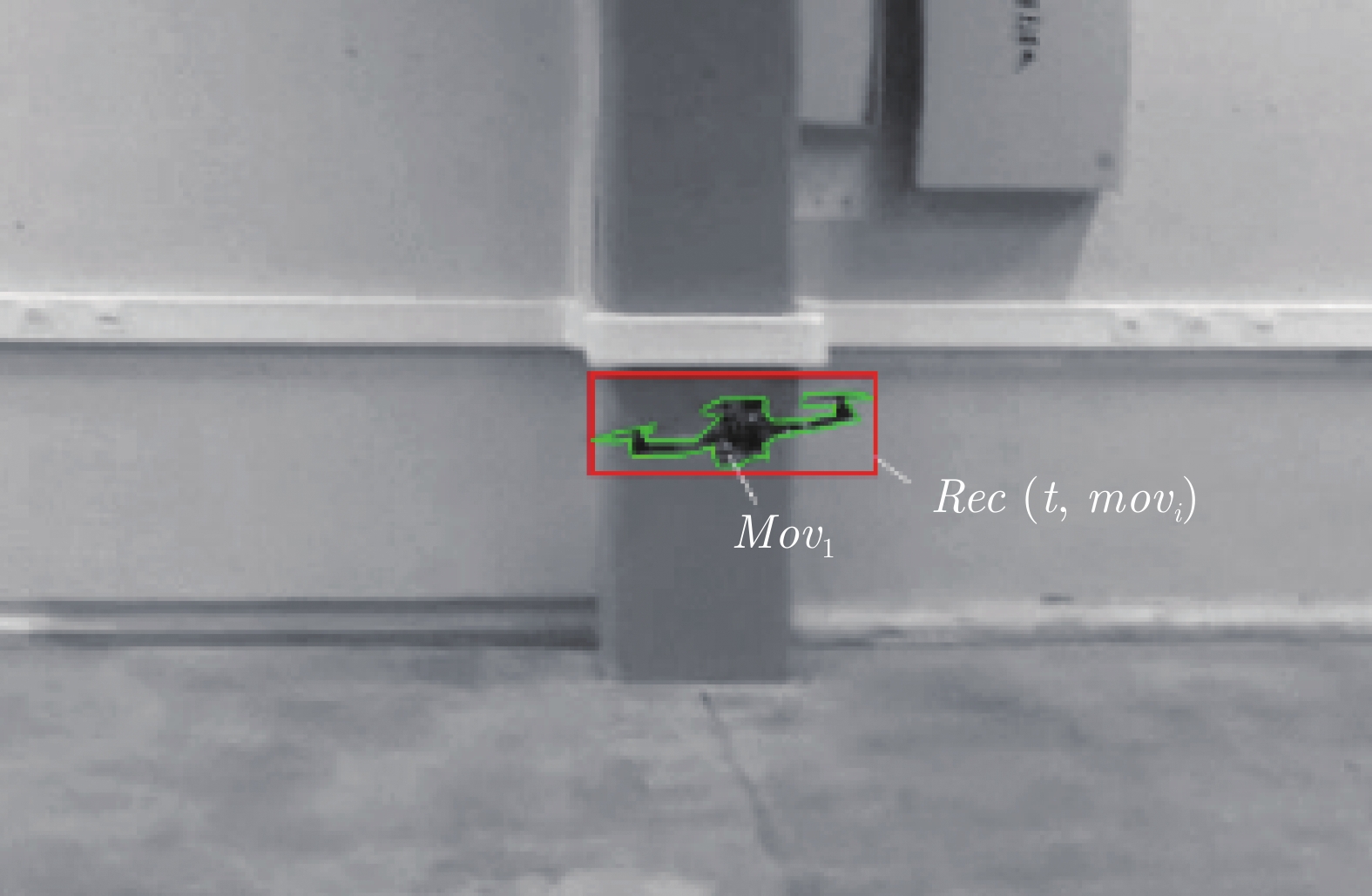

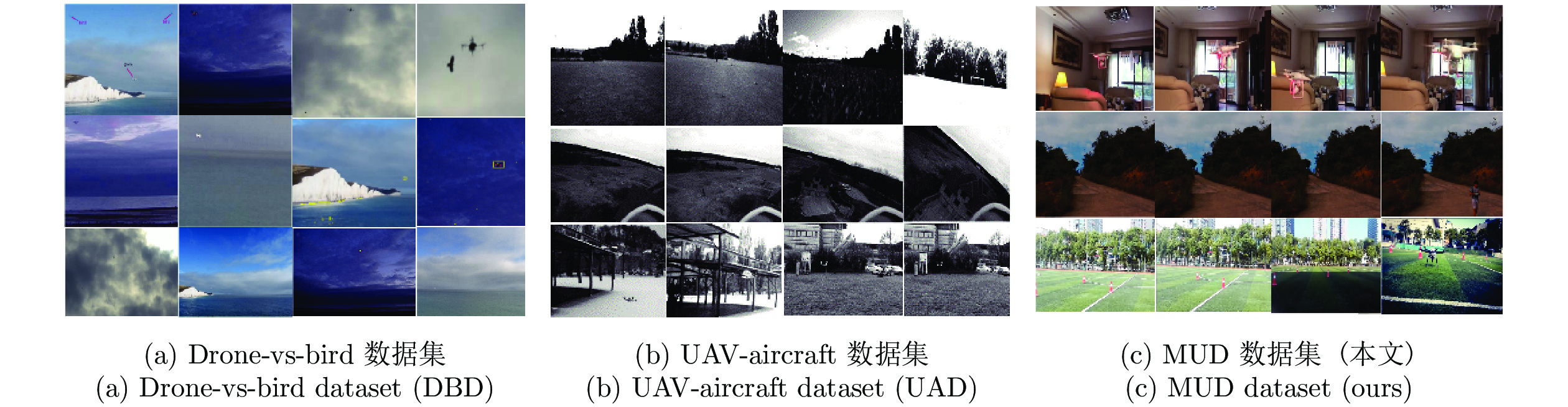

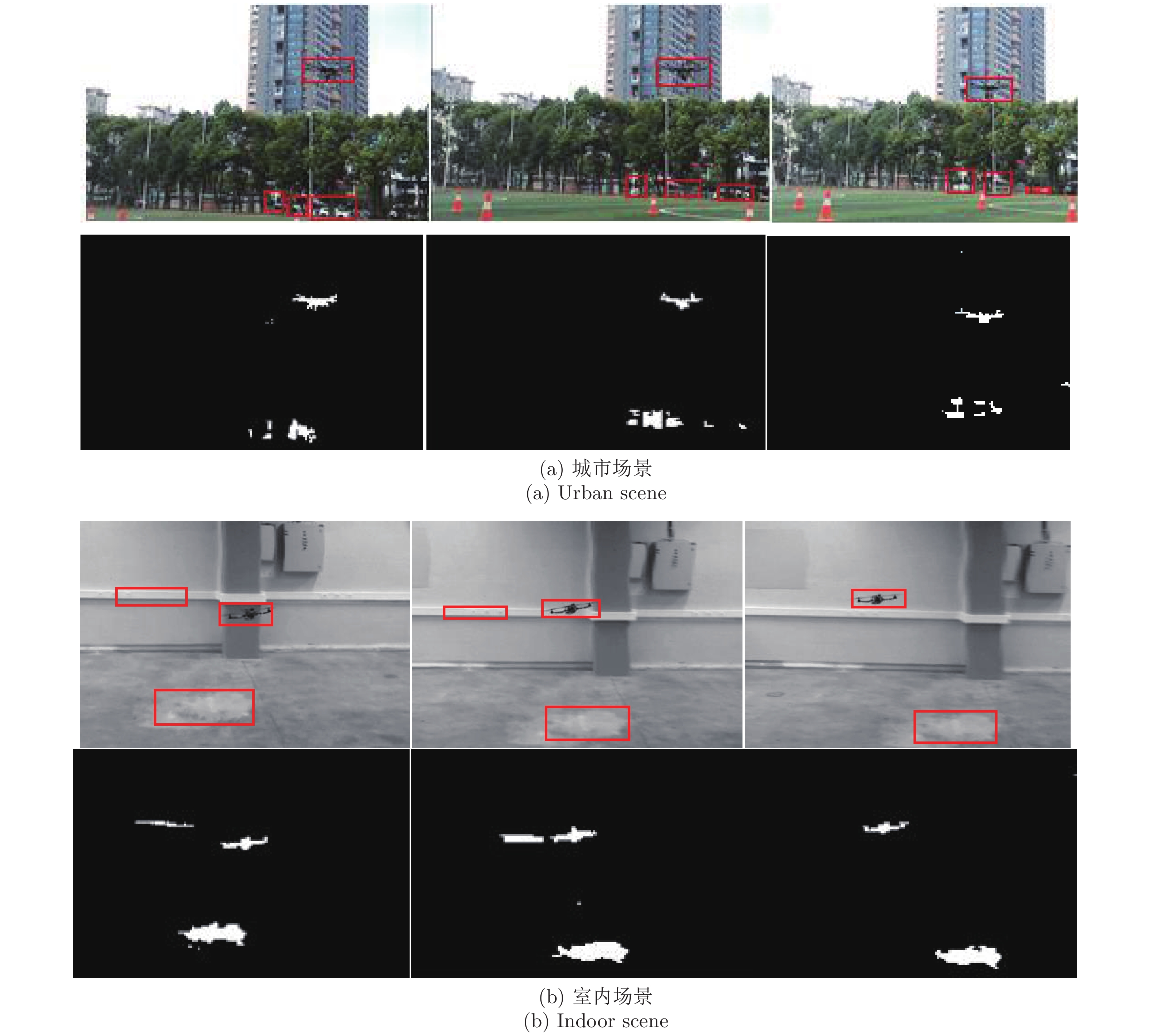

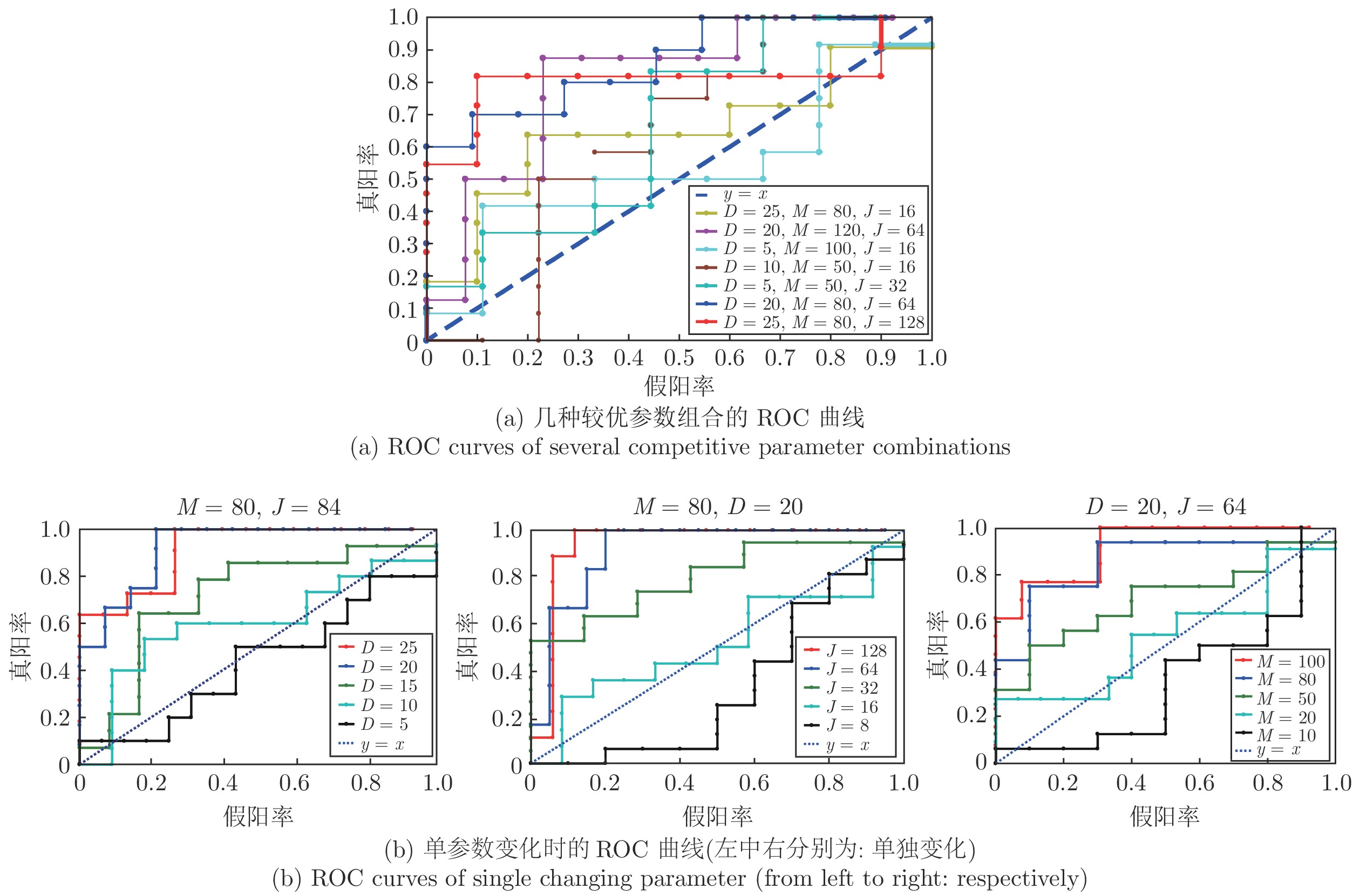

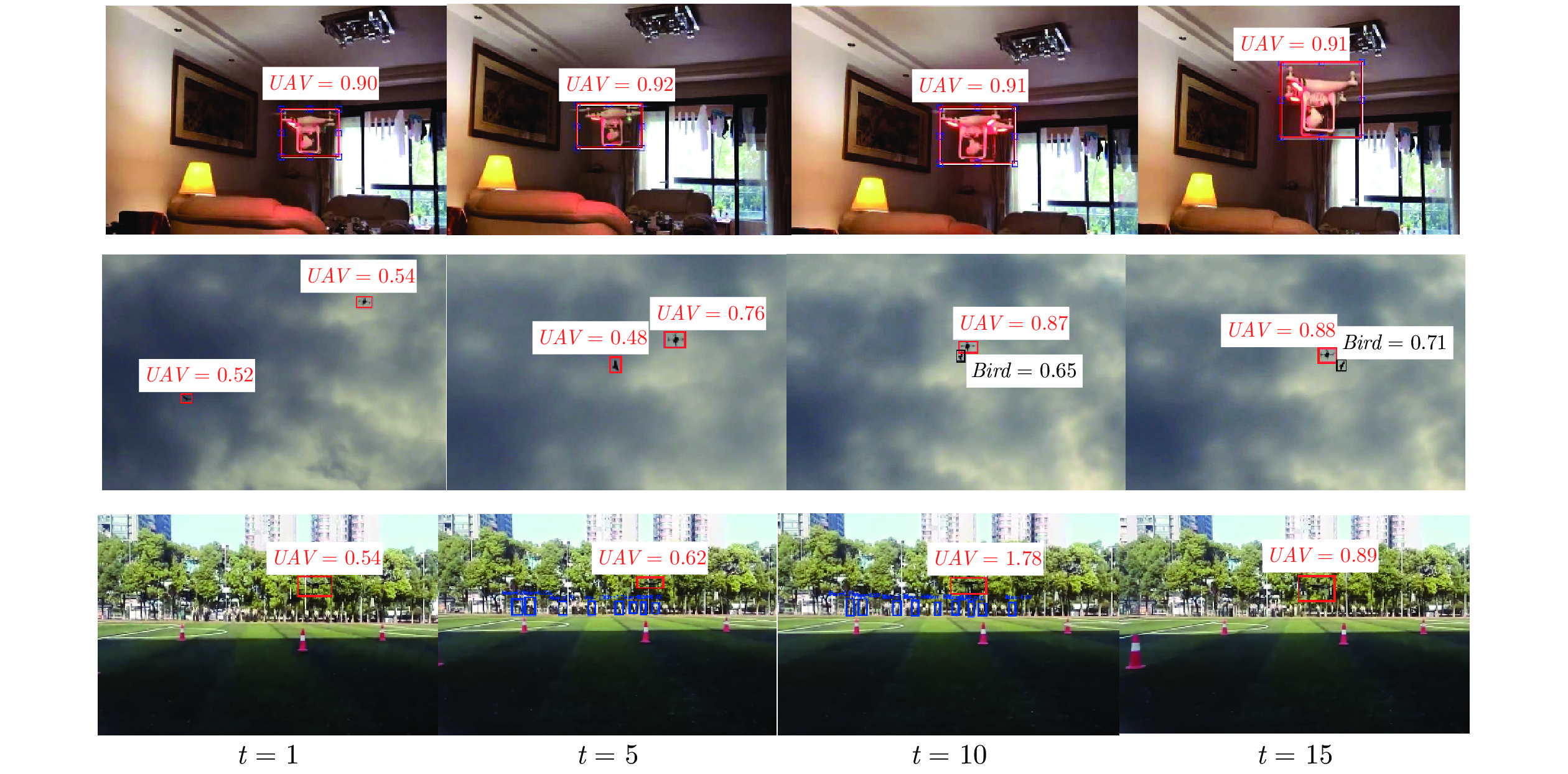

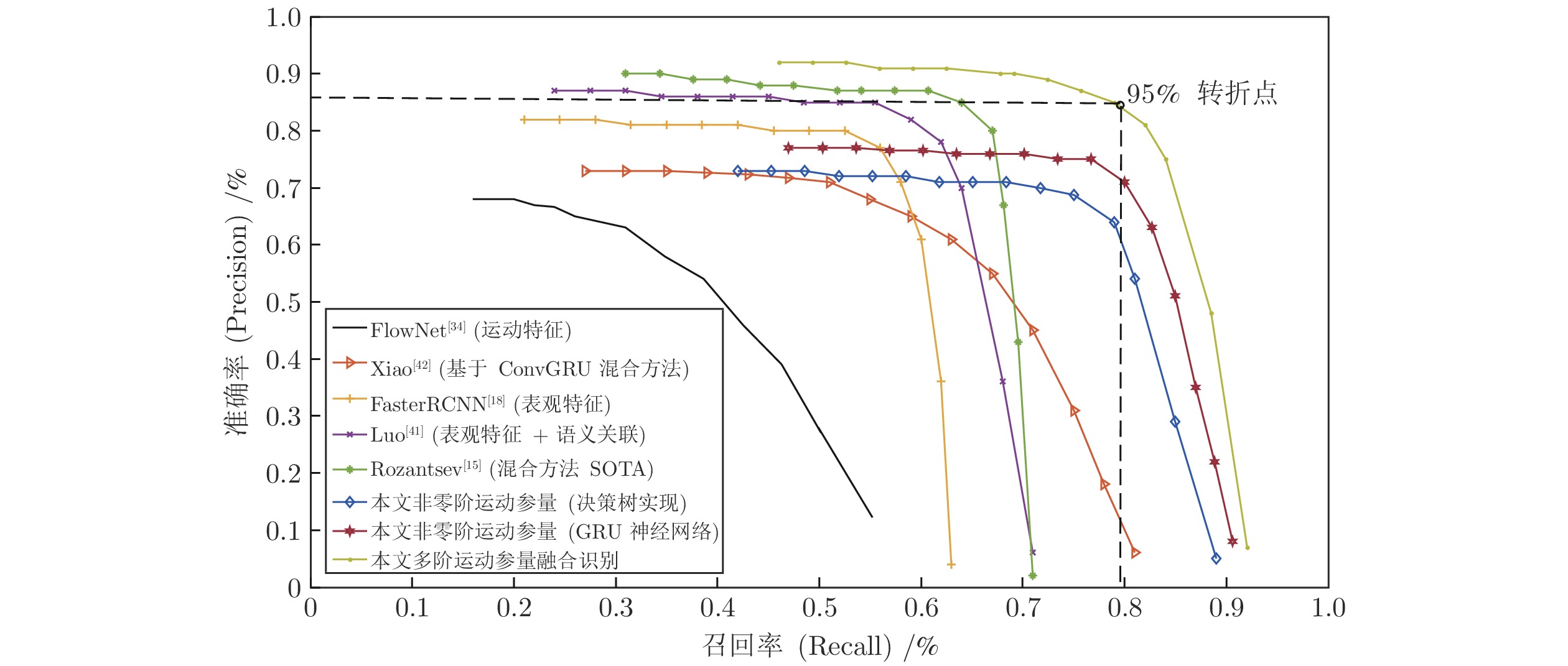

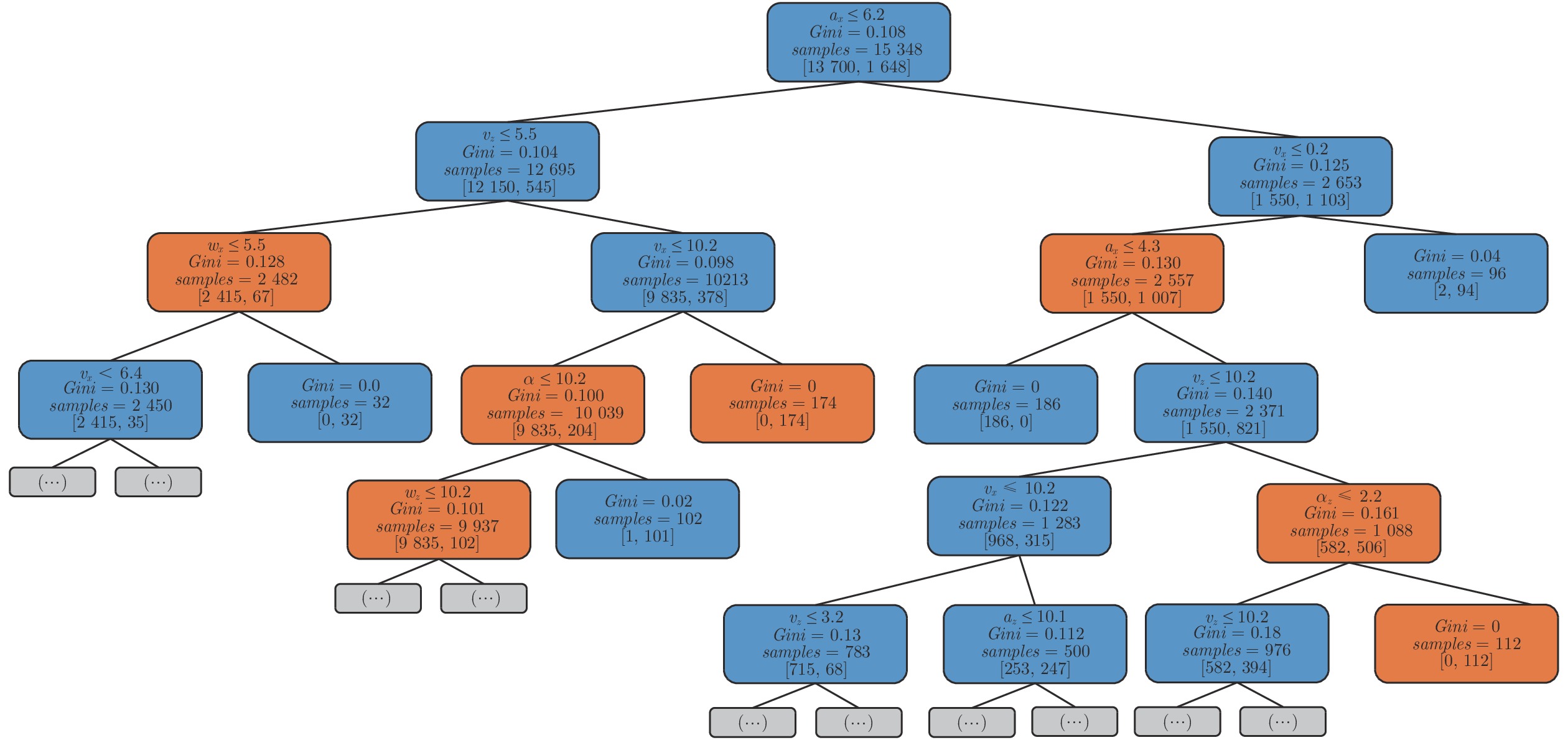

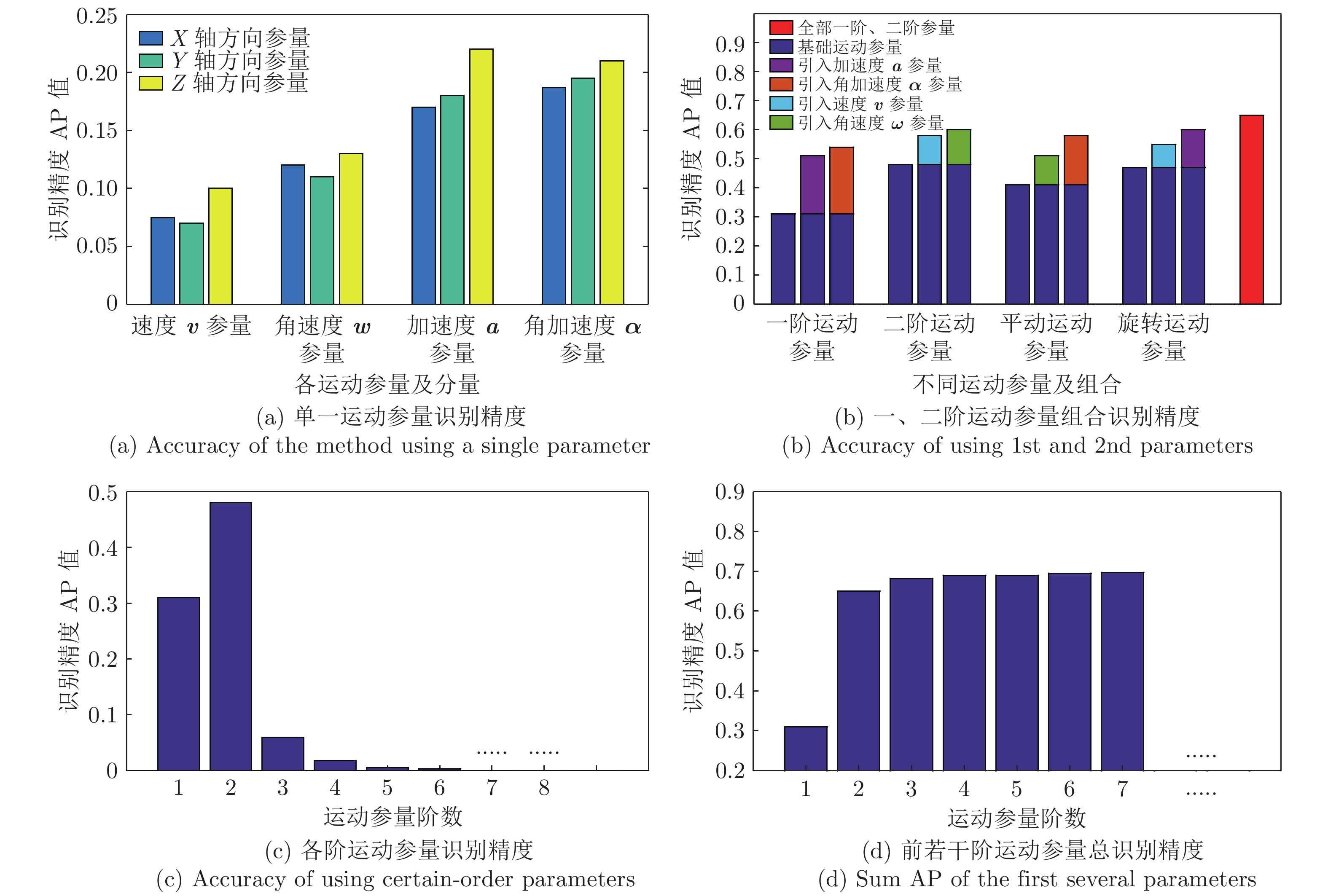

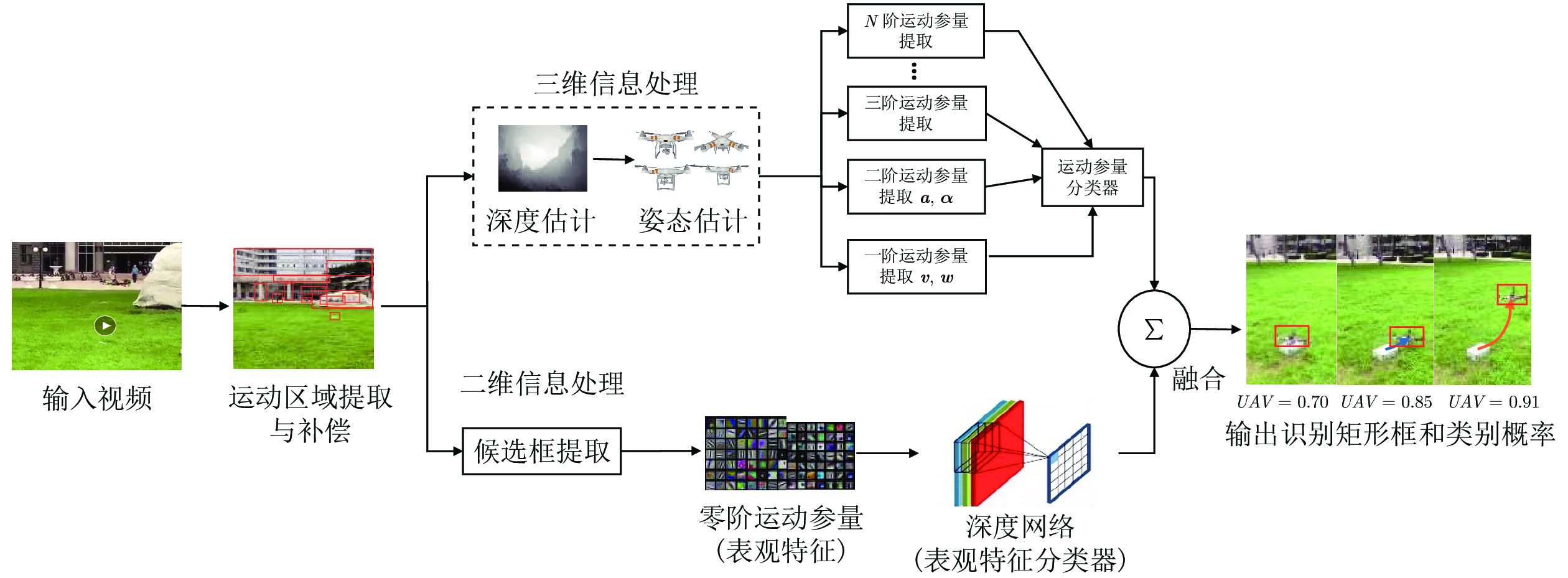

Abstract: "Low, slow and small" targets, typified by small multi-rotor unmanned aerial vehicles (UAVs) such as quadrotors, are difficult to detect by conventional means, yet they pose a serious threat to certain critical facilities. Recognizing such targets has therefore become an urgent problem. Unlike previous work that applies deep learning methods directly, this paper proposes a UAV recognition method based on target motion features, and shows that second-order motion parameters and motion parameters along the gravity direction are the key parameters for UAV recognition. The method first extracts multi-order kinematic parameters of each candidate target, such as velocity, acceleration, angular velocity and angular acceleration. It then builds a gradient boosting decision tree (GBDT) for short-term recognition and a gated recurrent unit (GRU) memory network for long-term recognition, and finally fuses the appearance-based recognition result with the motion-based result to obtain the final decision. In addition, a comprehensive multi-scale UAV dataset (MUD) is built. On this dataset, the proposed method raises the average precision (AP) by 103% relative to the traditional motion-feature-based method, and by 26% for the fusion method.
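The multi-order kinematic parameters named in the abstract can be illustrated with a minimal finite-difference sketch. It assumes a 3D position track sampled at a fixed interval with Z pointing vertically upward; the function names, the forward-difference scheme and the sample track are illustrative assumptions, not the authors' implementation. Rotational parameters are left out because this page does not give their definitions.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def finite_difference(samples: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Forward difference of 3D samples taken every dt seconds."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return [
        tuple((b[i] - a[i]) / dt for i in range(3))
        for a, b in zip(samples, samples[1:])
    ]


def kinematic_parameters(positions: Sequence[Vec3], dt: float):
    """Return (velocity, acceleration) sequences from a position track.

    Assumption: Z is the gravity (vertical) axis, pointing upward.
    """
    velocity = finite_difference(positions, dt)      # first-order parameter
    acceleration = finite_difference(velocity, dt)   # second-order parameter
    return velocity, acceleration


# Hypothetical track: constant speed along X plus free fall along Z.
dt = 0.5
track = [(2.0 * k * dt, 0.0, 10.0 - 0.5 * 9.8 * (k * dt) ** 2) for k in range(5)]
vel, acc = kinematic_parameters(track, dt)
print("first velocity sample:", vel[0])
print("first acceleration sample:", acc[0])
```

In this toy track the only non-zero second-order component lies along Z, which is the kind of gravity-direction signal the abstract singles out.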

Key words: quadrotors, object detection, motion feature, fusion method

Table 1 Comparison of the data collected in this work with other moving-object datasets

Table 2 Main equipment used to acquire the multi-scale UAV dataset (MUD)

| Device | Parameters | Accuracy |
| --- | --- | --- |
| Camera | SONY A7 ILCE-7M2, $6\,000 \times 4\,000$ pixels, FE 24 ~ 240 mm, F 3.5 ~ 6.3 | - |
| GPS | GPS/GLONASS dual mode | Vertical $\pm 0.5\;{\rm{m}}$, horizontal $\pm 1.5\;{\rm{m}}$ |
| Laser rangefinder | SKIL Xact 0530, 0 ~ 80 m | $\pm 0.2\;{\rm{mm}}$ |

Table 3 Performance comparison of moving-object region (motion ROI) extraction algorithms

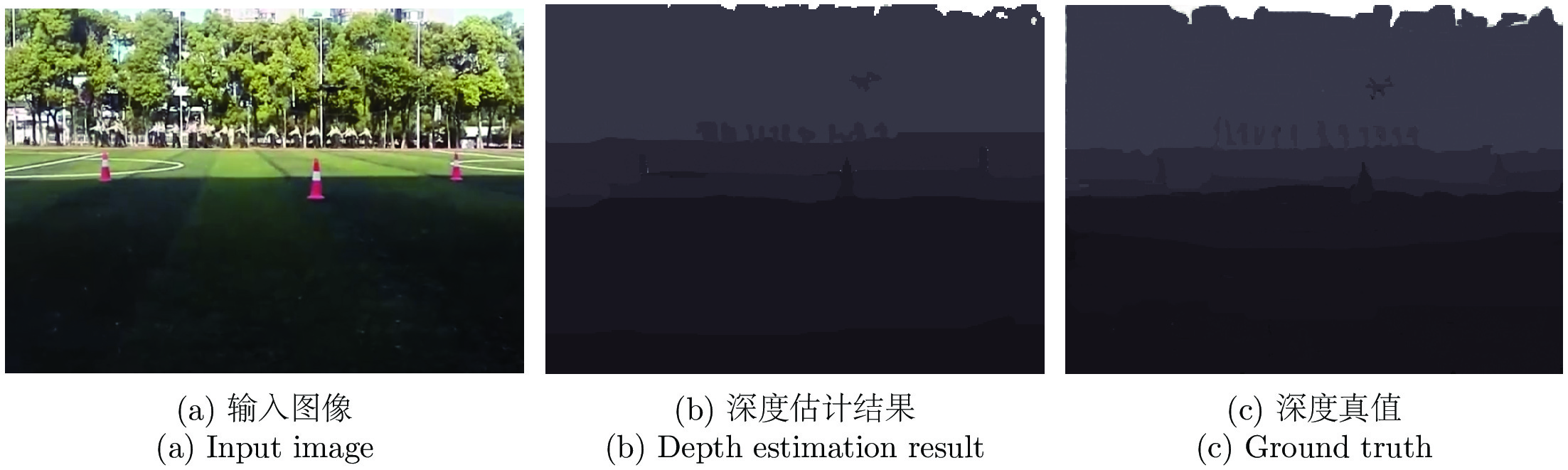

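Table 4 Errors of different depth estimation methods

| Method | Detection range | Absolute error | Squared error | Root mean square error | $\delta < 1.25$ | $\delta < 1.25^2$ | $\delta < 1.25^3$ |
| --- | --- | --- | --- | --- | --- | --- | --- |
| DORN[51] | 0 ~ 100 m | 0.103 | 0.321 | 9.014 | 0.832 | 0.875 | 0.922 |
| GeoNet[63] | 0 ~ 100 m | 0.280 | 2.813 | 14.312 | 0.817 | 0.849 | 0.895 |
| Stereo vision[64] | 0 ~ 100 m | 0.062 | 1.210 | 0.821 | 0.573 | 0.642 | 0.692 |
| Laser ranging | 0 ~ 200 m | 0.041 | 2.452 | 1.206 | 0.875 | 0.932 | 0.961 |
| DORN[51] | 200 ~ 500 m | 0.216 | 1.152 | 13.021 | 0.672 | 0.711 | 0.748 |
| GeoNet[63] | 200 ~ 500 m | 0.398 | 5.813 | 18.312 | 0.617 | 0.649 | 0.696 |
| Stereo vision[64] | 200 ~ 500 m | 0.786 | 5.210 | 25.821 | 0.493 | 0.532 | 0.562 |
| Laser ranging | 200 ~ 500 m | 0.078 | 3.152 | 2.611 | 0.891 | 0.918 | 0.935 |

| Parameter | Description |
| --- | --- |
| ${\boldsymbol v}$ | Velocity |
| ${\boldsymbol a}$ | Acceleration |
| ${\boldsymbol \omega}$ | Angular velocity |
| ${\boldsymbol \alpha}$ | Angular acceleration |
| X-axis component direction | Same as the $u$ axis of the image-plane coordinate system |
| Y-axis component direction | Same as the $v$ axis of the image-plane coordinate system |
| Z-axis component direction | Vertically upward (along the plumb line) |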
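The error columns of Table 4 follow the usual layout of depth-estimation benchmarks. The sketch below shows how such columns are commonly computed, assuming the relative forms of the absolute and squared errors and the ratio-threshold accuracy $\delta = \max(\hat d / d,\ d / \hat d)$; this page does not state the exact formulas used, so these definitions, the function name and the sample depths are assumptions.

```python
import math
from typing import Dict, Sequence


def depth_errors(pred: Sequence[float], gt: Sequence[float]) -> Dict[str, float]:
    """Common depth-evaluation metrics for positive depths (same unit)."""
    if len(pred) != len(gt) or len(gt) == 0:
        raise ValueError("pred and gt must be non-empty and equally long")
    if min(pred) <= 0 or min(gt) <= 0:
        raise ValueError("depths must be positive")
    n = len(gt)
    pairs = list(zip(pred, gt))
    metrics = {
        # Assumed relative forms; the source may use a different normalisation.
        "abs_rel": sum(abs(p - g) / g for p, g in pairs) / n,
        "sq_rel": sum((p - g) ** 2 / g for p, g in pairs) / n,
        "rmse": math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n),
    }
    # Threshold accuracy: share of samples whose ratio stays below 1.25**k.
    ratios = [max(p / g, g / p) for p, g in pairs]
    for k in (1, 2, 3):
        metrics[f"delta_{k}"] = sum(r < 1.25 ** k for r in ratios) / n
    return metrics


# Hypothetical depths in metres, not taken from the paper.
gt_depth = [10.0, 20.0, 40.0, 80.0]
pred_depth = [11.0, 20.0, 60.0, 80.0]
result = depth_errors(pred_depth, gt_depth)
print({name: round(value, 3) for name, value in result.items()})
```

Higher is better for the three threshold columns, while lower is better for the error columns, which is why a method can rank well on one group and poorly on the other.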

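Table 6 Confusion matrix of MokiP recognition using GBDT (columns: ground truth; rows: prediction)

| Prediction \ Ground truth | Rotor UAV | Bird | Pedestrian | Vehicle | Other objects |
| --- | --- | --- | --- | --- | --- |
| Rotor UAV | 0.67 | 0.25 | 0.02 | 0.01 | 0.12 |
| Bird | 0.21 | 0.58 | 0.01 | 0.00 | 0.10 |
| Pedestrian | 0.01 | 0.02 | 0.75 | 0.06 | 0.09 |
| Vehicle | 0.01 | 0.00 | 0.10 | 0.80 | 0.08 |
| Other objects | 0.10 | 0.15 | 0.12 | 0.13 | 0.61 |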
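The Table 6 confusion matrix can be read programmatically. The sketch below assumes that columns hold the ground-truth class and rows the predicted class, which is consistent with every column summing to 1; under that reading the diagonal is the per-class recall. The English class labels and helper names are illustrative.

```python
from typing import Dict, List

CLASSES = ["rotor UAV", "bird", "pedestrian", "vehicle", "other"]

# Rows: predicted class; columns: ground-truth class (values from Table 6).
MATRIX = [
    [0.67, 0.25, 0.02, 0.01, 0.12],
    [0.21, 0.58, 0.01, 0.00, 0.10],
    [0.01, 0.02, 0.75, 0.06, 0.09],
    [0.01, 0.00, 0.10, 0.80, 0.08],
    [0.10, 0.15, 0.12, 0.13, 0.61],
]


def column_sums(matrix: List[List[float]]) -> List[float]:
    """Sum each ground-truth column; each should be close to 1."""
    return [sum(row[j] for row in matrix) for j in range(len(matrix[0]))]


def recall_per_class(matrix: List[List[float]]) -> Dict[str, float]:
    """With column-normalised entries, the diagonal is the recall."""
    return {name: matrix[i][i] for i, name in enumerate(CLASSES)}


def most_confused_with(matrix: List[List[float]], true_index: int) -> str:
    """Wrong prediction that absorbs the largest share of a true class."""
    candidates = [
        (matrix[i][true_index], CLASSES[i])
        for i in range(len(CLASSES))
        if i != true_index
    ]
    return max(candidates)[1]


print([round(s, 2) for s in column_sums(MATRIX)])
print(recall_per_class(MATRIX))
print("true UAVs are most often predicted as:", most_confused_with(MATRIX, 0))
```

The UAV and bird columns are each other's largest source of error, which matches the motivation for adding a long-term GRU stage and appearance fusion on top of the short-term GBDT result.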

Table 7 Comparison of performance indices for different detection methods

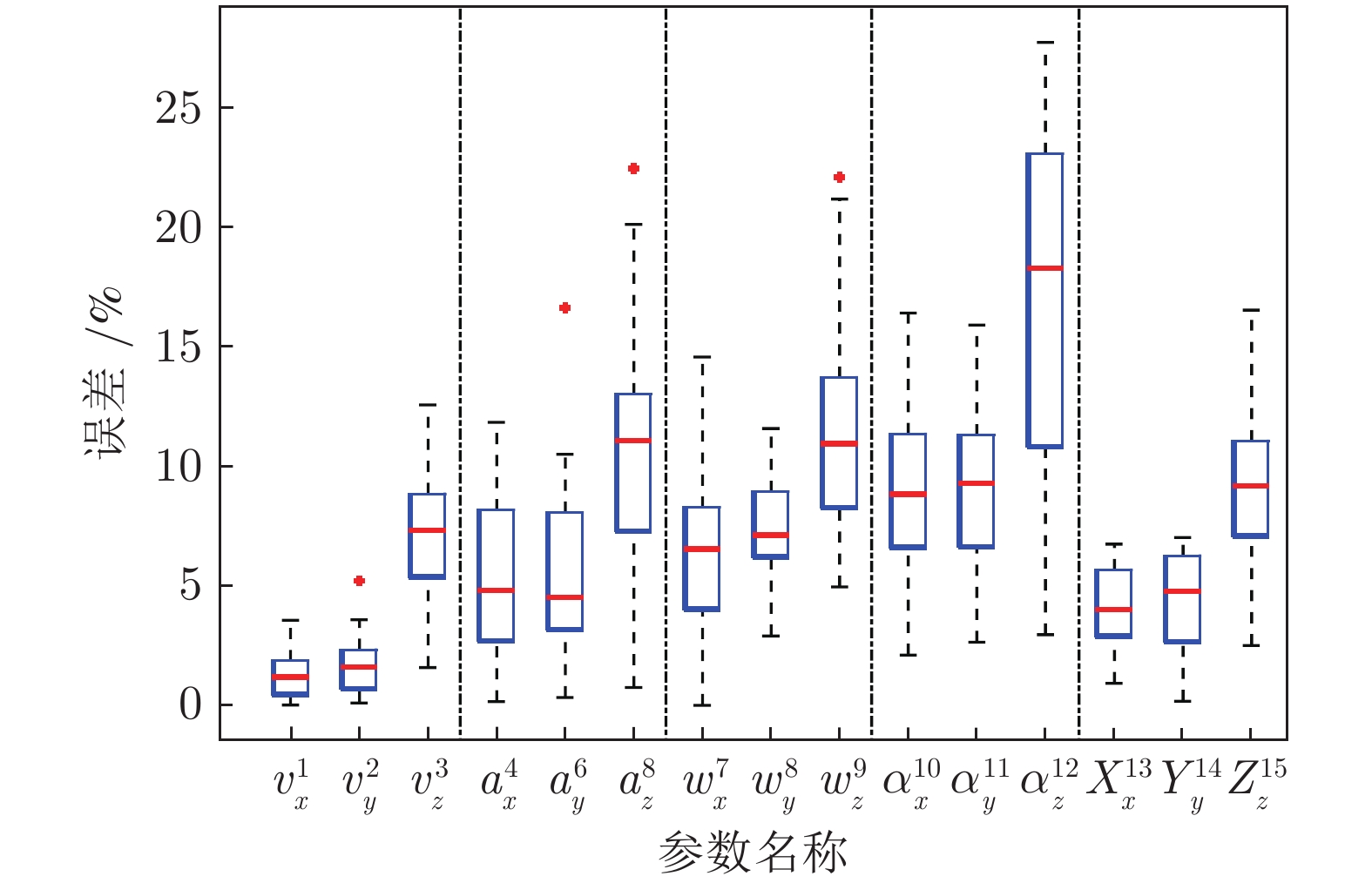

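Table 8 Impact of parameter properties on UAV detection

| Parameter contribution $D$ | Translational parameters | Rotational parameters | Total contribution |
| --- | --- | --- | --- |
| First-order parameters | 7.2% | 20.1% | 27.3% |
| Second-order parameters | 34.1% | 38.6% | 72.7% |
| Total contribution | 41.3% | 58.7% | 100% |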
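The totals in Table 8 are simple marginals of the four inner cells. The short sketch below recomputes them from the published cell values; the dictionary layout and function name are illustrative choices, and how the contribution $D$ itself is derived from the model is not described on this page.

```python
from typing import Dict, Tuple

# Inner cells of Table 8, in percent: (order, motion type) -> contribution D.
CONTRIBUTION: Dict[Tuple[str, str], float] = {
    ("first-order", "translational"): 7.2,
    ("first-order", "rotational"): 20.1,
    ("second-order", "translational"): 34.1,
    ("second-order", "rotational"): 38.6,
}


def marginal(table: Dict[Tuple[str, str], float], axis: int) -> Dict[str, float]:
    """Sum contributions over the other key component (0: order, 1: motion)."""
    totals: Dict[str, float] = {}
    for key, value in table.items():
        totals[key[axis]] = totals.get(key[axis], 0.0) + value
    return totals


by_order = marginal(CONTRIBUTION, 0)
by_motion = marginal(CONTRIBUTION, 1)
print({name: round(value, 1) for name, value in by_order.items()})
print({name: round(value, 1) for name, value in by_motion.items()})
```

The second-order row carries close to three quarters of the total contribution, which is the basis for the abstract's statement that second-order motion parameters are key.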

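Table 9 Impact of parameter direction on UAV detection

| Parameter contribution $D$ | Along X axis | Along Y axis | Along Z axis | Total contribution |
| --- | --- | --- | --- | --- |
| Translational parameters | 8.3% | 8.8% | 24.2% | 41.3% |
| Rotational parameters | 18.7% | 18.8% | 22.2% | 58.7% |
| Total contribution | 27.0% | 27.6% | 46.4% | 1 |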

[1] Li Bo, Meng Li-Fan, Li Jing, Liu Chun-Mei, Huang Guang-Yan. Research on detecting and locating technology of LSS-UAV. China Measurement & Test, 2016, 42(12): 64-69 doi: 10.11857/j.issn.1674-5124.2016.12.014

[2] Wang Z H, Lin X P, Xiang X Y, Blasch E, Pham K, Chen G S, et al. An airborne low SWaP-C UAS sense and avoid system. In: Proceedings of SPIE 9838, Sensors and Systems for Space Applications IX. Baltimore, USA: SPIE, 2016. 98380C

[3] Busset J, Perrodin F, Wellig P, Ott B, Heutschi K, Rühl T, et al. Detection and tracking of drones using advanced acoustic cameras. In: Proceedings of SPIE 9647, Unmanned/Unattended Sensors and Sensor Networks XI; and Advanced Free-Space Optical Communication Techniques and Applications. Toulouse, France: SPIE, 2015. 96470F

[4] Mezei J, Fiaska V, Molnár A. Drone sound detection. In: Proceedings of the 16th IEEE International Symposium on Computational Intelligence and Informatics (CINTI). Budapest, Hungary: IEEE, 2015. 333-338

[5] Zhang Hao-Kui, Li Ying, Jiang Ye-Nan. Deep learning for hyperspectral imagery classification: The state of the art and prospects. Acta Automatica Sinica, 2018, 44(6): 961-977

[6] He Lin, Pan Quan, Di Wei, Li Yuan-Qing. Supervised detection for hyperspectral imagery based on high-dimensional multiscale autoregression. Acta Automatica Sinica, 2009, 35(5): 509-518

[7] Ye Yu, Wang Zheng, Liang Chao, Han Zhen, Chen Jun, Hu Rui-Min. A survey on multi-source person re-identification. Acta Automatica Sinica, 2020, 46(9): 1869-1884

[8] Zhao J F, Feng H J, Xu Z H, Li Q, Peng H. Real-time automatic small target detection using saliency extraction and morphological theory. Optics & Laser Technology, 2013, 47: 268-277

[9] Nguyen P, Ravindranatha M, Nguyen A, Han R, Vu T. Investigating cost-effective RF-based detection of drones. In: Proceedings of the 2nd Workshop on Micro Aerial Vehicle Networks, Systems, and Applications for Civilian Use. Singapore: Association for Computing Machinery, 2016. 17-22

[10] Drozdowicz J, Wielgo M, Samczynski P, Kulpa K, Krzonkalla J, Mordzonek M, et al. 35 GHz FMCW drone detection system. In: Proceedings of the 17th International Radar Symposium. Krakow, Poland: IEEE, 2016. 1-4

[11] Felzenszwalb P F, Girshick R B, McAllester D, Ramanan D. Object detection with discriminatively trained part-based models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 32(9): 1627-1645 doi: 10.1109/TPAMI.2009.167

[12] Ren S Q, He K M, Girshick R, Sun J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137-1149 doi: 10.1109/TPAMI.2016.2577031

[13] Dollar P, Tu Z W, Perona P, Belongie S. Integral channel features. In: Proceedings of the 2009 British Machine Vision Conference. London, UK: BMVA Press, 2009. 91.1-91.11

[14] Aker C, Kalkan S. Using deep networks for drone detection. In: Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). Lecce, Italy: IEEE, 2017. 1-6

[15] Rozantsev A, Lepetit V, Fua P. Flying objects detection from a single moving camera. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE, 2015. 4128-4136

[16] Coluccia A, Ghenescu M, Piatrik T, De Cubber G, Schumann A, Sommer L, et al. Drone-vs-Bird detection challenge at IEEE AVSS2017. In: Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). Lecce, Italy: IEEE, 2017. 1-6

[17] Schumann A, Sommer L, Klatte J, Schuchert T, Beyerer J. Deep cross-domain flying object classification for robust UAV detection. In: Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). Lecce, Italy: IEEE, 2017. 1-6

[18] Sommer L, Schumann A, Müller T, Schuchert T, Beyerer J. Flying object detection for automatic UAV recognition. In: Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). Lecce, Italy: IEEE, 2017. 1-6

[19] Sapkota K R, Roelofsen S, Rozantsev A, Lepetit V, Gillet D, Fua P, et al. Vision-based unmanned aerial vehicle detection and tracking for sense and avoid systems. In: Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Daejeon, Korea: IEEE, 2016. 1556-1561

[20] Carrio A, Vemprala S, Ripoll A, Saripalli S, Campoy P. Drone detection using depth maps. In: Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Madrid, Spain: IEEE, 2018. 1034-1037

[21] Carrio A, Tordesillas J, Vemprala S, Saripalli S, Campoy P, How J P. Onboard detection and localization of drones using depth maps. IEEE Access, 2020, 8: 30480-30490 doi: 10.1109/ACCESS.2020.2971938

[22] Ganti S R, Kim Y. Implementation of detection and tracking mechanism for small UAS. In: Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS). Arlington, USA: IEEE, 2016. 1254-1260

[23] Farhadi M, Amandi R. Drone detection using combined motion and shape features. In: IEEE International Workshop on Small-Drone Surveillance Detection and Counteraction Techniques. Lecce, Italy: IEEE, 2017. 1-6

[24] Alom M Z, Hasan M, Yakopcic C, Taha T M, Asari V K. Improved inception-residual convolutional neural network for object recognition. Neural Computing and Applications, 2020, 32(1): 279-293 doi: 10.1007/s00521-018-3627-6

[25] Saqib M, Khan S D, Sharma N, Blumenstein M. A study on detecting drones using deep convolutional neural networks. In: Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). Lecce, Italy: IEEE, 2017. 1-5

[26] Liu W, Anguelov D, Erhan D, Szegedy C, Reed S, Fu C Y, et al. SSD: Single shot MultiBox detector. In: Proceedings of the 14th European Conference on Computer Vision - ECCV 2016. Amsterdam, The Netherlands: Springer, 2016. 21-37

[27] Zhou Wei-Xiang, Sun De-Bao, Peng Jia-Xiong. The study of preprocessing algorithm of small moving target detection in infrared image sequences. Journal of National University of Defense Technology, 1999, 21(5): 60-63

[28] Wu Y W, Sui Y, Wang G H. Vision-based real-time aerial object localization and tracking for UAV sensing system. IEEE Access, 2017, 5: 23969-23978 doi: 10.1109/ACCESS.2017.2764419

[29] Lv P Y, Lin C Q, Sun S L. Dim small moving target detection and tracking method based on spatial-temporal joint processing model. Infrared Physics & Technology, 2019, 102: Article No. 102973

[30] Van Droogenbroeck M, Paquot O. Background subtraction: Experiments and improvements for ViBe. In: Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops. Providence, USA: IEEE, 2012. 32-37

[31] Zamalieva D, Yilmaz A. Background subtraction for the moving camera: A geometric approach. Computer Vision and Image Understanding, 2014, 127: 73-85 doi: 10.1016/j.cviu.2014.06.007

[32] Sun Y F, Liu G, Xie L. MaxFlow: A convolutional neural network based optical flow algorithm for large displacement estimation. In: Proceedings of the 17th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES). Wuxi, China: IEEE, 2018. 119-122

[33] Dosovitskiy A, Fischer P, Ilg E, Häusser P, Hazirbas C, Golkov V, et al. FlowNet: Learning optical flow with convolutional networks. In: Proceedings of the 2015 International Conference on Computer Vision (ICCV). Santiago, Chile: IEEE, 2015. 2758-2766

[34] Ilg E, Mayer N, Saikia T, Keuper M, Dosovitskiy A, Brox T. FlowNet 2.0: Evolution of optical flow estimation with deep networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 1647-1655

[35] Chen Xin, Wei Hai-Jun, Wu Min, Cao Wei-Hua. Tracking learning based on Gaussian regression for multi-agent systems in continuous space. Acta Automatica Sinica, 2013, 39(12): 2021-2031

[36] Shi S N, Shui P L. Detection of low-velocity and floating small targets in sea clutter via income-reference particle filters. Signal Processing, 2018, 148: 78-90 doi: 10.1016/j.sigpro.2018.02.005

[37] Kang K, Li H S, Yan J J, Zeng X Y, Yang B, Xiao T, et al. T-CNN: Tubelets with convolutional neural networks for object detection from videos. IEEE Transactions on Circuits and Systems for Video Technology, 2018, 28(10): 2896-2907 doi: 10.1109/TCSVT.2017.2736553

[38] Zhu X Z, Xiong Y W, Dai J F, Yuan L, Wei Y C. Deep feature flow for video recognition. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 4141-4150

[39] Zhu X Z, Wang Y J, Dai J F, Yuan L, Wei Y C. Flow-guided feature aggregation for video object detection. In: Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV). Venice, Italy: IEEE, 2017. 408-417

[40] Bertasius G, Torresani L, Shi J B. Object detection in video with spatiotemporal sampling networks. In: Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer, 2018. 342-357

[41] Luo H, Huang L C, Shen H, Li Y, Huang C, Wang X G. Object detection in video with spatial-temporal context aggregation. arXiv: 1907.04988, 2019

[42] Xiao F Y, Lee Y J. Video object detection with an aligned spatial-temporal memory. In: Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer, 2018. 494-510

[43] Chen X Y, Yu J Z, Wu Z X. Temporally identity-aware SSD with attentional LSTM. IEEE Transactions on Cybernetics, 2020, 50(6): 2674-2686 doi: 10.1109/TCYB.2019.2894261

[44] Shi X J, Chen Z R, Wang H, Yeung D Y, Wong W K, Woo W C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In: Proceedings of the 28th International Conference on Neural Information Processing Systems. Montreal, Canada: MIT Press, 2015. 802-810

[45] Gao Xue-Qin, Liu Gang, Xiao Gang, Bavirisetti Durga Prasad, Shi Kai-Lei. Fusion algorithm of infrared and visible images based on FPDE. Acta Automatica Sinica, 2020, 46(4): 796-804

[46] Bluche T, Messina R. Gated convolutional recurrent neural networks for multilingual handwriting recognition. In: Proceedings of the 14th IAPR International Conference on Document Analysis and Recognition (ICDAR). Kyoto, Japan: IEEE, 2017. 646-651

[47] Deng J, Dong W, Socher R, Li L J, Li K, Li F F. ImageNet: A large-scale hierarchical image database. In: Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition. Miami, USA: IEEE, 2009. 248-255

[48] Son J, Jung I, Park K, Han B. Tracking-by-segmentation with online gradient boosting decision tree. In: Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV). Santiago, Chile: IEEE, 2015. 3056-3064

[49] Wang F, Jiang M Q, Qian C, Yang S, Li C, Zhang H G, et al. Residual attention network for image classification. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 6450-6458

[50] Zhang Xiu-Wei, Zhang Yan-Ning, Guo Zhe, Zhao Jing, Tong Xiao-Min. Advances and perspective on motion detection fusion in visual and thermal framework. Journal of Infrared and Millimeter Waves, 2011, 30(4): 354-360

[51] Fu H, Gong M M, Wang C H, Batmanghelich K, Tao D C. Deep ordinal regression network for monocular depth estimation. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 2002-2011

[52] Dragon R, van Gool L. Ground plane estimation using a hidden Markov model. In: Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition. Columbus, USA: IEEE, 2014. 4026-4033

[53] Rublee E, Rabaud V, Konolige K, Bradski G. ORB: An efficient alternative to SIFT or SURF. In: Proceedings of the 2011 International Conference on Computer Vision. Barcelona, Spain: IEEE, 2011. 2564-2571

[54] Lepetit V, Moreno-Noguer F, Fua P. EPnP: An accurate O(n) solution to the PnP problem. International Journal of Computer Vision, 2009, 81(2): 155-166 doi: 10.1007/s11263-008-0152-6

[55] Geiger A, Lenz P, Urtasun R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In: Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition. Providence, USA: IEEE, 2012. 3354-3361

[56] Bagautdinov T, Fleuret F, Fua P. Probability occupancy maps for occluded depth images. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE, 2015. 2829-2837

[57] Kranstauber B, Cameron A, Weinzerl R, Fountain T, Tilak S, Wikelski M, et al. The Movebank data model for animal tracking. Environmental Modelling & Software, 2011, 26(6): 834-835

[58] Belongie S, Perona P, Van Horn G, Branson S. NABirds dataset: Download it now! [Online], available: https://dl.allaboutbirds.org/nabirds, March 30, 2020

[59] Xiang Y, Mottaghi R, Savarese S. Beyond PASCAL: A benchmark for 3D object detection in the wild. In: Proceedings of the 2014 IEEE Winter Conference on Applications of Computer Vision. Steamboat Springs, USA: IEEE, 2014. 75-82

[60] Silberman N, Hoiem D, Kohli P, Fergus R. Indoor segmentation and support inference from RGBD images. In: Proceedings of the 12th European Conference on Computer Vision. Florence, Italy: Springer, 2012. 746-760

[61] Lucas B D, Kanade T. An iterative image registration technique with an application to stereo vision. In: Proceedings of the 7th International Joint Conference on Artificial Intelligence. Vancouver, Canada: Morgan Kaufmann, 1981. 674-679

[62] Yazdian-Dehkordi M, Rojhani O R, Azimifar Z. Visual target tracking in occlusion condition: A GM-PHD-based approach. In: Proceedings of the 16th CSI International Symposium on Artificial Intelligence and Signal Processing (AISP 2012). Shiraz, Iran: IEEE, 2012. 538-541

[63] Yin Z C, Shi J P. GeoNet: Unsupervised learning of dense depth, optical flow and camera pose. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 1983-1992

[64] Mur-Artal R, Tardós J D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Transactions on Robotics, 2017, 33(5): 1255-1262 doi: 10.1109/TRO.2017.2705103
