Multi-scale Visual Semantic Enhancement for Multimodal Named Entity Recognition Method


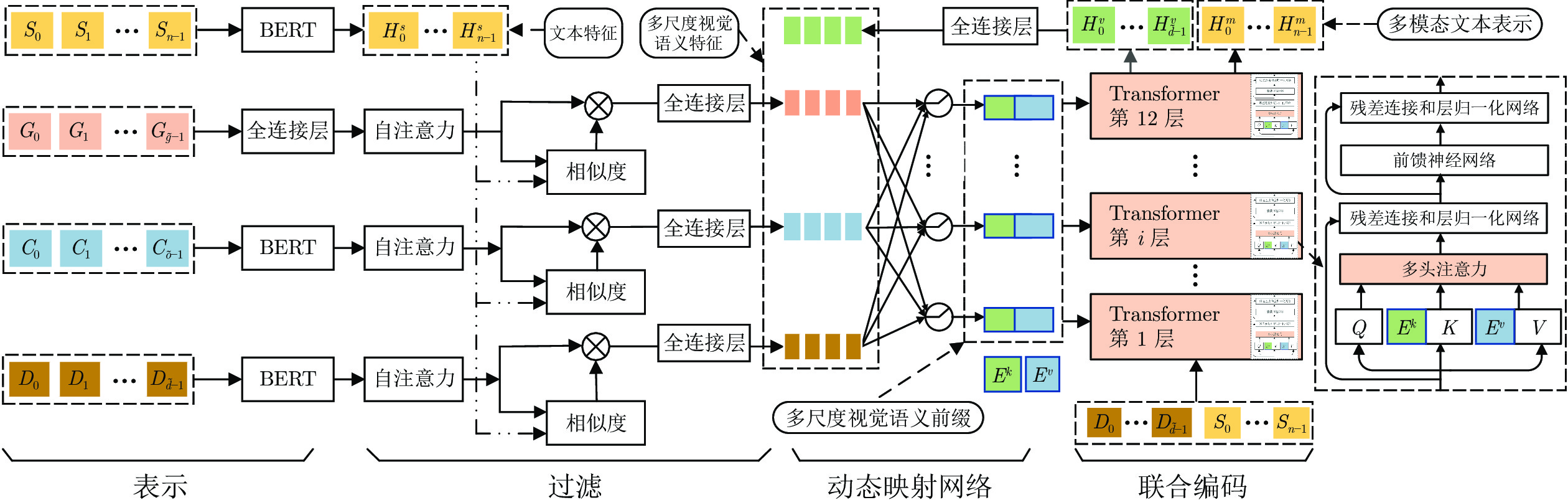

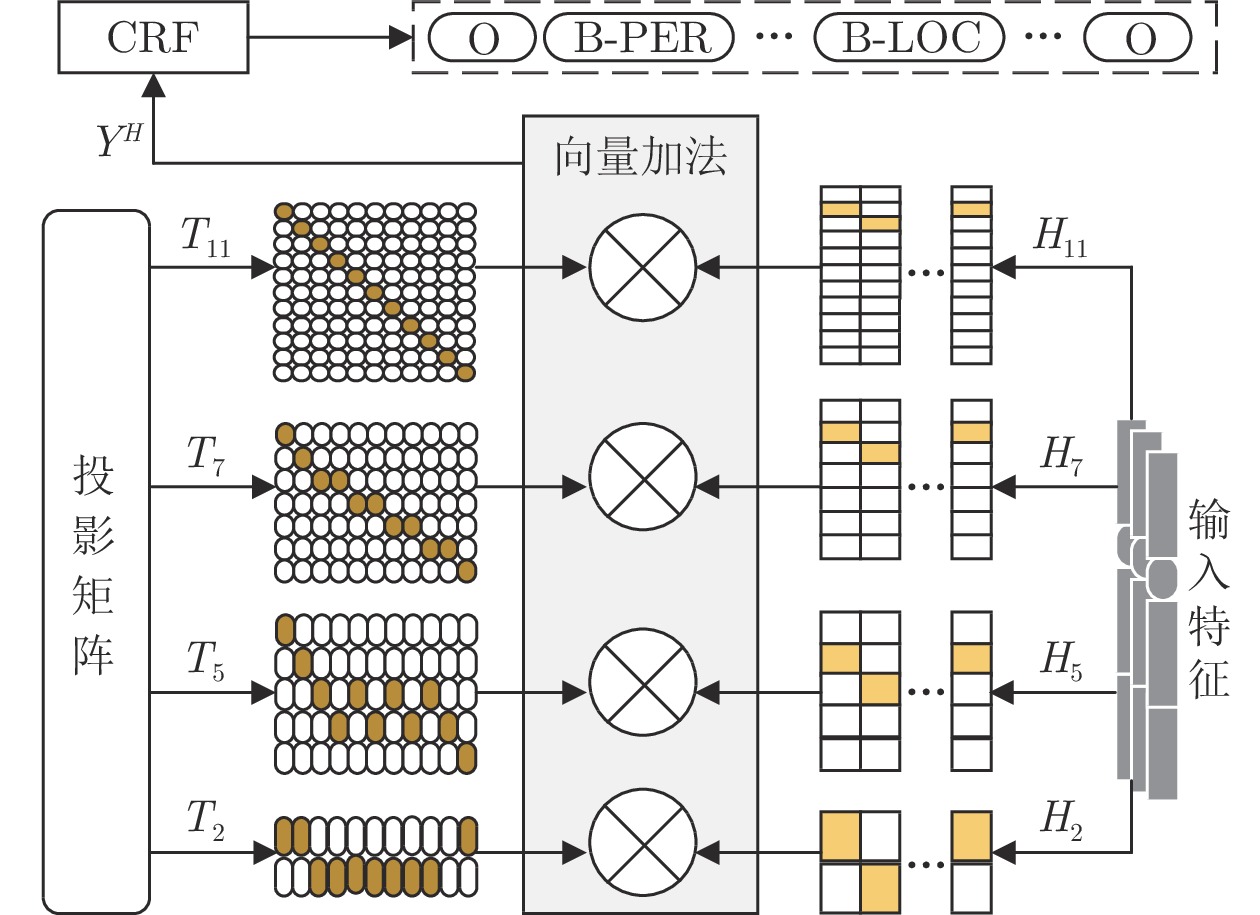

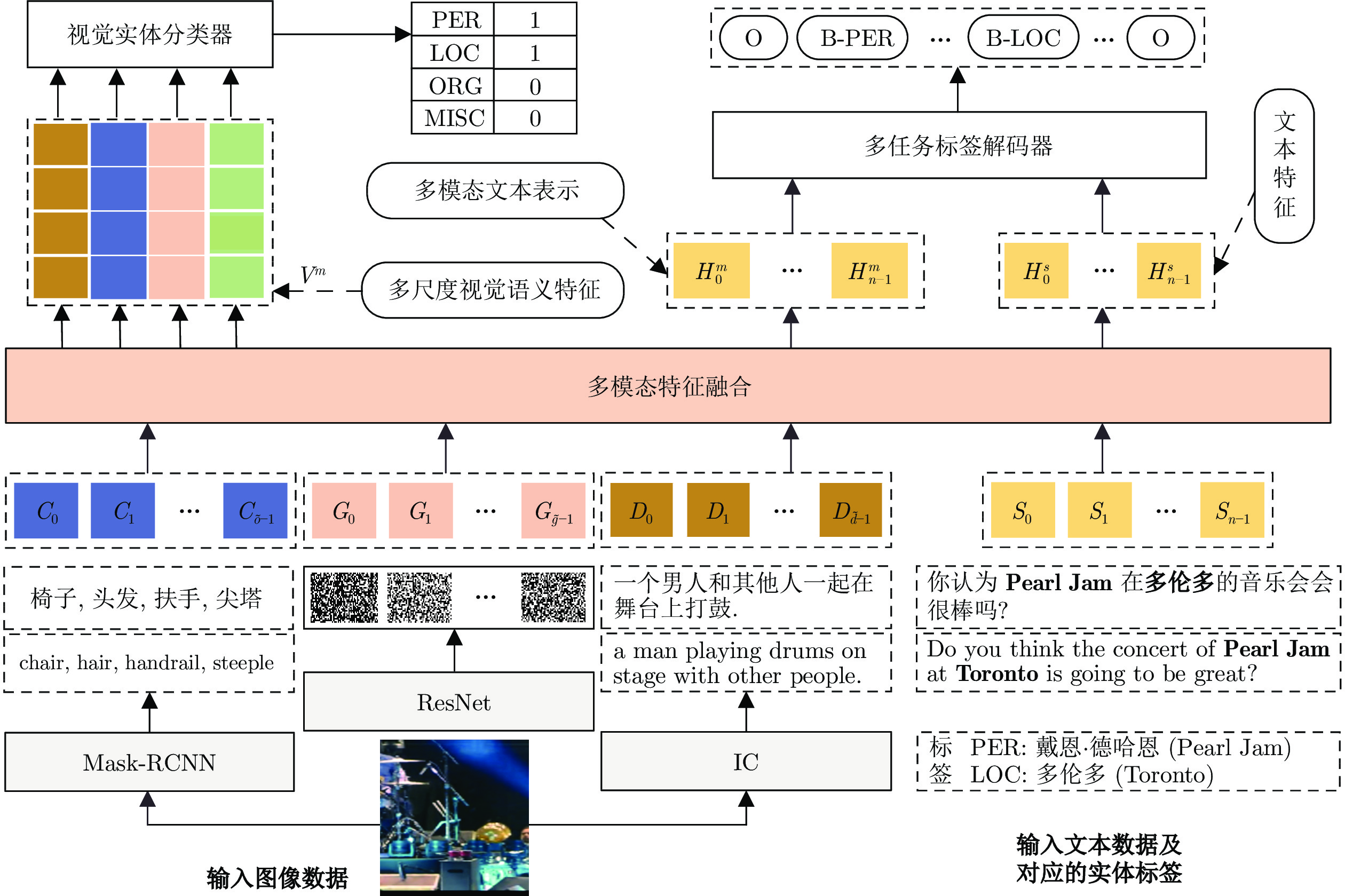

Abstract: To address the loss of image-feature semantics and the weak semantic constraints on multimodal representations in multimodal named entity recognition (MNER), this paper proposes a multi-scale visual semantic enhancement method for MNER (MSVSE). The method first extracts several kinds of visual features to complete the image semantics. A multimodal feature fusion module then mines the semantic interactions between the text features and each kind of visual feature, generates multi-scale visual semantic features, and fuses them into a multimodal text representation enhanced by multi-scale visual semantics. A visual entity classifier decodes the multi-scale visual semantic features, which imposes a semantic consistency constraint on the visual features. A multi-task label decoder mines the fine-grained semantics of both the multimodal text representation and the text features, and joint decoding mitigates semantic bias, further improving recognition accuracy. To verify the method, experiments on the Twitter-2015 and Twitter-2017 datasets compare MSVSE with 10 other methods. Its average F1 improves on both datasets, reaching 75.11% on Twitter-2015 and 87.34% on Twitter-2017 (Table 1).

Keywords:

- Multimodal named entity recognition

- Multi-task learning

- Multimodal fusion

- Transformer
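The abstract does not specify the fusion operator, so the following is only a minimal sketch of one plausible reading: text tokens attend to each set of visual features with scaled dot-product cross-attention, and the per-scale results are averaged and added back to the text. The function names, the averaging step, the residual connection, and all dimensions are assumptions for illustration, not the authors' implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(text, visual):
    """Text tokens (queries) attend to one set of visual features (keys and values)."""
    d = text.shape[-1]
    scores = text @ visual.T / np.sqrt(d)      # (n_tokens, n_visual)
    return softmax(scores, axis=-1) @ visual   # (n_tokens, d)

def fuse_multiscale(text, visual_sets):
    """Average the per-scale attended features and add them to the text (assumed design)."""
    attended = [cross_attend(text, v) for v in visual_sets]
    return text + np.mean(attended, axis=0)

rng = np.random.default_rng(0)
text = rng.normal(size=(6, 16))      # 6 tokens, hidden size 16 (hypothetical sizes)
regions = rng.normal(size=(4, 16))   # stand-in for regional visual features
labels = rng.normal(size=(3, 16))    # stand-in for embedded visual labels
caption = rng.normal(size=(8, 16))   # stand-in for embedded image-caption tokens

fused = fuse_multiscale(text, [regions, labels, caption])
print(fused.shape)  # (6, 16)
```

The output keeps the shape of the text representation, so a downstream label decoder could consume it in place of the text-only features.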

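Table 1 Performance comparison of methods on the two datasets (%)

| Method | Twitter-2015 PER | Twitter-2015 LOC | Twitter-2015 ORG | Twitter-2015 MISC | Twitter-2015 F1 | Twitter-2017 PER | Twitter-2017 LOC | Twitter-2017 ORG | Twitter-2017 MISC | Twitter-2017 F1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MSB | 86.44 | 77.16 | 52.91 | 36.05 | 73.47 | - | - | - | - | 84.32 |
| MAF | 84.67 | 81.18 | 63.35 | 41.82 | 73.42 | 91.51 | 85.80 | 85.10 | 68.79 | 86.25 |
| UMGF | 84.26 | 83.17 | 62.45 | 42.42 | 74.85 | 91.92 | 85.22 | 83.13 | 69.83 | 85.51 |
| M3S | 86.05 | 81.32 | 62.97 | 41.36 | 75.03 | 92.73 | 84.81 | 82.49 | 69.53 | 86.06 |
| UMT | 85.24 | 81.58 | 63.03 | 39.45 | 73.41 | 91.56 | 84.73 | 82.24 | 70.10 | 85.31 |
| UAMNer | 84.95 | 81.28 | 61.41 | 38.34 | 73.10 | 90.49 | 81.52 | 82.09 | 64.32 | 84.90 |
| VAE | 85.82 | 81.56 | 63.20 | 43.67 | 75.07 | 91.96 | 81.89 | 84.13 | 74.07 | 86.37 |
| MNER-QG | 85.68 | 81.42 | 63.62 | 41.53 | 74.94 | 93.17 | 86.02 | 84.64 | 71.83 | 87.25 |
| RGCN | 86.36 | 82.08 | 60.78 | 41.56 | 75.00 | 92.86 | 86.10 | 84.05 | 72.38 | 87.11 |
| HvpNet | 85.74 | 81.78 | 61.92 | 40.81 | 74.33 | 92.28 | 84.81 | 84.37 | 65.20 | 85.80 |
| MSVSE | 86.72 | 81.63 | 64.08 | 38.91 | 75.11 | 93.24 | 85.96 | 85.22 | 70.00 | 87.34 |
| MSVSE - HvpNet | 0.98 | -0.15 | 2.16 | -1.90 | 0.78 | 0.96 | 1.15 | 0.85 | 4.80 | 1.54 |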
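The last row of Table 1 is the per-column difference between MSVSE and HvpNet. As a quick check, the sketch below recomputes it from the two rows as printed; the variable names are illustrative only.

```python
# Scores copied from the MSVSE and HvpNet rows of Table 1 (%).
columns = [
    "T15 PER", "T15 LOC", "T15 ORG", "T15 MISC", "T15 F1",
    "T17 PER", "T17 LOC", "T17 ORG", "T17 MISC", "T17 F1",
]
msvse = [86.72, 81.63, 64.08, 38.91, 75.11, 93.24, 85.96, 85.22, 70.00, 87.34]
hvpnet = [85.74, 81.78, 61.92, 40.81, 74.33, 92.28, 84.81, 84.37, 65.20, 85.80]

# Round to two decimals to match the precision reported in the table.
delta = [round(a - b, 2) for a, b in zip(msvse, hvpnet)]

for name, d in zip(columns, delta):
    print(f"{name}: {d:+.2f}")
```

MSVSE is ahead of HvpNet in every column except LOC and MISC on Twitter-2015.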

Table 2 Structural ablation experiments for the model (%)

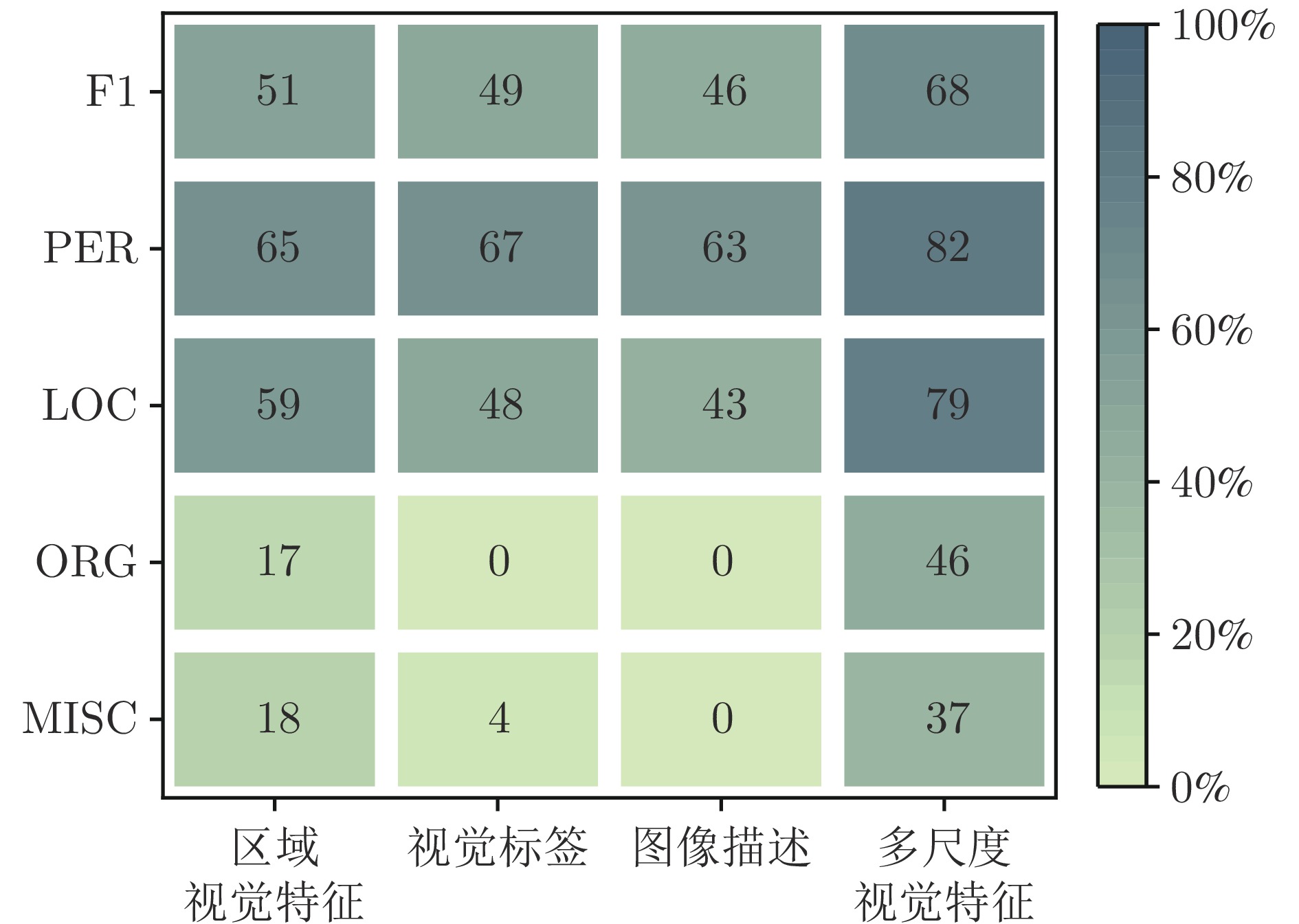

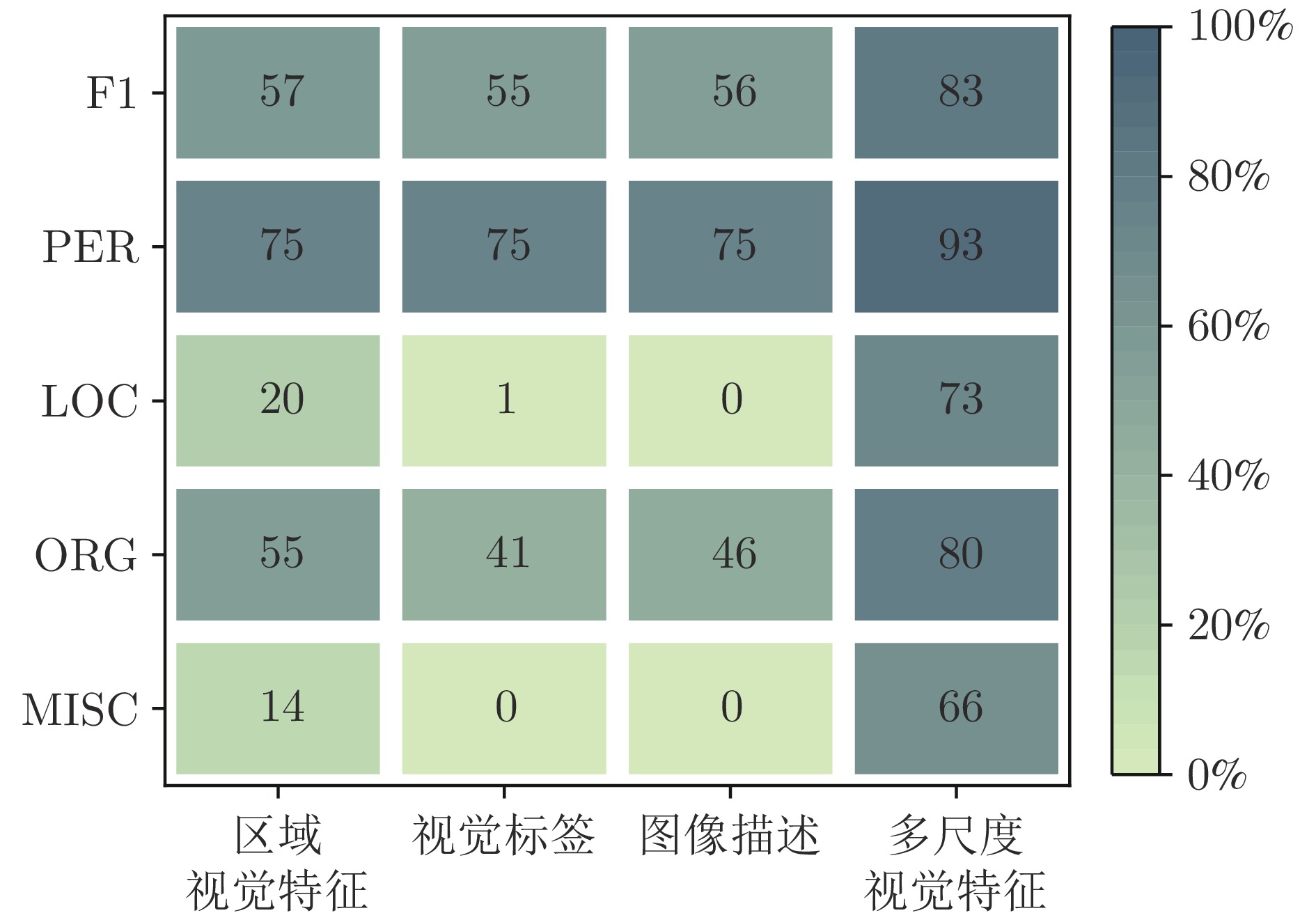

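| Method | Twitter-2015 PER | Twitter-2015 LOC | Twitter-2015 ORG | Twitter-2015 MISC | Twitter-2015 F1 | Twitter-2017 PER | Twitter-2017 LOC | Twitter-2017 ORG | Twitter-2017 MISC | Twitter-2017 F1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MSVSE | 86.72 | 81.63 | 64.08 | 38.91 | 75.11 | 93.24 | 85.96 | 85.22 | 70.00 | 87.34 |
| w/o self-attention | 86.49 | 81.20 | 63.21 | 41.56 | 74.83 | 93.05 | 86.52 | 84.37 | 67.34 | 86.79 |
| w/o similarity | 86.33 | 81.59 | 63.15 | 40.84 | 74.91 | 92.94 | 86.59 | 84.07 | 68.24 | 86.75 |
| w/o self-attention and similarity | 86.80 | 81.38 | 63.32 | 39.62 | 74.67 | 92.97 | 85.87 | 84.41 | 67.96 | 86.67 |
| w/o multi-task label decoder | 86.49 | 81.78 | 62.68 | 37.60 | 74.69 | 92.98 | 84.83 | 85.02 | 71.66 | 87.14 |
| w/o visual entity classifier | 86.52 | 81.64 | 63.06 | 39.89 | 74.79 | 93.37 | 84.83 | 85.82 | 66.24 | 86.92 |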

Table 3 Visual feature ablation experiments in the joint encoder (%)

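| Text | Visual labels | Image captions | Twitter-2015 PER | Twitter-2015 LOC | Twitter-2015 ORG | Twitter-2015 MISC | Twitter-2015 F1 | Twitter-2017 PER | Twitter-2017 LOC | Twitter-2017 ORG | Twitter-2017 MISC | Twitter-2017 F1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| $\checkmark$ | - | $\checkmark$ | 86.72 | 81.63 | 64.08 | 38.91 | 75.11 | 93.24 | 85.96 | 85.22 | 70.00 | 87.34 |
| $\checkmark$ | - | - | 86.76 | 81.68 | 61.21 | 39.46 | 74.73 | 92.95 | 86.20 | 84.60 | 70.82 | 87.11 |
| $\checkmark$ | $\checkmark$ | - | 86.87 | 81.74 | 63.72 | 37.80 | 74.87 | 93.03 | 85.71 | 84.43 | 71.71 | 87.16 |
| $\checkmark$ | $\checkmark$ | $\checkmark$ | 86.51 | 81.85 | 62.20 | 38.36 | 74.72 | 93.73 | 85.96 | 84.62 | 70.97 | 87.38 |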

Table 4 Visual feature ablation experiments in multi-scale visual semantic prefixes (%)

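| Regional visual features | Visual labels | Image captions | Twitter-2015 PER | Twitter-2015 LOC | Twitter-2015 ORG | Twitter-2015 MISC | Twitter-2015 F1 | Twitter-2017 PER | Twitter-2017 LOC | Twitter-2017 ORG | Twitter-2017 MISC | Twitter-2017 F1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| $\checkmark$ | $\checkmark$ | $\checkmark$ | 86.72 | 81.63 | 64.08 | 38.91 | 75.11 | 93.24 | 85.96 | 85.22 | 70.00 | 87.34 |
| $\checkmark$ | - | - | 86.25 | 81.93 | 63.99 | 38.23 | 74.76 | 93.16 | 84.83 | 85.47 | 69.10 | 87.13 |
| $\checkmark$ | $\checkmark$ | - | 86.56 | 81.60 | 64.01 | 38.59 | 74.93 | 93.02 | 85.79 | 85.97 | 68.67 | 87.28 |
| $\checkmark$ | - | $\checkmark$ | 86.87 | 81.79 | 63.36 | 38.68 | 74.98 | 92.94 | 86.52 | 85.14 | 68.94 | 87.14 |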

Table 5 Performance comparison of methods under single scale visual feature (%)

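| Method | Single-scale visual feature | Twitter-2015 F1 | Twitter-2017 F1 |
| --- | --- | --- | --- |
| MAF | Regional visual features | 73.42 | 86.25 |
| MSB | Image labels | 73.47 | 84.32 |
| ITA | Visual labels | 75.18 | 85.67 |
| ITA | 5 visual captions | 75.17 | 85.75 |
| ITA | Optical character recognition | 75.01 | 85.64 |
| MSVSE | Only regional visual features | 74.84 | 86.75 |
| MSVSE | Only visual labels | 74.66 | 87.17 |
| MSVSE | Only visual captions | 74.56 | 87.23 |
| MSVSE | w/o visual prefix | 74.89 | 87.08 |
| MSVSE (ours) | | 75.11 | 87.34 |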

Table 6 Performance comparison of methods under different learning rates (%)

| Dataset / learning rate ($\times 10^{-5}$) | 1 | 2 | 3 | 4 | 5 | 6 |
| --- | --- | --- | --- | --- | --- | --- |
| Twitter-2015 | 73.4 | 75.0 | 75.1 | 74.8 | 74.6 | 74.5 |
| Twitter-2017 | 87.1 | 86.8 | 87.3 | 87.5 | 87.2 | 87.3 |
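Reading Table 6 comes down to picking the best-scoring learning rate per dataset. The short sketch below does that on the values as printed; the dictionary layout and variable names are illustrative, not taken from the paper.

```python
# Scores from Table 6 (%), indexed by learning-rate multiplier k (rate = k * 1e-5).
scores_by_dataset = {
    "Twitter-2015": [73.4, 75.0, 75.1, 74.8, 74.6, 74.5],
    "Twitter-2017": [87.1, 86.8, 87.3, 87.5, 87.2, 87.3],
}

best = {}
for dataset, scores in scores_by_dataset.items():
    # Index of the highest score; ties resolve to the smallest learning rate.
    i = max(range(len(scores)), key=scores.__getitem__)
    best[dataset] = (i + 1, scores[i])

for dataset, (k, score) in best.items():
    print(f"{dataset}: best at {k}e-5 with {score}")
```

On these numbers the two datasets peak at different rates: 3e-5 for Twitter-2015 and 4e-5 for Twitter-2017.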

Table 7 Comparison of parameter number and time efficiency

| Method | Parameters (MB) | Training time (s) | Validation time (s) |
| --- | --- | --- | --- |
| MSB | 122.97 | 45.80 | 3.31 |
| UMGF | 191.32 | 314.42 | 18.73 |
| MAF | 136.09 | 103.39 | 6.37 |
| ITA | 122.97 | 65.40 | 4.69 |
| UMT | 148.10 | 156.73 | 8.59 |
| HvpNet | 143.34 | 70.36 | 9.34 |
| MSVSE (ours) | 119.27 | 75.81 | 7.03 |

Table 8 Performance comparison of MNER method based on pre-trained language model (%)

| Method | Twitter-2015 | Twitter-2017 |
| --- | --- | --- |
| Glove-BiLSTM-CRF | 69.15 | 79.37 |
| BERT-CRF | 71.81 | 83.44 |
| BERT-large-CRF | 73.53 | 86.81 |
| XLMR-CRF | 77.37 | 89.39 |
| Prompting ChatGPT | 79.33 | 91.43 |
| MSVSE | 75.11 | 87.34 |
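The tables report F1 as a percentage. The page does not state which evaluation script was used, so the following is a minimal sketch that assumes the common exact-match, entity-level F1 over BIO tags. The helper names and the toy tag sequences are hypothetical.

```python
def extract_entities(tags):
    """Return the set of (start, end, type) spans in a BIO tag sequence.

    An I- tag that does not continue a span of the same type is ignored.
    """
    spans, start, etype = set(), None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel closes the last span
        continues = tag.startswith("I-") and etype == tag[2:]
        if start is not None and not continues:
            spans.add((start, i, etype))
            start, etype = None, None
        if tag.startswith("B-"):
            start, etype = i, tag[2:]
    return spans

def entity_f1(gold_tags, pred_tags):
    """Exact-match precision, recall, and F1 for a single tagged sequence."""
    gold, pred = extract_entities(gold_tags), extract_entities(pred_tags)
    tp = len(gold & pred)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f1

gold = ["B-PER", "I-PER", "O", "B-LOC", "O", "B-ORG"]
pred = ["B-PER", "I-PER", "O", "B-LOC", "O", "O"]
p, r, f1 = entity_f1(gold, pred)
print(f"P={100 * p:.2f} R={100 * r:.2f} F1={100 * f1:.2f}")  # P=100.00 R=66.67 F1=80.00
```

A corpus-level score would pool the true-positive, predicted, and gold counts over all sentences before computing F1, rather than averaging per-sentence values.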

