|

[1]

|

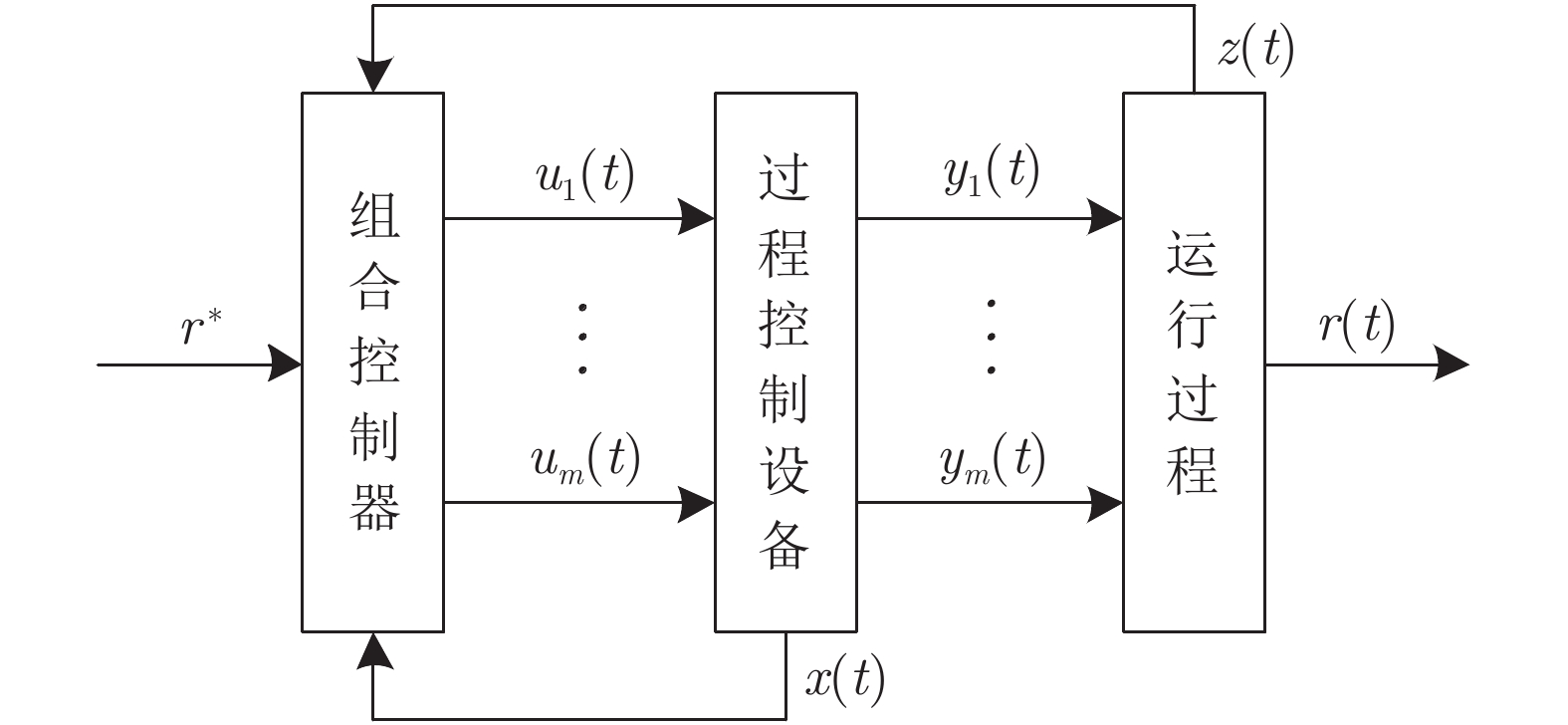

柴天佑. 工业过程控制系统研究现状与发展方向. 中国科学: 信息科学, 2016, 46: 1003-1015 doi: 10.1360/N112016-00062Chai Tian-You. Industrial process control systems: Research status and development direction. Scientia Sinica Informationis, 2016, 46: 1003-1015 doi: 10.1360/N112016-00062

|

|

[2]

|

柴天佑. 工业人工智能发展方向. 自动化学报, 2020, 46(10): 2005-2012Chai Tian-You. Development directions of industrial artificial intelligence. Acta Automatica Sinica, 2020, 46(10): 2005-2012

|

|

[3]

|

Jiang Y, Fan J L, Chai T Y, Lewis F L. Dual-rate operational optimal control for flotation industrial process with unknown operational model. IEEE Transactions on Industrial Electronics, 2019, 66(6): 4587-4599 doi: 10.1109/TIE.2018.2856198

|

|

[4]

|

代伟, 陆文捷, 付俊, 马小平. 工业过程多速率分层运行优化控制. 自动化学报, 2019, 45(10): 1946-1959Dai Wei, Lu Wen-Jie, Fu Jun, Ma Xiao-Ping. Multi-rate layered optimal operational control of industrial processes. Acta Automatica Sinica, 2019, 45(10): 1946-1959

|

|

[5]

|

Xue W Q, Fan J L, Lopez V G, Li J N, Jiang Y, Chai T Y. New methods for optimal operational control of industrial processes using reinforcement learning on two time scales. IEEE Transactions on Industrial Informatics, 2020, 16(5): 3085-3099 doi: 10.1109/TII.2019.2912018

|

|

[6]

|

柴天佑, 刘强, 丁进良, 卢绍文, 宋延杰, 张艺洁. 工业互联网驱动的流程工业智能优化制造新模式研究展望. 中国科学: 技术科学, 2022, 52(1): 14-25 doi: 10.1360/SST-2021-0405Chai Tian-You, Liu Qiang, Ding Jin-Liang, Lu Shao-Wen, Song Yan-Jie, Zhang Yi-Jie. Perspectives on industrial-internet-driven intelligent optimized manufacturing mode for process industries. Scientia Sinica Technologica, 2022, 52(1): 14-25 doi: 10.1360/SST-2021-0405

|

|

[7]

|

Yang Y R, Zou Y Y, Li S Y. Economic model predictive control of enhanced operation performance for industrial hierarchical systems. IEEE Transactions on Industrial Electronics, 2022, 69(6): 6080-6089 doi: 10.1109/TIE.2021.3088334

|

|

[8]

|

富月, 杜琼. 一类工业运行过程多模型自适应控制方法. 自动化学报, 2018, 44(7): 1250-1259Fu Yue, Du Qiong. Multi-model adaptive control method for a class of industrial operational processes. Acta Automatica Sinica, 2018, 44(7): 1250-1259

|

|

[9]

|

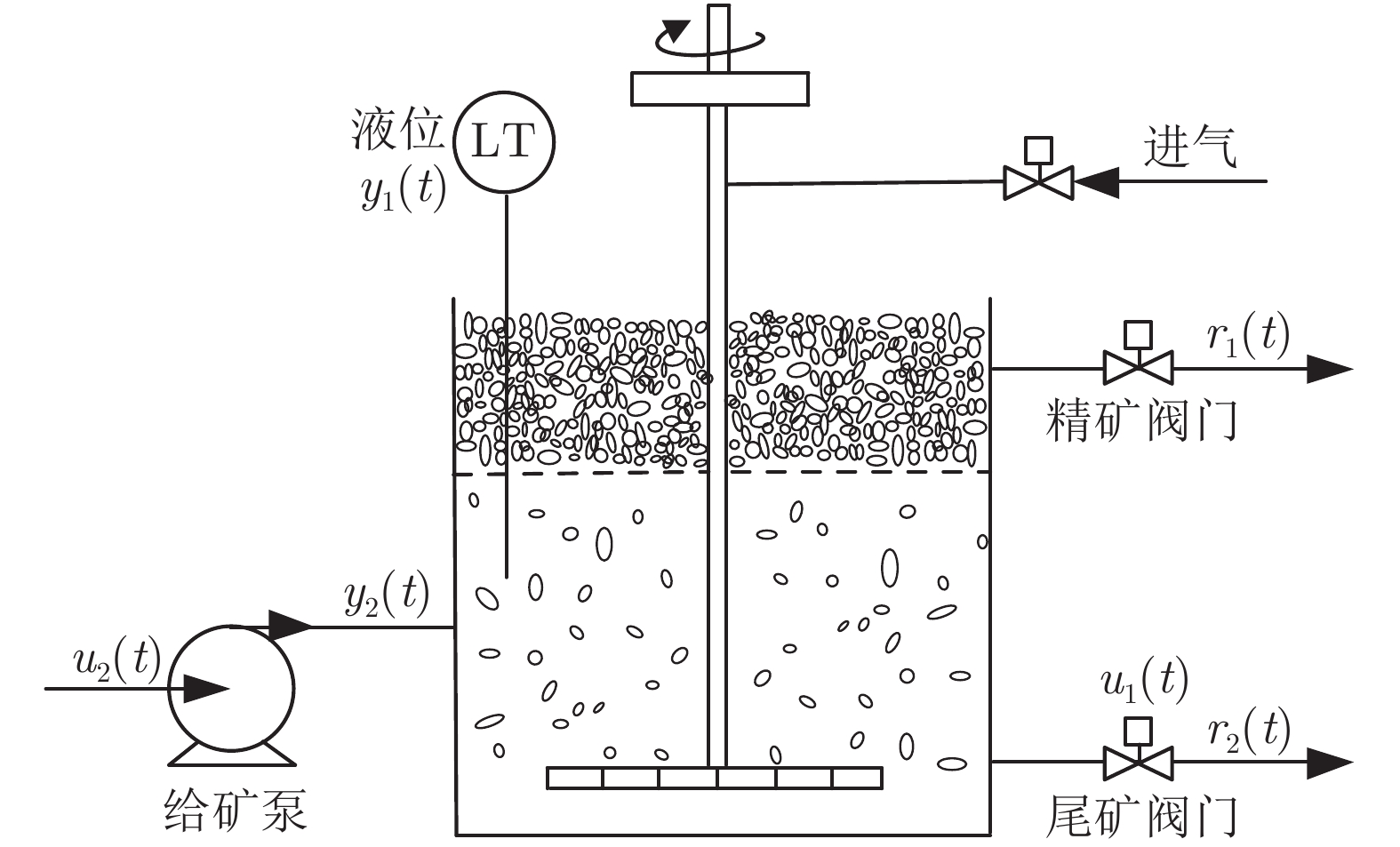

Wang L Y, Jia Y, Chai T Y, Xie W F. Dual-rate adaptive control for mixed separation thickening process using compensation signal based approach. IEEE Transactions on Industrial Electronics, 2018, 65(4): 3621-3632 doi: 10.1109/TIE.2017.2752144

|

|

[10]

|

Lu X L, Kiumarsi B, Chai T Y, Jiang Y, Lewis F L. Operational control of mineral grinding processes using adaptive dynamic programming and reference governor. IEEE Transactions on Industrial Informatics, 2019, 15(4): 2210-2221 doi: 10.1109/TII.2018.2868473

|

|

[11]

|

Sutton R S, Barto A G. Reinforcement Learning: An Introduction. Cambridge: MIT Press, 2nd edition, 2018.

|

|

[12]

|

Bradtke S J, Ydstie B E, Barto A G. Adaptive linear quadratic control using policy iteration. In: Proceedings of the American Control Conference. Baltimore, USA: IEEE, 1994. 3475−3479

|

|

[13]

|

Kiumarsi B, Lewis F L, Modares H, Karimpour A, Naghibi-Sistani M B. Reinforcement $Q$ -learning for optimal tracking control of linear discrete-time systems with unknown dynamics. Automatica, 2014, 50(4): 1167-1175 doi: 10.1016/j.automatica.2014.02.015 -learning for optimal tracking control of linear discrete-time systems with unknown dynamics. Automatica, 2014, 50(4): 1167-1175 doi: 10.1016/j.automatica.2014.02.015

|

|

[14]

|

吴倩, 范家璐, 姜艺, 柴天佑. 无线网络环境下数据驱动混合选别浓密过程双率控制方法. 自动化学报, 2019, 45(6): 1122-1135 doi: 10.16383/j.aas.c180202Wu Qian, Fan Jia-Lu, Jiang Yi, Chai Tian-You. Data-driven dual-rate control for mixed separation thickening process in a wireless network environment. Acta Automatica Sinica, 2019, 45(6): 1122-1135 doi: 10.16383/j.aas.c180202

|

|

[15]

|

Dai W, Zhang L Z, Fu J, Chai T Y, Ma X P. Dual-rate adaptive optimal tracking control for dense medium separation process using neural networks. IEEE Transactions on Neural Networks and Learning Systems, 2021, 32(9): 4202-4216 doi: 10.1109/TNNLS.2020.3017184

|

|

[16]

|

Li J N, Kiumarsi B, Chai T Y, Fan J L. Off-policy reinforcement learning: Optimal operational control for two-time-scale industrial processes. IEEE Transactions on Cybernetics, 2017, 47(12): 4547-4558 doi: 10.1109/TCYB.2017.2761841

|

|

[17]

|

Li J N, Chai T Y, Lewis F L, Fan J L, Ding Z T, Ding J L. Off-policy $Q$ -learning: Set-point design for optimizing dual-rate rougher flotation operational processes. IEEE Transactions on Industrial Electronics, 2018, 65(5): 4092-4102 doi: 10.1109/TIE.2017.2760245 -learning: Set-point design for optimizing dual-rate rougher flotation operational processes. IEEE Transactions on Industrial Electronics, 2018, 65(5): 4092-4102 doi: 10.1109/TIE.2017.2760245

|

|

[18]

|

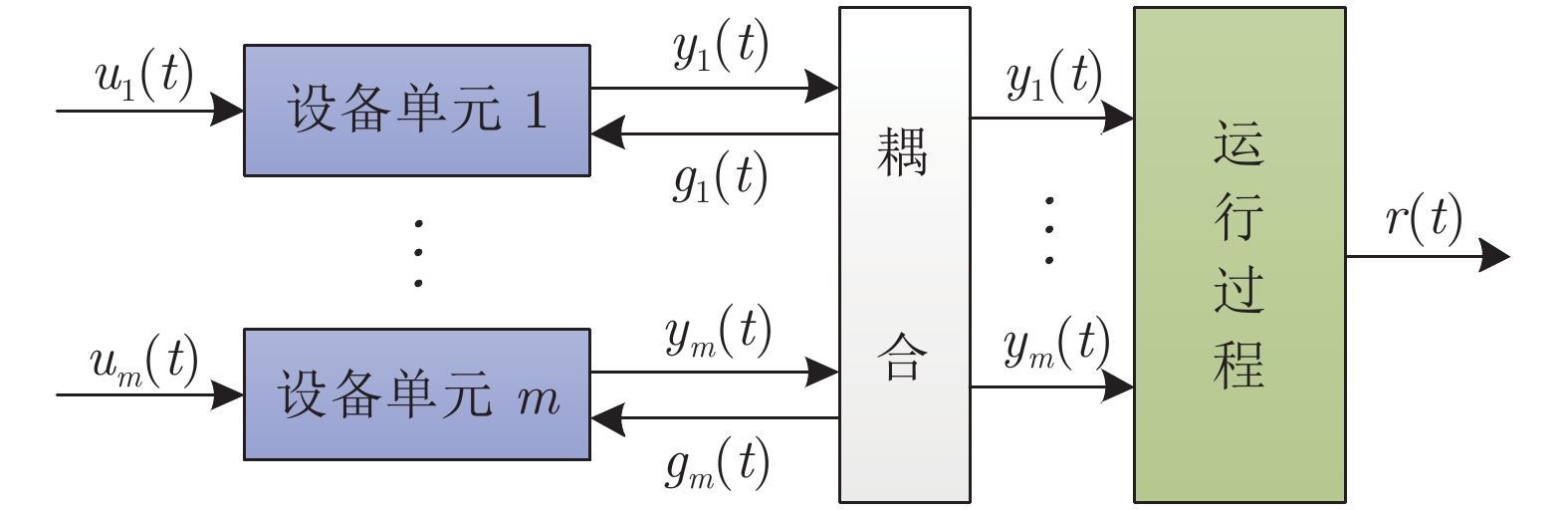

Zhao J G, Yang C Y, Dai W, Gao W N. Reinforcement learning-based composite optimal operational control of industrial systems with multiple unit devices. IEEE Transactions on Industrial Informatics, 2022, 18(2): 1091-1101 doi: 10.1109/TII.2021.3076471

|

|

[19]

|

Kokotovic P V, Khalil H K, O'Reilly J. Singular Perturbation Methods in Control: Analysis and Design. Philadelphia: SIAM, 1999.

|

|

[20]

|

Zhao J G, Yang C Y, Gao W N. Reinforcement learning based optimal control of linear singularly perturbed systems. IEEE Transactions on Circuits and Systems Ⅱ: Express Briefs, 2022, 69(3): 1362-1366 doi: 10.1109/TCSII.2021.3105652

|

|

[21]

|

Litkouhi B, Khalil H K. Multirate and composite control of two-time-scale discrete-time systems. IEEE Transactions on Automatic Control, 1985, 30(7): 645-651 doi: 10.1109/TAC.1985.1104024

|

|

[22]

|

Zhou L N, Zhao J G, Ma L, Yang C Y. Decentralized composite suboptimal control for a class of two-time-scale interconnected networks with unknown slow dynamics. Neurocomputing, 2020, 382: 71-79 doi: 10.1016/j.neucom.2019.11.057

|

|

[23]

|

Li J N, Ding J L, Chai T Y, Lewis F L. Nonzero-sum game reinforcement learning for performance optimization in large-scale industrial processes. IEEE Transactions on Cybernetics, 2020, 50(9): 4132-4145 doi: 10.1109/TCYB.2019.2950262

|

|

[24]

|

袁兆麟, 何润姿, 姚超, 李佳, 班晓娟. 基于强化学习的浓密机底流浓度在线控制算法. 自动化学报, 2021, 47(7): 1558-1571 doi: 10.16383/j.aas.c190348Yuan Zhao-Lin, He Run-Zi, Yao Chao, Li Jia, Ban Xiao-Juan. Online reinforcement learning control algorithm for concentration of thickener underflow. Acta Automatica Sinica, 2021, 47(7): 1558-1571 doi: 10.16383/j.aas.c190348

|

|

[25]

|

Granzotto M, Postoyan R, Busoniu L, Nešić D, Daafouz J. Finite-horizon discounted optimal control: $\rm{S}$ tability and performance. IEEE Transactions on Automatic Control, 2021, 66(2): 550-565 doi: 10.1109/TAC.2020.2985904 tability and performance. IEEE Transactions on Automatic Control, 2021, 66(2): 550-565 doi: 10.1109/TAC.2020.2985904

|

|

[26]

|

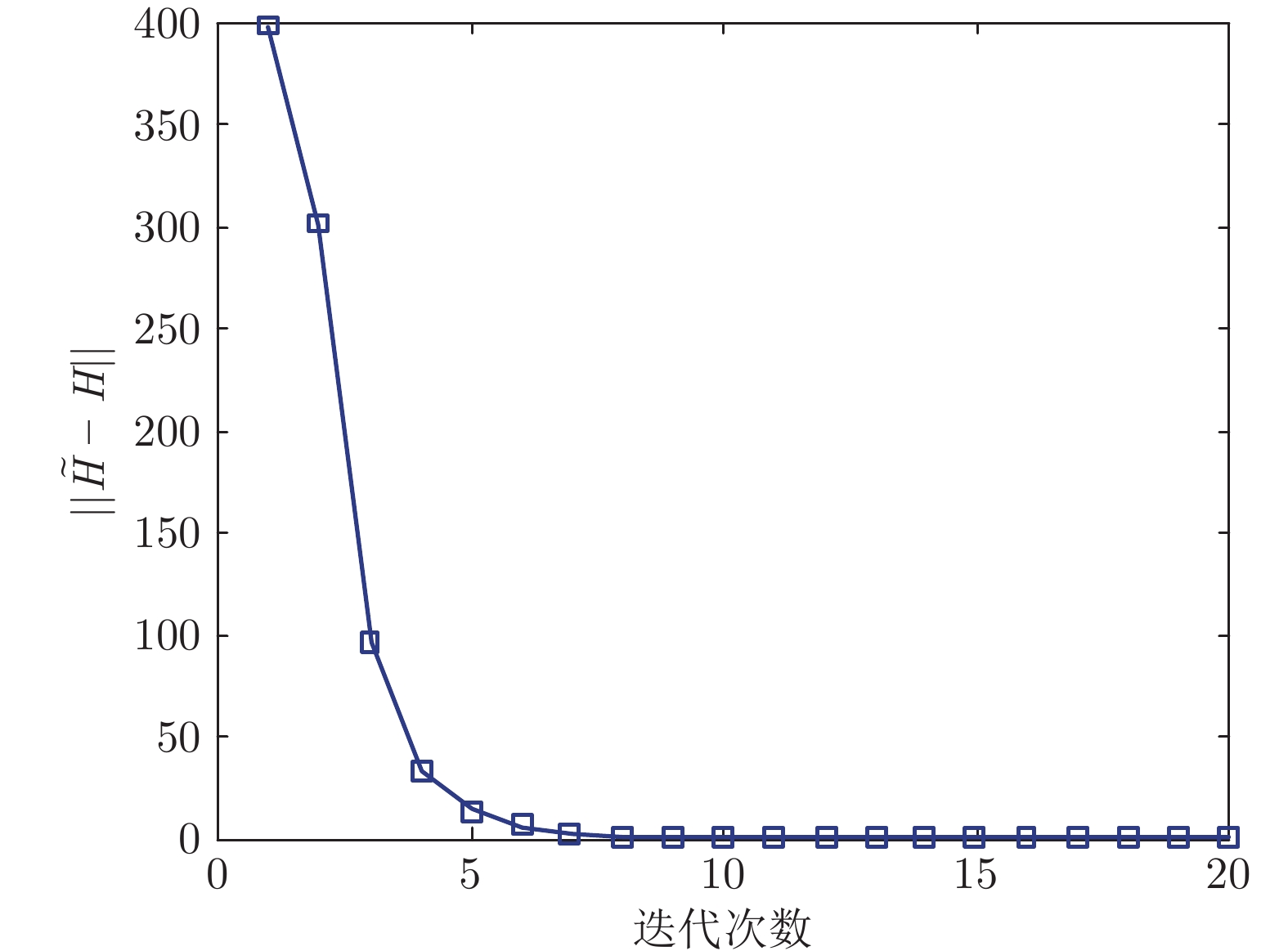

Zhao J G, Yang C Y, Gao W N, Zhou L N. Reinforcement learning and optimal setpoint tracking control of linear systems with external disturbances. IEEE Transactions on Industrial Informatics, 2022, 18(11): 7770−7779

|

|

[27]

|

李彦瑞, 杨春节, 张瀚文, 李俊方. 流程工业数字孪生关键技术探讨. 自动化学报, 2021, 47(3): 501-514 doi: 10.16383/j.aas.c200147Li Yan-Rui, Yang Chun-Jie, Zhang Han-Wen, Li Jun-Fang. Discussion on key technologies of digital twin in process industry. Acta Automatica Sinica, 2021, 47(3): 501-514 doi: 10.16383/j.aas.c200147

|

|

[28]

|

姜艺, 范家璐, 柴天佑. 数据驱动的保证收敛速率最优输出调节. 自动化学报, 2022, 48(4): 980-991 doi: 10.16383/j.aas.c200932Jiang Yi, Fan Jia-Lu, Chai Tian-You. Data-driven optimal output regulation with assured convergence rate. Acta Automatica Sinica, 2022, 48(4): 980-991 doi: 10.16383/j.aas.c200932

|

|

[29]

|

Jiang Y, Gao W N, Na J, Zhang D, Hämäläinen T T, Stojanovic V, et al. Value iteration and adaptive optimal output regulation with assured convergence rate. Control Engineering Practice, 2022, 121: Article No. 105042

|

|

[30]

|

Huang J. Nonlinear Output Regulation: Theory and Applications. Philadelphia: SIAM, 2004.

|

|

[31]

|

Lewis F L, Vrabie D, Syrmos V L. Optimal Control. New York: John Wiley and Sons, 3rd edition, 2012.

|

|

[32]

|

Mukherjee S, Bai H, Chakrabortty A. Reduced-dimensional reinforcement learning control using singular perturbation approximations. Automatica, 2021, 126: Article No. 109451

|

|

[33]

|

Rizvi S A A, Lin Z L. Output feedback $Q$ -learning for discrete-time linear zero-sum games with application to the $H$ -learning for discrete-time linear zero-sum games with application to the $H$ -infinity control. Automatica, 2018, 95: 213-221 doi: 10.1016/j.automatica.2018.05.027 -infinity control. Automatica, 2018, 95: 213-221 doi: 10.1016/j.automatica.2018.05.027

|

|

[34]

|

Liu D R, Xue S, Zhao B, Luo B, Wei Q L. Adaptive dynamic programming for control: A survey and recent advances. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 2021, 51(1): 142-160 doi: 10.1109/TSMC.2020.3042876

|

|

[35]

|

Jiang Y, Kiumarsi B, Fan J L, Chai T Y, Li J N, Lewis F L. Optimal output regulation of linear discrete-time systems with unknown dynamics using reinforcement learning. IEEE Transactions on Cybernetics, 2020, 50(7): 3147-3156 doi: 10.1109/TCYB.2018.2890046

|

|

[36]

|

Liu X M, Yang C Y, Luo B, Dai W. Suboptimal control for nonlinear slow-fast coupled systems using reinforcement learning and Takagi-Sugeno fuzzy methods. International Journal of Adaptive Control and Signal Processing, 2021, 35(6): 1017-1038 doi: 10.1002/acs.3234

|
