Research Reviews of Combinatorial Optimization Methods Based on Deep Reinforcement Learning

-

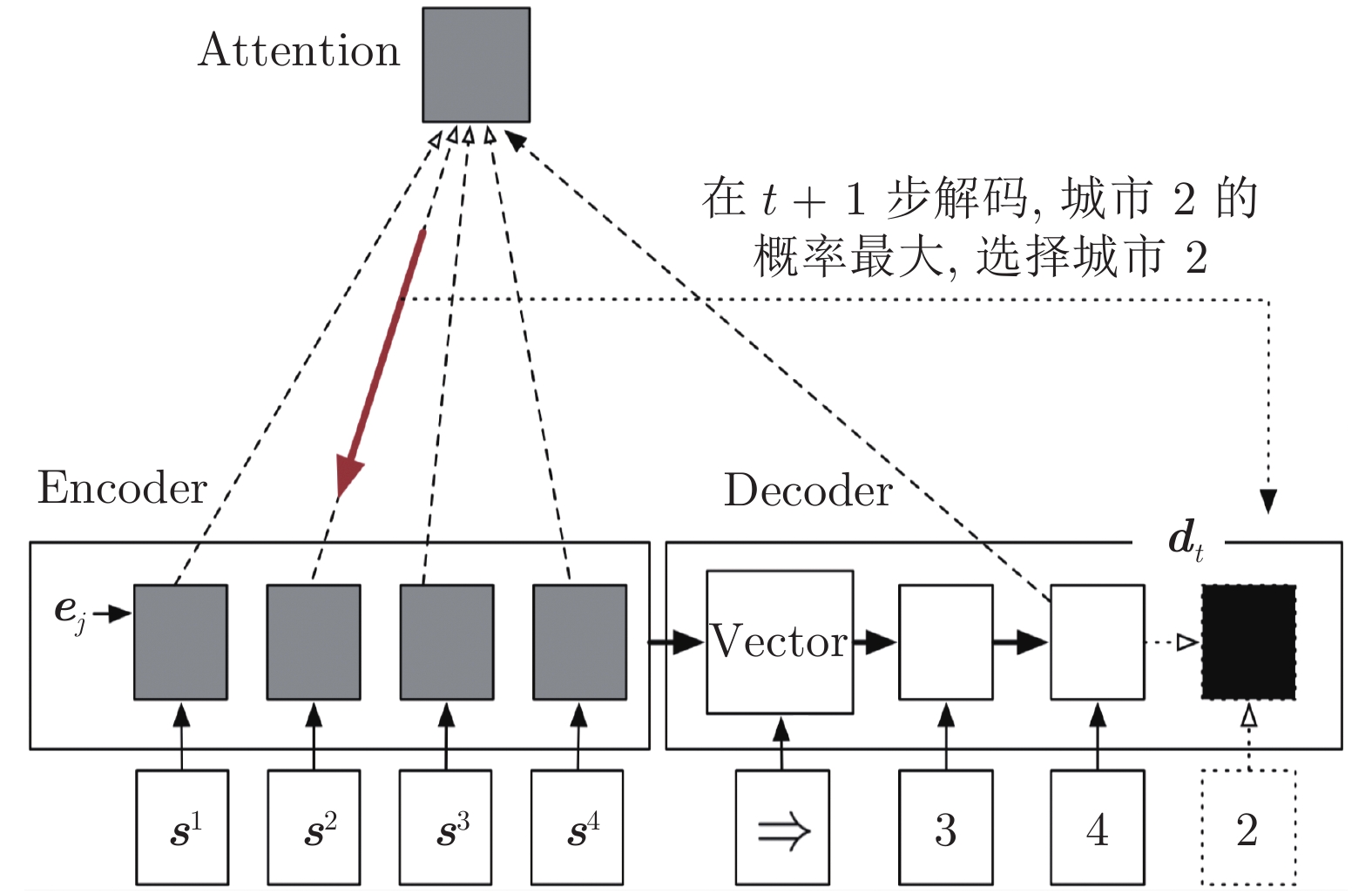

摘要: 组合优化问题广泛存在于国防、交通、工业、生活等各个领域, 几十年来, 传统运筹优化方法是解决组合优化问题的主要手段, 但随着实际应用中问题规模的不断扩大、求解实时性的要求越来越高, 传统运筹优化算法面临着很大的计算压力, 很难实现组合优化问题的在线求解. 近年来随着深度学习技术的迅猛发展, 深度强化学习在围棋、机器人等领域的瞩目成果显示了其强大的学习能力与序贯决策能力. 鉴于此, 近年来涌现出了多个利用深度强化学习方法解决组合优化问题的新方法, 具有求解速度快、模型泛化能力强的优势, 为组合优化问题的求解提供了一种全新的思路. 因此本文总结回顾近些年利用深度强化学习方法解决组合优化问题的相关理论方法与应用研究, 对其基本原理、相关方法、应用研究进行总结和综述, 并指出未来该方向亟待解决的若干问题.Abstract: Combinatorial optimization problems widely exist in various fields such as national defense, transportation, industry and life. For decades, traditional operational research methods are the main means to solve combinatorial optimization problems. However, with the increase of problem size in practical applications and the increasing demands for real-time optimization, traditional methods suffer from great computational burdens, and it is difficult to realize the online solution of combinatorial optimization problems. In recent years, with the rapid development of deep learning technology, the achievements of deep reinforcement learning in AlphaGo, robot and other fields show its strong learning ability and sequential decision-making ability. In view of this, in recent years, a number of new methods using deep reinforcement learning to solve combinatorial optimization problems have emerged, which have the advantages of fast solving speed and strong model generalization ability. It provides a new idea for solving combinatorial optimization problems. Therefore, this paper summarizes and reviews the theoretical methods and application researches of this kind of methods in recent years.

-

表 1 现有算法模型、训练方法、求解问题、以及优化效果比较

Table 1 Comparison of model, training method, solving problems and performance with existing algorithms

方法类别 研究 模型以及训练方法 求解问题及优化效果 基于 Pointer

network 的端

到端方法2015 年 Vinyals 等[30] Ptr-Net + 监督式训练 30 TSP问题: 接近最优解, 优于启发式算法. 40, 50-TSP:

与最优解存在一定差距. 凸包问题、三角剖分问题.2017 年 Bello 等[31] Ptr-Net + REINFORCE &

Critic baseline50-TSP: 优于文献 [30]. 100-TSP: 接近 Concorde 最优解.

200-Knapsack: 达到最优解.2018 年 Nazari 等[32] Ptr-Net + REINFORCE &

Critic baseline100-TSP: 与文献 [31]优化效果相近, 训练时间降低约60 %.

100-CVRP/随机CVRP: 优于多个启发式算法.2018 年 Deudon 等[33] Transformer attention +

REINFORCE & Critic baseline20, 50-TSP: 优于文献 [31]. 100-TSP: 与文献 [31]优化效果相近. 2019 年 Kool 等[34] Transformer attention +

REINFORCE & Rollout baseline100-TSP: 优于文献 [30-33, 37, 40]. 100-CVRP、100-SDVRP、100-OP、100-PCTSP、SPCTSP: 接近 Gurobi 最优解, 优于多种启发式方法. 2020 年 Ma 等[35] Graph pointer network + HRL 20, 50-TSP: 优于文献 [31, 37], 劣于文献 [34]. 250, 500, 1000-TSP:

优于文献 [31, 34]. 20-TSPTW: 优于OR-Tools、蚁群算法.2021 年 Li 等[36] Ptr-Net + REINFORCE & Critic

baseline & 分解策略/参数迁移40, 100, 150, 200, 500-两目标/三目标TSP :

优于 MOEA/D、NSGA-II、MOGLS.基于图神经网络

的端到端方法2017 年 Dai 等[37] structure2vec + DQN 1200-TSP: 接近文献 [31]. 1200-MVC (最小顶点覆盖): 接近最优解.

1200-MAXCUT (最大割集): 接近最优解.2020 年

Manchanda 等[38]GCN + DQN 2k至20k-MCP (最大覆盖问题): 优于文献 [37]. 10k, 20k, 50k-MVC: 优于文献 [37]. 2018 年 Li 等[39] GCN + 监督式训练 +

引导树搜索实际数据集 MVC、MIS (最大独立点集)、MC (极大团)、

Satisfiability (适定性问题): 优于文献 [37].2017 年 Nowak 等[40] GNN + 监督式训练 +

波束搜索20-TSP: 劣于文献 [30]. 2019 年 Joshi 等[41] GCN + 监督式训练 +

波束搜索20, 50, 100-TSP: 略微优于文献 [30-31, 33-34], 优于文献 [37]. 深度强化学习改

进的局部搜索

方法2019 年 Chen 等[47] Ptr-Net + Actor-critic 20-CVRP: 达到最优解. 50, 100-CVRP: 优于文献 [32, 34]、OR-Tools. 作业车间调度: 优于OR-Tools、DeepRM. 2019 年 Yolcu 等[48] GNN + REINFORCE 实际数据集 Satisfiability、MIS、MVC、MC、图着色问题: 更少

搜索步数得到最优解、但单步运行时间长于传统算法.2020 年 Gao 等[49] Graph attention + PPO 100-CVPR: 优于文献 [34]. 100-CVPRTW: 优于多个启发式方法.

400-CVRPTW: 劣于单个启发式方法, 优于其他.2020 年 Lu 等[50] Transformer attention +

REINFORCE20, 50, 100-CVRP: 优于文献 [32, 34, 47], 以及优于 OR Tools、

LKH3. 且运行时间远低于 LKH3.表 2 端到端模型在TSP问题上优化性能比较

Table 2 Comparison of end-to-end model on TSP

方法类别 模型 TSP-20 TSP-50 TSP-100 最优 Concorde 3.84 5.70 7.76 基于指

针网络

(Attention

机制)Vinyals 等[30] 3.88 7.66 — Bello 等[31] 3.89 5.95 8.30 Nazari 等[32] 3.97 6.08 8.44 Deudon 等[33] 3.86 5.81 8.85 Deudon 等[33] + 2OPT 3.85 5.85 8.17 Kool 等[34] (Greedy) 3.85 (0 s) 5.80 (2 s) 8.12 (6 s) Kool 等[34] (Sampling) 3.84 (5 m) 5.73 (24 m) 7.94 (1 h) 基于图神

经网络Dai 等[37] 3.89 5.99 8.31 Nowak 等[40] 3.93 — — Joshi 等[41] (Greedy) 3.86 (6 s) 5.87 (55 s) 8.41 (6 m) Joshi 等[41] (BS) 3.84 (12 m) 5.70 (18 m) 7.87 (40 m) 表 3 多个模型在VRP问题上优化性能比较

Table 3 Comparison of models on VRP

表 4 不同组合优化问题求解算法统计与比较

Table 4 Summary and comparison of algorithms on different combinatorial optimization problems

组合优化问题 文献 模型细节 TSP 问题 [30–36] 基于 Ptr-Net 架构

(Encoder-decoder-attention)[37] GNN + DQN [40–41] GNN + 监督式训练 + 波束搜索 VRP 问题 [32, 34] 基于Ptr-Net 架构 (Encoder-

decoder-attention)[47, 49–50] DRL 训练局部搜索算子. [47]:

Ptr-Net 模型, [49]: Graph

attention 模型, [50]: Transformer

attention 模型.最小顶点覆盖问题(MVC) [37–38, 48] GNN + RL [39] GNN + 监督式训练 + 树搜索 最大割集问题(MaxCut) [37] GNN + DQN [57] Message passing neural network

(MPNN) + DQN[58] * CNN&RNN + PPO 适定性问题(Satisfiability) [39, 48] GNN + 监督式训练/RL 最小支配集问题 (MDS) [48] GNN + RL [59] * Decision diagram + RL 极大团问题 (MC) [39, 48] GNN + 监督式训练/RL 最大独立集问题 (MIS) [39] GNN + 监督式训练 + 树搜索 [60] * GNN + RL + 蒙特卡洛树搜索 背包问题 (Knapsack) [31] Ptr-Net + RL 车间作业调度问题 [47] LSTM + RL 训练局部搜索算子 装箱问题 (BPP) [61] * LSTM + RL [62] * NN + RL + 蒙特卡洛树搜索 图着色问题 [48] GNN + RL [63] * LSTM + RL + 蒙特卡洛树搜索 -

[1] Papadimitriou C H, Steiglitz K. Combinatorial Optimization: Algorithms and Complexity. Mineola, New York: Dover Publications, 1998. [2] Festa P. A brief introduction to exact, approximation, and heuristic algorithms for solving hard combinatorial optimization problems. In: Proceedings of the 16th International Conference on Transparent Optical Networks (ICTON). Graz, Austria: IEEE, 2014. 1−20 [3] Lawler E L, Wood D E. Branch-and-bound methods: A survey. Operations Research, 1966, 14(4): 699-719 doi: 10.1287/opre.14.4.699 [4] Bertsekas D P. Dynamic Programming and Optimal Control. Belmont, MA: Athena Scientific, 1995. [5] Sniedovich M. Dynamic Programming: Foundations and Principles (Second edition). Boca Raton, FL: CRC Press, 2010. [6] Williamson D P, Shmoys D B. The Design of Approximation Algorithms. Cambridge: Cambridge University Press, 2011. [7] Vazirani V V. Approximation Algorithms. Berlin, Heidelberg: Springer, 2003. [8] Hochba D S. Approximation algorithms for NP-hard problems. ACM Sigact News, 1997, 28(2): 40-52 doi: 10.1145/261342.571216 [9] Teoh E J, Tang H J, Tan K C. A columnar competitive model with simulated annealing for solving combinatorial optimization problems. In: Proceedings of the 2006 IEEE International Joint Conference on Neural Network. Vancouver, BC, Canada: IEEE, 2006. 3254−3259 [10] Van Laarhoven P J M, Aarts E H L, Lenstra J K. Job shop scheduling by simulated annealing. Operations Research, 1992, 40(1): 113-125 doi: 10.1287/opre.40.1.113 [11] Wesley Barnes J, Laguna M. Solving the multiple-machine weighted flow time problem using tabu search. IIE Transactions, 1993, 25(2): 121-128 doi: 10.1080/07408179308964284 [12] Basu S. Tabu search implementation on traveling salesman problem and its variations: A literature survey. American Journal of Operations Research, 2012, 2(2): 163-173 doi: 10.4236/ajor.2012.22019 [13] Halim A H, Ismail I. Combinatorial optimization: Comparison of heuristic algorithms in travelling salesman problem. Archives of Computational Methods in Engineering, 2019, 26(2): 367-380 doi: 10.1007/s11831-017-9247-y [14] Rezoug A, Bader-El-Den M, Boughaci D. Guided genetic algorithm for the multidimensional knapsack problem. Memetic Computing, 2018, 10(1): 29-42 doi: 10.1007/s12293-017-0232-7 [15] Lin B, Sun X Y, Salous S. Solving travelling salesman problem with an improved hybrid genetic algorithm. Journal of Computer and Communications, 2016, 4(15): 98-106 doi: 10.4236/jcc.2016.415009 [16] Prado R S, Silva R C P, Guimaraes F G, Neto O M. Using differential evolution for combinatorial optimization: A general approach. In: Proceedings of the 2010 IEEE International Conference on Systems, Man and Cybernetics. Istanbul, Turkey: IEEE, 2010. 11−18 [17] Onwubolu G C, Davendra D. Differential Evolution: A Handbook for Global Permutation-Based Combinatorial Optimization. Vol. 175. Berlin, Heidelberg: Springer, 2009. [18] Deng W, Xu J J, Zhao H M. An improved ant colony optimization algorithm based on hybrid strategies for scheduling problem. IEEE Access, 2019, 7: 20281-20292 doi: 10.1109/ACCESS.2019.2897580 [19] Ramadhani T, Hertono G F, Handari B D. An Ant Colony Optimization algorithm for solving the fixed destination multi-depot multiple traveling salesman problem with non-random parameters. AIP Conference Proceedings, 2017, 1862: 030123 [20] Zhong Y W, Lin J, Wang L J, Zhang H. Discrete comprehensive learning particle swarm optimization algorithm with Metropolis acceptance criterion for traveling salesman problem. Swarm and Evolutionary Computation, 2018, 42: 77-88 doi: 10.1016/j.swevo.2018.02.017 [21] Nouiri M, Bekrar A, Jemai A, Niar S, Ammari A C. An effective and distributed particle swarm optimization algorithm for flexible job-shop scheduling problem. Journal of Intelligent Manufacturing, 2018, 29(3): 603-615 doi: 10.1007/s10845-015-1039-3 [22] Lourenco H R, Martin O C, Stutzle T. Iterated local search: Framework and applications. Handbook of Metaheuristics. Springer, 2019. 129−168 [23] Grasas A, Juan A A, Lourenco H R. SimILS: A simulation-based extension of the iterated local search metaheuristic for stochastic combinatorial optimization. Journal of Simulation, 2016, 10(1): 69-77 doi: 10.1057/jos.2014.25 [24] Zhang G H, Zhang L J, Song X H, Wang Y C, Zhou C. A variable neighborhood search based genetic algorithm for flexible job shop scheduling problem. Cluster Computing, 2019, 22(5): 11561-11572 [25] Hore S, Chatterjee A, Dewanji A. Improving variable neighborhood search to solve the traveling salesman problem. Applied Soft Computing, 2018, 68: 83-91 doi: 10.1016/j.asoc.2018.03.048 [26] Silver D, Schrittwieser J, Simonyan K, Antonoglou I, Huang A, Guez A, et al. Mastering the game of go without human knowledge. Nature, 2017, 550(7676): 354-359 doi: 10.1038/nature24270 [27] Mnih V, Kavukcuoglu K, Silver D, Rusu A A, Veness J, Bellemare M G, et al. Human-level control through deep reinforcement learning. Nature, 2015, 518(7540): 529-533 doi: 10.1038/nature14236 [28] Hopfield J J, Tank D W. ``Neural'' computation of decisions in optimization problems. Biological Cybernetics, 1985, 52(3): 141-152 [29] Smith K A. Neural networks for combinatorial optimization: A review of more than a decade of research. INFORMS Journal on Computing, 1999, 11(1): 15-34 doi: 10.1287/ijoc.11.1.15 [30] Vinyals O, Fortunato M, Jaitly N. Pointer networks. In: Proceedings of the 28th International Conference on Neural Information Processing Systems. Montreal, Canada: MIT Press, 2015. 2692−2700 [31] Bello I, Pham H, Le Q V, Norouzi M, Bengio S. Neural combinatorial optimization with reinforcement learning. In: Proceedings of the 5th International Conference on Learning Representations (ICLR 2017). Toulon, France, 2017. [32] Nazari M, Oroojlooy A, TakacM, Snyder L V. Reinforcement learning for solving the vehicle routing problem. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montreal, Canada: Curran Associates Inc., 2018. 9861−9871 [33] Deudon M, Cournut P, Lacoste A, Adulyasak Y, Rousseau L M. Learning heuristics for the TSP by policy gradient. In: Proceedings of the 15th International Conference on the Integration of Constraint Programming, Artificial Intelligence, and Operations Research. Delft, The Netherlands: Springer, 2018. 170−181 [34] Kool W, Van Hoof H, Welling M. Attention, learn to solve routing problems! In: Proceedings of the 7th International Conference on Learning Representations (ICLR2019). New Orleans, LA, USA, 2019. [35] Ma Q, Ge S W, He D Y, Thaker D, Drori I. Combinatorial optimization by graph pointer networks and hierarchical reinforcement learning. In: Proceedings of the 1st International Workshop on Deep Learning on Graphs: Methodologies and Applications. New York, NY, USA: AAAI, 2020. [36] Li K W, Zhang T, Wang R. Deep reinforcement learning for multiobjective optimization. IEEE Transactions on Cybernetics, 2021, 51(6): 3103-3114 doi: 10.1109/TCYB.2020.2977661 [37] Dai H J, Khalil E B, Zhang Y Y, Dilkina B, Song L. Learning combinatorial optimization algorithms over graphs. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, California, USA: Curran Associates Inc., 2017. 6351−6361 [38] Manchanda S, Mittal A, Dhawan A, Medya S, Ranu S, Singh A. Learning heuristics over large graphs via deep reinforcement learning. arXiv preprint arXiv: 1903.03332, 2020 [39] Li Z W, Chen Q F, Koltun V. Combinatorial optimization with graph convolutional networks and guided tree search. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montreal, Canada: Curran Associates Inc., 2018. 537−546 [40] Nowak A, Villar S, Bandeira A S, Bruna J. A note on learning algorithms for quadratic assignment with graph neural networks. In: Proceeding of the 34th International Conference on Machine Learning (ICML). Sydney, Australia, 2017. [41] Joshi C K, Laurent T, Bresson X. An efficient graph convolutional network technique for the travelling salesman problem. arXiv preprint arXiv: 1906.01227, 2019 [42] Helsgaun K. An Extension of the Lin-Kernighan-Helsgaun TSP Solver for Constrained Traveling Salesman and Vehicle Routing Problems, Technical Report, Roskilde University, Denmark, 2017. [43] Perron L, Furnon V. Google's OR-Tools [Online], available: https://developersgooglecom/optimization, 2019 [44] OPTIMIZATION G. INC. Gurobi optimizer reference manual, 2015 [Online], available: http://wwwgurobicom, 2014 [45] Applegate D, Bixby R, Chvatal V, Cook W. Concorde TSP solver. 2006 [46] Bengio Y, Lodi A, Prouvost A. Machine learning for combinatorial optimization: A methodological tour d'Horizon. arXiv preprint arXiv: 1811.06128, 2020 [47] Chen X Y, Tian Y D. Learning to perform local rewriting for combinatorial optimization. In: Proceedings of the 33rd Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates, Inc., 2019. 6278−6289 [48] Yolcu E, Poczos B. Learning local search heuristics for boolean satisfiability. In: Proceedings of the 33rd Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates, Inc., 2019. 7992−8003 [49] Gao L, Chen M X, Chen Q C, Luo G Z, Zhu N Y, Liu Z X. Learn to design the heuristics for vehicle routing problem. arXiv preprint arXiv: 2002.08539, 2020 [50] Lu H, Zhang X W, Yang S. A learning-based iterative method for solving vehicle routing problems. In: Proceedings of the 8th International Conference on Learning Representations. Addis Ababa, Ethiopia, 2020. [51] Scarselli F, Gori M, Tsoi A C, Hagenbuchner M, Monfardini G. The graph neural network model. IEEE Transactions on Neural Networks, 2009, 20(1): 61-80 doi: 10.1109/TNN.2008.2005605 [52] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, et al. Attention is all you need. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, California, USA: Curran Associates Inc., 2017. 6000−6010 [53] Hsu C H, Chang S H, Liang J H, Chou H P, Liu C H, Chang S C, et al. MONAS: Multi-objective neural architecture search using reinforcement learning. arXiv preprint arXiv: 1806.10332, 2018 [54] Mossalam H, Assael Y M, Roijers D M, Whiteson S. Multi-objective deep reinforcement learning. arXiv preprint arXiv: 1610.02707, 2016 [55] Joshi C K, Laurent T, Bresson X. On Learning paradigms for the travelling salesman problem. In: Proceedings of the 33rd Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates, Inc., 2019. [56] Joshi C K, Cappart Q, Rousseau L M, Laurent T, Bresson X. Learning TSP requires rethinking generalization. arXiv preprint arXiv: 2006.07054, 2020 [57] Barrett T, Clements W, Foerster J, Lvovsky A. Exploratory combinatorial optimization with reinforcement learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(4): 3243-3250 doi: 10.1609/aaai.v34i04.5723 [58] Beloborodov D, Ulanov A E, Foerster J N, Whiteson S, Lvovsky A I. Reinforcement learning enhanced quantum-inspired algorithm for combinatorial optimization. arXiv preprint arXiv: 2002.04676, 2020 [59] Cappart Q, Goutierre E, Bergman D, Rousseau L M. Improving optimization bounds using machine learning: Decision diagrams meet deep reinforcement learning. Proceedings of the AAAI Conference on Artificial Intelligence, 2019, 331(1): 1443-1451 [60] Abe K, Xu Z J, Sato I, Sugiyama M. Solving NP-hard problems on graphs by reinforcement learning without domain knowledge. arXiv preprint arXiv: 1905.11623, 2020 [61] Hu H Y, Zhang X D, Yan X W, Wang L F, Xu Y H. Solving a new 3D bin packing problem with deep reinforcement learning method. arXiv preprint arXiv: 1708.05930, 2017 [62] Laterre A, Fu Y G, Jabri M K, Cohen A S, Kas D, Hajjar K, et al. Ranked reward: Enabling self-play reinforcement learning for combinatorial optimization. arXiv preprint arXiv: 1807.01672, 2018 [63] Huang J Y, Patwary M, Diamos G. Coloring big graphs with alphagozero. arXiv preprint arXiv: 1902.10162, 2019 [64] Li J L, Shi W S, Zhang N, Shen X M. Delay-aware VNF scheduling: A reinforcement learning approach with variable action set. IEEE Transactions on Cognitive Communications and Networking, 2021, 7(1): 304-318 doi: 10.1109/TCCN.2020.2988908 [65] Mijumbi R, Hasija S, Davy S, Davy A, Jennings B, Boutaba R. Topology-aware prediction of virtual network function resource requirements. IEEE Transactions on Network and Service Management, 2017, 14(1): 106-120 doi: 10.1109/TNSM.2017.2666781 [66] Mijumbi R, Hasija S, Davy S, Davy A, Jennings B, Boutaba R. A connectionist approach to dynamic resource management for virtualised network functions. In: Proceedings of the 12th Conference on Network and Service Management (CNSM). Montreal, Quebec, Canada: IEEE, 2016. 1−9 [67] Quang P T A, Hadjadj-Aoul Y, Outtagarts A. A deep reinforcement learning approach for VNF forwarding graph embedding. IEEE Transactions on Network and Service Management, 2019, 16(4): 1318-1331 doi: 10.1109/TNSM.2019.2947905 [68] Solozabal R, Ceberio J, Sanchoyerto A, Zabala L, Blanco B, Liberal F. Virtual network function placement optimization with deep reinforcement learning. IEEE Journal on Selected Areas in Communications, 2020, 38(2): 292-303 doi: 10.1109/JSAC.2019.2959183 [69] Liu Q, Han T, Moges E. EdgeSlice: Slicing wireless edge computing network with decentralized deep reinforcement learning. arXiv preprint arXiv: 2003.12911, 2020 [70] Van Huynh N, Hoang D T, Nguyen D N, Dutkiewicz E. Optimal and fast real-time resource slicing with deep dueling neural networks. IEEE Journal on Selected Areas in Communications, 2019, 37(6): 1455-1470 doi: 10.1109/JSAC.2019.2904371 [71] Mseddi A, Jaafar W, Elbiaze H, Ajib W. Intelligent resource allocation in dynamic fog computing environments. In: Proceedings of the 8th International Conference on Cloud Networking (CloudNet). Coimbra, Portugal: IEEE, 2019. 1−7 [72] Almasan P, Suarez-Varela J, Badia-Sampera A, Rusek K, Barlet-Ros P, Cabellos-Aparicio A. Deep reinforcement learning meets graph neural networks: Exploring a routing optimization use case. arXiv preprint arXiv: 1910.07421, 2020 [73] Meng X Y, Inaltekin H, Krongold B. Deep reinforcement learning-based topology optimization for self-organized wireless sensor networks. In: Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM). Waikoloa, HI, USA: IEEE, 2019. 1−6 [74] Lu J Y, Feng L Y, Yang J, Hassan M M, Alelaiwi A, Humar I. Artificial agent: The fusion of artificial intelligence and a mobile agent for energy-efficient traffic control in wireless sensor networks. Future Generation Computer Systems, 2019, 95: 45-51 doi: 10.1016/j.future.2018.12.024 [75] Zhang S, Shen W L, Zhang M, Cao X H, Cheng Y. Experience-driven wireless D2D network link scheduling: A deep learning approach. In: Proceedings of the 2019 IEEE International Conference on Communications (ICC). Shanghai, China: IEEE, 2019. 1−6 [76] Huang L, Bi S Z, Zhang Y J A. Deep reinforcement learning for online computation offloading in wireless powered mobile-edge computing networks. IEEE Transactions on Mobile Computing, 2020, 19(11): 2581-2593 doi: 10.1109/TMC.2019.2928811 [77] Wang J, Hu J, Min G Y, Zhan W H, Ni Q, Georgalas N. Computation offloading in multi-access edge computing using a deep sequential model based on reinforcement learning. IEEE Communications Magazine, 2019, 57(5): 64-69 doi: 10.1109/MCOM.2019.1800971 [78] Jiang Q M, Zhang Y, Yan J Y. Neural combinatorial optimization for energy-efficient offloading in mobile edge computing. IEEE Access, 2020, 8: 35077-35089 doi: 10.1109/ACCESS.2020.2974484 [79] Yu J J Q, Yu W, Gu J T. Online vehicle routing with neural combinatorial optimization and deep reinforcement learning. IEEE Transactions on Intelligent Transportation Systems, 2019, 20(10): 3806-3817 doi: 10.1109/TITS.2019.2909109 [80] Holler J, Vuorio R, Qin Z W, Tang X C, Jiao Y, Jin T C, et al. Deep reinforcement learning for multi-driver vehicle dispatching and repositioning problem. In: Proceedings of the 2019 IEEE International Conference on Data Mining (ICDM). Beijing, China: IEEE, 2019. 1090−1095 [81] Liang X Y, Du X S, Wang G L, Han Z. A deep reinforcement learning network for traffic light cycle control. IEEE Transactions on Vehicular Technology, 2019, 68(2): 1243-1253 doi: 10.1109/TVT.2018.2890726 [82] Chen X Y, Tian Y D. Learning to progressively plan. arXiv preprint arXiv: 1810.00337, 2018 [83] Zheng P, Zuo L L, Wang J L, Zhang J. Pointer networks for solving the permutation flow shop scheduling problem. In: Proceedings of the 48th International Conference on Computers & Industrial Engineering (CIE48). Auckland, New Zealand, 2018. 2−5 [84] Pan R Y, Dong X Y, Han S. Solving permutation flowshop problem with deep reinforcement learning. In: Proceedings of the 2020 Prognostics and Health Management Conference (PHM-Besancon). Besancon, France: IEEE, 2020. 349−353 [85] Mirhoseini A, Pham H, Le Q V, Steiner B, Larsen R, Zhou Y F, et al. Device placement optimization with reinforcement learning. arXiv preprint arXiv: 1706.04972, 2017 [86] Mirhoseini A, Goldie A, Pham H, Steiner B, Le Q V, Dean J. A hierarchical model for device placement. In: Proceedings of the 6th International Conference on Learning Representations. Vancouver, BC, Canada, 2018. [87] Francois-Lavet V, Taralla D, Ernst D, Fonteneau R. Deep reinforcement learning solutions for energy microgrids management. In: Proceedings of the 13th European Workshop on Reinforcement Learning (EWRL 2016). Barcelona, Spain, 2016. [88] 张自东, 邱才明, 张东霞, 徐舒玮, 贺兴. 基于深度强化学习的微电网复合储能协调控制方法. 电网技术, 2019, 43(6): 1914-1921Zhang Zi-Dong, Qiu Cai-Ming, Zhang Dong-Xia, Xu Shu-Wei, He Xing. A coordinated control method for hybrid energy storage system in microgrid based on deep reinforcement learning. Power System Technology, 2019, 43(6): 1914-1921 [89] Valladares W, Galindo M, Gutierrez J, Wu W C, Liao K K, Liao J C, et al. Energy optimization associated with thermal comfort and indoor air control via a deep reinforcement learning algorithm. Building and Environment, 2019, 155: 105-117 doi: 10.1016/j.buildenv.2019.03.038 [90] Mocanu E, Mocanu D C, Nguyen P H, Liotta A, Webber M E, Gibescu M, et al. On-line building energy optimization using deep reinforcement learning. IEEE Transactions on Smart Grid, 2019, 10(4): 3698-3708 doi: 10.1109/TSG.2018.2834219 -

下载:

下载: