
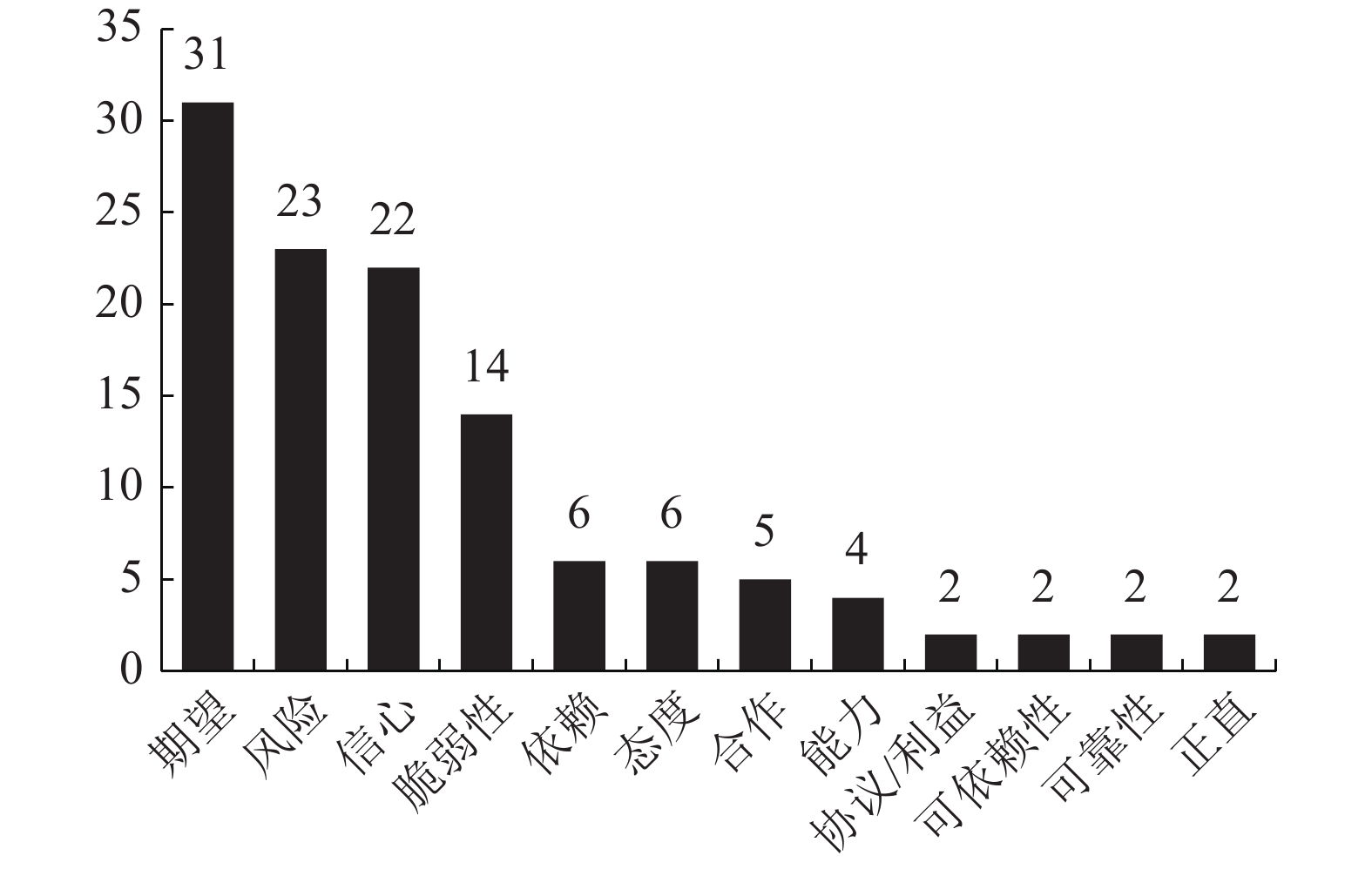

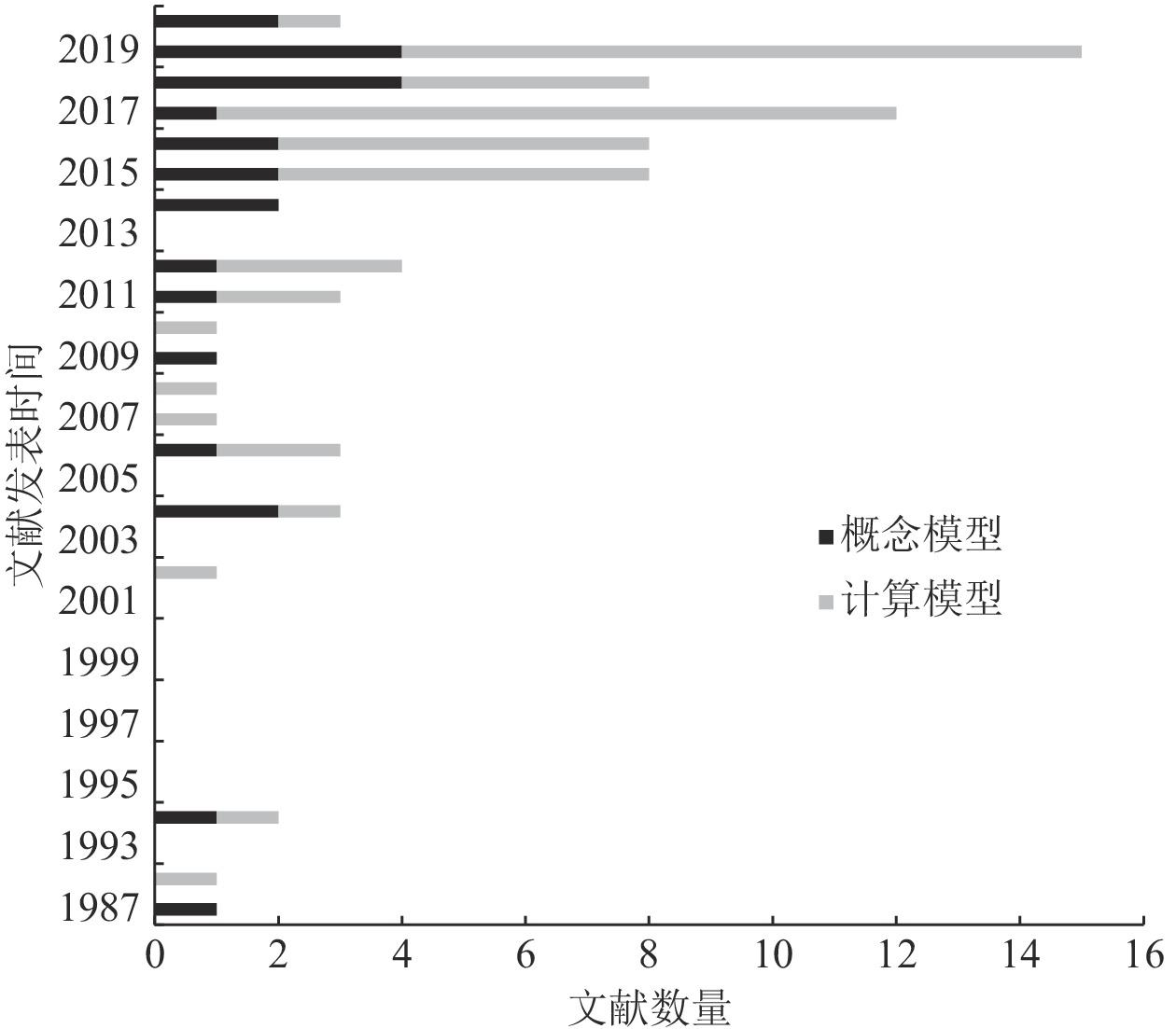

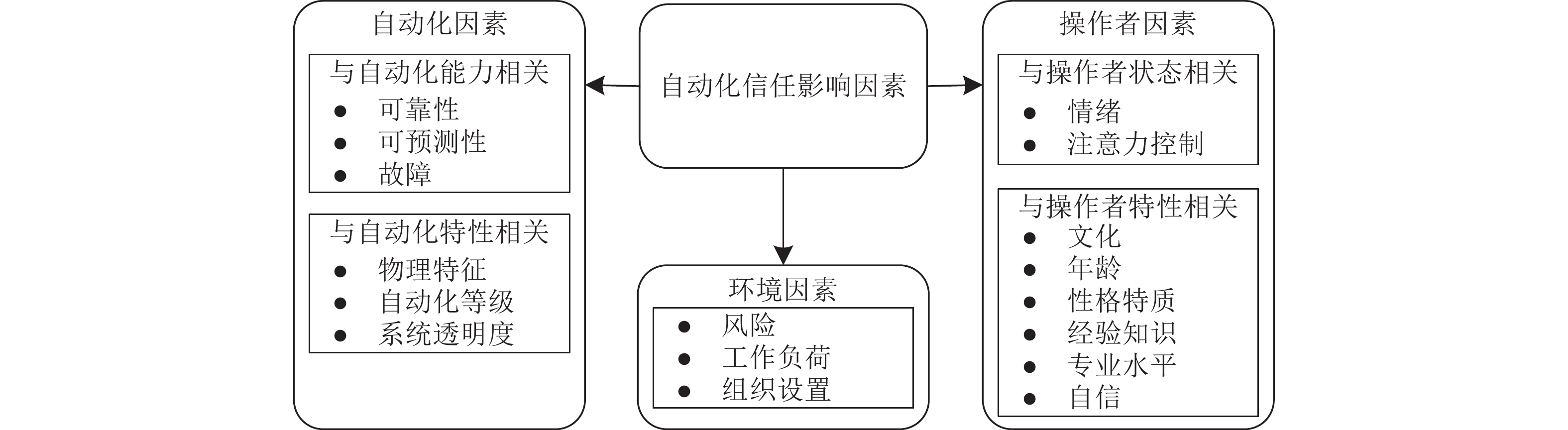

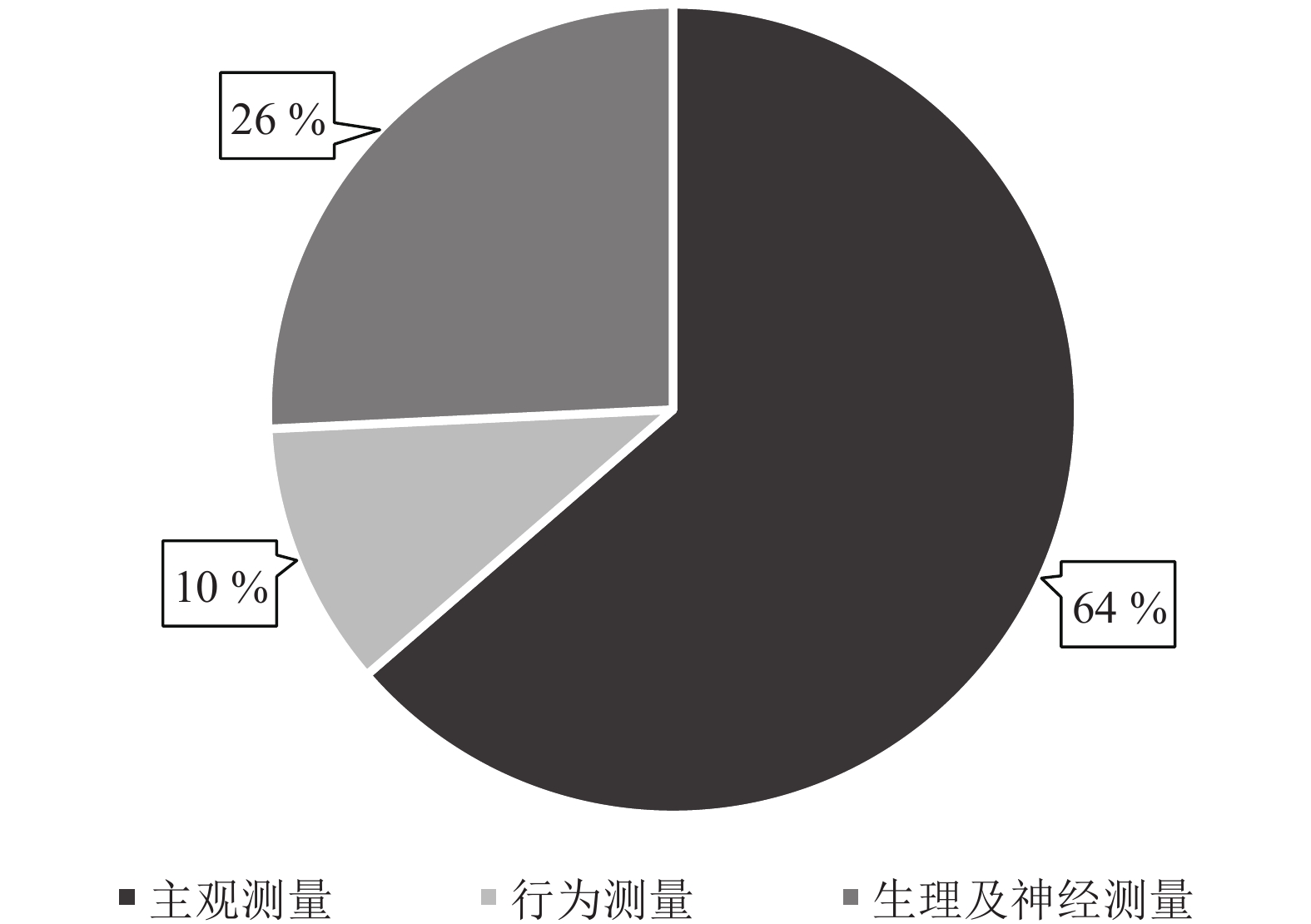

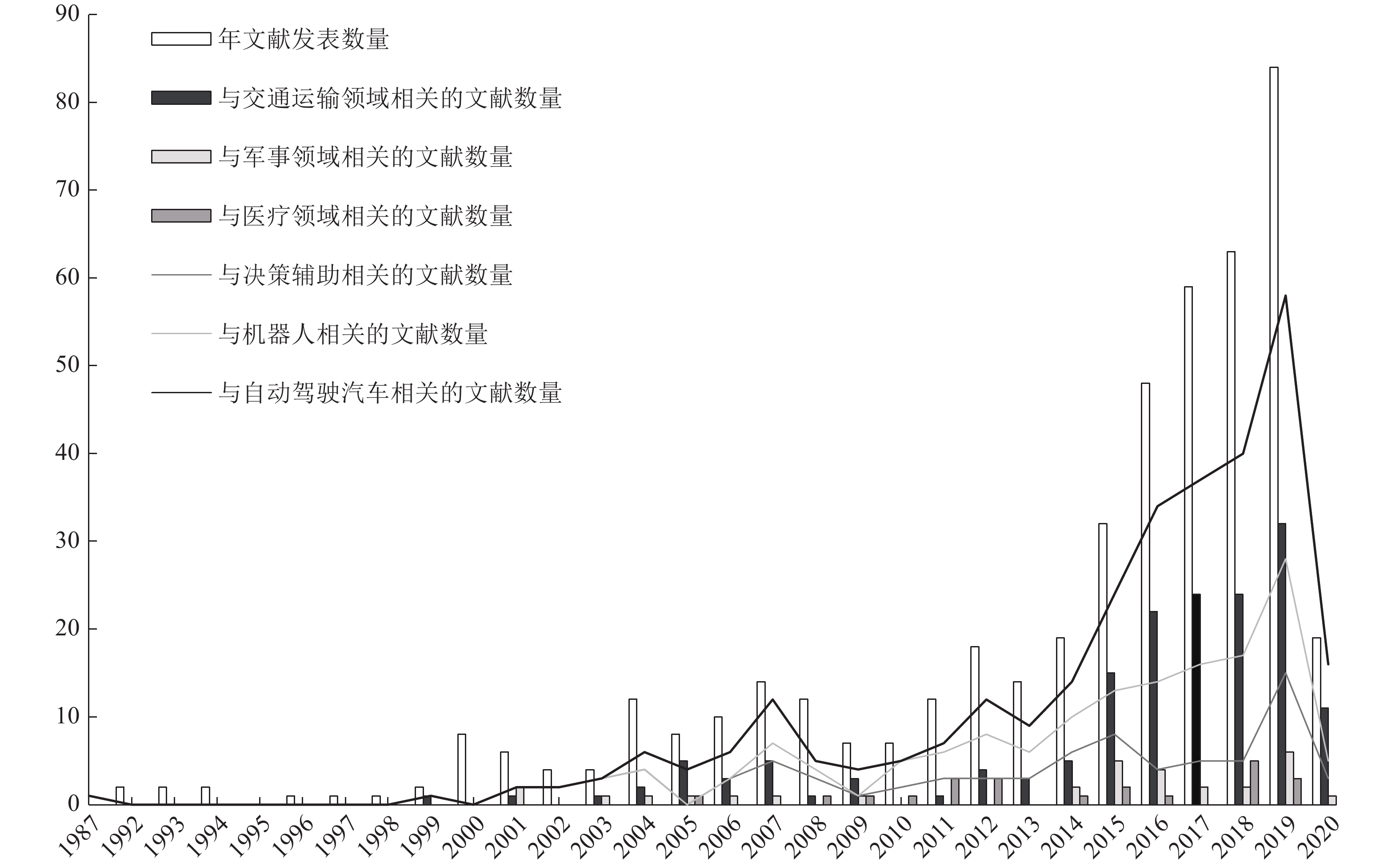

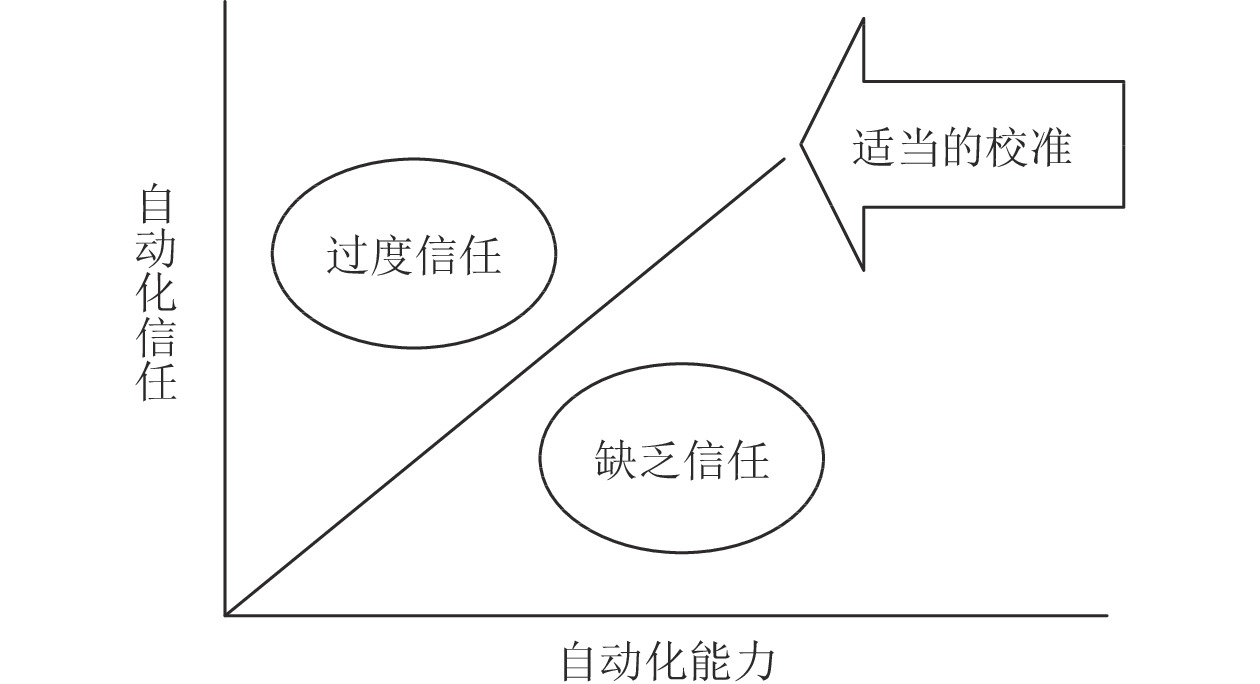

Abstract: With the rapid improvement of automation capability, the human-machine relationship has undergone profound changes: the human's role is gradually shifting from the main controller of automation to a partner who shares control with it. To achieve performance and safety goals, human-machine collaborative control requires operators to calibrate their trust in automated machines appropriately, and trust in automation has become one of the greatest challenges to achieving safe and effective human-machine collaborative control. This paper reviews the literature on trust in automation and summarizes in detail the main theoretical and empirical work in the field to date, centering on the concept, models, influencing factors and measurement methods of trust in automation. Finally, based on this review and an analysis of the related literature, the paper identifies limitations of existing research and offers suggestions for future research on trust in automation from the perspective of human-machine system design.


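Table 1 Summary of computational models of trust in automation

| Type | Offline trust model | Online trust model |
|---|---|---|
| Input | Prior parameters | Prior parameters plus real-time behavioural, physiological and neural data |
| Function | Simulates the range of possible scenarios to predict the level of trust in automation | Estimates the level of trust in automation in real time while the system is actually running |
| Application | Design phase of the automated system | Deployment phase of the automated system |
| Outcome | Static improvement of the automation design | Dynamic adjustment of the automation's behaviour |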
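The offline/online distinction in Table 1 can be illustrated with a minimal Python sketch. It assumes, purely for illustration, a first-order trust dynamic T[k+1] = a·T[k] + (1 - a)·E[k], where T is trust in [0, 1], E[k] is 1 if the automation performed correctly at step k and 0 otherwise, and `a` is a prior parameter fitted in advance. The names `TrustDynamics`, `predict_offline` and `estimate_online`, the parameter values, and the use of a scalar Kalman filter to fuse real-time trust indicators are hypothetical choices, not one of the published models the table summarizes.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class TrustDynamics:
    """Hypothetical first-order trust model (illustration only).

    T[k+1] = a * T[k] + (1 - a) * E[k], with T in [0, 1] and
    E[k] = 1 for a correct automation action, 0 for a failure.
    `a` (trust inertia) is a prior parameter assumed to be fitted offline.
    """
    a: float = 0.8

    def step(self, trust: float, outcome: int) -> float:
        return self.a * trust + (1.0 - self.a) * outcome


def predict_offline(model: TrustDynamics, outcomes: Sequence[int],
                    t0: float = 0.5) -> List[float]:
    """Offline use: simulate a scripted scenario to predict a trust trajectory."""
    trajectory = [t0]
    for e in outcomes:
        trajectory.append(model.step(trajectory[-1], e))
    return trajectory


def estimate_online(model: TrustDynamics, outcomes: Sequence[int],
                    measurements: Sequence[Optional[float]], t0: float = 0.5,
                    p0: float = 0.1, q: float = 0.01, r: float = 0.05) -> List[float]:
    """Online use: scalar Kalman filter that corrects the model prediction with
    noisy real-time trust indicators in [0, 1] (None = no measurement this step).

    q and r are assumed process and measurement noise variances.
    """
    trust, var = t0, p0
    estimates = []
    for e, z in zip(outcomes, measurements):
        # Predict with the prior model.
        trust = model.step(trust, e)
        var = model.a ** 2 * var + q
        # Correct with the real-time measurement, if one is available.
        if z is not None:
            gain = var / (var + r)
            trust += gain * (z - trust)
            var *= 1.0 - gain
        estimates.append(trust)
    return estimates


if __name__ == "__main__":
    model = TrustDynamics(a=0.8)
    outcomes = [1, 1, 1, 0, 1]  # scripted scenario with one automation failure
    print(predict_offline(model, outcomes))
    print(estimate_online(model, outcomes, [None, 0.7, None, 0.3, None]))
```

Both functions share the same prior parameter `a`; only the online variant also consumes measurements, mirroring the "Input" row of the table. Because each correction is a convex combination of the prediction and a measurement in [0, 1], the estimate stays in [0, 1].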

Table 2 Summary of common behavioural measures of trust in automation

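Table 3 Important physiological and neural measures of trust in automation and their basis

| Measure | Basis |
|---|---|
| Eye tracking: continuous measurement of trust in automation by capturing the operator's gaze behaviour | Overt behaviours such as monitoring are closely linked to subjective trust in automation [78]. Although the experimental evidence on trust and monitoring behaviour is not entirely consistent [142], most empirical studies report a significant negative correlation between subjective trust ratings and the operator's monitoring frequency [48]. Gaze behaviour that reflects how closely the operator monitors the automation can therefore provide reliable information for real-time trust measurement [140, 142−143]. |
| EEG: detecting the operator's trust state from pattern features of EEG signals | Many studies have examined the neural correlates of interpersonal trust [144−148], which suggests that neuroimaging tools can also be used to examine the neural correlates of trust in automation. EEG offers better temporal resolution than other tools such as functional magnetic resonance imaging (fMRI) [149], and EEG patterns have already been used to identify users' cognitive and affective states with good accuracy in brain-computer interface design [149]. Because trust in automation is a cognitive construct, detecting the operator's trust calibration from EEG pattern features is feasible and has achieved relatively high accuracy [68−69, 150]. |
| EDA: inferring the level of trust in automation from the level of electrodermal activity (EDA) | Studies have shown that lower trust in automation may be associated with higher EDA levels [151]. Combining EDA with other physiological and neural measures, for example with eye tracking [142] or EEG [68−69], measures trust more accurately than any single measure alone. |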
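The eye-tracking row of Table 3 can be illustrated with a minimal Python sketch. It assumes an eye tracker whose samples have already been mapped to areas of interest (AOIs); the AOI labels, the function names `monitoring_metrics` and `pearson_r`, and the definition of a glance as an entry into the target AOI are hypothetical choices, not the procedure used in [140] or [143]. Per-participant monitoring metrics computed this way could then be correlated with subjective trust ratings, where the studies summarized above would lead one to expect a negative coefficient [48].

```python
from math import sqrt
from typing import Sequence, Tuple


def monitoring_metrics(aoi_labels: Sequence[str], sample_rate_hz: float,
                       target: str = "monitored_area") -> Tuple[float, float]:
    """Return (glances per minute, dwell ratio) on one area of interest (AOI).

    aoi_labels: one AOI label per gaze sample (hypothetical labels, assumed to be
    produced upstream by the eye tracker and an AOI mapping step).
    A glance is counted each time gaze enters the target AOI from elsewhere.
    """
    if not aoi_labels or sample_rate_hz <= 0:
        raise ValueError("need a non-empty gaze record and a positive sample rate")
    glances = 0
    samples_on_target = 0
    previous = None
    for label in aoi_labels:
        if label == target:
            samples_on_target += 1
            if previous != target:
                glances += 1  # gaze has just moved onto the target AOI
        previous = label
    minutes = len(aoi_labels) / sample_rate_hz / 60.0
    return glances / minutes, samples_on_target / len(aoi_labels)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length samples, e.g. per-participant
    glance rate vs. questionnaire trust score."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length (n >= 2)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


if __name__ == "__main__":
    # Synthetic one-second record at 4 Hz, for illustration only (not real data).
    labels = ["secondary_task", "monitored_area", "monitored_area", "secondary_task"]
    rate, dwell = monitoring_metrics(labels, sample_rate_hz=4.0)
    print(f"{rate:.1f} glances/min, dwell ratio {dwell:.2f}")
```

Counting entries rather than samples separates glance frequency from dwell time, which lets both candidate indicators of monitoring be tested against trust ratings.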

Table 4 Main research groups of trust in automation and their research contributions

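| No. | Country | Institution | Team and representative researchers | Research contribution | Papers |
|---|---|---|---|---|---|
| 1 | USA | U.S. Army Research Laboratory | Human Research and Engineering Directorate: Chen | Proposed a series of methods for calibrating trust in automation based on system transparency | 26 |
| 2 | USA | U.S. Air Force Research Laboratory | Human Trust and Interaction Branch: Lyons | Applied research on trust in automation in military settings | 24 |
| 3 | USA | University of Central Florida | Institute for Simulation and Training: Hancock | Established a theoretical framework of human-robot trust and conducted empirical studies of its influencing factors | 21 |
| 4 | USA | Clemson University | Department of Mechanical Engineering: Saeidi and Wang | Developed autonomy-allocation strategies based on computational trust models to improve human-machine collaboration performance | 20 |
| 5 | USA | George Mason University | Department of Psychology: de Visser | Established and refined theories of trust repair in automation, focusing on the role of the automation's anthropomorphic features in trust repair | 18 |
| 6 | Japan | University of Tsukuba | Department of Risk Engineering: Itoh and Inagaki | Design methods for human-automated vehicle cooperative systems based on trust calibration | 14 |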

[1] Schörnig N. Unmanned systems: The robotic revolution as a challenge for arms control. Information Technology for Peace and Security: IT Applications and Infrastructures in Conflicts, Crises, War, and Peace. Wiesbaden: Springer, 2019. 233−256

[2] Meyer G, Beiker S. Road Vehicle Automation. Cham: Springer, 2019. 73−109

[3] Bahrin M A K, Othman M F, Azli N H N, Talib M F. Industry 4.0: A review on industrial automation and robotic. Jurnal Teknologi, 2016, 78(6−13): 137−143

[4] Musen M A, Middleton B, Greenes R A. Clinical decision-support systems. Biomedical Informatics: Computer Applications in Health Care and Biomedicine. London: Springer, 2014. 643−674

[5] Janssen C P, Donker S F, Brumby D P, Kun A L. History and future of human-automation interaction. International Journal of Human-Computer Studies, 2019, 131: 99−107 doi: 10.1016/j.ijhcs.2019.05.006

[6] Xu Wei, Ge Lie-Zhong. Engineering psychology in the era of artificial intelligence. Advances in Psychological Science, 2020, 28(9): 1409−1425 doi: 10.3724/SP.J.1042.2020.01409

[7] Parisi G I, Kemker R, Part J L, Kanan C, Wermter S. Continual lifelong learning with neural networks: A review. Neural Networks, 2019, 113: 54−71 doi: 10.1016/j.neunet.2019.01.012

[8] Grigsby S S. Artificial intelligence for advanced human-machine symbiosis. In: Proceedings of the 12th International Conference on Augmented Cognition: Intelligent Technologies. Berlin, Germany: Springer, 2018. 255−266

[9] Gogoll J, Uhl M. Rage against the machine: Automation in the moral domain. Journal of Behavioral and Experimental Economics, 2018, 74: 97−103 doi: 10.1016/j.socec.2018.04.003

[10] Gunning D. Explainable artificial intelligence (XAI) [Online], available: https://www.cc.gatech.edu/~alanwags/DLAI2016/(Gunning)%20IJCAI-16%20DLAI%20WS.pdf, April 26, 2020

[11] Endsley M R. From here to autonomy: Lessons learned from human-automation research. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2017, 59(1): 5−27 doi: 10.1177/0018720816681350

[12] Blomqvist K. The many faces of trust. Scandinavian Journal of Management, 1997, 13(3): 271−286 doi: 10.1016/S0956-5221(97)84644-1

[13] Rotter J B. A new scale for the measurement of interpersonal trust. Journal of Personality, 1967, 35(4): 651−665 doi: 10.1111/j.1467-6494.1967.tb01454.x

[14] Muir B M. Trust in automation: Part I. Theoretical issues in the study of trust and human intervention in automated systems. Ergonomics, 1994, 37(11): 1905−1922 doi: 10.1080/00140139408964957

[15] Lewandowsky S, Mundy M, Tan G P A. The dynamics of trust: Comparing humans to automation. Journal of Experimental Psychology: Applied, 2000, 6(2): 104−123 doi: 10.1037/1076-898X.6.2.104

[16] Muir B M. Trust between humans and machines, and the design of decision aids. International Journal of Man-Machine Studies, 1987, 27(5-6): 527−539 doi: 10.1016/S0020-7373(87)80013-5

[17] Parasuraman R, Riley V. Humans and automation: Use, misuse, disuse, abuse. Human Factors: The Journal of the Human Factors and Ergonomics Society, 1997, 39(2): 230−253 doi: 10.1518/001872097778543886

[18] Levin S. Tesla fatal crash: ‘autopilot’ mode sped up car before driver killed, report finds [Online], available: https://www.theguardian.com/technology/2018/jun/07/tesla-fatal-crash-silicon-valley-autopilot-mode-report, June 8, 2020

[19] The Tesla Team. An update on last week's accident [Online], available: https://www.tesla.com/en_GB/blog/update-last-week%E2%80%99s-accident, March 20, 2020

[20] Mayer R C, Davis J H, Schoorman F D. An integrative model of organizational trust. Academy of Management Review, 1995, 20(3): 709−734 doi: 10.5465/amr.1995.9508080335

[21] McKnight D H, Cummings L L, Chervany N L. Initial trust formation in new organizational relationships. Academy of Management Review, 1998, 23(3): 473−490 doi: 10.5465/amr.1998.926622

[22] Jarvenpaa S L, Knoll K, Leidner D E. Is anybody out there? Antecedents of trust in global virtual teams. Journal of Management Information Systems, 1998, 14(4): 29−64 doi: 10.1080/07421222.1998.11518185

[23] Siau K, Shen Z X. Building customer trust in mobile commerce. Communications of the ACM, 2003, 46(4): 91−94 doi: 10.1145/641205.641211

[24] Gefen D. E-commerce: The role of familiarity and trust. Omega, 2000, 28(6): 725−737 doi: 10.1016/S0305-0483(00)00021-9

[25] McKnight D H, Choudhury V, Kacmar C. Trust in e-commerce vendors: A two-stage model. In: Proceedings of the 21st International Conference on Information Systems. Brisbane, Australia: Association for Information Systems, 2000. 96−103

[26] Li X, Hess T J, Valacich J S. Why do we trust new technology? A study of initial trust formation with organizational information systems. The Journal of Strategic Information Systems, 2008, 17(1): 39−71 doi: 10.1016/j.jsis.2008.01.001

[27] Lee J D, See K A. Trust in automation: Designing for appropriate reliance. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2004, 46(1): 50−80 doi: 10.1518/hfes.46.1.50.30392

[28] Pan B, Hembrooke H, Joachims T, Lorigo L, Gay G, Granka L. In Google we trust: Users' decisions on rank, position, and relevance. Journal of Computer-Mediated Communication, 2007, 12(3): 801−823 doi: 10.1111/j.1083-6101.2007.00351.x

[29] Riegelsberger J, Sasse M A, McCarthy J D. The researcher's dilemma: Evaluating trust in computer-mediated communication. International Journal of Human-Computer Studies, 2003, 58(6): 759−781 doi: 10.1016/S1071-5819(03)00042-9

[30] Hancock P A, Billings D R, Schaefer K E, Chen J Y C, de Visser E J, Parasuraman R. A meta-analysis of factors affecting trust in human-robot interaction. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2011, 53(5): 517−527 doi: 10.1177/0018720811417254

[31] Billings D R, Schaefer K E, Chen J Y C, Hancock P A. Human-robot interaction: Developing trust in robots. In: Proceedings of the 7th ACM/IEEE International Conference on Human-Robot Interaction. Boston, USA: ACM, 2012. 109−110

[32] Madhavan P, Wiegmann D A. Similarities and differences between human-human and human-automation trust: An integrative review. Theoretical Issues in Ergonomics Science, 2007, 8(4): 277−301 doi: 10.1080/14639220500337708

[33] Walker G H, Stanton N A, Salmon P. Trust in vehicle technology. International Journal of Vehicle Design, 2016, 70(2): 157−182 doi: 10.1504/IJVD.2016.074419

[34] Siau K, Wang W Y. Building trust in artificial intelligence, machine learning, and robotics. Cutter Business Technology Journal, 2018, 31(2): 47−53

[35] Schaefer K E, Chen J Y C, Szalma J L, Hancock P A. A meta-analysis of factors influencing the development of trust in automation: Implications for understanding autonomy in future systems. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2016, 58(3): 377−400 doi: 10.1177/0018720816634228

[36] Hoff K A, Bashir M. Trust in automation: Integrating empirical evidence on factors that influence trust. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2015, 57(3): 407−434 doi: 10.1177/0018720814547570

[37] Kaber D B. Issues in human-automation interaction modeling: Presumptive aspects of frameworks of types and levels of automation. Journal of Cognitive Engineering and Decision Making, 2018, 12(1): 7−24 doi: 10.1177/1555343417737203

[38] Hancock P A. Imposing limits on autonomous systems. Ergonomics, 2017, 60(2): 284−291 doi: 10.1080/00140139.2016.1190035

[39] Schaefer K E. The Perception and Measurement of Human-Robot Trust [Ph. D. dissertation], University of Central Florida, USA, 2013.

[40] Schaefer K E, Billings D R, Szalma J L, Adams J K, Sanders T L, Chen J Y C, et al. A Meta-Analysis of Factors Influencing the Development of Trust in Automation: Implications for Human-Robot Interaction, Technical Report ARL-TR-6984, Army Research Laboratory, USA, 2014.
[41] Nass C, Fogg B J, Moon Y. Can computers be teammates? International Journal of Human-Computer Studies, 1996, 45(6): 669−678 doi: 10.1006/ijhc.1996.0073

[42] Madhavan P, Wiegmann D A. A new look at the dynamics of human-automation trust: Is trust in humans comparable to trust in machines? Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2004, 48(3): 581−585 doi: 10.1177/154193120404800365

[43] Dimoka A. What does the brain tell us about trust and distrust? Evidence from a functional neuroimaging study. MIS Quarterly, 2010, 34(2): 373−396 doi: 10.2307/20721433

[44] Riedl R, Hubert M, Kenning P. Are there neural gender differences in online trust? An fMRI study on the perceived trustworthiness of eBay offers. MIS Quarterly, 2010, 34(2): 397−428 doi: 10.2307/20721434

[45] Billings D, Schaefer K, Llorens N, Hancock P A. What is Trust? Defining the construct across domains. In: Proceedings of the American Psychological Association Conference. Florida, USA: APA, 2012. 76−84

[46] Barber B. The Logic and Limits of Trust. New Jersey: Rutgers University Press, 1983. 15−22

[47] Rempel J K, Holmes J G, Zanna M P. Trust in close relationships. Journal of Personality and Social Psychology, 1985, 49(1): 95−112 doi: 10.1037/0022-3514.49.1.95

[48] Muir B M, Moray N. Trust in automation. Part II. Experimental studies of trust and human intervention in a process control simulation. Ergonomics, 1996, 39(3): 429−460 doi: 10.1080/00140139608964474

[49] Ajzen I. The theory of planned behavior. Organizational Behavior and Human Decision Processes, 1991, 50(2): 179−211 doi: 10.1016/0749-5978(91)90020-T

[50] Dzindolet M T, Pierce L G, Beck H P, Dawe L A, Anderson B W. Predicting misuse and disuse of combat identification systems. Military Psychology, 2001, 13(3): 147−164 doi: 10.1207/S15327876MP1303_2

[51] Madhavan P, Wiegmann D A. Effects of information source, pedigree, and reliability on operator interaction with decision support systems. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2007, 49(5): 773−785 doi: 10.1518/001872007X230154

[52] Goodyear K, Parasuraman R, Chernyak S, de Visser E, Madhavan P, Deshpande G, et al. An fMRI and effective connectivity study investigating miss errors during advice utilization from human and machine agents. Social Neuroscience, 2017, 12(5): 570−581 doi: 10.1080/17470919.2016.1205131

[53] Hoffmann H, Söllner M. Incorporating behavioral trust theory into system development for ubiquitous applications. Personal and Ubiquitous Computing, 2014, 18(1): 117−128 doi: 10.1007/s00779-012-0631-1

[54] Ekman F, Johansson M, Sochor J. Creating appropriate trust in automated vehicle systems: A framework for HMI design. IEEE Transactions on Human-Machine Systems, 2018, 48(1): 95−101 doi: 10.1109/THMS.2017.2776209

[55] Xu A Q, Dudek G. OPTIMo: Online probabilistic trust inference model for asymmetric human-robot collaborations. In: Proceedings of the 10th Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI). Portland, USA: IEEE, 2015. 221−228

[56] Nam C, Walker P, Lewis M, Sycara K. Predicting trust in human control of swarms via inverse reinforcement learning. In: Proceedings of the 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN). Lisbon, Portugal: IEEE, 2017. 528−533

[57] Akash K, Polson K, Reid T, Jain N. Improving human-machine collaboration through transparency-based feedback-part I: Human trust and workload model. IFAC-PapersOnLine, 2019, 51(34): 315−321 doi: 10.1016/j.ifacol.2019.01.028

[58] Gao J, Lee J D. Extending the decision field theory to model operators' reliance on automation in supervisory control situations. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans, 2006, 36(5): 943−959 doi: 10.1109/TSMCA.2005.855783

[59] Wang Y, Shi Z, Wang C, Zhang F. Human-robot mutual trust in (Semi) autonomous underwater robots. Cooperative Robots and Sensor Networks. Berlin: Springer, 2014. 115−137

[60] Clare A S. Modeling Real-time Human-automation Collaborative Scheduling of Unmanned Vehicles [Ph. D. dissertation], Massachusetts Institute of Technology, USA, 2013.

[61] Gao F, Clare A S, Macbeth J C, Cummings M L. Modeling the impact of operator trust on performance in multiple robot control. In: Proceedings of the AAAI Spring Symposium Series. Palo Alto, USA: AAAI, 2013. 164−169

[62] Hoogendoorn M, Jaffry S W, Treur J. Cognitive and neural modeling of dynamics of trust in competitive trustees. Cognitive Systems Research, 2012, 14(1): 60−83 doi: 10.1016/j.cogsys.2010.12.011

[63] Hussein A, Elsawah S, Abbass H A. Towards trust-aware human-automation interaction: An overview of the potential of computational trust models. In: Proceedings of the 53rd Hawaii International Conference on System Sciences. Hawaii, USA: University of Hawaii, 2020. 47−57

[64] Lee J, Moray N. Trust, control strategies and allocation of function in human-machine systems. Ergonomics, 1992, 35(10): 1243−1270 doi: 10.1080/00140139208967392

[65] Akash K, Hu W L, Reid T, Jain N. Dynamic modeling of trust in human-machine interactions. In: Proceedings of the 2017 American Control Conference (ACC). Seattle, USA: IEEE, 2017. 1542−1548

[66] Hu W L, Akash K, Reid T, Jain N. Computational modeling of the dynamics of human trust during human-machine interactions. IEEE Transactions on Human-Machine Systems, 2019, 49(6): 485−497 doi: 10.1109/THMS.2018.2874188

[67] Akash K, Reid T, Jain N. Improving human-machine collaboration through transparency-based feedback-part II: Control design and synthesis. IFAC-PapersOnLine, 2019, 51(34): 322−328 doi: 10.1016/j.ifacol.2019.01.026

[68] Hu W L, Akash K, Jain N, Reid T. Real-time sensing of trust in human-machine interactions. IFAC-PapersOnLine, 2016, 49(32): 48−53 doi: 10.1016/j.ifacol.2016.12.188

[69] Akash K, Hu W L, Jain N, Reid T. A classification model for sensing human trust in machines using EEG and GSR. ACM Transactions on Interactive Intelligent Systems, 2018, 8(4): Article No. 27

[70] Akash K, Reid T, Jain N. Adaptive probabilistic classification of dynamic processes: A case study on human trust in automation. In: Proceedings of the 2018 Annual American Control Conference (ACC). Milwaukee, USA: IEEE, 2018. 246−251

[71] Merritt S M, Ilgen D R. Not all trust is created equal: Dispositional and history-based trust in human-automation interactions. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2008, 50(2): 194−210 doi: 10.1518/001872008X288574

[72] Bagheri N, Jamieson G A. The impact of context-related reliability on automation failure detection and scanning behaviour. In: Proceedings of the 2004 International Conference on Systems, Man and Cybernetics. The Hague, Netherlands: IEEE, 2004. 212−217

[73] Cahour B, Forzy J F. Does projection into use improve trust and exploration? An example with a cruise control system. Safety Science, 2009, 47(9): 1260−1270 doi: 10.1016/j.ssci.2009.03.015

[74] Cummings M L, Clare A, Hart C. The role of human-automation consensus in multiple unmanned vehicle scheduling. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2010, 52(1): 17−27 doi: 10.1177/0018720810368674

[75] Kraus J M, Forster Y, Hergeth S, Baumann M. Two routes to trust calibration: Effects of reliability and brand information on trust in automation. International Journal of Mobile Human Computer Interaction, 2019, 11(3): 1−17 doi: 10.4018/IJMHCI.2019070101

[76] Kelly C, Boardman M, Goillau P, Jeannot E. Guidelines for Trust in Future ATM Systems: A Literature Review, Technical Report HRS/HSP-005-GUI-01, European Organization for the Safety of Air Navigation, Belgium, 2003.

[77] Riley V. A general model of mixed-initiative human-machine systems. Proceedings of the Human Factors Society Annual Meeting, 1989, 33(2): 124−128 doi: 10.1177/154193128903300227

[78] Parasuraman R, Manzey D H. Complacency and bias in human use of automation: An attentional integration. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2010, 52(3): 381−410 doi: 10.1177/0018720810376055

[79] Bailey N R, Scerbo M W, Freeman F G, Mikulka P J, Scott L A. Comparison of a brain-based adaptive system and a manual adaptable system for invoking automation. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2006, 48(4): 693−709 doi: 10.1518/001872006779166280

[80] Moray N, Hiskes D, Lee J, Muir B M. Trust and Human Intervention in Automated Systems. Expertise and Technology: Cognition & Human-computer Cooperation. New Jersey: L. Erlbaum Associates Inc, 1995. 183−194

[81] Yu K, Berkovsky S, Taib R, Conway D, Zhou J L, Chen F. User trust dynamics: An investigation driven by differences in system performance. In: Proceedings of the 22nd International Conference on Intelligent User Interfaces. Limassol, Cyprus: ACM, 2017. 307−317

[82] De Visser E J, Monfort S S, McKendrick R, Smith M A B, McKnight P E, Krueger F, et al. Almost human: Anthropomorphism increases trust resilience in cognitive agents. Journal of Experimental Psychology: Applied, 2016, 22(3): 331−349 doi: 10.1037/xap0000092

[83] Pak R, Fink N, Price M, Bass B, Sturre L. Decision support aids with anthropomorphic characteristics influence trust and performance in younger and older adults. Ergonomics, 2012, 55(9): 1059−1072 doi: 10.1080/00140139.2012.691554

[84] De Vries P, Midden C, Bouwhuis D. The effects of errors on system trust, self-confidence, and the allocation of control in route planning. International Journal of Human-Computer Studies, 2003, 58(6): 719−735 doi: 10.1016/S1071-5819(03)00039-9

[85] Moray N, Inagaki T, Itoh M. Adaptive automation, trust, and self-confidence in fault management of time-critical tasks. Journal of Experimental Psychology: Applied, 2000, 6(1): 44−58 doi: 10.1037/1076-898X.6.1.44

[86] Lewis M, Sycara K, Walker P. The role of trust in human-robot interaction. Foundations of Trusted Autonomy. Cham: Springer, 2018. 135−159

[87] Verberne F M F, Ham J, Midden C J H. Trust in smart systems: Sharing driving goals and giving information to increase trustworthiness and acceptability of smart systems in cars. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2012, 54(5): 799−810 doi: 10.1177/0018720812443825

[88] de Visser E, Parasuraman R. Adaptive aiding of human-robot teaming: Effects of imperfect automation on performance, trust, and workload. Journal of Cognitive Engineering and Decision Making, 2011, 5(2): 209−231 doi: 10.1177/1555343411410160

[89] Endsley M R. Situation awareness in future autonomous vehicles: Beware of the unexpected. In: Proceedings of the 20th Congress of the International Ergonomics Association (IEA 2018). Cham, Switzerland: Springer, 2018. 303−309

[90] Wang L, Jamieson G A, Hollands J G. Trust and reliance on an automated combat identification system. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2009, 51(3): 281−291 doi: 10.1177/0018720809338842

[91] Dzindolet M T, Peterson S A, Pomranky R A, Pierce G L, Beck H P. The role of trust in automation reliance. International Journal of Human-Computer Studies, 2003, 58(6): 697−718 doi: 10.1016/S1071-5819(03)00038-7

[92] Davis S E. Individual Differences in Operators' Trust in Autonomous Systems: A Review of the Literature, Technical Report DST-Group-TR-3587, Joint and Operations Analysis Division, Defence Science and Technology Group, Australia, 2019.

[93] Merritt S M. Affective processes in human-automation interactions. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2011, 53(4): 356−370 doi: 10.1177/0018720811411912

[94] Stokes C K, Lyons J B, Littlejohn K, Natarian J, Case E, Speranza N. Accounting for the human in cyberspace: Effects of mood on trust in automation. In: Proceedings of the 2010 International Symposium on Collaborative Technologies and Systems. Chicago, USA: IEEE, 2010. 180−187

[95] Merritt S M, Heimbaugh H, LaChapell J, Lee D. I trust it, but I don't know why: Effects of implicit attitudes toward automation on trust in an automated system. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2013, 55(3): 520−534 doi: 10.1177/0018720812465081

[96] Ardern-Jones J, Hughes D K, Rowe P H, Mottram D R, Green C F. Attitudes and opinions of nursing and medical staff regarding the supply and storage of medicinal products before and after the installation of a drawer-based automated stock-control system. International Journal of Pharmacy Practice, 2009, 17(2): 95−99 doi: 10.1211/ijpp.17.02.0004

[97] Gao J, Lee J D, Zhang Y. A dynamic model of interaction between reliance on automation and cooperation in multi-operator multi-automation situations. International Journal of Industrial Ergonomics, 2006, 36(5): 511−526 doi: 10.1016/j.ergon.2006.01.013

[98] Reichenbach J, Onnasch L, Manzey D. Human performance consequences of automated decision aids in states of sleep loss. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2011, 53(6): 717−728 doi: 10.1177/0018720811418222

[99] Chen J Y C, Terrence P I. Effects of imperfect automation and individual differences on concurrent performance of military and robotics tasks in a simulated multitasking environment. Ergonomics, 2009, 52(8): 907−920 doi: 10.1080/00140130802680773

[100] Chen J Y C, Barnes M J. Supervisory control of multiple robots in dynamic tasking environments. Ergonomics, 2012, 55(9): 1043−1058 doi: 10.1080/00140139.2012.689013

[101] Naef M, Fehr E, Fischbacher U, Schupp J, Wagner G G. Decomposing trust: Explaining national and ethnical trust differences. International Journal of Psychology, 2008, 43(3-4): 577−577

[102] Huerta E, Glandon T A, Petrides Y. Framing, decision-aid systems, and culture: Exploring influences on fraud investigations. International Journal of Accounting Information Systems, 2012, 13(4): 316−333 doi: 10.1016/j.accinf.2012.03.007

[103] Chien S Y, Lewis M, Sycara K, Liu J S, Kumru A. Influence of cultural factors in dynamic trust in automation. In: Proceedings of the 2016 International Conference on Systems, Man, and Cybernetics (SMC). Budapest, Hungary: IEEE, 2016. 2884−2889

[104] Donmez B, Boyle L N, Lee J D, McGehee D V. Drivers' attitudes toward imperfect distraction mitigation strategies. Transportation Research Part F: Traffic Psychology and Behaviour, 2006, 9(6): 387−398 doi: 10.1016/j.trf.2006.02.001

[105] Kircher K, Thorslund B. Effects of road surface appearance and low friction warning systems on driver behaviour and confidence in the warning system. Ergonomics, 2009, 52(2): 165−176 doi: 10.1080/00140130802277547

[106] Ho G, Wheatley D, Scialfa C T. Age differences in trust and reliance of a medication management system. Interacting with Computers, 2005, 17(6): 690−710 doi: 10.1016/j.intcom.2005.09.007

[107] Steinke F, Fritsch T, Silbermann L. Trust in ambient assisted living (AAL): A systematic review of trust in automation and assistance systems. International Journal on Advances in Life Sciences, 2012, 4(3-4): 77−88

[108] McBride M, Morgan S. Trust calibration for automated decision aids [Online], available: https://www.researchgate.net/publication/303168234_Trust_calibration_for_automated_decision_aids, May 15, 2020

[109] Gaines Jr S O, Panter A T, Lyde M D, Steers W N, Rusbult C E, Cox C L, et al. Evaluating the circumplexity of interpersonal traits and the manifestation of interpersonal traits in interpersonal trust. Journal of Personality and Social Psychology, 1997, 73(3): 610−623 doi: 10.1037/0022-3514.73.3.610

[110] Looije R, Neerincx M A, Cnossen F. Persuasive robotic assistant for health self-management of older adults: Design and evaluation of social behaviors. International Journal of Human-Computer Studies, 2010, 68(6): 386−397 doi: 10.1016/j.ijhcs.2009.08.007

[111] Szalma J L, Taylor G S. Individual differences in response to automation: The five factor model of personality. Journal of Experimental Psychology: Applied, 2011, 17(2): 71−96 doi: 10.1037/a0024170

[112] Balfe N, Sharples S, Wilson J R. Understanding is key: An analysis of factors pertaining to trust in a real-world automation system. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2018, 60(4): 477−495 doi: 10.1177/0018720818761256

[113] Rajaonah B, Anceaux F, Vienne F. Trust and the use of adaptive cruise control: A study of a cut-in situation. Cognition, Technology & Work, 2006, 8(2): 146−155

[114] Fan X C, Oh S, McNeese M, Yen J, Cuevas H, Strater L, et al. The influence of agent reliability on trust in human-agent collaboration. In: Proceedings of the 15th European Conference on Cognitive Ergonomics: The Ergonomics of Cool Interaction. Funchal, Portugal: Association for Computing Machinery, 2008. Article No. 7

[115] Sanchez J, Rogers W A, Fisk A D, Rovira E. Understanding reliance on automation: Effects of error type, error distribution, age and experience. Theoretical Issues in Ergonomics Science, 2014, 15(2): 134−160 doi: 10.1080/1463922X.2011.611269

[116] Riley V. Operator reliance on automation: Theory and data. Automation and Human Performance: Theory and Applications. Mahwah, NJ: Lawrence Erlbaum Associates, 1996. 19−35

[117] Lee J D, Moray N. Trust, self-confidence, and operators' adaptation to automation. International Journal of Human-Computer Studies, 1994, 40(1): 153−184 doi: 10.1006/ijhc.1994.1007

[118] Perkins L A, Miller J E, Hashemi A, Burns G. Designing for human-centered systems: Situational risk as a factor of trust in automation. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2010, 54(25): 2130−2134 doi: 10.1177/154193121005402502

[119] Bindewald J M, Rusnock C F, Miller M E. Measuring human trust behavior in human-machine teams. In: Proceedings of the AHFE 2017 International Conference on Applied Human Factors in Simulation and Modeling. Los Angeles, USA: Springer, 2017. 47−58

[120] Biros D P, Daly M, Gunsch G. The influence of task load and automation trust on deception detection. Group Decision and Negotiation, 2004, 13(2): 173−189 doi: 10.1023/B:GRUP.0000021840.85686.57

[121] Workman M. Expert decision support system use, disuse, and misuse: A study using the theory of planned behavior. Computers in Human Behavior, 2005, 21(2): 211−231 doi: 10.1016/j.chb.2004.03.011

[122] Jian J Y, Bisantz A M, Drury C G. Foundations for an empirically determined scale of trust in automated systems. International Journal of Cognitive Ergonomics, 2000, 4(1): 53−71 doi: 10.1207/S15327566IJCE0401_04

[123] Buckley L, Kaye S A, Pradhan A K. Psychosocial factors associated with intended use of automated vehicles: A simulated driving study. Accident Analysis & Prevention, 2018, 115: 202−208

[124] Mayer R C, Davis J H. The effect of the performance appraisal system on trust for management: A field quasi-experiment. Journal of Applied Psychology, 1999, 84(1): 123−136 doi: 10.1037/0021-9010.84.1.123

[125] Madsen M, Gregor S. Measuring human-computer trust. In: Proceedings of the 11th Australasian Conference on Information Systems. Brisbane, Australia: Australasian Association for Information Systems, 2000. 6−8

[126] Chien S Y, Semnani-Azad Z, Lewis M, Sycara K. Towards the development of an inter-cultural scale to measure trust in automation. In: Proceedings of the 6th International Conference on Cross-cultural Design. Heraklion, Greece: Springer, 2014. 35−36

[127] Garcia D, Kreutzer C, Badillo-Urquiola K, Mouloua M. Measuring trust of autonomous vehicles: A development and validation study. In: Proceedings of the 2015 International Conference on Human-Computer Interaction. Los Angeles, USA: Springer, 2015. 610−615

[128] Yagoda R E, Gillan D J. You want me to trust a ROBOT? The development of a human-robot interaction trust scale. International Journal of Social Robotics, 2012, 4(3): 235−248 doi: 10.1007/s12369-012-0144-0

[129] Dixon S R, Wickens C D. Automation reliability in unmanned aerial vehicle control: A reliance-compliance model of automation dependence in high workload. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2006, 48(3): 474−486 doi: 10.1518/001872006778606822

[130] Chiou E, Lee J D. Beyond reliance and compliance: Human-automation coordination and cooperation. In: Proceedings of the 59th International Annual Meeting of the Human Factors and Ergonomics Society. Los Angeles, USA: SAGE, 2015. 159−199

[131] Bindewald J M, Rusnock C F, Miller M E. Measuring human trust behavior in human-machine teams. In: Proceedings of the AHFE 2017 International Conference on Applied Human Factors in Simulation and Modeling. Los Angeles, USA: Springer, 2017. 47−58

[132] Chancey E T, Bliss J P, Yamani Y, Handley H A H. Trust and the compliance-reliance paradigm: The effects of risk, error bias, and reliability on trust and dependence. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2017, 59(3): 333−345 doi: 10.1177/0018720816682648

[133] Gremillion G M, Metcalfe J S, Marathe A R, Paul V J, Christensen J, Drnec K, et al. Analysis of trust in autonomy for convoy operations. In: Proceedings of SPIE 9836, Micro- and Nanotechnology Sensors, Systems, and Applications VIII. Washington, USA: SPIE, 2016. 9836−9838

[134] Basu C, Singhal M. Trust dynamics in human autonomous vehicle interaction: A review of trust models. In: Proceedings of the 2016 AAAI Spring Symposium Series. Palo Alto, USA: AAAI, 2016. 238−245

[135] Hester M, Lee K, Dyre B P. “Driver Take Over”: A preliminary exploration of driver trust and performance in autonomous vehicles. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2017, 61(1): 1969−1973 doi: 10.1177/1541931213601971

[136] De Visser E J, Monfort S S, Goodyear K, Lu L, O’Hara M, Lee M R, et al. A little anthropomorphism goes a long way: Effects of oxytocin on trust, compliance, and team performance with automated agents. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2017, 59(1): 116−133 doi: 10.1177/0018720816687205

[137] Gaudiello I, Zibetti E, Lefort S, Chetouani M, Ivaldi S. Trust as indicator of robot functional and social acceptance. An experimental study on user conformation to iCub answers. Computers in Human Behavior, 2016, 61: 633−655 doi: 10.1016/j.chb.2016.03.057

[138] Desai M, Kaniarasu P, Medvedev M, Steinfeld A, Yanco H. Impact of robot failures and feedback on real-time trust. In: Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI). Tokyo, Japan: IEEE, 2013. 251−258

[139] Payre W, Cestac J, Delhomme P. Fully automated driving: Impact of trust and practice on manual control recovery. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2016, 58(2): 229−241 doi: 10.1177/0018720815612319

[140] Hergeth S, Lorenz L, Vilimek R, Krems J F. Keep your scanners peeled: Gaze behavior as a measure of automation trust during highly automated driving. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2016, 58(3): 509−519 doi: 10.1177/0018720815625744

[141] Khalid H M, Shiung L W, Nooralishahi P, Rasool Z, Helander M G, Kiong L C, Ai-Vyrn C. Exploring psycho-physiological correlates to trust: Implications for human-robot-human interaction. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2016, 60(1): 697−701 doi: 10.1177/1541931213601160

[142] Gold C, Körber M, Hohenberger C, Lechner D, Bengler K. Trust in automation-Before and after the experience of take-over scenarios in a highly automated vehicle. Procedia Manufacturing, 2015, 3: 3025−3032 doi: 10.1016/j.promfg.2015.07.847

[143] Walker F, Verwey W B, Martens M. Gaze behaviour as a measure of trust in automated vehicles. In: Proceedings of the 6th Humanist Conference. Washington, USA: HUMANIST, 2018. 117−123

[144] Adolphs R. Trust in the brain. Nature Neuroscience, 2002, 5(3): 192−193 doi: 10.1038/nn0302-192

[145] Delgado M R, Frank R H, Phelps E A. Perceptions of moral character modulate the neural systems of reward during the trust game. Nature Neuroscience, 2005, 8(11): 1611−1618 doi: 10.1038/nn1575

[146] King-Casas B, Tomlin D, Anen C, Camerer C F, Quartz S R, Montague P R. Getting to know you: Reputation and trust in a two-person economic exchange. Science, 2005, 308(5718): 78−83 doi: 10.1126/science.1108062

[147] Krueger F, McCabe K, Moll J, Kriegeskorte N, Zahn R, Strenziok M, et al. Neural correlates of trust. Proceedings of the National Academy of Sciences of the United States of America, 2007, 104(50): 20084−20089 doi: 10.1073/pnas.0710103104

[148] Long Y, Jiang X, Zhou X. To believe or not to believe: Trust choice modulates brain responses in outcome evaluation. Neuroscience, 2012, 200: 50−58 doi: 10.1016/j.neuroscience.2011.10.035

[149] Minguillon J, Lopez-Gordo M A, Pelayo F. Trends in EEG-BCI for daily-life: Requirements for artifact removal. Biomedical Signal Processing and Control, 2017, 31: 407−418 doi: 10.1016/j.bspc.2016.09.005

[150] Choo S, Sanders N, Kim N, Kim W, Nam C S, Fitts E P. Detecting human trust calibration in automation: A deep learning approach. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2019, 63(1): 88−90 doi: 10.1177/1071181319631298

[151] Morris D M, Erno J M, Pilcher J J. Electrodermal response and automation trust during simulated self-driving car use. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2017, 61(1): 1759−1762 doi: 10.1177/1541931213601921

[152] Drnec K, Marathe A R, Lukos J R, Metcalfe J S.
From trust in automation to decision neuroscience: Applying cognitive neuroscience methods to understand and improve interaction decisions involved in human automation interaction. Frontiers in Human Neuroscience, 2016, 10: Article No. 290 [153] 刘伟. 人机融合智能的现状与展望. 国家治理, 2019, (4): 7−15Liu Wei. Current situation and prospect of human-computer fusion intelligence. Governance, 2019, (4): 7−15 [154] 许为. 五论以用户为中心的设计: 从自动化到智能时代的自主化以及自动驾驶车. 应用心理学, 2020, 26(2): 108−128 doi: 10.3969/j.issn.1006-6020.2020.02.002Xu Wei. User-centered design (V): From automation to the autonomy and autonomous vehicles in the intelligence era. Chinese Journal of Applied Psychology, 2020, 26(2): 108−128 doi: 10.3969/j.issn.1006-6020.2020.02.002 [155] 王新野, 李苑, 常明, 游旭群. 自动化信任和依赖对航空安全的危害及其改进. 心理科学进展, 2017, 25(9): 1614−1622 doi: 10.3724/SP.J.1042.2017.01614Wang Xin-Ye, Li Yuan, Chang Ming, You Xu-Qun. The detriments and improvement of automation trust and dependence to aviation safety. Advances in Psychological Science, 2017, 25(9): 1614−1622 doi: 10.3724/SP.J.1042.2017.01614 [156] 曹清龙. 自动化信任和依赖对航空安全的危害及其改进分析. 技术与市场, 2018, 25(4): 160 doi: 10.3969/j.issn.1006-8554.2018.04.082Cao Qing-Long. Analysis of the detriments and improvement of automation trust and dependence to aviation safety. Technology and Market, 2018, 25(4): 160 doi: 10.3969/j.issn.1006-8554.2018.04.082 [157] Adams B D, Webb R D G. Trust in small military teams. In: Proceedings of the 7th International Command and Control Technology Symposium. Virginia, USA: DTIC, 2002. 1−20 [158] Beggiato M, Pereira M, Petzoldt T, Krems J. Learning and development of trust, acceptance and the mental model of ACC. A longitudinal on-road study. Transportation Research Part F: Traffic Psychology and Behaviour, 2015, 35: 75−84 doi: 10.1016/j.trf.2015.10.005 [159] Reimer B. Driver assistance systems and the transition to automated vehicles: A path to increase older adult safety and mobility? 
Public Policy & Aging Report, 2014, 24(1): 27−31 [160] Large D R, Burnett G E. The effect of different navigation voices on trust and attention while using in-vehicle navigation systems. Journal of Safety Research, 2014, 49: 69.e1−75 doi: 10.1016/j.jsr.2014.02.009 [161] Zhang T R, Tao D, Qu X D, Zhang X Y, Zeng J H, Zhu H Y, et al. Automated vehicle acceptance in China: Social influence and initial trust are key determinants. Transportation Research Part C: Emerging Technologies, 2020, 112: 220−233 doi: 10.1016/j.trc.2020.01.027 [162] Choi J K, Ji Y G. Investigating the importance of trust on adopting an autonomous vehicle. International Journal of Human-Computer Interaction, 2015, 31(10): 692−702 doi: 10.1080/10447318.2015.1070549 [163] Kaur K, Rampersad G. Trust in driverless cars: Investigating key factors influencing the adoption of driverless cars. Journal of Engineering and Technology Management, 2018, 48: 87−96 doi: 10.1016/j.jengtecman.2018.04.006 [164] Lee J D, Kolodge K. Exploring trust in self-driving vehicles through text analysis. Human Factors: The Journal of the Human Factors and Ergonomics Society, 2020, 62(2): 260−277 doi: 10.1177/0018720819872672 -