WCGAN-based Illumination-invariant Color Measuring of Mineral Flotation Froth Images


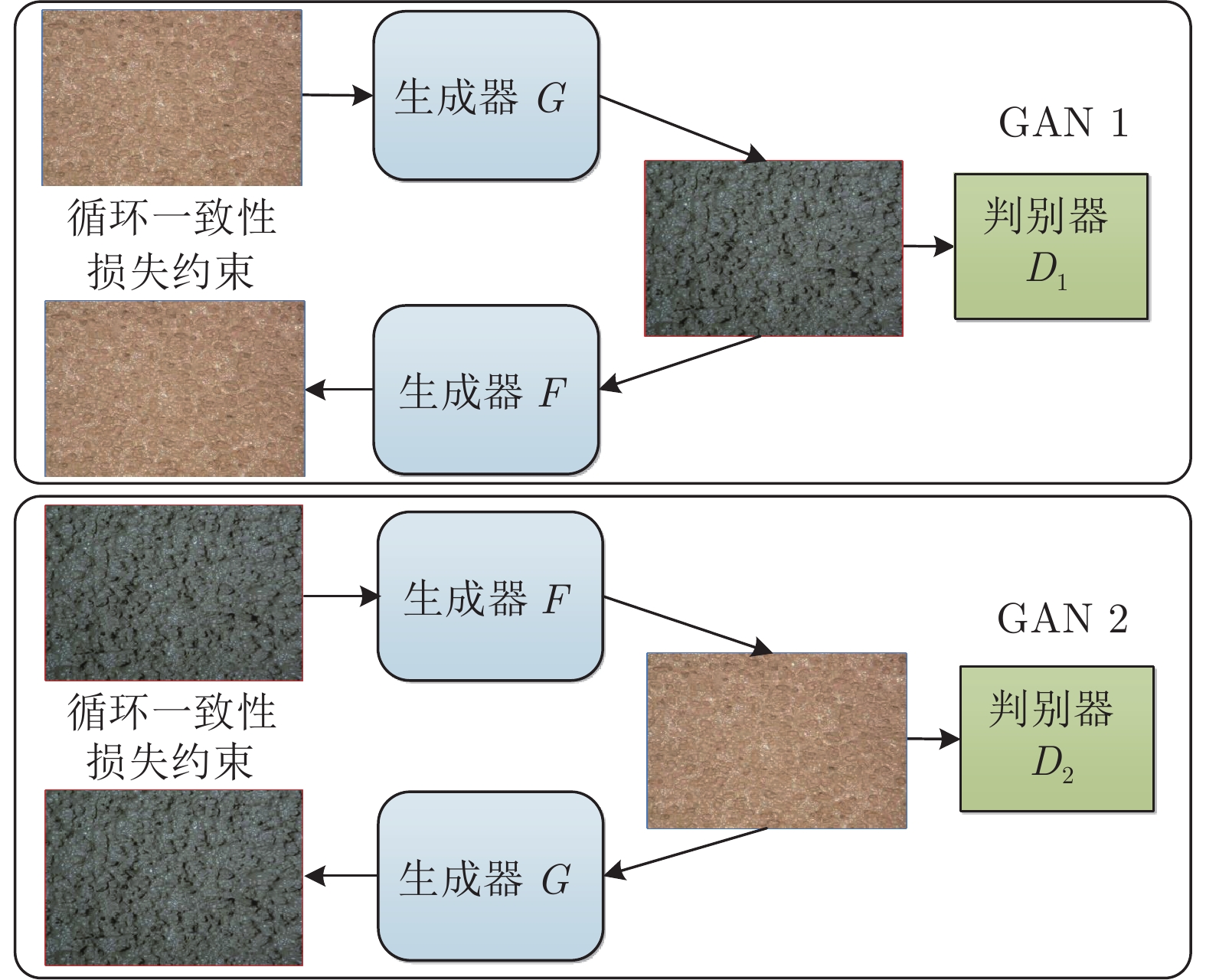

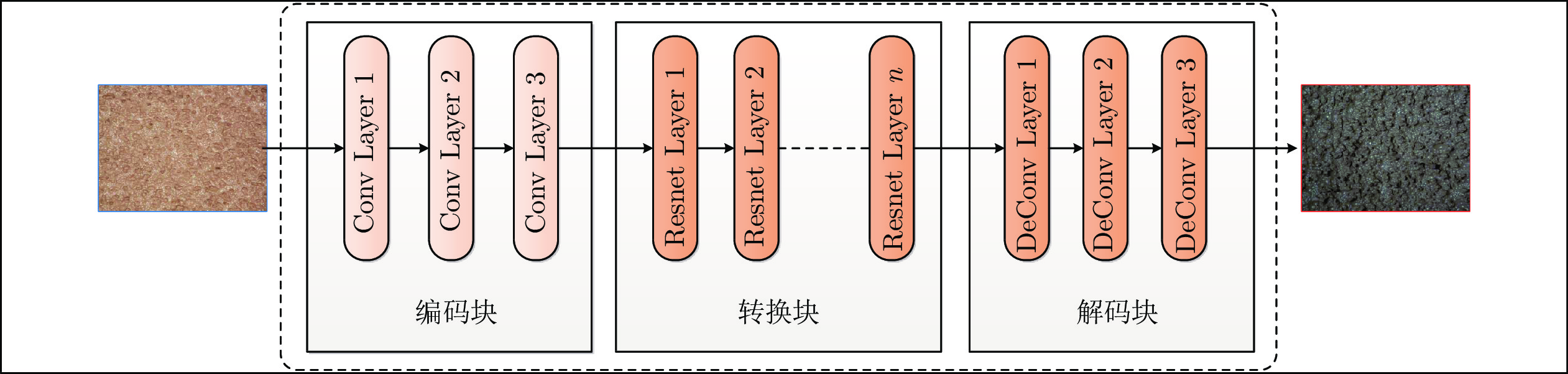

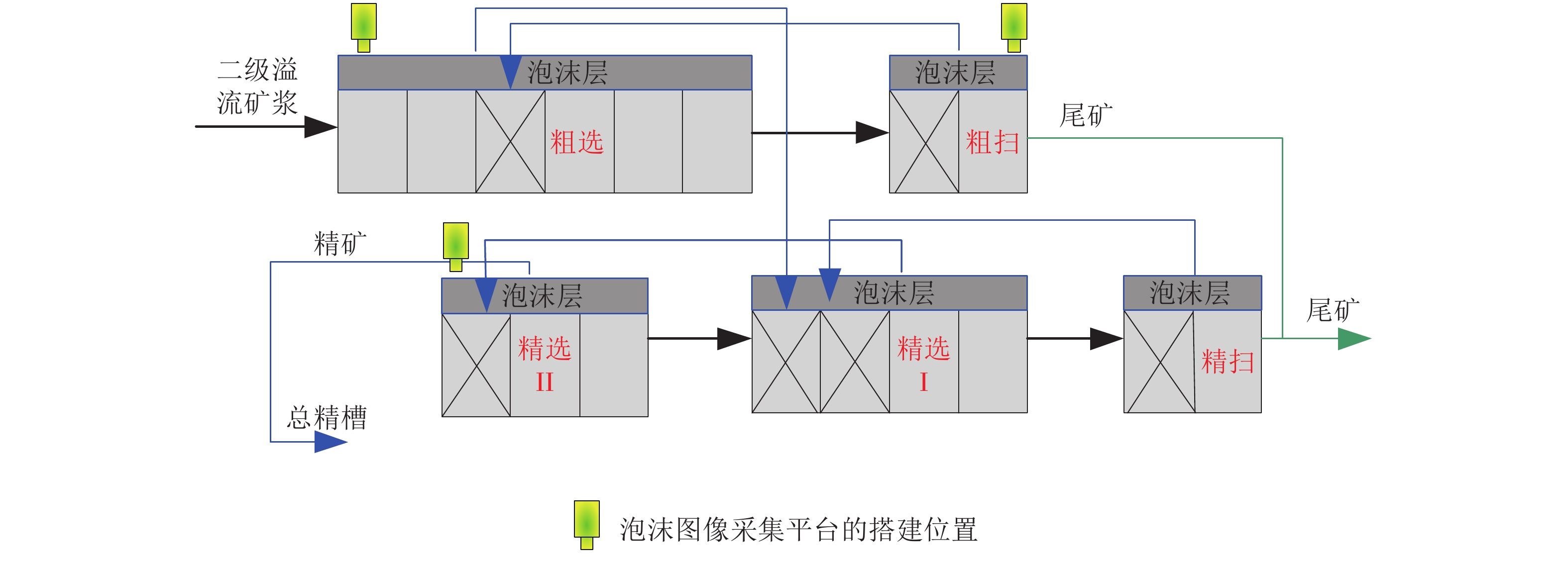

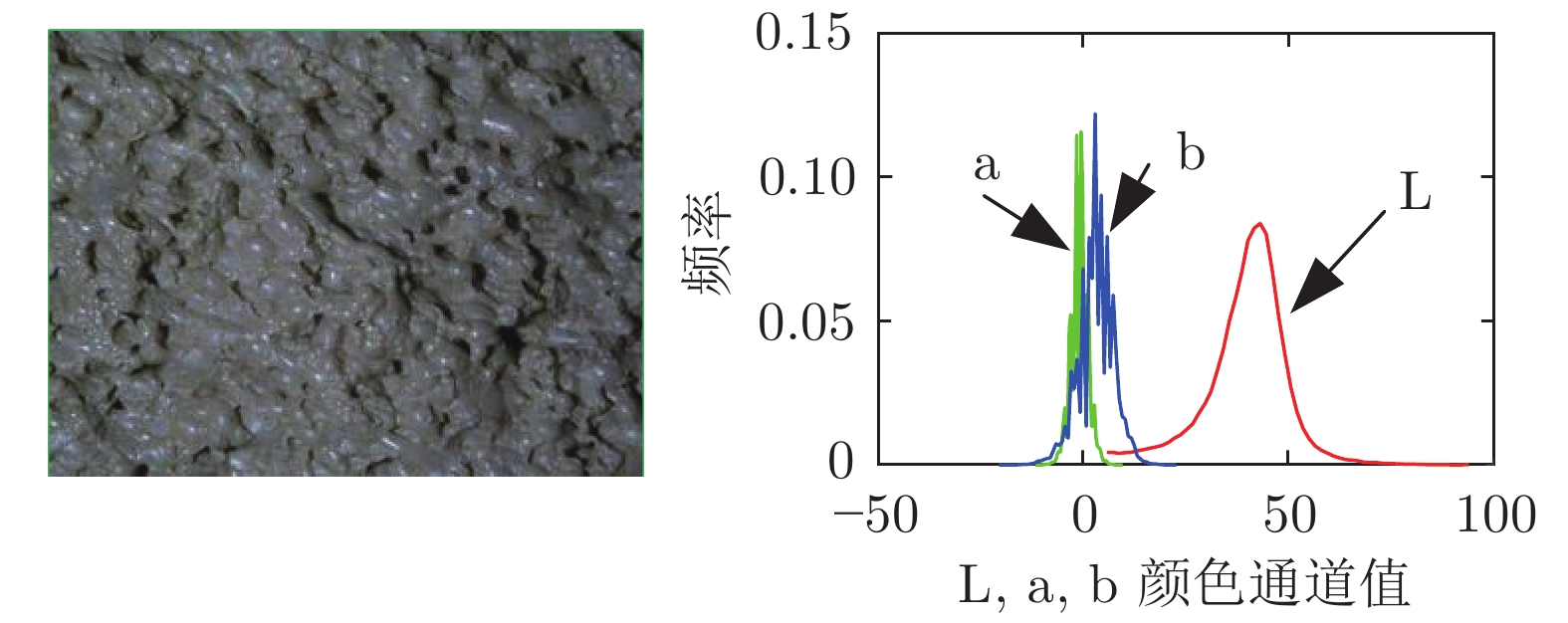

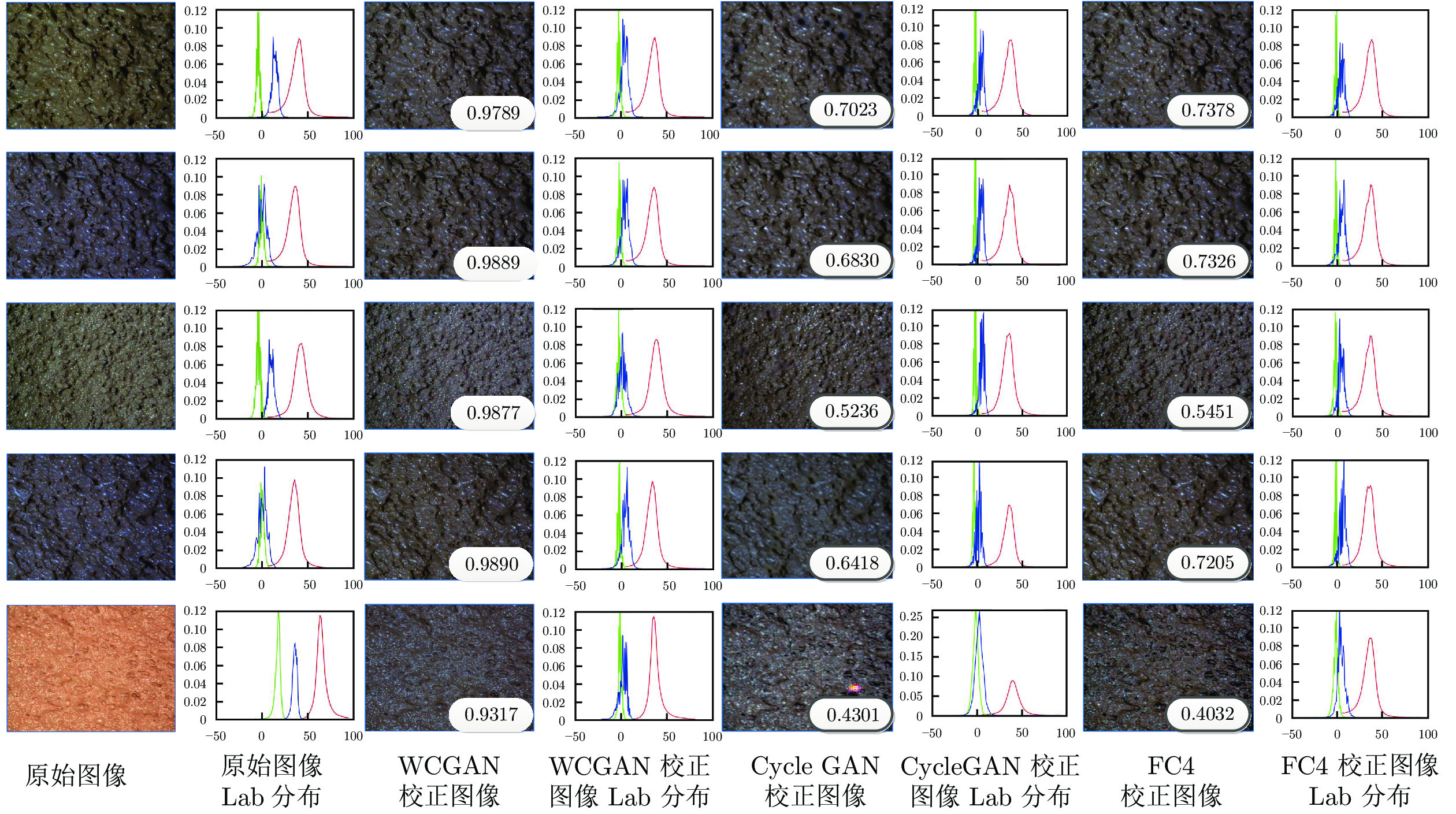

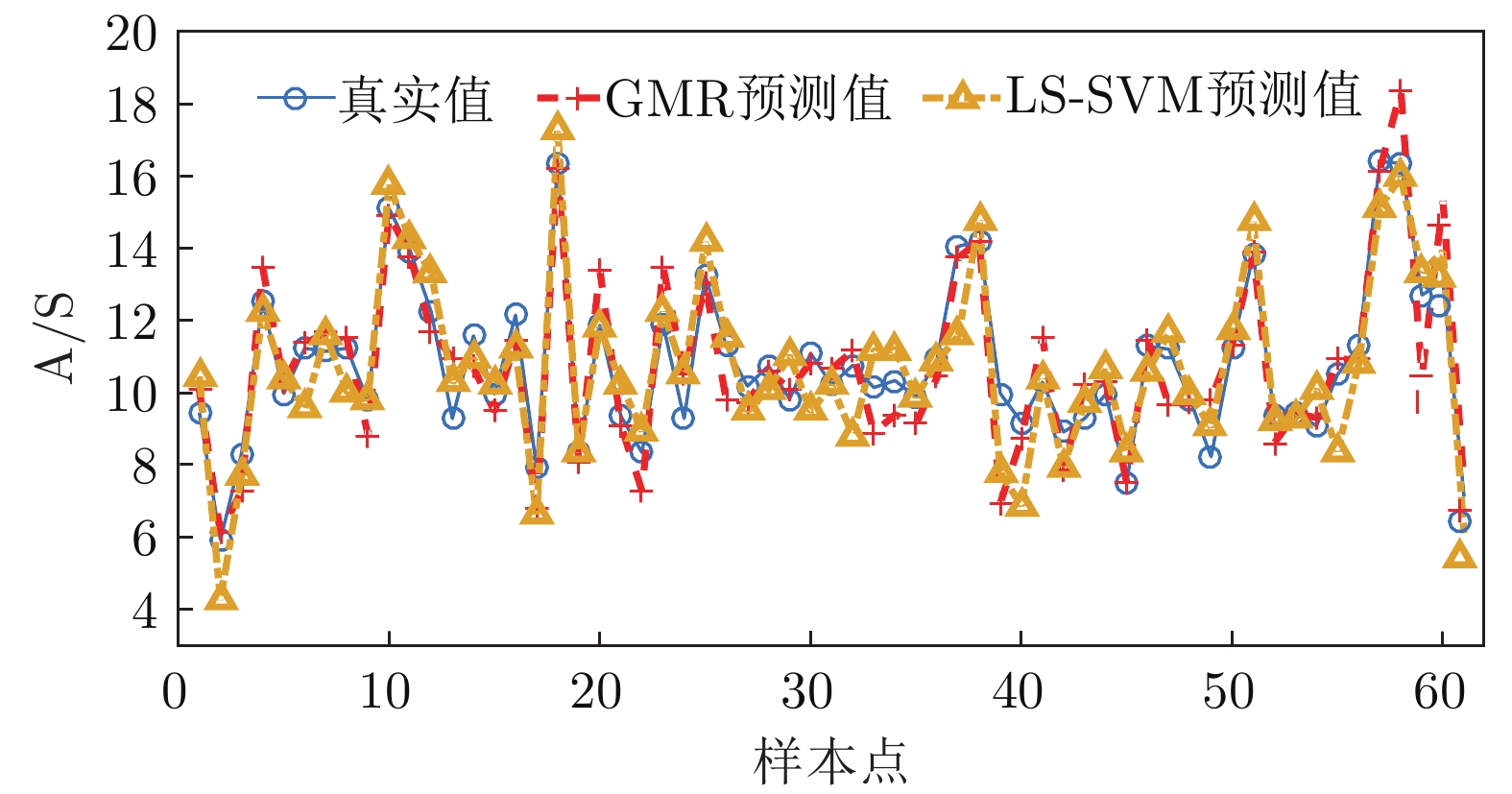

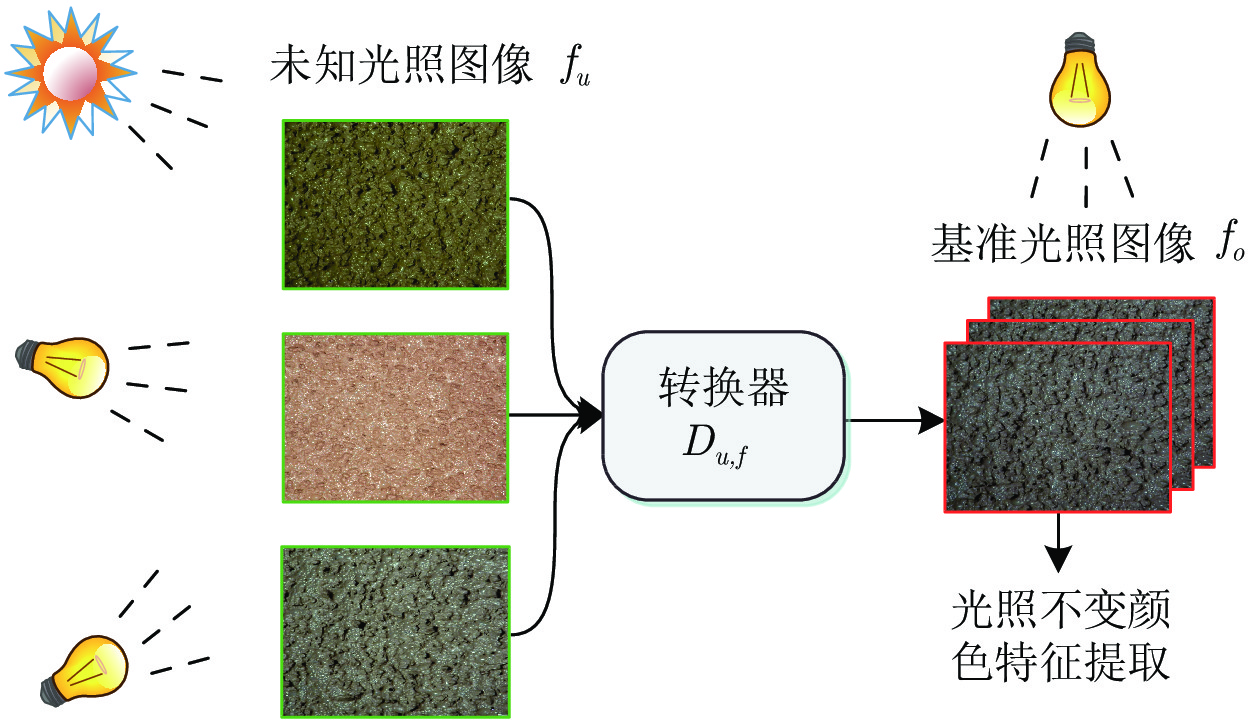

Abstract: The surface color of flotation froth is the fastest and most convenient direct indicator of production indices (e.g., concentrate grade) in mineral flotation. However, froth images inevitably suffer from severe color casts caused by the cross-interference of multiple varying light sources, which makes accurate evaluation of flotation indices difficult. This paper recasts the traditional illumination-estimation-based color constancy problem as a structure-preserving image-to-image color (style) translation problem, and proposes a Wasserstein distance-based cycle generative adversarial network (WCGAN) for online monitoring of illumination-invariant froth color features. Validation and comparative experiments on benchmark color constancy datasets and an industrial bauxite flotation process show that WCGAN effectively translates color-cast images captured under various unknown illuminations to a canonical illumination, with fast translation and support for online model updating. Compared with traditional color translation models based on generative adversarial learning, WCGAN better preserves the structural information of froth images, such as contours and surface texture, and thus provides effective, objective evaluation information for machine-vision-based online monitoring of production indices in mineral flotation.

Keywords:

- Flotation froth image

- Cycle generative adversarial network

- Illumination-invariant color feature

- Wasserstein distance

- Structure preservation
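The abstract names two ingredients, a Wasserstein-distance adversarial objective and a cycle-consistent translation structure, without giving the loss functions in this section. The following is a minimal sketch of how the generic WGAN and CycleGAN losses are usually combined; it is not the authors' exact formulation. The function names, the flat lists of floats standing in for critic scores and pixel values, and the default `cycle_weight` are all assumptions made for illustration, and the Lipschitz constraint on the critic (weight clipping or a gradient penalty) is omitted.

```python
from statistics import fmean


def critic_loss(real_scores, fake_scores):
    """Wasserstein critic loss: E[D(fake)] - E[D(real)] (the critic minimizes this)."""
    return fmean(fake_scores) - fmean(real_scores)


def generator_adversarial_loss(fake_scores):
    """Generator side of the Wasserstein objective: -E[D(G(x))]."""
    return -fmean(fake_scores)


def cycle_consistency_loss(original, reconstructed):
    """Mean absolute difference between an image and its round-trip reconstruction."""
    return fmean([abs(o - r) for o, r in zip(original, reconstructed)])


def generator_objective(fake_scores, original, reconstructed, cycle_weight=10.0):
    """Adversarial term plus weighted cycle term; cycle_weight is an assumed value."""
    return (generator_adversarial_loss(fake_scores)
            + cycle_weight * cycle_consistency_loss(original, reconstructed))
```

In this sketch the cycle term is what penalizes a translated image for failing to map back to its source, which is one plausible mechanism behind the structure preservation the abstract claims.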

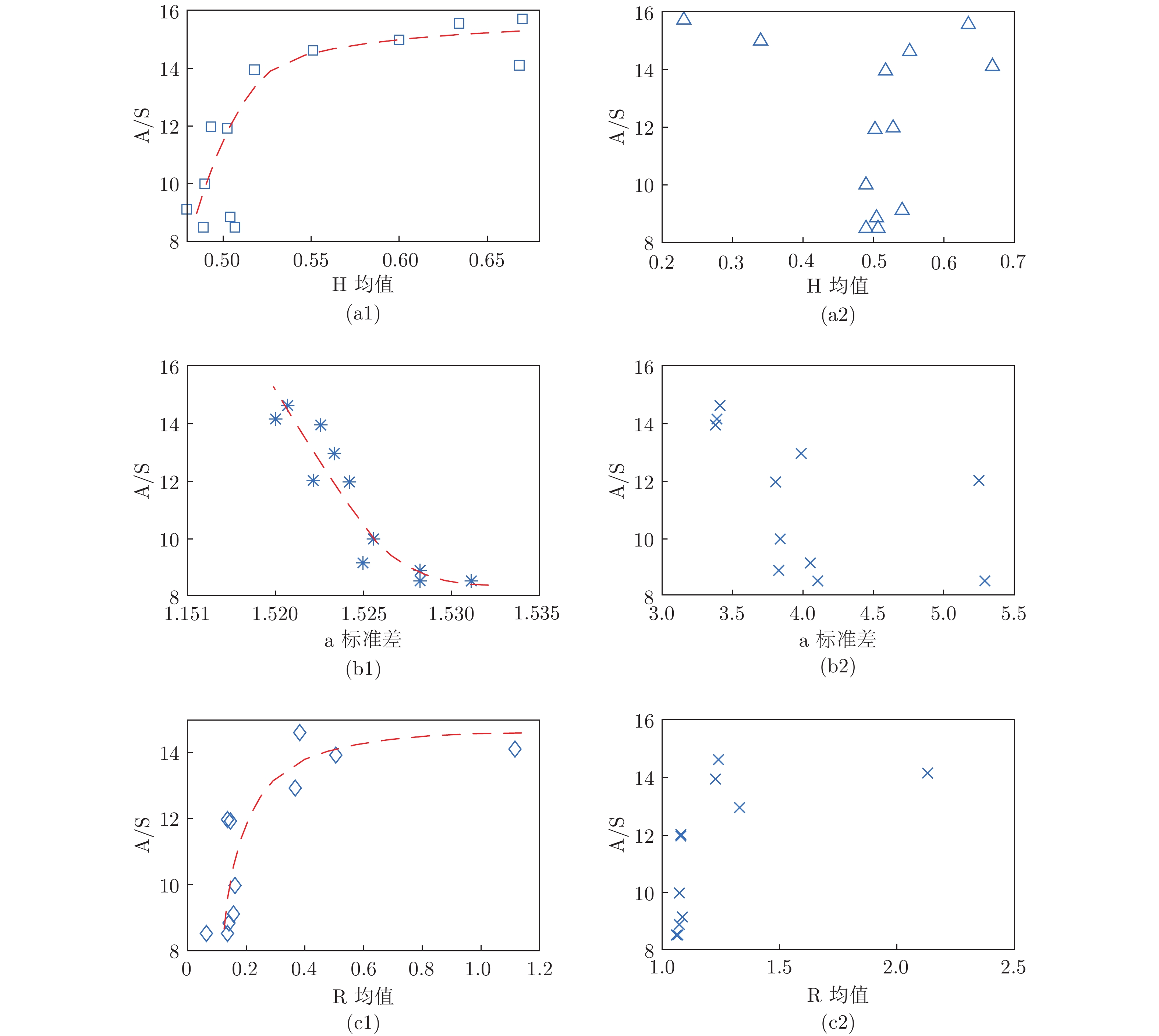

Fig. 8 Correlation between froth image color features and A/S. (a1) and (a2): H-channel mean versus A/S after and before correction, respectively; (b1) and (b2): standard deviation of the a channel versus A/S after and before correction, respectively; (c1) and (c2): normalized R-channel mean versus A/S after and before correction, respectively.
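To make the quantities in Fig. 8 concrete, here is a rough sketch of how two of the listed color features and their correlation with a process index could be computed. It assumes pixels are `(r, g, b)` tuples scaled to the range 0 to 1, takes a plain arithmetic mean of hue (ignoring that hue is circular), and leaves out the a-channel statistic because it needs an RGB-to-Lab conversion. The function names are hypothetical and the paper's actual feature extraction may differ.

```python
import colorsys
import math


def hue_mean(pixels):
    """Arithmetic mean of HSV hue (0..1) over (r, g, b) pixels scaled to 0..1."""
    hues = [colorsys.rgb_to_hsv(r, g, b)[0] for r, g, b in pixels]
    return sum(hues) / len(hues)


def normalized_r_mean(pixels):
    """Mean of r / (r + g + b), skipping pure-black pixels to avoid dividing by zero."""
    ratios = [r / (r + g + b) for r, g, b in pixels if (r + g + b) > 0]
    return sum(ratios) / len(ratios)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)
```

A feature would be computed once per froth image, and `pearson` would then be applied to the per-image feature values and the matching A/S measurements, once for corrected and once for uncorrected images.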

Table 1 Comparison of statistics-based color constancy methods on the Gehler-Shi 568 dataset

Table 2 Comparison of machine learning-based color constancy methods on the Gehler-Shi 568 dataset

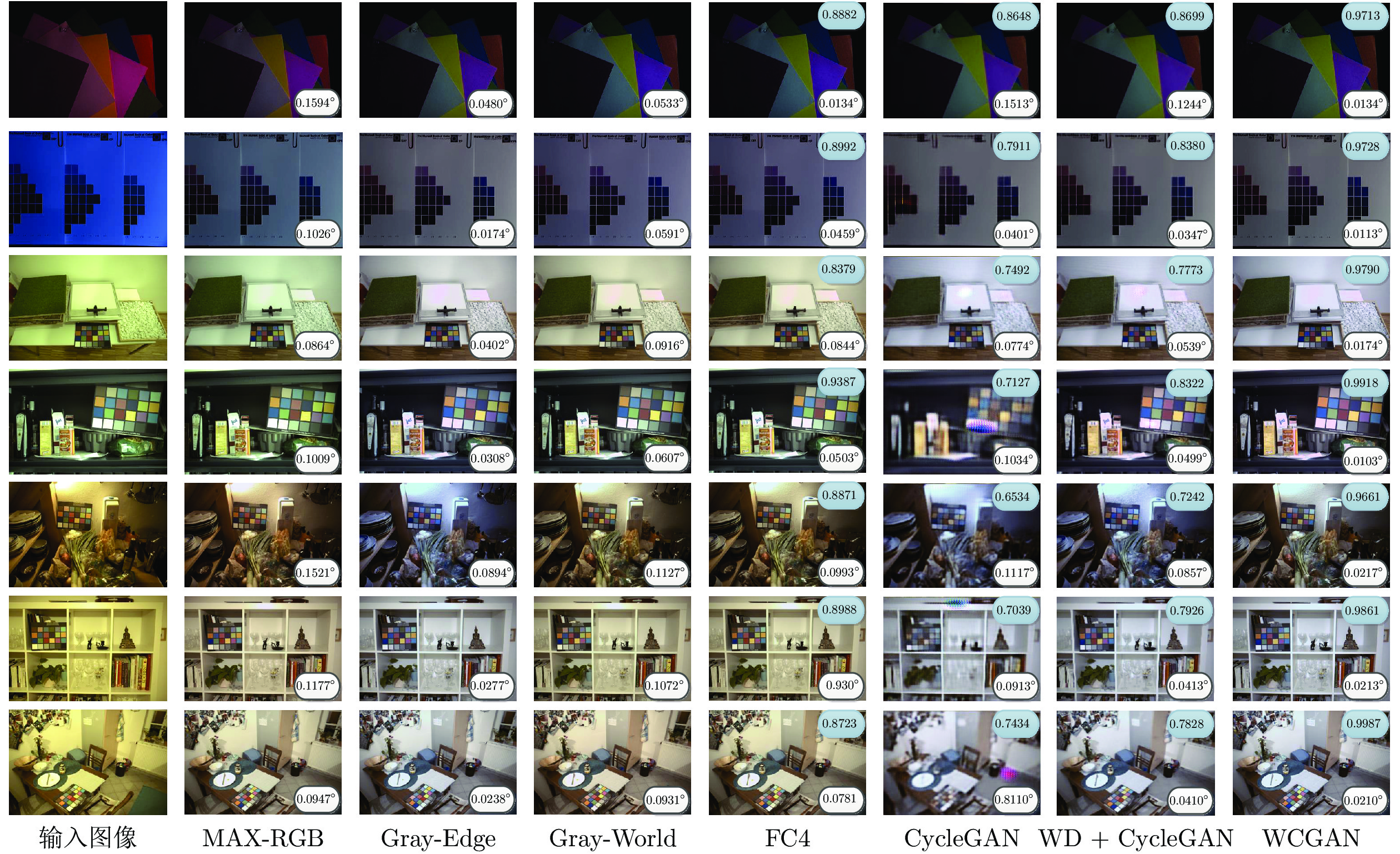

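| Method | SSIM | Chromaticity error (Median) | Chromaticity error (Max) | Chromaticity error (RMS) | Angular error (Mean) | Angular error (Max) | Angular error (RMS) | Training time (s) | Test time (s) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC4[13] | 0.8576 | 0.57 | 1.39 | 0.65 | 4.7 | 11.3 | 5.6 | 1.7 | 0.9 |
| Neural Gray[33] | 0.9166 | 0.69 | 1.92 | 0.77 | 5.7 | 13.4 | 6.5 | 1.4 | 0.5 |
| Based-SVR[34] | 0.8945 | 0.61 | 1.88 | 0.70 | 5.4 | 12.6 | 6.3 | 1.6 | 1.2 |
| CycleGAN[35] | 0.6918 | 0.98 | 3.11 | 1.07 | 6.3 | 16.5 | 7.4 | 3.0 | 0.12 |
| WD + CycleGAN | 0.8399 | 0.76 | 1.84 | 0.69 | 5.1 | 14.3 | 5.9 | 3.0 | 0.12 |
| WCGAN | 0.9897 | 0.42 | 1.31 | 0.50 | 4.3 | 10.5 | 5.4 | 1.5 | 0.06 |

Table 3 Comparison of statistics-based color constancy methods on the SFU 321 lab images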

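Table 4 Comparison of machine learning-based color constancy methods on the SFU 321 lab images

| Method | SSIM | Chromaticity error (Median) | Chromaticity error (Max) | Chromaticity error (RMS) | Angular error (Mean) | Angular error (Max) | Angular error (RMS) | Training time (s) | Test time (s) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FC4[13] | 0.8791 | 0.61 | 1.45 | 0.69 | 5.2 | 9.0 | 6.0 | 1.1 | 0.7 |
| Neural Gray[33] | 0.9286 | 0.71 | 1.87 | 0.80 | 6.4 | 12.1 | 7.3 | 0.9 | 0.4 |
| Based-SVR[34] | 0.9139 | 0.63 | 1.84 | 0.72 | 5.8 | 12.1 | 6.5 | 1.3 | 0.9 |
| CycleGAN[35] | 0.7347 | 0.84 | 2.11 | 0.92 | 6.2 | 15.7 | 7.9 | 2.7 | 0.09 |
| WD + CycleGAN | 0.9145 | 0.70 | 1.75 | 0.66 | 4.7 | 13.9 | 6.9 | 2.7 | 0.09 |
| WCGAN | 0.9936 | 0.39 | 1.28 | 0.45 | 3.1 | 12.2 | 4.1 | 1.2 | 0.05 |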
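The angular error columns in the tables above are a standard color constancy metric: the angle between an estimated color vector and its reference. This section does not say whether the paper evaluates it on illuminant vectors or on corrected pixel colors, so the sketch below only shows the generic definition and the Mean/Max/RMS summaries used as column headers. The function names are hypothetical.

```python
import math


def angular_error_deg(estimated, reference):
    """Angle in degrees between two RGB vectors (independent of their magnitudes)."""
    dot = sum(e * r for e, r in zip(estimated, reference))
    norm_e = math.sqrt(sum(e * e for e in estimated))
    norm_r = math.sqrt(sum(r * r for r in reference))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_e * norm_r)))
    return math.degrees(math.acos(cos_angle))


def summarize_errors(errors):
    """Mean, maximum, and root-mean-square of a list of per-image errors."""
    n = len(errors)
    return {
        "mean": sum(errors) / n,
        "max": max(errors),
        "rms": math.sqrt(sum(e * e for e in errors) / n),
    }
```

Because the metric depends only on direction, scaling either vector (a global brightness change) leaves the error unchanged, which is why it is commonly preferred over a plain Euclidean distance for comparing illuminant colors.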

[1] Szczerkowska S, Wiertel-Pochopien A, Zawala J, Larsen E, Kowalczuk P B. Kinetics of froth flotation of naturally hydrophobic solids with different shapes. Minerals Engineering, 2018, 121: 90-99. doi: 10.1016/j.mineng.2018.03.006

[2] Jiang Yi, Fan Jia-Lu, Jia Yao, Chai Tian-You. Data-driven flotation process operational feedback decoupling control. Acta Automatica Sinica, 2019, 45(4): 759-770. doi: 10.16383/j.aas.2018.c170552

[3] Gui Wei-Hua, Yang Chun-Hua, Xu De-Gang, Lu Ming, Xie Yong-Fang. Machine-vision-based online measuring and controlling technologies for mineral flotation: a review. Acta Automatica Sinica, 2013, 39(11): 1879-1888. doi: 10.3724/SP.J.1004.2013.01879

[4] Jahedsaravani A, Massinaei M, Marhaban M H. Development of a machine vision system for real-time monitoring and control of batch flotation process. International Journal of Mineral Processing, 2017, 167: 16-26. doi: 10.1016/j.minpro.2017.07.011

[5] Popli K, Maries V, Afacan A, Liu Q, Prasad V. Development of a vision-based online soft sensor for oil sands flotation using support vector regression and its application in the dynamic monitoring of bitumen extraction. The Canadian Journal of Chemical Engineering, 2018, 96(7): 1532-1540. doi: 10.1002/cjce.23164

[6] Xie Y F, Wu J, Xu D G, Yang C H, Gui W H. Reagent addition control for stibium rougher flotation based on sensitive froth image features. IEEE Transactions on Industrial Electronics, 2017, 64(5): 4199-4206. doi: 10.1109/TIE.2016.2613499

[7] Liu J P, Zhou J M, Tang Z H, Gui W H, Xie Y F, He J Z, et al. Toward flotation process operation-state identification via statistical modeling of biologically inspired Gabor filtering responses. IEEE Transactions on Cybernetics, 2020, 50(10): 4242-4255. doi: 10.1109/TCYB.2019.2909763

[8] Reddick J F, Hesketh A H, Morar S H, Bradshaw D J. An evaluation of factors affecting the robustness of colour measurement and its potential to predict the grade of flotation concentrate. Minerals Engineering, 2009, 22(1): 64-69. doi: 10.1016/j.mineng.2008.03.018

[9] Gijsenij A, Gevers T, Weijer J V D. Computational color constancy: Survey and experiments. IEEE Transactions on Image Processing, 2011, 20(9): 2475-2489. doi: 10.1109/TIP.2011.2118224

[10] Oh S W, Kim S J. Approaching the computational color constancy as a classification problem through deep learning. Pattern Recognition, 2017, 61: 405-416. doi: 10.1016/j.patcog.2016.08.013

[11] Gatta C, Farup I. Gamut mapping in RGB colour spaces with the iterative ratios diffusion algorithm. In: Proceedings of the 2017 IS&T International Symposium on Electronic Imaging: Color Imaging XXII: Displaying, Processing, Hardcopy, and Applications. Burlingame, USA: Ingenta, 2017. 12-20

[12] Bianco S, Cusano C, Schettini R. Single and multiple illuminant estimation using convolutional neural networks. IEEE Transactions on Image Processing, 2017, 26(9): 4347-4362. doi: 10.1109/TIP.2017.2713044

[13] Hu Y M, Wang B Y, Lin S. FC4: Fully convolutional color constancy with confidence-weighted pooling. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 330-339

[14] Wang C, Gao R, Wei W, Shafie-khah M, Bi T S, Catalão J P S. Risk-based distributionally robust optimal gas-power flow with Wasserstein distance. IEEE Transactions on Power Systems, 2019, 34(3): 2190-2204. doi: 10.1109/TPWRS.2018.2889942

[15] Zhao Hong-Wei, Xie Yong-Fang, Jiang Zhao-Hui, Xu De-Gang, Yang Chun-Hua, Gui Wei-Hua. An intelligent optimal setting approach based on froth features for level of flotation cells. Acta Automatica Sinica, 2014, 40(6): 1086-1097

[16] Goodfellow I J, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial nets. In: Proceedings of the 27th International Conference on Neural Information Processing Systems. Montreal, Canada: MIT Press, 2014. 2672-2680

[17] Isola P, Zhu J Y, Zhou T H, Efros A A. Image-to-image translation with conditional adversarial networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 5967-5976

[18] Kim T, Cha M, Kim H, Lee J K, Kim J. Learning to discover cross-domain relations with generative adversarial networks. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: JMLR.org, 2017. 1857-1865

[19] Arjovsky M, Chintala S, Bottou L. Wasserstein generative adversarial networks. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: JMLR.org, 2017. 214-223

[20] Nowozin S, Cseke B, Tomioka R. f-GAN: Training generative neural samplers using variational divergence minimization. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: Curran Associates Inc., 2016. 271-279

[21] Arjovsky M, Bottou L. Towards principled methods for training generative adversarial networks. In: Proceedings of the 5th International Conference on Learning Representations (ICLR). Toulon, France: OpenReview.net, 2017. 1-17

[22] Yao Nai-Ming, Guo Qing-Pei, Qiao Feng-Chun, Chen Hui, Wang Hong-An. Robust facial expression recognition with generative adversarial networks. Acta Automatica Sinica, 2018, 44(5): 865-877. doi: 10.16383/j.aas.2018.c170477

[23] Mukkamala M C, Hein M. Variants of RMSProp and Adagrad with logarithmic regret bounds. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: JMLR.org, 2017. 2545-2553

[24] Sultana N N, Mandal B, Puhan N B. Deep residual network with regularised fisher framework for detection of melanoma. IET Computer Vision, 2018, 12(8): 1096-1104. doi: 10.1049/iet-cvi.2018.5238

[25] Chen G, Chacón L, Barnes D C. An efficient mixed-precision, hybrid CPU–GPU implementation of a nonlinearly implicit one-dimensional particle-in-cell algorithm. Journal of Computational Physics, 2012, 231(16): 5374-5388. doi: 10.1016/j.jcp.2012.04.040

[26] Gehler P V, Rother C, Blake A, Minka T, Sharp T. Bayesian color constancy revisited. In: Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition. Anchorage, USA: IEEE, 2008. 1-8

[27] Barnard K, Martin L, Funt B, Coath A. A data set for color research. Color Research & Application, 2002, 27(3): 147-151

[28] Hussain A, Akbari A S. Color constancy algorithm for mixed-illuminant scene images. IEEE Access, 2018, 6: 8964-8976. doi: 10.1109/ACCESS.2018.2808502

[29] Sulistyo S B, Woo W L, Dlay S S. Regularized neural networks fusion and genetic algorithm based on-field nitrogen status estimation of wheat plants. IEEE Transactions on Industrial Informatics, 2017, 13(1): 103-114. doi: 10.1109/TII.2016.2628439

[30] Yoo J H, Kyung W J, Choi J S, Ha Y H. Color image enhancement using weighted multi-scale compensation based on the gray world assumption. Journal of Imaging Science and Technology, 2017, 61(3): Article No. 030507

[31] Joze H R V, Drew M S. White patch gamut mapping colour constancy. In: Proceedings of the 19th IEEE International Conference on Image Processing. Orlando, USA: IEEE, 2012. 801-804

[32] Wang Z, Bovik A C, Sheikh H R, Simoncelli E P. Image quality assessment: From error visibility to structural similarity. IEEE Transactions on Image Processing, 2004, 13(4): 600-612. doi: 10.1109/TIP.2003.819861

[33] Faghih M M, Moghaddam M E. Neural Gray: A color constancy technique using neural network. Color Research & Application, 2014, 39(6): 571-581

[34] Zhang J X, Zhang P, Wu X L, Zhou Z Y, Yang C. Illumination compensation in textile colour constancy, based on an improved least-squares support vector regression and an improved GM(1,1) model of grey theory. Coloration Technology, 2017, 133(2): 128-134. doi: 10.1111/cote.12243

[35] Zhu J Y, Park T, Isola P, Efros A A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV). Venice, Italy: IEEE, 2017. 2242-2251

[36] Yuan X F, Ge Z Q, Song Z H. Soft sensor model development in multiphase/multimode processes based on Gaussian mixture regression. Chemometrics and Intelligent Laboratory Systems, 2014, 138: 97-109. doi: 10.1016/j.chemolab.2014.07.013
