A Deep Residual-Feature Pyramid Network Framework for Scattered Point Cloud Semantic Segmentation


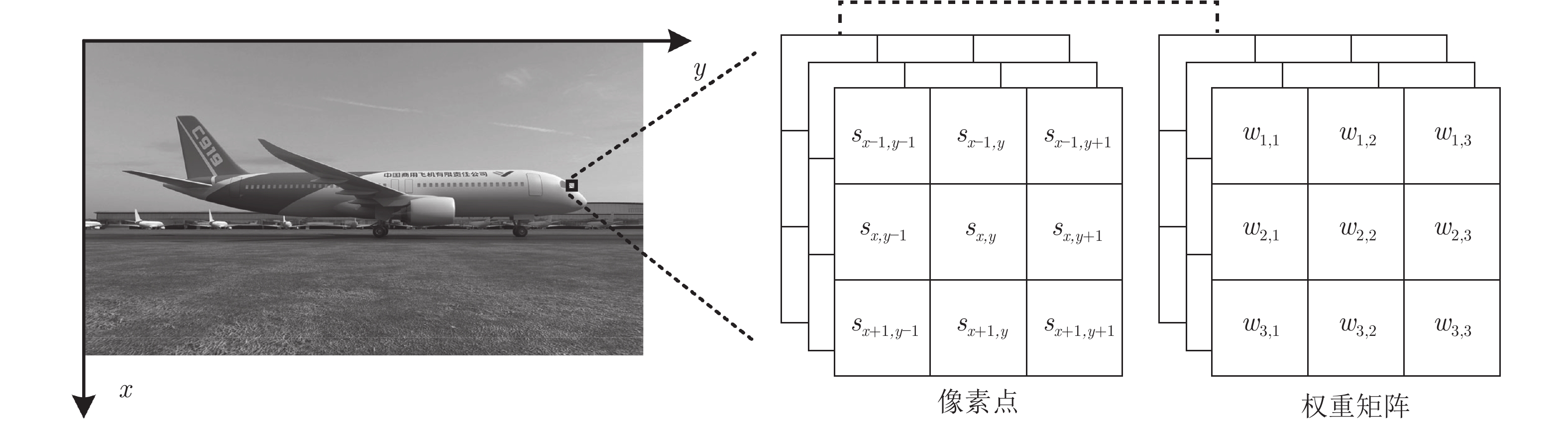

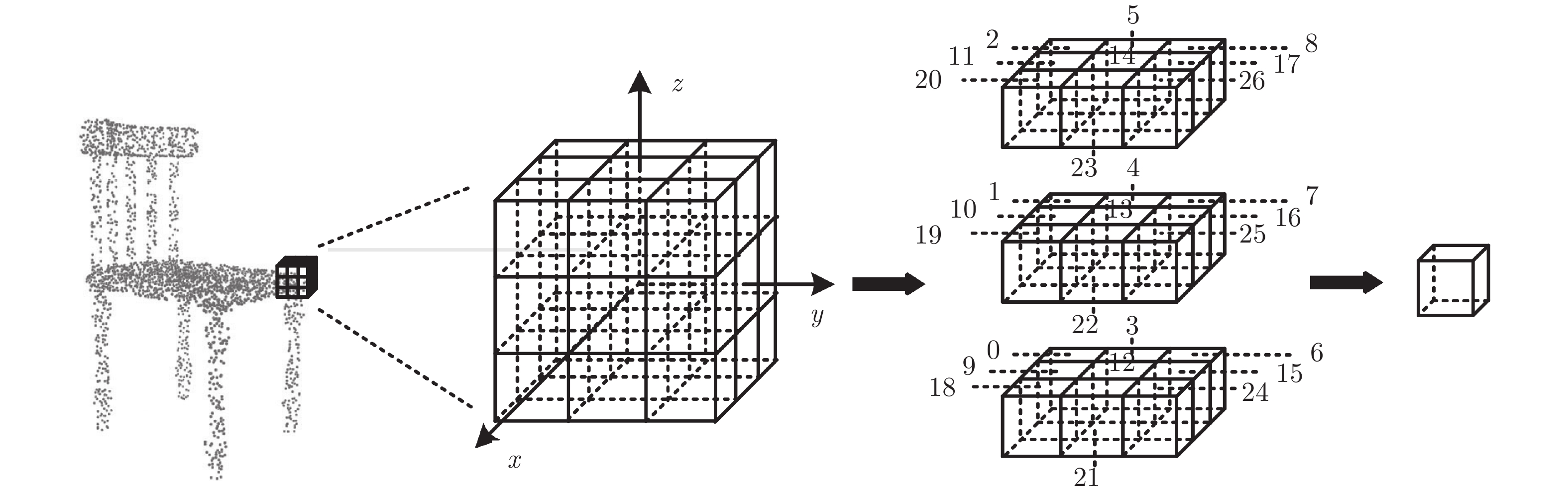

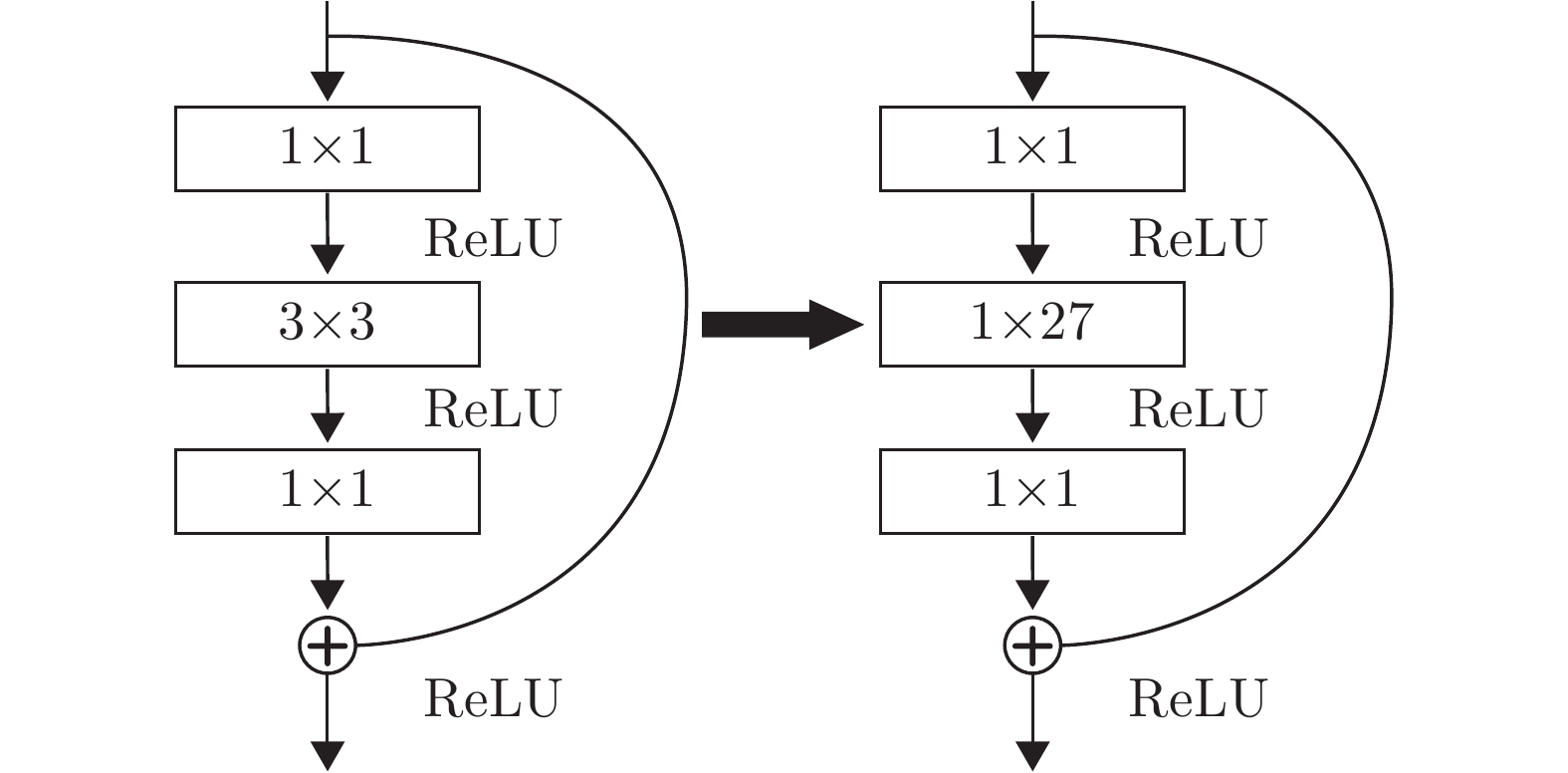

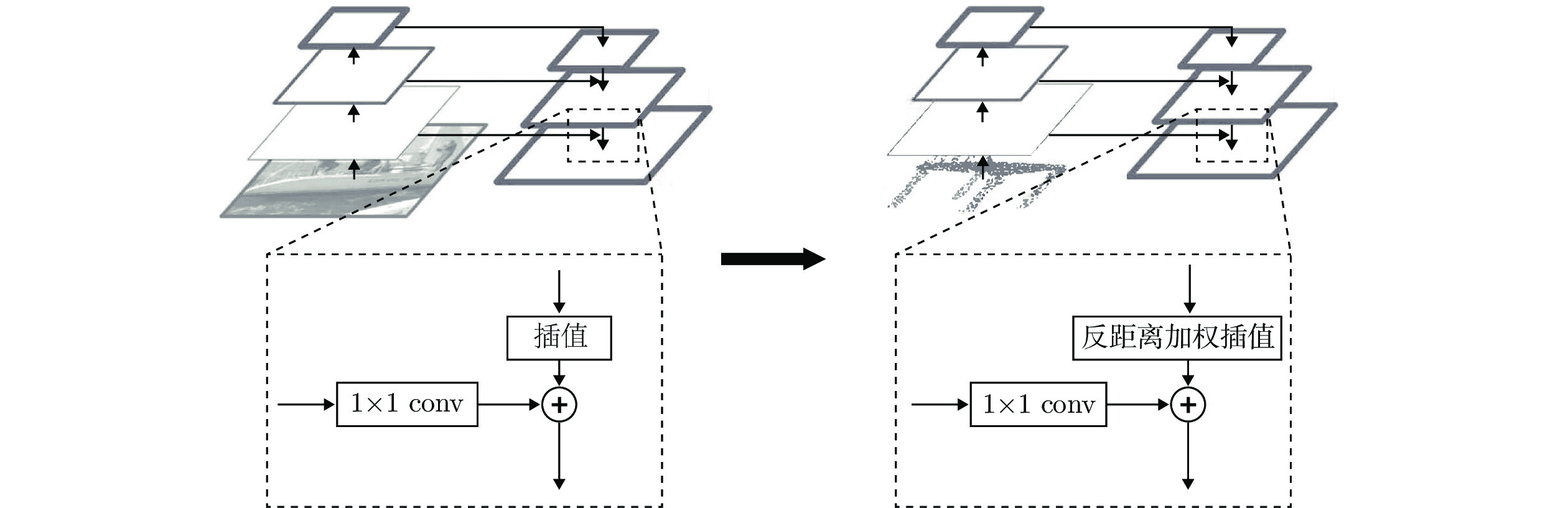

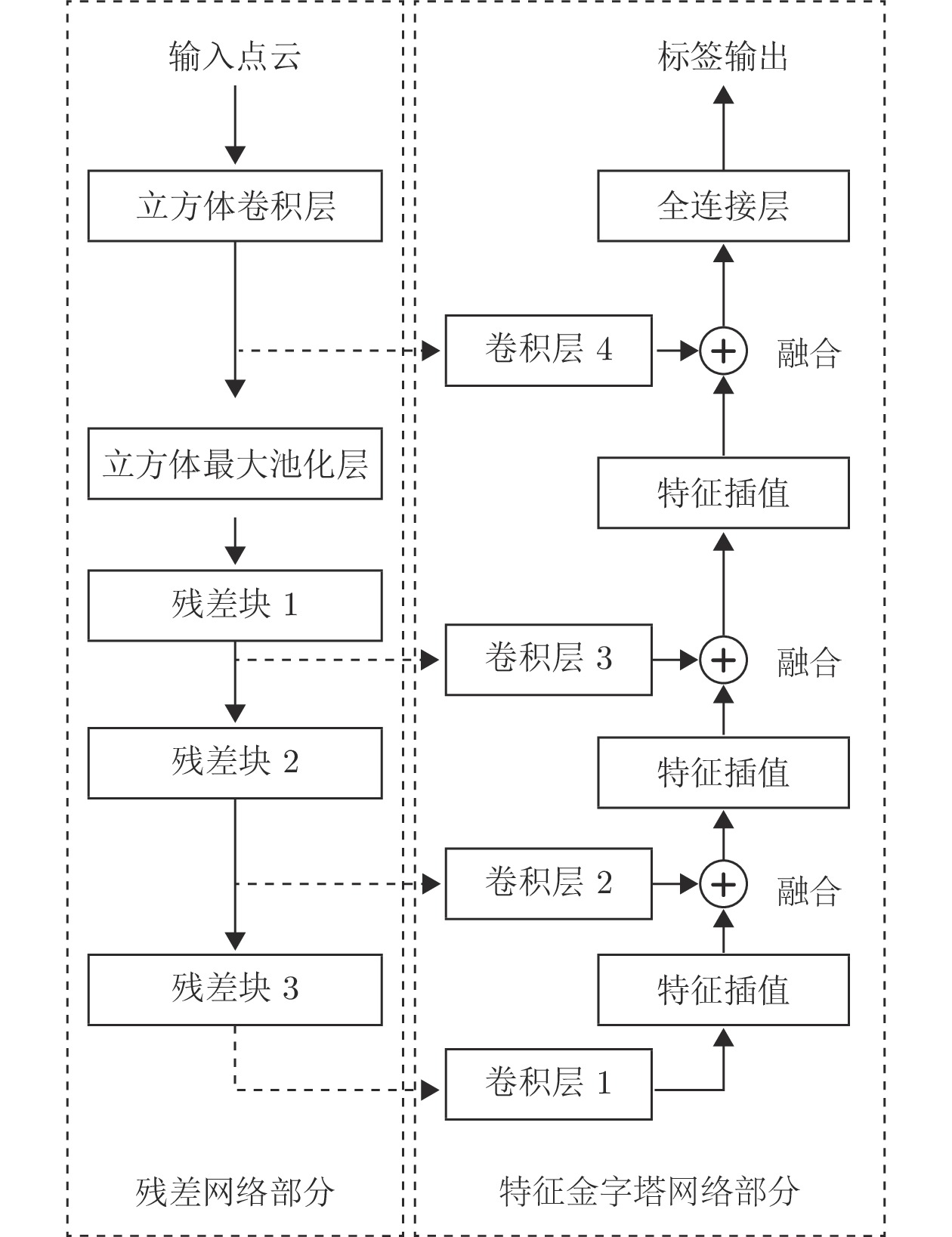

Abstract: Current deep-learning methods for extracting semantic features from scattered point clouds generalize poorly, and their insufficient feature extraction leads to low segmentation accuracy and reliability. To address these problems, a deep residual-feature pyramid network framework for scattered point cloud semantic segmentation is proposed. First, to overcome the limitations of the convolution used in current residual networks, a cube convolution operation is defined; it extracts high-level features of three-dimensional representation points through two-dimensional convolution and also addresses the poor generality of existing parameterized convolution designs. Second, the cube convolution is integrated into the residual network to build a deep residual feature learning framework for scattered point cloud semantic segmentation. Furthermore, the deep residual network is combined with a feature pyramid network to enable multi-scale learning of high-level features of three-dimensional representation points and semantic segmentation of scattered point cloud scenes. Experimental results show that the proposed cube convolution has good applicability and that the proposed deep residual-feature pyramid network framework outperforms existing comparable methods in segmentation accuracy.

Manuscript received January 26, 2019; accepted July 30, 2019

Supported by National Key Research and Development Program of China (2017YFB0306402), National Natural Science Foundation of China (51305390, 61601401), Natural Science Foundation of Hebei Province (F2016203312, E2020303188), Young Talent Program of Colleges in Hebei Province (BJ2018018), and Key Foundation of Hebei Educational Committee (ZD2019039)

Recommended by Associate Editor HUANG Qing-Ming

1. School of Information Science and Engineering, Yanshan University, Qinhuangdao 066004

2. School of Electrical Engineering, Yanshan University, Qinhuangdao 066004


Table 1 Parameter design

| Layer | Kernel size | Cube edge length (m) | Output points | Output feature channels |
| --- | --- | --- | --- | --- |
| Cube convolution | $1\times27,64$ | 0.1 | 8192 | 64 |
| Cube max pooling | $1\times27,64$ | 0.1 | 2048 | 64 |
| Residual block 1 | $\left[\begin{aligned}1\times1,64\\ 1\times27,64\\ 1\times1,256\end{aligned}\right]\times3$ | 0.2 | 2048 | 256 |
| Residual block 2 | $\left[\begin{aligned}1\times1,128\\ 1\times27,128\\ 1\times1,512\end{aligned}\right]\times4$ | 0.4 | 512 | 512 |
| Residual block 3 | $\left[\begin{aligned}1\times1,256\\ 1\times27,256\\ 1\times1,1024\end{aligned}\right]\times6$ | 0.8 | 128 | 1024 |
| Convolution layer 1 | $1\times1,256$ | - | 128 | 256 |
| Convolution layer 2 | $1\times1,256$ | - | 512 | 256 |
| Convolution layer 3 | $1\times1,256$ | - | 2048 | 256 |
| Convolution layer 4 | $1\times1,256$ | - | 8192 | 256 |
| Fully connected layer | - | - | 8192 | 20 |
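The $1\times27$ kernels in Table 1 are paired with a cube edge length, which suggests that each cube neighbourhood is divided into $3\times3\times3 = 27$ sub-cubes whose features are combined by an ordinary convolution. This section does not define the operation, so the sketch below is only one plausible reading under stated assumptions: the 27-cell partition, the mean pooling inside each sub-cube, and the function name `cube_conv` are all hypothetical and are not taken from the paper.

```python
from math import floor

def cube_conv(points, feats, centers, edge, weight):
    """Sketch of a cube convolution (assumed formulation, not the paper's code).

    points  : list of (x, y, z) coordinates
    feats   : list of per-point feature lists, each of length c_in
    centers : list of (x, y, z) representative points
    edge    : cube edge length in metres (Table 1 uses 0.1 to 0.8)
    weight  : weight[c][i][o], one c_in x c_out slice per sub-cube c in 0..26
    """
    c_in, c_out = len(weight[0]), len(weight[0][0])
    cell = edge / 3.0  # assumption: the cube is split into 3 x 3 x 3 sub-cubes
    outputs = []
    for center in centers:
        sums = [[0.0] * c_in for _ in range(27)]
        counts = [0] * 27
        for p, f in zip(points, feats):
            # Shift coordinates so the cube spans [0, edge) on every axis.
            rel = [p[a] - center[a] + edge / 2.0 for a in range(3)]
            if any(r < 0.0 or r >= edge for r in rel):
                continue  # point lies outside this cube
            # min(..., 2) guards against floating-point rounding at the border.
            i, j, k = (min(int(floor(r / cell)), 2) for r in rel)
            cell_id = i * 9 + j * 3 + k
            counts[cell_id] += 1
            for ch in range(c_in):
                sums[cell_id][ch] += f[ch]
        # Assumption: mean-pool each occupied sub-cube; empty ones stay zero.
        grid = [[s / n for s in row] if n else row
                for row, n in zip(sums, counts)]
        # A 1x27 kernel: weighted sum over the 27 sub-cubes and input channels.
        out = [sum(grid[c][ch] * weight[c][ch][o]
                   for c in range(27) for ch in range(c_in))
               for o in range(c_out)]
        outputs.append(out)
    return outputs
```

Because the 27 sub-cube features form a fixed-length row per representative point, the weighted sum is the same arithmetic as a two-dimensional convolution with a $1\times27$ kernel, which is how a 2D operator can act on unordered 3D points in this reading.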

Table 2 Segmentation result comparisons on the S3DIS dataset (%)

Table 3 Comparison of IoU for all categories on the S3DIS dataset (%)

| Category | PointNet[7] | RSNet[9] | PointCNN[10] | ResNet-FPN_C (ours) | U-Net_C (ours) |
| --- | --- | --- | --- | --- | --- |
| ceiling | 88.0 | 92.48 | 94.78 | 91.99 | 91.46 |
| floor | 88.7 | 92.83 | 97.30 | 94.99 | 94.12 |
| wall | 69.3 | 78.56 | 75.82 | 77.04 | 79.00 |
| beam | 42.4 | 32.75 | 63.25 | 50.29 | 51.92 |
| column | 23.1 | 34.37 | 51.71 | 39.40 | 40.35 |
| window | 47.5 | 51.62 | 58.38 | 65.57 | 65.63 |
| door | 51.6 | 68.11 | 57.18 | 72.38 | 72.60 |
| table | 54.1 | 60.13 | 71.63 | 72.20 | 71.57 |
| chair | 42.0 | 59.72 | 69.12 | 77.10 | 77.56 |
| sofa | 9.6 | 50.22 | 39.08 | 54.87 | 55.89 |
| bookcase | 38.2 | 16.42 | 61.15 | 59.24 | 59.10 |
| board | 29.4 | 44.85 | 52.19 | 53.44 | 54.54 |
| clutter | 35.2 | 52.03 | 58.59 | 63.11 | 62.11 |
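The per-category values in Table 3 can be summarized as a mean IoU, taken here as the unweighted average over the 13 S3DIS categories. The snippet below is a small illustrative calculation using only the numbers from Table 3; the helper name `mean_iou` is hypothetical, and the averages it prints may differ slightly from any figures the paper reports because the table entries are already rounded.

```python
# Per-category IoU (%) copied from Table 3; columns follow the table header.
METHODS = ["PointNet", "RSNet", "PointCNN", "ResNet-FPN_C", "U-Net_C"]
TABLE3 = """
ceiling 88.0 92.48 94.78 91.99 91.46
floor 88.7 92.83 97.30 94.99 94.12
wall 69.3 78.56 75.82 77.04 79.00
beam 42.4 32.75 63.25 50.29 51.92
column 23.1 34.37 51.71 39.40 40.35
window 47.5 51.62 58.38 65.57 65.63
door 51.6 68.11 57.18 72.38 72.60
table 54.1 60.13 71.63 72.20 71.57
chair 42.0 59.72 69.12 77.10 77.56
sofa 9.6 50.22 39.08 54.87 55.89
bookcase 38.2 16.42 61.15 59.24 59.10
board 29.4 44.85 52.19 53.44 54.54
clutter 35.2 52.03 58.59 63.11 62.11
"""

def mean_iou(table, methods):
    """Return {method: unweighted mean of its per-category IoU column}."""
    rows = [line.split() for line in table.strip().splitlines()]
    result = {}
    for col, name in enumerate(methods, start=1):  # column 0 is the category
        values = [float(row[col]) for row in rows]
        result[name] = sum(values) / len(values)
    return result

miou = mean_iou(TABLE3, METHODS)
for name, value in miou.items():
    print(f"{name}: {value:.2f}")
```

An unweighted mean treats rare categories such as beam and column the same as ceiling and floor, so it is more sensitive to weak categories than an overall point-wise accuracy would be.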

Table 4 Segmentation result comparisons on the ScanNet dataset (%)

Table 5 Comparison of IoU for all categories on the ScanNet dataset (%)

| Category | PointNet[7] | PointNet++[8] | RSNet[9] | PointCNN[10] | ResNet-FPN_C (ours) | U-Net_C (ours) |
| --- | --- | --- | --- | --- | --- | --- |
| wall | 69.44 | 77.48 | 79.23 | 74.5 | 77.1 | 77.8 |
| floor | 88.59 | 92.50 | 94.10 | 90.7 | 90.6 | 90.8 |
| chair | 35.93 | 64.55 | 64.99 | 68.8 | 76.4 | 76.6 |
| table | 32.78 | 46.60 | 51.04 | 55.3 | 52.5 | 50.8 |
| desk | 2.63 | 12.69 | 34.53 | 28.8 | 29.1 | 25.8 |
| bed | 17.96 | 51.32 | 55.95 | 56.1 | 57.0 | 57.4 |
| bookshelf | 3.18 | 52.93 | 53.02 | 38.9 | 42.7 | 42.3 |
| sofa | 32.79 | 52.27 | 55.41 | 60.1 | 61.5 | 60.9 |
| sink | 0.00 | 30.23 | 34.84 | 41.9 | 41.8 | 41.7 |
| bathtub | 0.17 | 42.72 | 49.38 | 73.5 | 61.0 | 68.6 |
| toilet | 0.00 | 31.37 | 54.16 | 73.4 | 69.8 | 73.2 |
| curtain | 0.00 | 32.97 | 6.78 | 36.1 | 35.4 | 41.9 |
| counter | 5.09 | 20.04 | 22.72 | 22.5 | 20.0 | 19.7 |
| door | 0.00 | 2.02 | 3.00 | 7.5 | 26.0 | 28.4 |
| window | 0.00 | 3.56 | 8.75 | 11.0 | 15.9 | 18.8 |
| shower curtain | 0.00 | 27.43 | 29.92 | 40.6 | 38.1 | 42.6 |
| refrigerator | 0.00 | 18.51 | 37.90 | 43.4 | 47.3 | 52.3 |
| picture | 0.00 | 0.00 | 0.95 | 1.3 | 5.8 | 8.6 |
| cabinet | 4.99 | 23.81 | 31.29 | 26.4 | 27.5 | 27.5 |
| other furniture | 0.13 | 2.20 | 18.98 | 23.6 | 25.8 | 24.5 |
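Table 5 is easier to read once the best method per category is tallied. The following sketch uses only the values from Table 5; the helper name `best_per_category` is hypothetical, and if two methods tied for the top score the first one in column order would be picked (no such tie occurs for the maxima in this table).

```python
from collections import Counter

# Per-category IoU (%) copied from Table 5; columns follow the table header.
METHODS = ["PointNet", "PointNet++", "RSNet", "PointCNN",
           "ResNet-FPN_C", "U-Net_C"]
TABLE5 = """
wall 69.44 77.48 79.23 74.5 77.1 77.8
floor 88.59 92.50 94.10 90.7 90.6 90.8
chair 35.93 64.55 64.99 68.8 76.4 76.6
table 32.78 46.60 51.04 55.3 52.5 50.8
desk 2.63 12.69 34.53 28.8 29.1 25.8
bed 17.96 51.32 55.95 56.1 57.0 57.4
bookshelf 3.18 52.93 53.02 38.9 42.7 42.3
sofa 32.79 52.27 55.41 60.1 61.5 60.9
sink 0.00 30.23 34.84 41.9 41.8 41.7
bathtub 0.17 42.72 49.38 73.5 61.0 68.6
toilet 0.00 31.37 54.16 73.4 69.8 73.2
curtain 0.00 32.97 6.78 36.1 35.4 41.9
counter 5.09 20.04 22.72 22.5 20.0 19.7
door 0.00 2.02 3.00 7.5 26.0 28.4
window 0.00 3.56 8.75 11.0 15.9 18.8
shower curtain 0.00 27.43 29.92 40.6 38.1 42.6
refrigerator 0.00 18.51 37.90 43.4 47.3 52.3
picture 0.00 0.00 0.95 1.3 5.8 8.6
cabinet 4.99 23.81 31.29 26.4 27.5 27.5
other furniture 0.13 2.20 18.98 23.6 25.8 24.5
"""

def best_per_category(table, methods):
    """Map each category to the method with the highest IoU in its row."""
    best = {}
    for line in table.strip().splitlines():
        # Category names may contain spaces, so split the scores off the right.
        parts = line.rsplit(None, len(methods))
        scores = [float(x) for x in parts[1:]]
        best[parts[0]] = methods[scores.index(max(scores))]
    return best

best = best_per_category(TABLE5, METHODS)
wins = Counter(best.values())
for name in METHODS:
    print(f"{name}: best in {wins[name]} of {len(best)} categories")
```

A win count complements an average: it shows on which categories a method leads, for example thin or small structures such as door, window, and picture, rather than only how it scores overall.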

[1] Anguelov D, Taskar B, Chatalbashev V, Koller D, Gupta D, Heitz G, et al. Discriminative learning of Markov random fields for segmentation of 3D scan data. In: Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Diego, USA: IEEE, 2005. 169−176

[2] Wu Z R, Song S R, Khosla A, Yu F, Zhang L G, Tang X O, et al. 3D ShapeNets: A deep representation for volumetric shapes. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 1912−1920

[3] Maturana D, Scherer S. VoxNet: A 3D convolutional neural network for real-time object recognition. In: Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems. Hamburg, Germany: IEEE, 2015. 922−928

[4] Riegler G, Ulusoy A O, Geiger A. OctNet: Learning deep 3D representations at high resolutions. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 6620−6629

[5] Wang P S, Liu Y, Guo Y X, Sun C Y, Tong X. O-CNN: Octree-based convolutional neural networks for 3D shape analysis. ACM Transactions on Graphics, 2017, 36(4): Article No. 72

[6] Klokov R, Lempitsky V. Escape from cells: Deep kd-networks for the recognition of 3D point cloud models. In: Proceedings of the 2017 International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 863−872

[7] Qi C R, Su H, Mo K C, Guibas L J. PointNet: Deep learning on point sets for 3D classification and segmentation. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 77−85

[8] Qi C R, Yi L, Su H, Guibas L J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In: Proceedings of the 2017 Advances in Neural Information Processing Systems. Long Beach, USA: Curran Associates, 2017. 5100−5109

[9] Huang Q G, Wang W Y, Neumann U. Recurrent slice networks for 3D segmentation of point clouds. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 2626−2635

[10] Li Y Y, Bu R, Sun M C, Wu W, Di X H, Chen B Q. PointCNN: Convolution on X-transformed points. In: Proceedings of the 2018 Advances in Neural Information Processing Systems. Montreal, Canada: Curran Associates, 2018. 828−838

[11] Ronneberger O, Fischer P, Brox T. U-Net: Convolutional networks for biomedical image segmentation. In: Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention. Munich, Germany: MICCAI, 2015. 234−241

[12] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 770−778

[13] Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 2818−2826

[14] Huang G, Liu Z, Maaten L V D, Weinberger K Q. Densely connected convolutional networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 2261−2269

[15] Lin T Y, Dollár P, Girshick R, He K M, Hariharan B, Belongie S. Feature pyramid networks for object detection. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 936−944

[16] Girshick R, Donahue J, Darrell T, Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition. Columbus, USA: IEEE, 2014. 580−587

[17] Girshick R. Fast R-CNN. In: Proceedings of the 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 1440−1448

[18] Ren S Q, He K M, Girshick R, Sun J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137−1149 doi: 10.1109/TPAMI.2016.2577031

[19] He K M, Gkioxari G, Dollár P, Girshick R. Mask R-CNN. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 2980−2988

[20] Liu S, Qi L, Qin H F, Shi J P, Jia J. Path aggregation network for instance segmentation. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 8759−8768

[21] Armeni I, Sener O, Zamir A R, Jiang H L, Brilakis I, Fischer M, Savarese S. 3D semantic parsing of large-scale indoor spaces. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 1534−1543

[22] Dai A, Chang A X, Savva M, Halber M, Funkhouser T, Nießner M. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 2432−2443
