Missing Argument Filling of Uyghur Event Based on Independent Recurrent Neural Network and Capsule Network


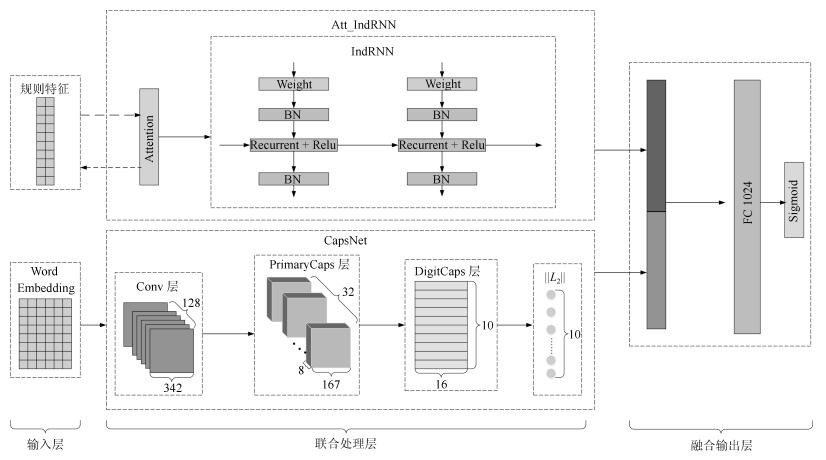

Abstract: A parallel model (Att_IndRNN_CapsNet) is proposed for filling the missing arguments of Uyghur events. It combines an independently recurrent neural network (IndRNN) with an attention mechanism and a capsule network (CapsNet). First, 18 internal features of events and event arguments are extracted and fed into the attention-based IndRNN, which acquires high-order features. In parallel, word embeddings map the event triggers and candidate arguments to word vectors, and the capsule network mines the contextual semantic features of the events and event arguments. The two kinds of features are then fused and passed to a classifier, which fills in the missing arguments of the event. Experimental results show that, for missing argument filling in Uyghur events, the method reaches a precision of 86.94%, a recall of 84.14%, and an F1 score (a measure of overall model performance) of 85.52%, which demonstrates its effectiveness on this task.
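The abstract does not spell out the capsule layer. For orientation, here is a minimal plain-Python sketch of the squash non-linearity and routing-by-agreement from Sabour et al. (2017), the standard capsule formulation. It is not the authors' code: the number of lower and upper capsules, the vector size and the three routing iterations are illustrative assumptions.

```python
import math
import random


def squash(v):
    """Capsule non-linearity: keeps the direction of v, maps its length into [0, 1)."""
    norm_sq = sum(x * x for x in v)
    if norm_sq == 0.0:
        return [0.0 for _ in v]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in v]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat, iterations=3):
    """Routing-by-agreement (Sabour et al., 2017).

    u_hat[i][j] is the prediction vector from lower capsule i for upper capsule j.
    Returns the list of upper-capsule output vectors v_j.
    """
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits b_ij, start at zero
    v = []
    for it in range(iterations):
        # Coupling coefficients c_ij: softmax of b_ij over the upper capsules j
        c = [softmax(row) for row in b]
        v = []
        for j in range(n_out):
            # s_j = sum_i c_ij * u_hat_j|i, then squash
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in)) for d in range(dim)]
            v.append(squash(s_j))
        if it < iterations - 1:
            # Agreement update: b_ij += u_hat_j|i . v_j
            for i in range(n_in):
                for j in range(n_out):
                    b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v


# Toy usage: 5 lower capsules, 2 upper capsules of dimension 4 (sizes are illustrative)
rng = random.Random(42)
u_hat = [[[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(2)] for _ in range(5)]
v = dynamic_routing(u_hat, iterations=3)
print([round(math.sqrt(sum(x * x for x in vj)), 3) for vj in v])  # capsule lengths, each < 1
```

The length of each output capsule can be read as the strength of the feature it represents, which is why squash bounds it below 1.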


Key words:

- Attention mechanism

- capsule network

- event extraction

- independently recurrent neural network

- missing argument filling

1) Recommended by Associate Editor Zhao Tie-Jun

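Table 1 Arguments in event sentence 1

| Argument | Content (translation) |
|---|---|
| Time-Arg | Around 11:00 on January 1, 2017 |
| Place-Arg | The intersection of Yuhua West Road and Gongqingtuan Road, Nanjing |
| Wrecker-Arg | |
| Suffer-Arg | The mother |
| Tool-Arg | A truck |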

Table 2 Arguments in event sentence 2

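| Argument | Content (translation) |
|---|---|
| Agent-Arg | A passer-by |
| Artifact-Arg | The 9-month-old baby in the woman's arms |
| Tool-Arg | |
| Origin-Arg | |
| Destination-Arg | Nanjing First Hospital |
| Time-Arg | |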

Table 3 Optimal parameters

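| Parameter | Value |
|---|---|
| lr (learning rate) | 0.005 |
| lrdr (learning-rate decay rate) | 0.1 |
| bs (batch size) | 16 |
| ep (epochs) | 50 |
| dr (dropout rate) | 0.3 |
| opt (optimizer) | Adam |

Table 4 Influence of different samples on experimental performance (%)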

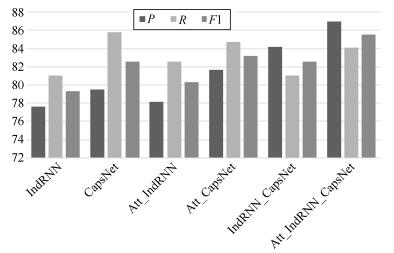

| Sample set | P | R | F1 |
|---|---|---|---|
| Sample 1 | 85.76 | 80.6 | 83.1 |
| Sample 2 | 86.94 | 84.14 | 85.52 |
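The F1 columns in these tables are the harmonic mean of precision and recall, F1 = 2PR / (P + R). A quick check against the two rows of Table 4:

```python
def f1_score(precision, recall):
    """F1 = 2PR / (P + R), the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rows of Table 4 (values in %)
for name, p, r in [("Sample 1", 85.76, 80.6), ("Sample 2", 86.94, 84.14)]:
    print(name, round(f1_score(p, r), 2))
```

Because F1 is a harmonic mean, it is pulled toward the smaller of P and R, so a model cannot raise it much by improving only one of the two.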

Table 5 Comparison between our model and other models (%)

| Model | P | R | F1 |
|---|---|---|---|
| IndRNN | 77.6 | 81.06 | 79.29 |
| CapsNet | 79.51 | 85.84 | 82.55 |
| Att_IndRNN | 78.13 | 82.54 | 80.27 |
| Att_CapsNet | 81.63 | 84.74 | 83.16 |
| IndRNN_CapsNet | 84.17 | 81.02 | 82.56 |
| Att_IndRNN_CapsNet | 86.94 | 84.14 | 85.52 |

Table 6 Influence of word vector dimension (%)

| Dimension | P | R | F1 |
|---|---|---|---|
| 10 | 78.3 | 83.58 | 80.85 |
| 30 | 81.44 | 84.27 | 82.83 |
| 50 | 86.94 | 84.14 | 85.52 |
| 100 | 84.45 | 83.55 | 84 |
| 150 | 80.17 | 80.09 | 81.12 |

Table 7 Influence of different kinds of features (%)

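| Feature | P | R | F1 |
|---|---|---|---|
| Semantic feature A | 77.84 | 81.92 | 79.83 |
| Semantic feature B | 78.85 | 83.66 | 81.18 |
| Rule features | 74.66 | 77.87 | 76.23 |
| Semantic feature A + rule features | 81.17 | 86.44 | 83.72 |
| Semantic feature B + rule features | 86.94 | 84.14 | 85.52 |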

Table 8 Influence of independent features and fusion features (%)

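| Model | P | R | F1 |
|---|---|---|---|
| Att_IndRNN<sub>h+w</sub>_CapsNet<sub>h+w</sub> | 82.7 | 83.61 | 83.15 |
| Att_IndRNN<sub>w</sub>_CapsNet<sub>h</sub> | 76.6 | 86.87 | 81.41 |
| Att_IndRNN<sub>h</sub>_CapsNet<sub>w</sub> | 86.94 | 84.14 | 85.52 |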
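The abstract says the IndRNN features and the capsule features are fused and passed to a classifier, but not how. A minimal sketch, assuming fusion by plain concatenation followed by a single linear layer with softmax; the feature sizes, the two-class label set and all weights are hypothetical.

```python
import math


def fuse(indrnn_features, capsule_features):
    # Assumption: fusion by concatenating the two feature vectors
    return list(indrnn_features) + list(capsule_features)


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, weights, biases):
    """Linear layer followed by softmax; weights[k] is the weight row for class k."""
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)


# Hypothetical toy values: 3 IndRNN features, 2 capsule features, 2 classes
h = [0.2, -0.1, 0.4]
c = [0.5, 0.3]
x = fuse(h, c)
weights = [[0.1, 0.0, -0.2, 0.3, 0.1],   # hypothetical class 0: candidate does not fill the slot
           [-0.1, 0.2, 0.4, 0.5, -0.3]]  # hypothetical class 1: candidate fills the missing argument
probs = classify(x, weights, [0.0, 0.0])
print(probs)
```

Concatenation keeps both feature sets intact and lets the classifier learn how much to weight each branch.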

Table 9 Influence of the number of IndRNN layers (%)

| Layers | P | R | F1 |
|---|---|---|---|
| 1 | 81.89 | 83.96 | 82.96 |
| 2 | 86.94 | 84.14 | 85.52 |
| 3 | 82.56 | 81.38 | 81.96 |
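Table 9 varies the number of stacked IndRNN layers. For reference, a two-layer IndRNN followed by attention pooling can be sketched as below. It uses the recurrence of Li et al. (2018), h_t = ReLU(W x_t + u ⊙ h_{t-1} + b), where u is a per-neuron recurrent weight vector, so neurons in a layer do not interact through time. The dot-product attention with a query vector q, the toy input sequence, and all sizes and weights are assumptions for illustration, not the paper's configuration.

```python
import math
import random


def relu(x):
    return x if x > 0.0 else 0.0


def indrnn_layer(xs, W, u, b):
    """One IndRNN layer: h_t = relu(W x_t + u * h_{t-1} + b), with u applied element-wise."""
    hidden = len(u)
    h = [0.0] * hidden
    outputs = []
    for x in xs:
        # The comprehension reads the previous h before h is rebound
        h = [relu(sum(W[n][k] * x[k] for k in range(len(x))) + u[n] * h[n] + b[n])
             for n in range(hidden)]
        outputs.append(h)
    return outputs


def attention_pool(hs, q):
    """Dot-product attention (assumed form): alpha_t = softmax(q . h_t), context = sum alpha_t h_t."""
    scores = [sum(qi * hi for qi, hi in zip(q, h)) for h in hs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    alphas = [e / z for e in exps]
    context = [sum(a * h[d] for a, h in zip(alphas, hs)) for d in range(len(hs[0]))]
    return context, alphas


rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# Toy sequence: 5 steps with 3 features each; two stacked layers of 4 neurons
xs = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(5)]
layer1 = indrnn_layer(xs, rand_matrix(4, 3), [0.9] * 4, [0.0] * 4)
layer2 = indrnn_layer(layer1, rand_matrix(4, 4), [0.9] * 4, [0.0] * 4)
context, alphas = attention_pool(layer2, [1.0] * 4)
print(context, alphas)
```

Stacking simply feeds one layer's output sequence into the next; keeping |u| below 1 here is a common way to stop hidden states from growing without bound over long sequences.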

