
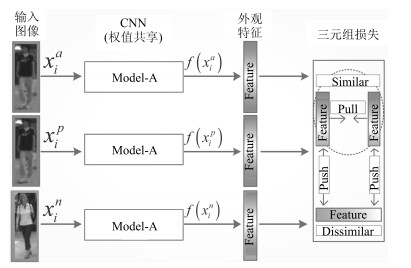

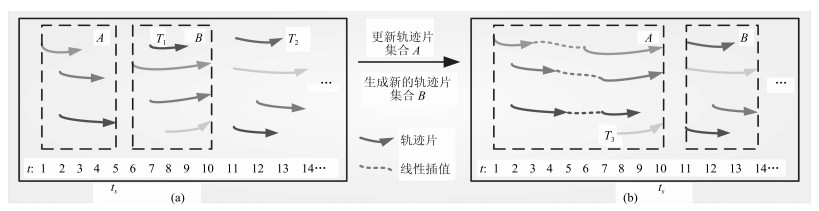

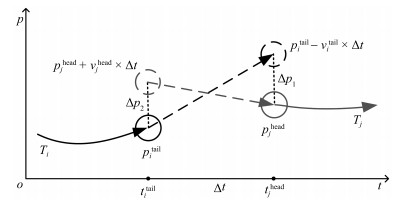

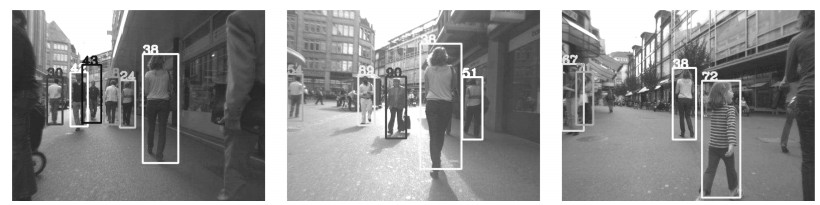

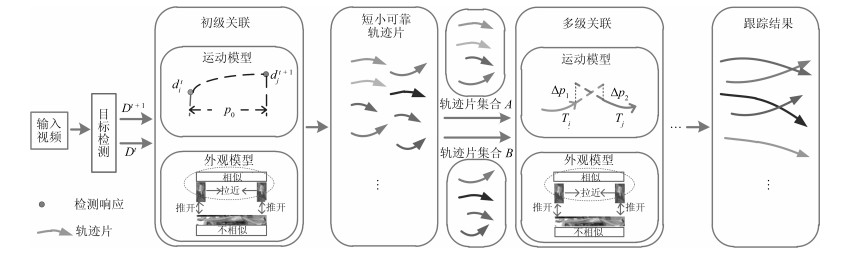

Abstract: Deep learning has recently driven breakthroughs in many sub-fields of computer vision, yet comparatively few deep learning approaches address multiple object tracking (MOT) in video. Because a robust affinity model is the key component of detection-based MOT, this paper proposes an affinity model built on deep neural networks and metric learning. Metric learning, a technique widely used in person re-identification (Re-ID), is combined with convolutional neural networks (CNNs) to design the object appearance model: a three-channel CNN trained with a triplet loss function extracts discriminative appearance features, from which the appearance similarity between objects is computed. The appearance affinity is then combined with a motion model to estimate the association probability between tracklets. Association is hierarchical and is solved with the Hungarian algorithm. At the low level, a set of short but reliable tracklets is generated frame by frame. At the higher levels, these tracklets are associated into longer ones through an adaptive temporal sliding-window mechanism, which outputs the final trajectory of each object. Experimental results on the public 2DMOT2015 and MOT16 benchmarks demonstrate the validity of the proposed method, which achieves performance comparable or superior to several state-of-the-art approaches.


Key words:

- Multiple object tracking (MOT)

- deep learning

- metric learning

- affinity model

- multi-level association
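The two ingredients named in the abstract, a triplet loss for the appearance embedding and Hungarian matching over a combined affinity, can be sketched as follows. This is a minimal illustration rather than the paper's implementation: the squared Euclidean distance, the `margin` value, the product rule for fusing appearance and motion scores, and the `min_affinity` gate are all assumptions, and every function name is hypothetical.

```python
def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge triplet loss on squared Euclidean distances (assumed form)."""
    d_ap = sum((a - p) ** 2 for a, p in zip(anchor, positive))
    d_an = sum((a - n) ** 2 for a, n in zip(anchor, negative))
    return max(0.0, d_ap - d_an + margin)


def hungarian(cost):
    """Minimum-cost assignment for an n x m matrix with n <= m.

    Returns a list where entry i is the column assigned to row i.
    Standard O(n^2 m) potentials-based Kuhn-Munkres formulation.
    """
    n, m = len(cost), len(cost[0])
    inf = float("inf")
    u = [0.0] * (n + 1)          # row potentials
    v = [0.0] * (m + 1)          # column potentials
    p = [0] * (m + 1)            # p[j] = row matched to column j (1-based)
    way = [0] * (m + 1)          # back-pointers along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:                # flip matches along the augmenting path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assignment[p[j] - 1] = j - 1
    return assignment


def associate(appearance, motion, min_affinity=0.3):
    """Match tracklets (rows) to detections (columns).

    Affinity is the product of appearance and motion scores (assumed
    fusion rule); pairs below min_affinity are left unmatched.
    """
    n, m = len(appearance), len(appearance[0])
    affinity = [[appearance[i][j] * motion[i][j] for j in range(m)]
                for i in range(n)]
    cost = [[1.0 - a for a in row] for row in affinity]
    if n <= m:
        pairs = list(enumerate(hungarian(cost)))
    else:                        # transpose so that rows <= columns
        transposed = [list(col) for col in zip(*cost)]
        pairs = [(i, j) for j, i in enumerate(hungarian(transposed))]
    return sorted((i, j) for i, j in pairs if affinity[i][j] >= min_affinity)


if __name__ == "__main__":
    appearance = [[0.9, 0.2, 0.1], [0.3, 0.8, 0.2]]
    motion = [[0.9, 0.5, 0.5], [0.5, 0.9, 0.5]]
    print(associate(appearance, motion))
```

Running the same matching first on consecutive frames and then on the resulting tracklets inside a sliding temporal window would correspond to the multi-level association described above; the window logic is omitted here because the abstract does not specify it.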

1) Associate editor responsible for this paper: Sang Nong

Table 1 Results of ablation study

| Trackers | MOTA ($\uparrow$) | MOTP ($\uparrow$) | MT ($\uparrow$) (%) | ML ($\downarrow$) (%) | FP ($\downarrow$) | FN ($\downarrow$) | IDS ($\downarrow$) |
|---|---|---|---|---|---|---|---|
| A + T | 19.5 | 74.6 | 7.41 | 66.70 | 109 | 14 202 | 43 |
| M + T | 17.6 | 74.6 | 7.40 | 64.80 | 307 | 14 326 | 70 |
| A + M + T | 21.0 | 74.3 | 9.26 | 70.40 | 175 | 13 893 | 16 |
| A + M + V | 14.7 | 75.1 | 1.85 | 67.00 | 60 | 14 804 | 339 |

Table 2 Results of MOT16 test set

| Trackers | Mode | MOTA ($\uparrow$) | MOTP ($\uparrow$) | MT ($\uparrow$) (%) | ML ($\downarrow$) (%) | FP ($\downarrow$) | FN ($\downarrow$) | IDS ($\downarrow$) | Hz ($\uparrow$) |
|---|---|---|---|---|---|---|---|---|---|
| AMIR[20] | Online | 47.2 | 75.8 | 14.0 | 41.6 | 2 681 | 92 856 | 774 | 1.0 |
| CDA[45] | Online | 43.9 | 74.7 | 10.7 | 44.4 | 6 450 | 95 175 | 676 | 0.5 |
| Ours | Online | 43.1 | 74.2 | 12.4 | 47.7 | 4 228 | 99 057 | 495 | 0.7 |
| EAMTT[41] | Online | 38.8 | 75.1 | 7.9 | 49.1 | 8 114 | 102 452 | 965 | 11.8 |
| OVBT[42] | Online | 38.4 | 75.4 | 7.5 | 47.3 | 11 517 | 99 463 | 1 321 | 0.3 |
| Quad-CNN[17] | Batch | 44.1 | 76.4 | 14.6 | 44.9 | 6 388 | 94 775 | 745 | 1.8 |
| LIN1[43] | Batch | 41.0 | 74.8 | 11.6 | 51.3 | 7 896 | 99 224 | 430 | 4.2 |
| CEM[44] | Batch | 33.2 | 75.8 | 7.8 | 54.4 | 6 837 | 114 322 | 642 | 0.3 |

Table 3 Results of 2DMOT2015 test set

| Trackers | Mode | MOTA ($\uparrow$) | MOTP ($\uparrow$) | MT ($\uparrow$) (%) | ML ($\downarrow$) (%) | FP ($\downarrow$) | FN ($\downarrow$) | IDS ($\downarrow$) | Hz ($\uparrow$) |
|---|---|---|---|---|---|---|---|---|---|
| AMIR[20] | Online | 37.6 | 71.7 | 15.8 | 26.8 | 7 933 | 29 397 | 1 026 | 1.9 |
| Ours | Online | 34.2 | 71.9 | 8.9 | 40.6 | 7 965 | 31 665 | 794 | 0.7 |
| CDA[45] | Online | 32.8 | 70.7 | 9.7 | 42.2 | 4 983 | 35 690 | 614 | 2.3 |
| RNN_LSTM[24] | Online | 19.0 | 71.0 | 5.5 | 45.6 | 11 578 | 36 706 | 1 490 | 165.2 |
| Quad-CNN[17] | Batch | 33.8 | 73.4 | 12.9 | 36.9 | 7 879 | 32 061 | 703 | 3.7 |
| MHT_DAM[46] | Batch | 32.4 | 71.8 | 16.0 | 43.8 | 9 064 | 32 060 | 435 | 0.7 |
| CNNTCM[22] | Batch | 29.6 | 71.8 | 11.2 | 44.0 | 7 786 | 34 733 | 712 | 1.7 |
| Siamese CNN[19] | Batch | 29.0 | 71.2 | 8.5 | 48.4 | 5 160 | 37 798 | 639 | 52.8 |
| LIN1[43] | Batch | 24.5 | 71.3 | 5.5 | 64.6 | 5 864 | 40 207 | 298 | 7.5 |

Table 4 Tracking results of UA-DETRAC dataset

| | MOTA ($\uparrow$) | MOTP ($\uparrow$) | MT ($\uparrow$) (%) | ML ($\downarrow$) (%) | FP ($\downarrow$) | FN ($\downarrow$) | IDS ($\downarrow$) |
|---|---|---|---|---|---|---|---|
| Vehicle tracking | 65.3 | 78.5 | 75.0 | 8.3 | 1 069 | 481 | 27 |
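As a reading aid for the tables, MOTA aggregates the three error counts reported in the FP, FN and IDS columns. Under the CLEAR MOT definition [40], and writing $\mathrm{GT}_t$ for the number of ground-truth objects in frame $t$ (a symbol introduced here for illustration, since the ground-truth totals are not listed in this excerpt), the score is usually stated as:

$$
\mathrm{MOTA} = 1 - \frac{\sum_t \left(\mathrm{FN}_t + \mathrm{FP}_t + \mathrm{IDS}_t\right)}{\sum_t \mathrm{GT}_t}
$$

The tables report this value as a percentage. Because all three error types share one denominator, a tracker with fewer identity switches can still score a lower MOTA when its FN count is larger, which is the pattern to look for when comparing rows.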

[1] Luo W H, Xing J L, Milan A, Zhang X Q, Liu W, Zhao X W, et al. Multiple object tracking: A literature review. arXiv preprint arXiv: 1409.7618, 2014.

[2] Felzenszwalb P F, Girshick R B, McAllester D, Ramanan D. Object detection with discriminatively trained part-based models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 32(9): 1627-1645 doi: 10.1109/TPAMI.2009.167

[3] Girshick R. Fast R-CNN. In: Proceedings of the 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 1440-1448

[4] Yin Hong-Peng, Chen Bo, Chai Yi, Liu Zhao-Dong. Vision-based object detection and tracking: A review. Acta Automatica Sinica, 2016, 42(10): 1466-1489 doi: 10.16383/j.aas.2016.c150823

[5] Xiang J, Sang N, Hou J H, Huang R, Gao C X. Hough forest-based association framework with occlusion handling for multi-target tracking. IEEE Signal Processing Letters, 2016, 23(2): 257-261 doi: 10.1109/LSP.2015.2512878

[6] Yang B, Nevatia R. Multi-target tracking by online learning of non-linear motion patterns and robust appearance models. In: Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition. Providence, USA: IEEE, 2012. 1918-1925

[7] Nummiaro K, Koller-Meier E, Van Gool L. An adaptive color-based particle filter. Image and Vision Computing, 2003, 21(1): 99-110 doi: 10.1016/S0262-8856(02)00129-4

[8] Dalal N, Triggs B. Histograms of oriented gradients for human detection. In: Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Diego, USA: IEEE, 2005. 886-893

[9] Tuzel O, Porikli F, Meer P. Region covariance: A fast descriptor for detection and classification. In: Proceedings of the 2006 European Conference on Computer Vision. Graz, Austria: Springer, 2006. 589-600

[10] Xiang J, Sang N, Hou J H, Huang R, Gao C X. Multitarget tracking using Hough forest random field. IEEE Transactions on Circuits and Systems for Video Technology, 2016, 26(11): 2028-2042 doi: 10.1109/TCSVT.2015.2489438

[11] Milan A, Leal-Taixé L, Reid I, Roth S, Schindler K. MOT16: A benchmark for multi-object tracking. arXiv preprint arXiv: 1603.00831, 2016.

[12] Leal-Taixé L, Milan A, Reid I, Roth S, Schindler K. MOTChallenge 2015: Towards a benchmark for multi-target tracking. arXiv preprint arXiv: 1504.01942, 2015.

[13] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems. Lake Tahoe, USA: MIT, 2012. 1097-1105

[14] Guan Hao, Xue Xiang-Yang, An Zhi-Yong. Advances on application of deep learning for video object tracking. Acta Automatica Sinica, 2016, 42(6): 834-847 doi: 10.16383/j.aas.2016.c150705

[15] Bertinetto L, Valmadre J, Henriques J F, Vedaldi A, Torr P H S. Fully-convolutional siamese networks for object tracking. In: Proceedings of the 2016 European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 850-865

[16] Danelljan M, Robinson A, Khan F S, Felsberg M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In: Proceedings of the 2016 European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 472-488

[17] Son J, Baek M, Cho M, Han B. Multi-object tracking with quadruplet convolutional neural networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 3786-3795

[18] Emami P, Pardalos P M, Elefteriadou L, Ranka S. Machine learning methods for solving assignment problems in multi-target tracking. arXiv preprint arXiv: 1802.06897, 2018.

[19] Leal-Taixé L, Canton-Ferrer C, Schindler K. Learning by tracking: Siamese CNN for robust target association. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Las Vegas, USA: IEEE, 2016. 418-425

[20] Sadeghian A, Alahi A, Savarese S. Tracking the untrackable: Learning to track multiple cues with long-term dependencies. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 300-311

[21] Tang S Y, Andriluka M, Andres B, Schiele B. Multiple people tracking by lifted multicut and person re-identification. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 3701-3710

[22] Wang B, Wang L, Shuai B, Zuo Z, Liu T, Chan K L, Wang G. Joint learning of convolutional neural networks and temporally constrained metrics for tracklet association. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Las Vegas, USA: IEEE, 2016. 368-393

[23] Gold S, Rangarajan A. Softmax to softassign: Neural network algorithms for combinatorial optimization. Journal of Artificial Neural Networks, 1996, 2(4): 381-399 http://dl.acm.org/citation.cfm?id=235919

[24] Milan A, Rezatofighi S H, Dick A, Schindler K, Reid I. Online multi-target tracking using recurrent neural networks. In: Proceedings of the 2017 AAAI Conference on Artificial Intelligence. San Francisco, USA: AAAI, 2017. 2-4

[25] Beyer L, Breuers S, Kurin V, Leibe B. Towards a principled integration of multi-camera re-identification and tracking through optimal Bayes filters. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Honolulu, USA: IEEE, 2017. 1444-1453

[26] Farazi H, Behnke S. Online visual robot tracking and identification using deep LSTM networks. In: Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems. Vancouver, Canada: IEEE, 2017. 6118-6125

[27] Kuo C H, Nevatia R. How does person identity recognition help multi-person tracking? In: Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition. Providence, RI, USA: IEEE, 2011. 1217-1224

[28] Xiao Q Q, Luo H, Zhang C. Margin sample mining loss: A deep learning based method for person re-identification. arXiv preprint arXiv: 1710.00478, 2017.

[29] Huang C, Wu B, Nevatia R. Robust object tracking by hierarchical association of detection responses. In: Proceedings of the 2008 European Conference on Computer Vision. Marseille, France: Springer, 2008. 788-801

[30] Cheng D, Gong Y H, Zhou S P, Wang J J, Zheng N N. Person re-identification by multi-channel parts-based CNN with improved triplet loss function. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 1335-1344

[31] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 770-778

[32] Ioffe S, Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: ACM, 2015. 448-456

[33] Glorot X, Bordes A, Bengio Y. Deep sparse rectifier neural networks. In: Proceedings of the 14th International Conference on Artificial Intelligence and Statistics. Fort Lauderdale, USA: JMLR, 2011. 315-323

[34] Schroff F, Kalenichenko D, Philbin J. FaceNet: A unified embedding for face recognition and clustering. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, USA: IEEE, 2015. 815-823

[35] Zheng L, Shen L Y, Tian L, Wang S J, Wang J D, Tian Q. Scalable person re-identification: A benchmark. In: Proceedings of the 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 1116-1124

[36] Li W, Zhao R, Xiao T, Wang X G. DeepReID: Deep filter pairing neural network for person re-identification. In: Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition. Columbus, USA: IEEE, 2014. 152-159

[37] Hermans A, Beyer L, Leibe B. In defense of the triplet loss for person re-identification. arXiv preprint arXiv: 1703.07737, 2017.

[38] Kingma D P, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv: 1412.6980, 2014.

[39] Yang B, Nevatia R. Multi-target tracking by online learning a CRF model of appearance and motion patterns. International Journal of Computer Vision, 2014, 107(2): 203-217 doi: 10.1007/s11263-013-0666-4

[40] Bernardin K, Stiefelhagen R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP Journal on Image and Video Processing, 2008, 2008: 246309 http://dl.acm.org/citation.cfm?id=1453688

[41] Sanchez-Matilla R, Poiesi F, Cavallaro A. Online multi-target tracking with strong and weak detections. In: Proceedings of the 2016 European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 84-99

[42] Ban Y T, Ba S, Alameda-Pineda X, Horaud R. Tracking multiple persons based on a variational Bayesian model. In: Proceedings of the 2016 European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 52-67

[43] Fagot-Bouquet L, Audigier R, Dhome Y, Lerasle F. Improving multi-frame data association with sparse representations for robust near-online multi-object tracking. In: Proceedings of the 2016 European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 774-790

[44] Milan A, Roth S, Schindler K. Continuous energy minimization for multitarget tracking. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(1): 58-72 doi: 10.1109/TPAMI.2013.103

[45] Bae S H, Yoon K J. Confidence-based data association and discriminative deep appearance learning for robust online multi-object tracking. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 40(3): 595-610 doi: 10.1109/TPAMI.2017.2691769

[46] Kim C, Li F X, Ciptadi A, Rehg J M. Multiple hypothesis tracking revisited. In: Proceedings of the 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 4696-4704

[47] Girshick R, Donahue J, Darrell T, Malik J. Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition. Columbus, USA: IEEE, 2014. 580-587
