|

[1]

|

Luo Yue-Jia, Wei Jin-Han. Attentive Research and Cognitive Neuroscience. Beijing:Higher Education Press, 2004. 27-47 http://www.cnki.com.cn/Article/CJFDTOTAL-SYQY201603027.htm

|

|

[2]

|

Pylkkonen J. Towards Efficient and Robust Automatic Speech Recognition:Decoding Techniques and Discriminative Training[Ph.D. dissertation], Aalto University, Finland, 2013

|

|

[3]

|

Liu Wen-Ju, Nie Shuai, Liang Shan, Zhang Xue-Liang. Deep learning based speech separation technology and its developments. Acta Automatica Sinica, 2016, 42(6):819-833 http://www.aas.net.cn/CN/abstract/abstract18873.shtml

|

|

[4]

|

Duan Yan-Jie, Lv Yi-Sheng, Zhang Jie, Zhao Xue-Liang, Wang Fei-Yue. Deep learning for control:the state of the art and prospects. Acta Automatica Sinica, 2016, 42(5):643-654 http://www.aas.net.cn/CN/abstract/abstract18852.shtml

|

|

[5]

|

Kayser C, Petkov C I, Lippert M, Logothetis N K. Mechanisms for allocating auditory attention:an auditory saliency map. Current Biology, 2005, 15(21):1943-1947 doi: 10.1016/j.cub.2005.09.040

|

|

[6]

|

Kalinli O, Narayanan S S. A saliency-based auditory attention model with applications to unsupervised prominent syllable detection in speech. In:Proceedings of the 8th Annual Conference of the International Speech Communication Association. Antwerp, Belgium:Interspeech, 2007. 1941-1944

|

|

[7]

|

De Coensel B, Botteldooren D. A model of saliency-based auditory attention to environmental sound. In:Proceedings of the 20th International Congress on Acoustics. Sydney, Australia:International Congress on Acoustics, 2010. 1-8

|

|

[8]

|

Kaya E M, Elhilali M. A temporal saliency map for modeling auditory attention. In:Proceedings of the 46th Annual Conference on Information Sciences and Systems. Princeton, USA:IEEE, 2012. 1-6

|

|

[9]

|

Liu Yang, Zhang Miao-Hui, Zheng Feng-Bin. Cognitive neural mechanisms and saliency computational model of auditory selective attention. Computer Science, 2013, 40(6):283-287 http://www.cnki.com.cn/Article/CJFDTOTAL-JSJA201306065.htm

|

|

[10]

|

Bizley J K, Cohen Y E. The what, where and how of auditory-object perception. Nature Reviews Neuroscience, 2013, 14(10):693-707 doi: 10.1038/nrn3565

|

|

[11]

|

Roman N, Wang D L, Brown G J. Speech segregation based on sound localization. The Journal of the Acoustical Society of America, 2003, 114(4):2236-2252 doi: 10.1121/1.1610463

|

|

[12]

|

Friederici A D, Singer W. Grounding language processing on basic neurophysiological principles. Trends in Cognitive Sciences, 2015, 19(6):329-338 doi: 10.1016/j.tics.2015.03.012

|

|

[13]

|

Kayser C, Wilson C, Safaai H, Sakata S, Panzeri S. Rhythmic auditory cortex activity at multiple timescales shapes stimulus-response gain and background firing. Journal of Neuroscience, 2015, 35(20):7750-7762 doi: 10.1523/JNEUROSCI.0268-15.2015

|

|

[14]

|

Li Wan-Yi, Wang Peng, Qiao Hong. A survey of visual attention based methods for object tracking. Acta Automatica Sinica, 2014, 40(4):561-576 http://www.aas.net.cn/CN/abstract/abstract18323.shtml

|

|

[15]

|

Henry M J, Herrmann B, Obleser J. Selective attention to temporal features on nested time scales. Cerebral Cortex, 2015, 25(2):450-459 doi: 10.1093/cercor/bht240

|

|

[16]

|

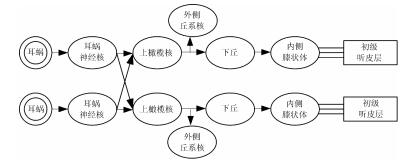

Wang W J, Wu X H, Li L. The dual-pathway model of auditory signal processing. Neuroscience Bulletin, 2008, 24(3):173-182 doi: 10.1007/s12264-008-1226-8

|

|

[17]

|

Qu T, Xiao Z, Gong M. Distance-dependent head-related transfer functions measured with high spatial resolution using a spark gap. IEEE Transactions on Audio, Speech, and Language Processing, 2009, 17(6):1124-1132 doi: 10.1109/TASL.2009.2020532

|

|

[18]

|

Cheng C I, Wakefield G H. Introduction to head-related transfer functions (HRTFs):representations of HRTFs in time, frequency, and space. In:Proceedings of the 107th Convention of the Audio Engineering Society. Ann Arbor, USA:University of Michigan, 2001. 231-248

|

|

[19]

|

Zhang J P, Nakamoto K T, Kitzes L M. Modulation of level response areas and stimulus selectivity of neurons in cat primary auditory cortex. Journal of Neurophysiology, 2005, 94(4):2263-2274 doi: 10.1152/jn.01207.2004

|

|

[20]

|

Jin C, Schenkel M, Carlile S. Neural system identification model of human sound localization. The Journal of the Acoustical Society of America, 2000, 108(3):1215-1235 doi: 10.1121/1.1288411

|

|

[21]

|

Algazi V R, Duda R O, Thompson D M, Avendano C. The CIPIC HRTF database. In:Proceedings of the 2001 IEEE Workshop on the Applications of Signal Processing to Audio and Acoustics. New Paltz, USA:IEEE, 2001. 99-102

|

|

[22]

|

Catic J, Santurette S, Dau T. The role of reverberation-related binaural cues in the externalization of speech. The Journal of the Acoustical Society of America, 2015, 138(2):1154-1167 doi: 10.1121/1.4928132

|

|

[23]

|

Hassager H G, Gran F, Dau T. The role of spectral detail in the binaural transfer function on perceived externalization in a reverberant environment. The Journal of the Acoustical Society of America, 2016, 139(5):2992-3000 doi: 10.1121/1.4950847

|
