Energy Scheduling of Heterogeneous Multi-Microgrid Based on Personalized Federated Reinforcement Learning


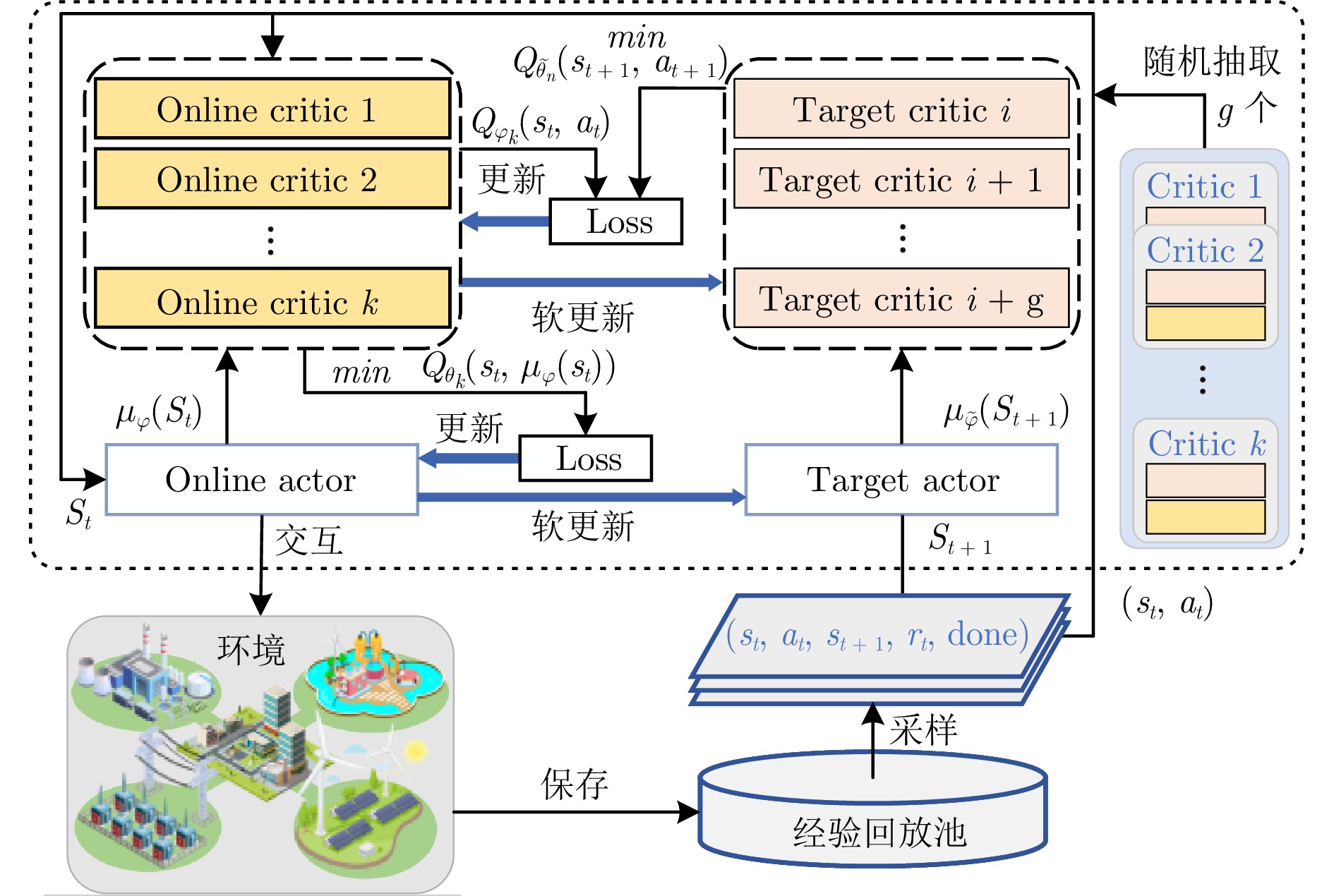

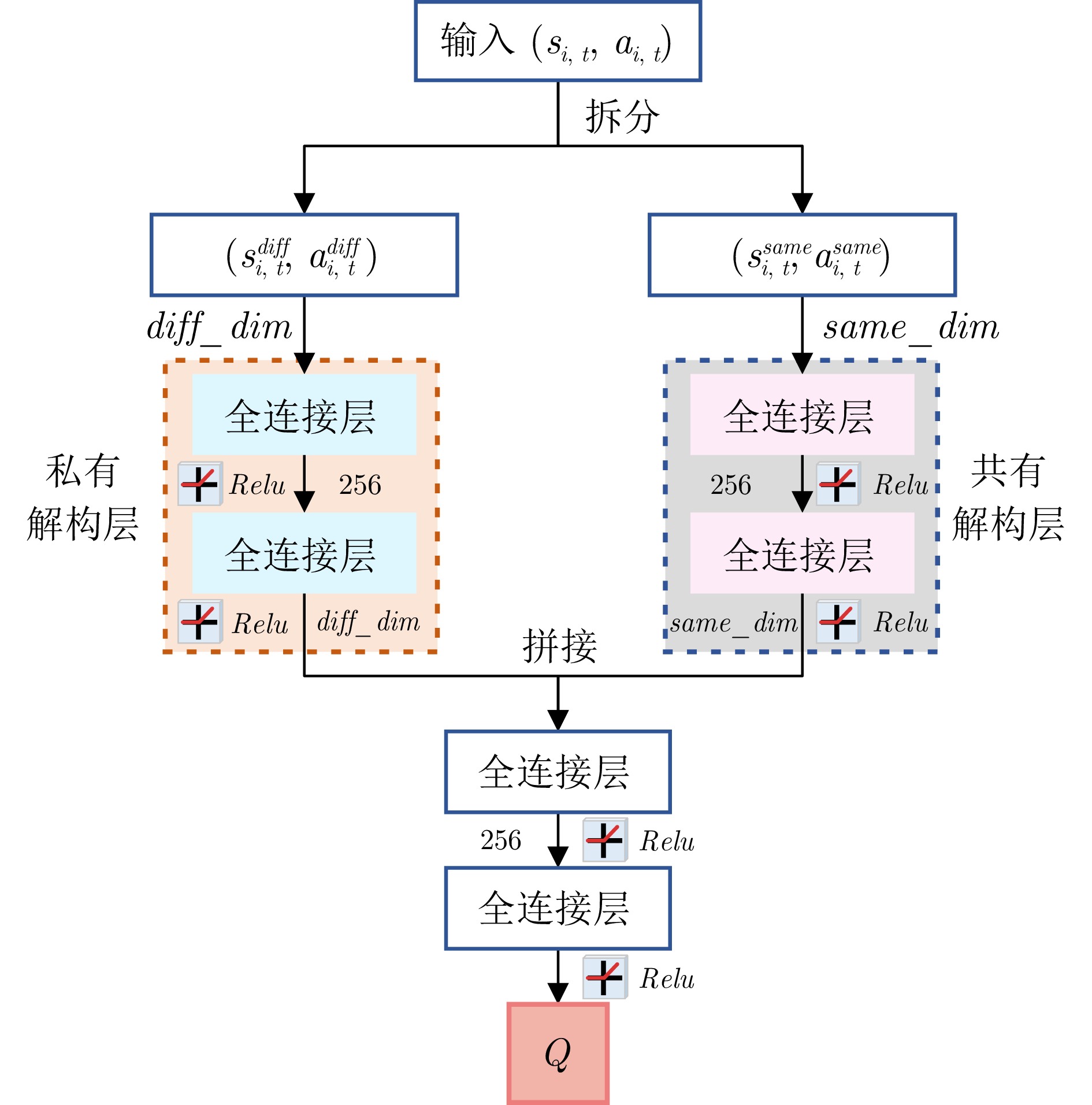

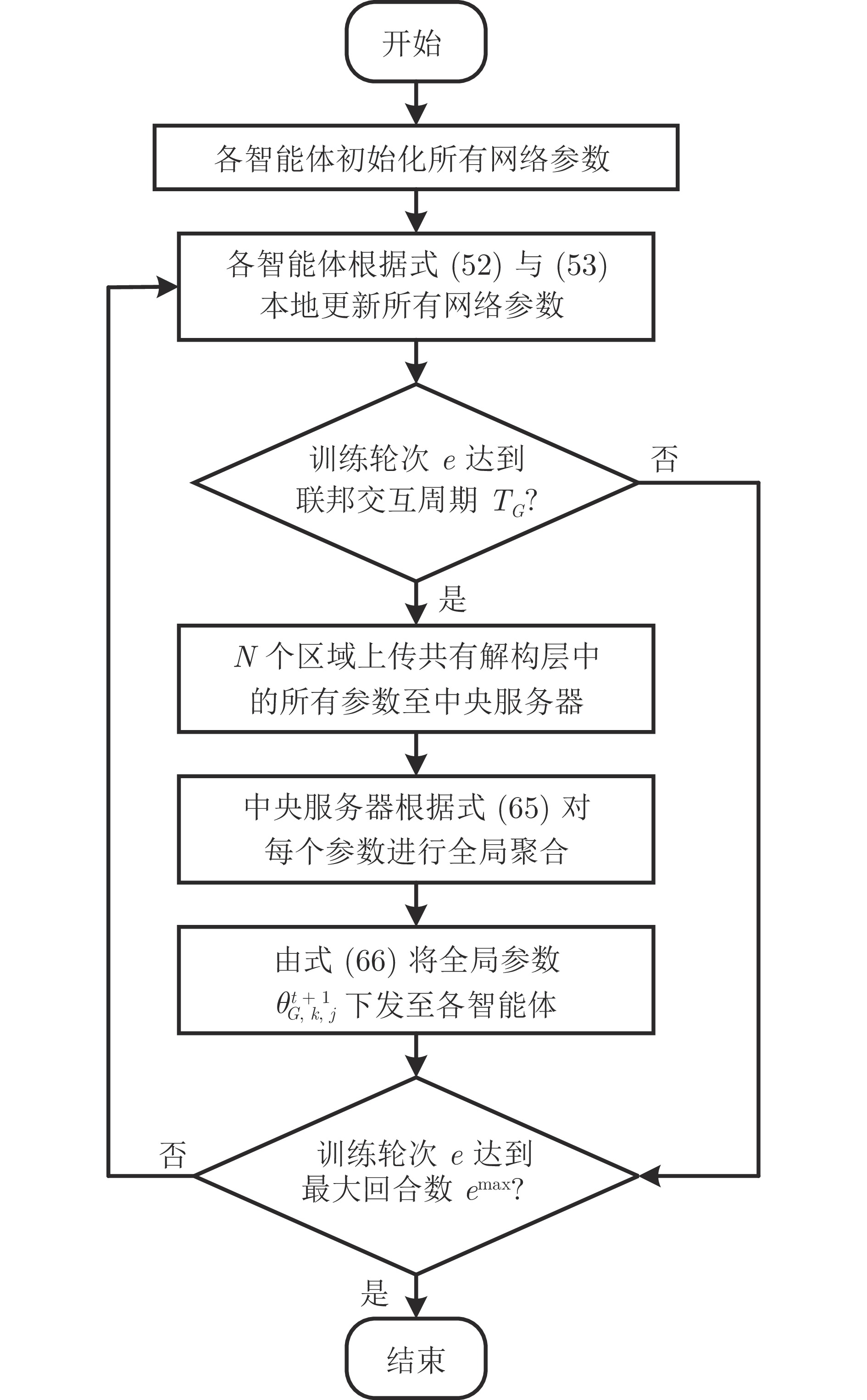

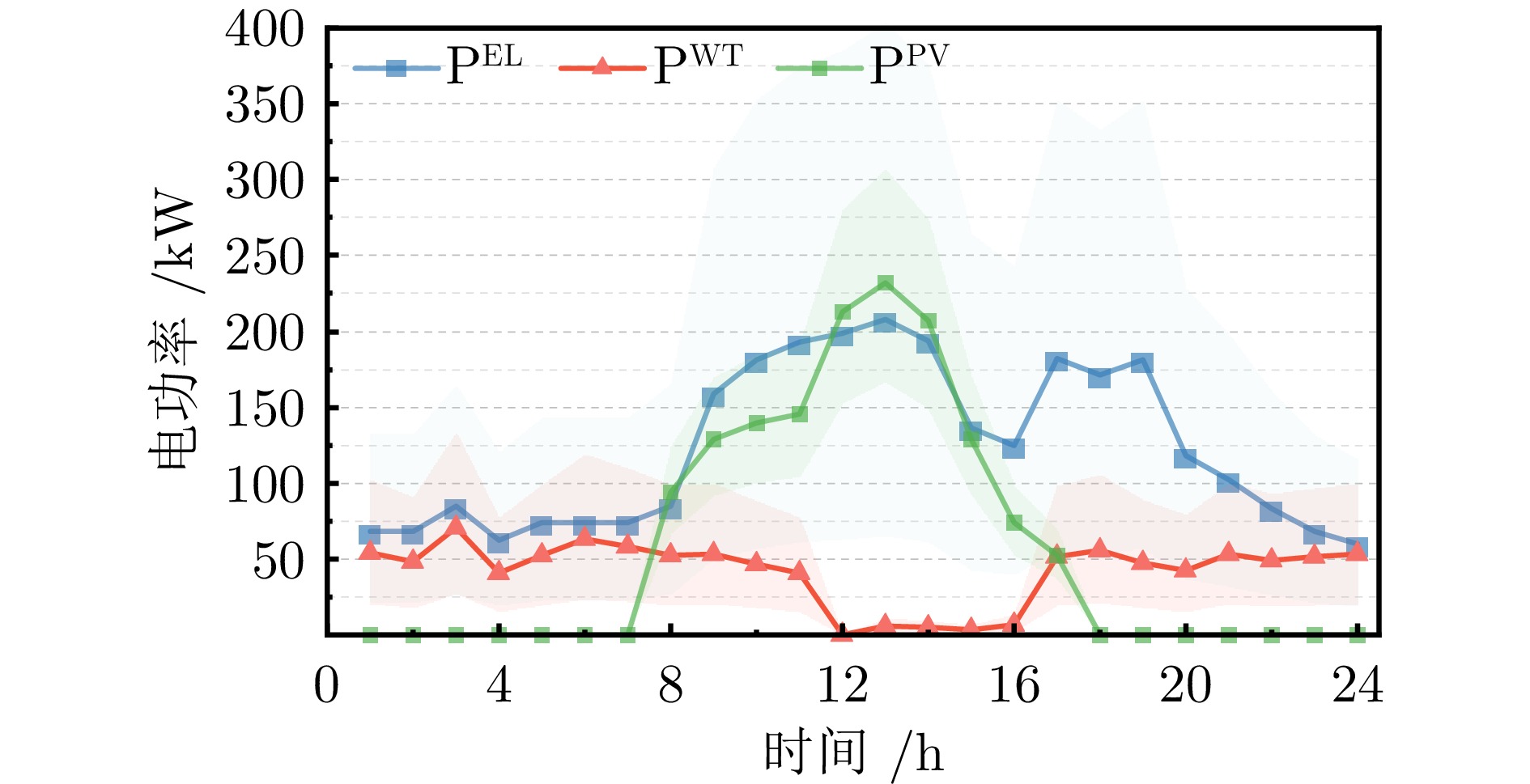

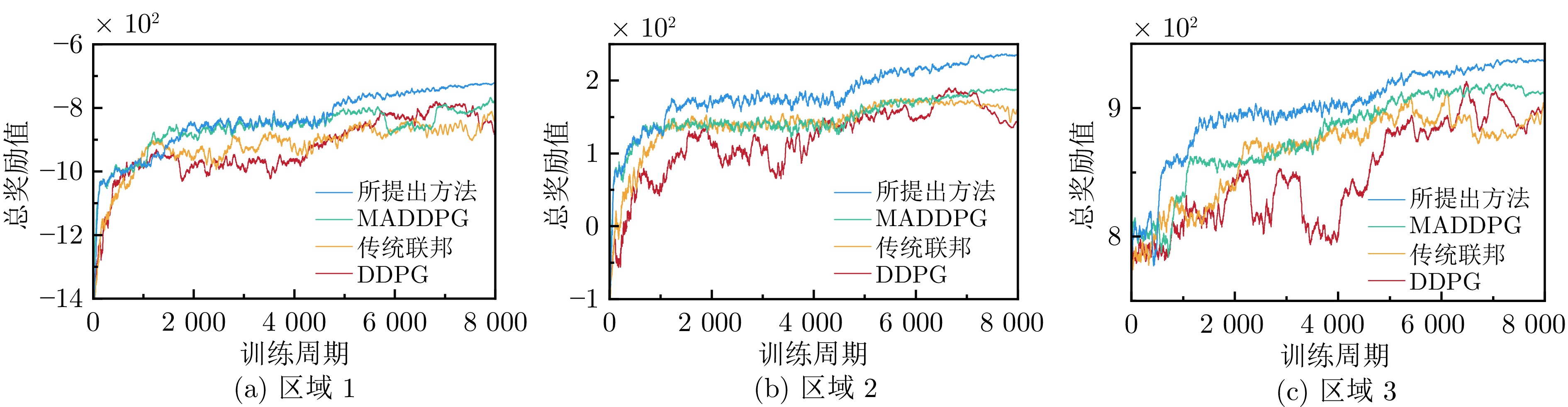

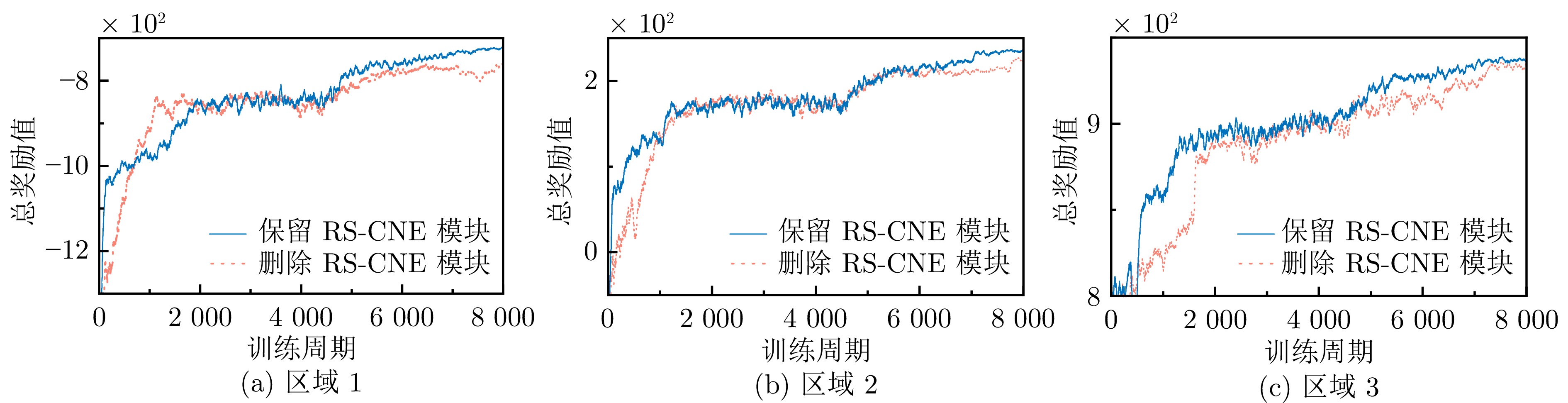

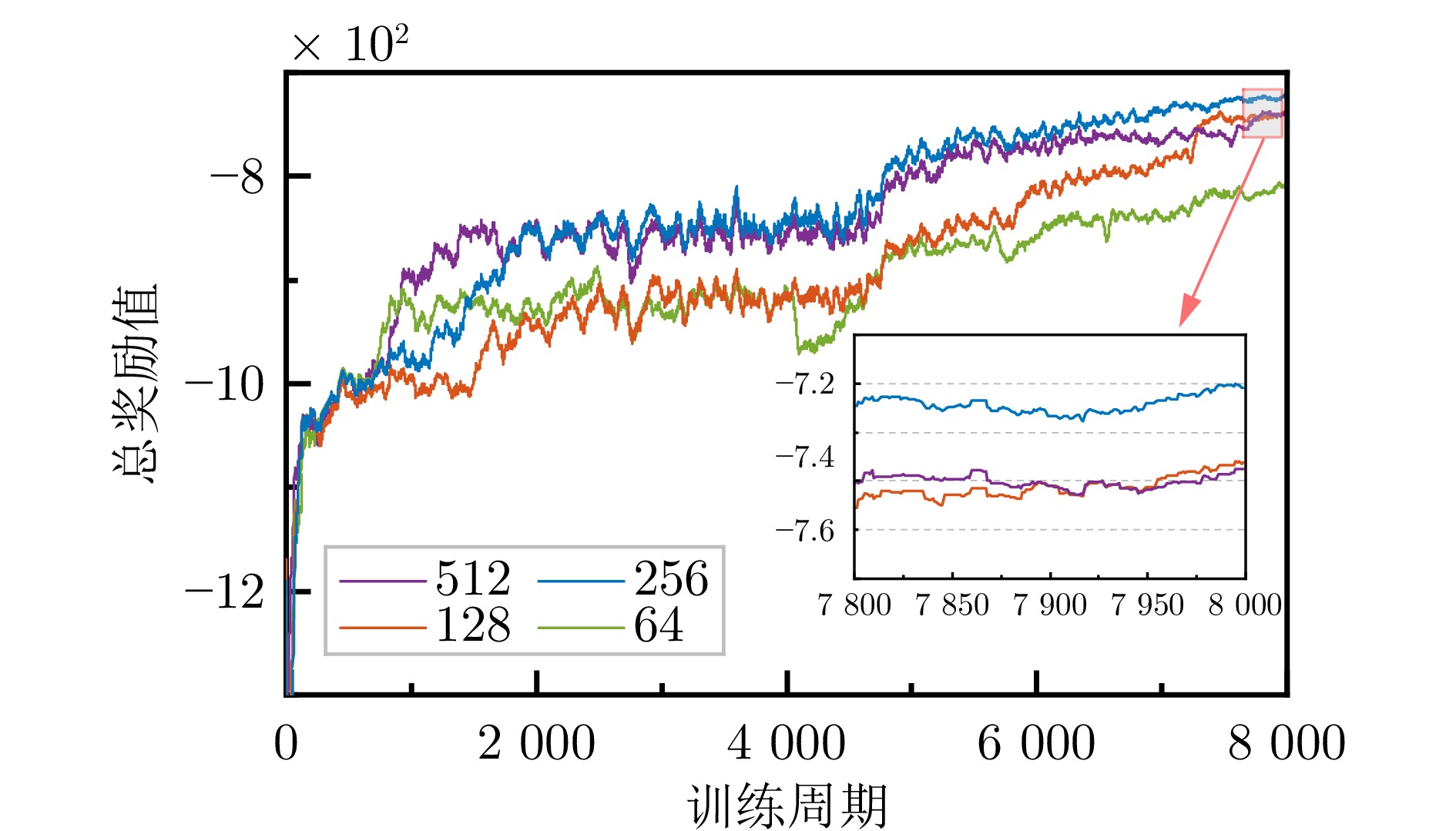

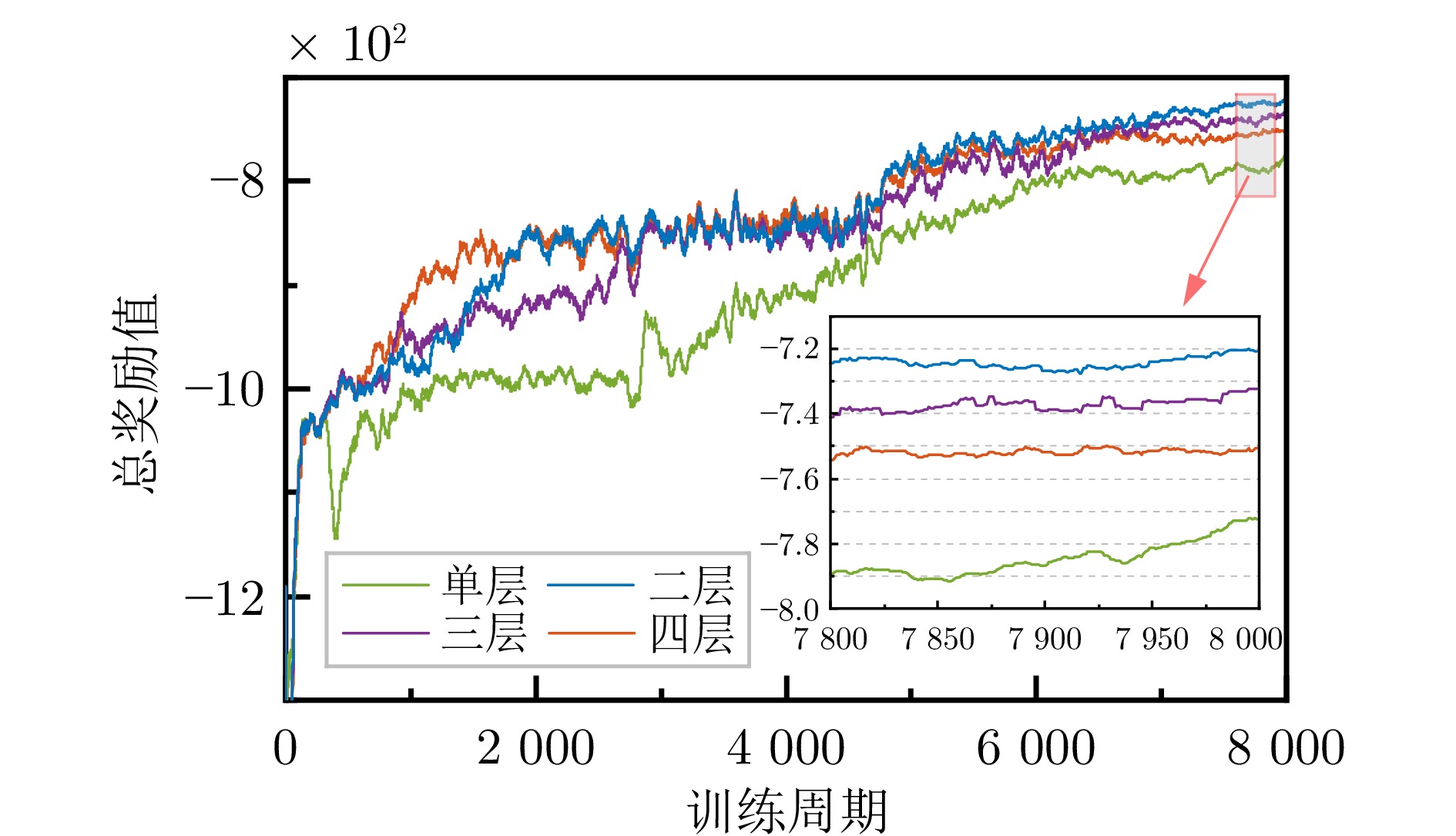

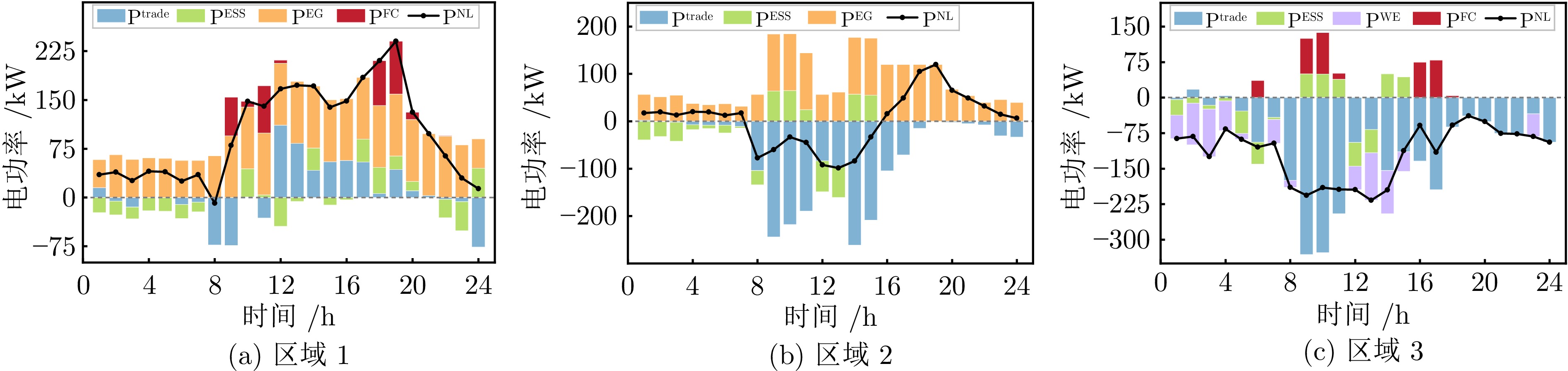

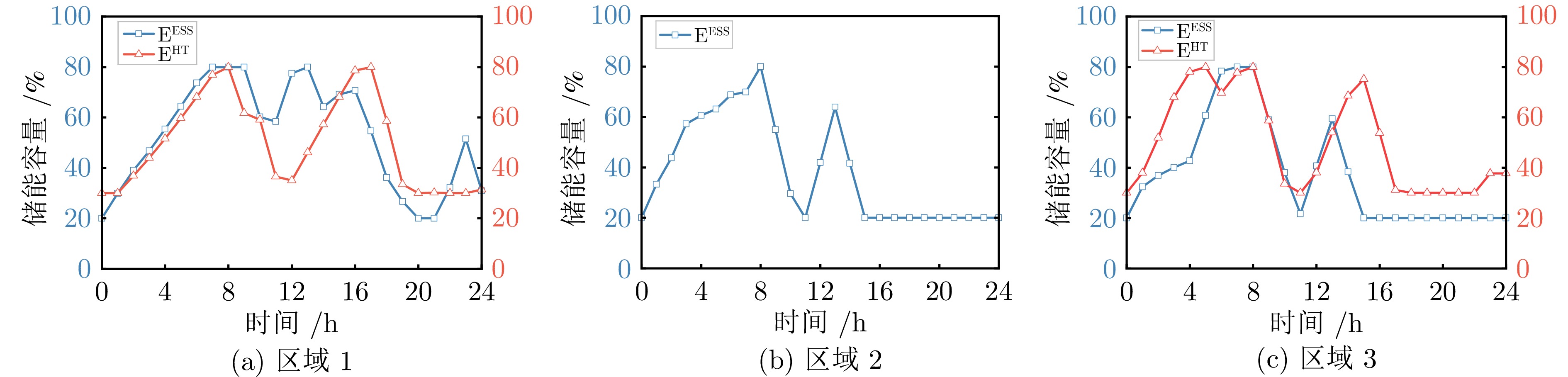

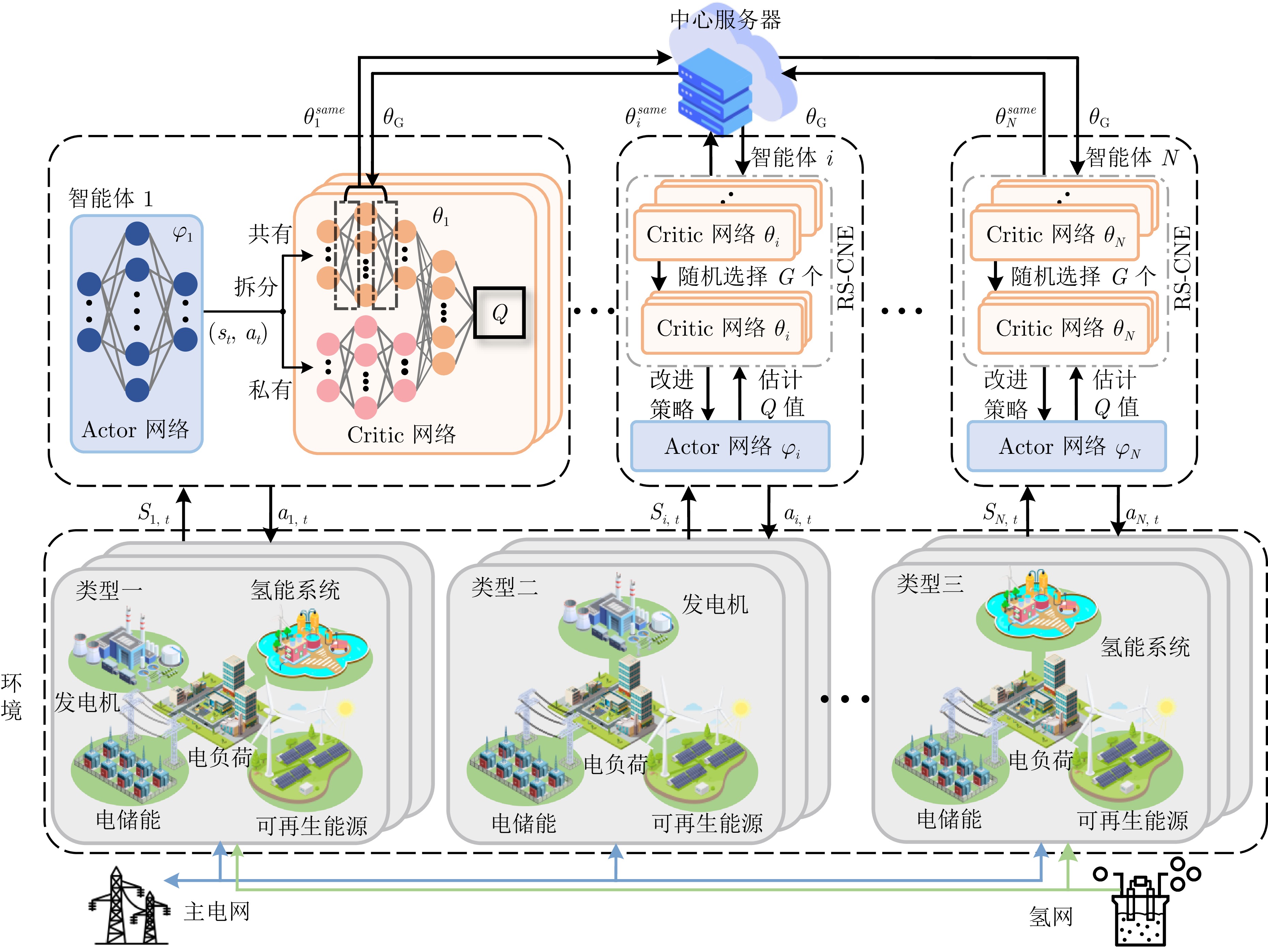

Abstract: To address privacy leakage in multi-agent reinforcement learning and the failure of federated reinforcement learning when devices are heterogeneous across microgrids, this paper proposes an energy scheduling method for heterogeneous multi-region microgrids based on personalized federated reinforcement learning. State-action pairs are split into "private" and "shared" categories and fed into the private and shared deconstruction layers of a modular Critic network, respectively. The federated framework is deployed only on the shared layer, so that network parameters for common devices are synchronized across regions while each region's private devices retain personalized training; collaborative optimization is thus achieved while data privacy is preserved. In addition, a multi-Critic architecture with random sampling is used for local training to mitigate the policy degradation caused by Q-value overestimation. Simulation experiments are carried out on a heterogeneous multi-region microgrid system built from three typical microgrid models. The results show that the method overcomes the limitations imposed by device heterogeneity, lets regional agents converge quickly to near-optimal policies, allocates device output reasonably, and achieves real-time multi-microgrid energy scheduling with improved economic benefits.
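The two mechanisms named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the `shared.` key prefix, the equal-weight averaging, and the use of a minimum over the sampled critics are all assumptions made for illustration, and the helper names `federated_average_shared` and `sampled_min_q` are hypothetical.

```python
import random
from typing import Dict, List

# Hypothetical layout: layer name -> flat list of weights.
Params = Dict[str, List[float]]

# Assumed naming convention marking the shared deconstruction layer.
SHARED_PREFIX = "shared."


def federated_average_shared(local_models: List[Params]) -> List[Params]:
    """Average only shared-layer weights across regions; private layers stay local."""
    n = len(local_models)
    shared_keys = [k for k in local_models[0] if k.startswith(SHARED_PREFIX)]
    # Equal-weight average per shared parameter (an assumption, not from the source).
    averaged = {
        k: [sum(m[k][j] for m in local_models) / n
            for j in range(len(local_models[0][k]))]
        for k in shared_keys
    }
    updated = []
    for m in local_models:
        new_m = {k: list(v) for k, v in m.items()}  # copy, inputs are not mutated
        for k in shared_keys:
            new_m[k] = list(averaged[k])
        updated.append(new_m)
    return updated


def sampled_min_q(q_values: List[float], subset_size: int,
                  rng: random.Random) -> float:
    """Minimum over a random subset of critic estimates, a common guard
    against Q-value overestimation (assumed aggregation rule)."""
    return min(rng.sample(q_values, subset_size))


# Private layers may differ in size between regions; shared layers must match.
regions = [
    {"shared.w": [1.0, 2.0], "private.w": [10.0]},
    {"shared.w": [3.0, 4.0], "private.w": [20.0, 30.0]},
    {"shared.w": [5.0, 6.0], "private.w": [40.0]},
]
synced = federated_average_shared(regions)
print(synced[0]["shared.w"])   # [3.0, 4.0]
print(synced[1]["private.w"])  # [20.0, 30.0]
```

Because only keys under the shared prefix are exchanged, regions with different private device sets never need matching private-layer shapes, which is the property the abstract relies on to handle device heterogeneity.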


Table 1 Operating parameters of regional equipment

| Region | $E_{i,\min}^{\rm ESS}$ | $E_{i,\max}^{\rm ESS}$ | $P_{{\rm ch},i,\max}^{\rm ESS}$ | $P_{{\rm dis},i,\max}^{\rm ESS}$ | $\eta_{{\rm ch},i}^{\rm ESS}$ | $\eta_{{\rm dis},i}^{\rm ESS}$ | $P_{i,\max}^{\rm EG/WE}$ | $R_{i,{\rm down}}^{\rm EG/WE}$ | $R_{i,{\rm up}}^{\rm EG/WE}$ | $a_i^{\rm EG/WE}$ | $b_i^{\rm EG/WE}$ | $c_i^{\rm EG/WE}$ | $\eta_i^{\rm WE}$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Region 1 | 45 | 180 | 45 | 45 | 0.97 | 0.97 | 95 | −45 | 45 | 0.1 | 0.001 | 0.5 | / |
| Region 2 | 55 | 220 | 65 | 65 | 0.93 | 0.93 | 120 | −65 | 65 | 0.1 | 0.001 | 0.5 | / |
| Region 3 | 50 | 200 | 50 | 50 | 0.95 | 0.95 | 100 | −50 | 50 | 0.1 | 0.001 | 0.5 | 0.8 |

| Region | $E_{i,\min}^{\rm HT}$ | $E_{i,\max}^{\rm HT}$ | $P_{{\rm ch},i,\max}^{\rm HT}$ | $P_{{\rm dis},i,\max}^{\rm HT}$ | $\eta_{{\rm ch},i}^{\rm HT}$ | $\eta_{{\rm dis},i}^{\rm HT}$ | $P_{i,\max}^{\rm FC}$ | $R_{i,{\rm down}}^{\rm FC}$ | $R_{i,{\rm up}}^{\rm FC}$ | $\eta_i^{\rm FC}$ | $a_i^{\rm EG}$ | $b_i^{\rm EG}$ | $c_i^{\rm EG}$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Region 1 | 135 | 360 | 50 | 100 | 0.98 | 0.98 | 140 | −70 | 70 | 0.72 | 0.01 | 0.0001 | 0.05 |
| Region 3 | 150 | 400 | 50 | 105 | 0.98 | 0.98 | 150 | −75 | 75 | 0.7 | 0.01 | 0.0001 | 0.05 |
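To show how the ESS columns of Table 1 constrain a storage device, the sketch below applies a common textbook storage update using the Region 1 values. The update rule itself is an assumption, since this excerpt does not state the paper's storage model; the table gives no units, a one-hour step is assumed, and `EssParams` and `ess_step` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class EssParams:
    e_min: float      # E_min^ESS
    e_max: float      # E_max^ESS
    p_ch_max: float   # P_ch,max^ESS
    p_dis_max: float  # P_dis,max^ESS
    eta_ch: float     # charging efficiency
    eta_dis: float    # discharging efficiency


# Region 1 ESS values taken from Table 1.
REGION_1_ESS = EssParams(45, 180, 45, 45, 0.97, 0.97)


def ess_step(e: float, p: float, prm: EssParams, dt: float = 1.0) -> float:
    """Stored energy after one step; p > 0 charges, p < 0 discharges.

    Assumed model: charging stores eta_ch * p * dt, discharging drains
    |p| * dt / eta_dis. The result is clamped to [e_min, e_max].
    """
    # Clip the requested power to the rated charge/discharge limits.
    p = max(-prm.p_dis_max, min(prm.p_ch_max, p))
    if p >= 0:
        e_next = e + prm.eta_ch * p * dt
    else:
        e_next = e + p * dt / prm.eta_dis
    # Keep stored energy inside its admissible range.
    return max(prm.e_min, min(prm.e_max, e_next))


# A 60-unit charge request is clipped to the 45-unit limit before efficiency applies.
print(ess_step(100.0, 60.0, REGION_1_ESS))
# A large discharge from a nearly empty store is clamped at e_min.
print(ess_step(50.0, -45.0, REGION_1_ESS))
```

Clamping the energy after the update is a simplification; a scheduler would more likely limit the power command so that the bound is never violated in the first place.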

Table 2 Time-of-use electricity purchase and sale prices

| Period | 23-8 h | 9-11 h | 12-13 h | 14-19 h |
|---|---|---|---|---|
| Purchase price (CNY/kWh) | 0.3578 | 0.8325 | 0.3578 | 0.8325 |
| Sale price (CNY/kWh) | 0.2 | 0.4125 | 0.2 | 0.4125 |
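The tariff in Table 2 can be turned into a small lookup for costing grid exchange. This is a sketch under stated assumptions: hour labels are read as inclusive, "23-8 h" is taken to wrap past midnight, and hours the table does not list return `None` instead of a guessed price. The names `TOU_TABLE`, `tou_prices`, and `grid_cost` are hypothetical.

```python
from typing import Optional, Tuple

# (first hour, last hour, purchase price, sale price) in CNY/kWh, from Table 2.
# Hour labels are treated as inclusive; "23-8 h" wraps past midnight (assumption).
TOU_TABLE = [
    (23, 8, 0.3578, 0.2),
    (9, 11, 0.8325, 0.4125),
    (12, 13, 0.3578, 0.2),
    (14, 19, 0.8325, 0.4125),
]


def tou_prices(hour: int) -> Optional[Tuple[float, float]]:
    """Return (purchase, sale) price for an hour of day, or None if unlisted."""
    for start, end, buy, sell in TOU_TABLE:
        if start <= end:
            in_period = start <= hour <= end
        else:  # period wraps around midnight
            in_period = hour >= start or hour <= end
        if in_period:
            return buy, sell
    return None


def grid_cost(hour: int, power_kw: float, dt_h: float = 1.0) -> float:
    """Cost of grid exchange: positive power buys, negative power sells.

    A negative return value is revenue from selling to the grid.
    """
    prices = tou_prices(hour)
    if prices is None:
        raise ValueError(f"no tariff listed for hour {hour}")
    buy, sell = prices
    price = buy if power_kw >= 0 else sell
    return price * power_kw * dt_h


print(tou_prices(0))    # (0.3578, 0.2)
print(tou_prices(21))   # None
```

Buying 100 kW for one hour at 10 h is priced at the peak purchase rate, while exporting during 12-13 h earns only the off-peak sale rate, which is the price spread a scheduling agent would exploit with storage.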

