
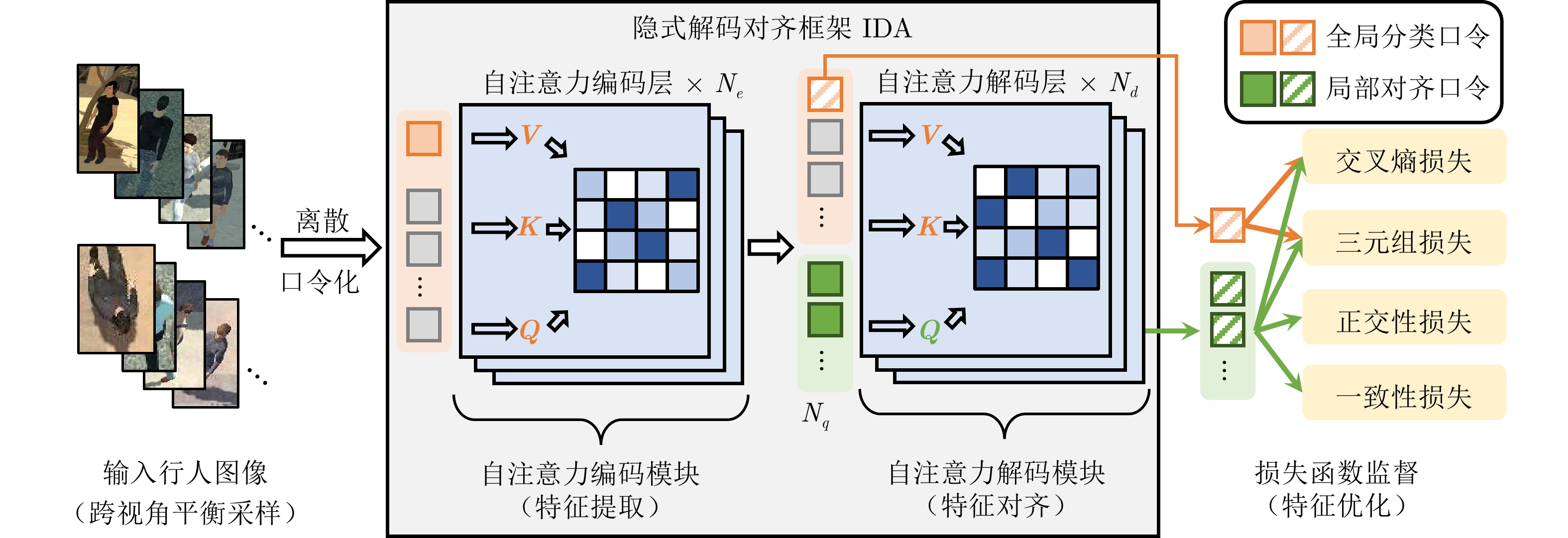

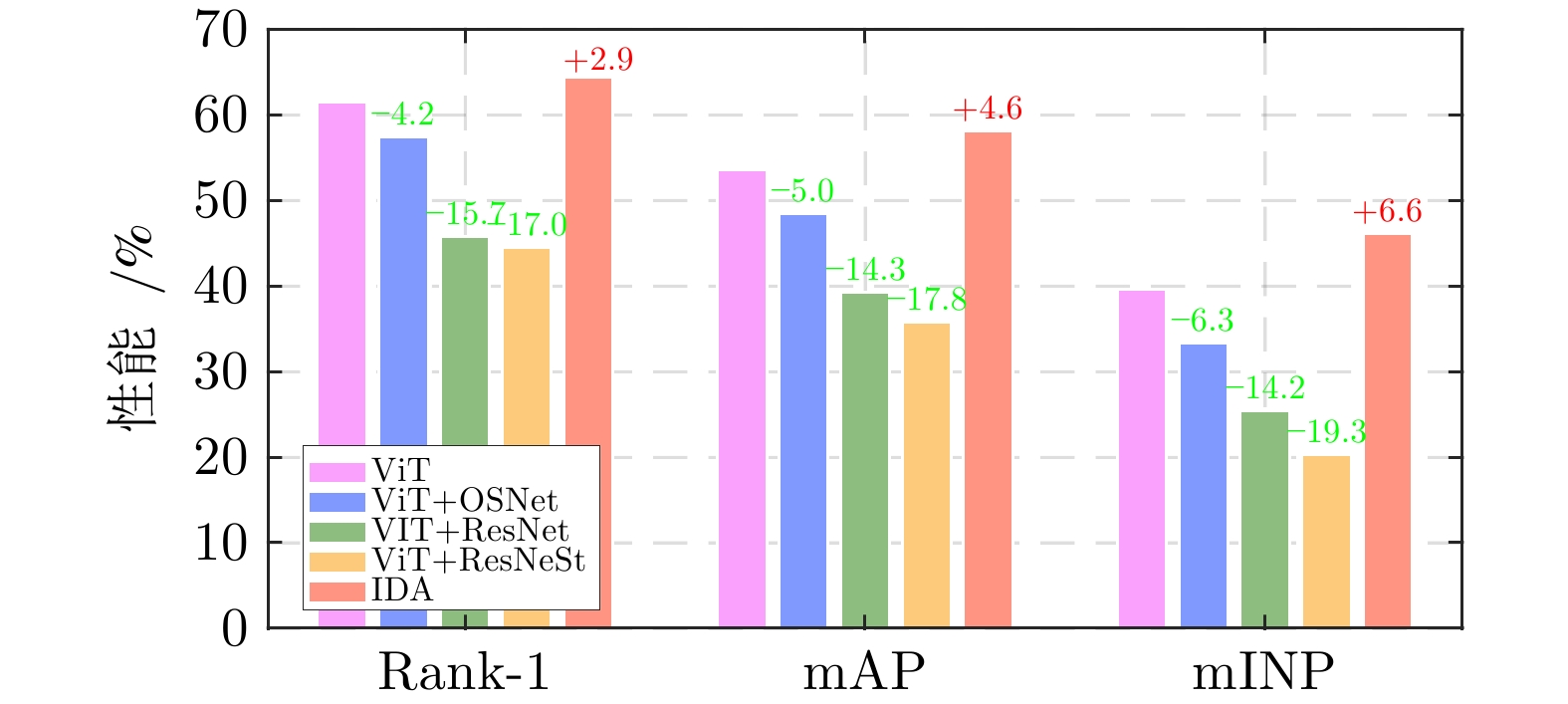

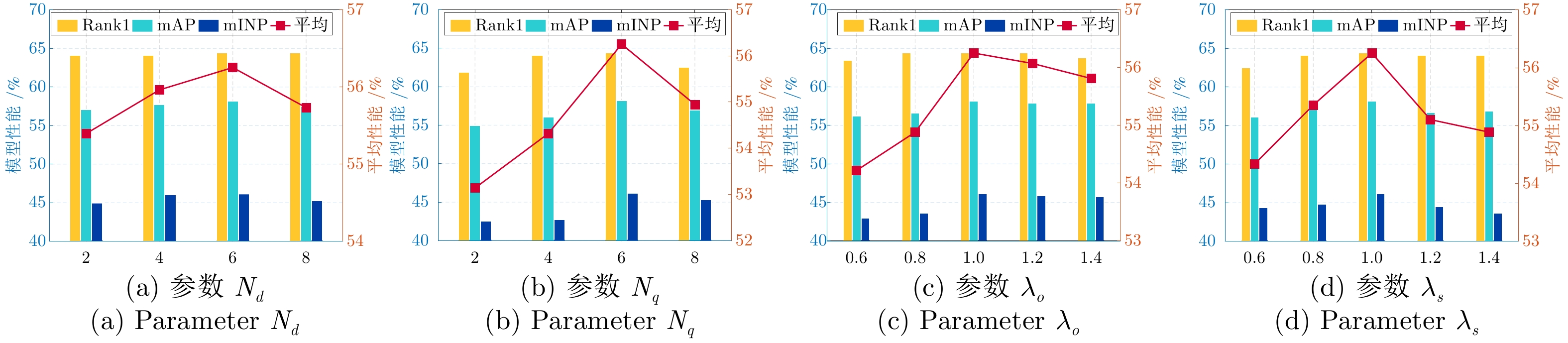

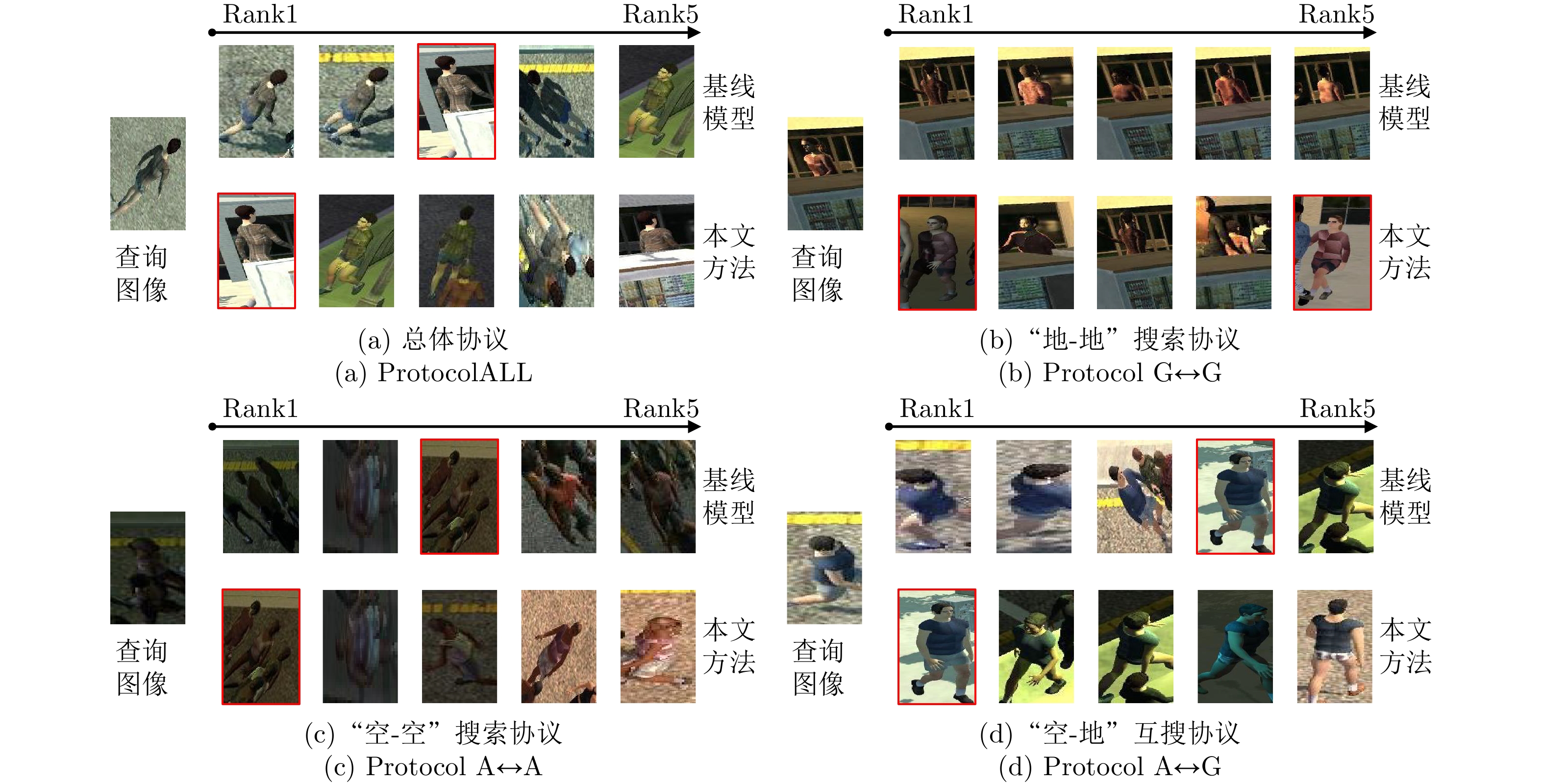

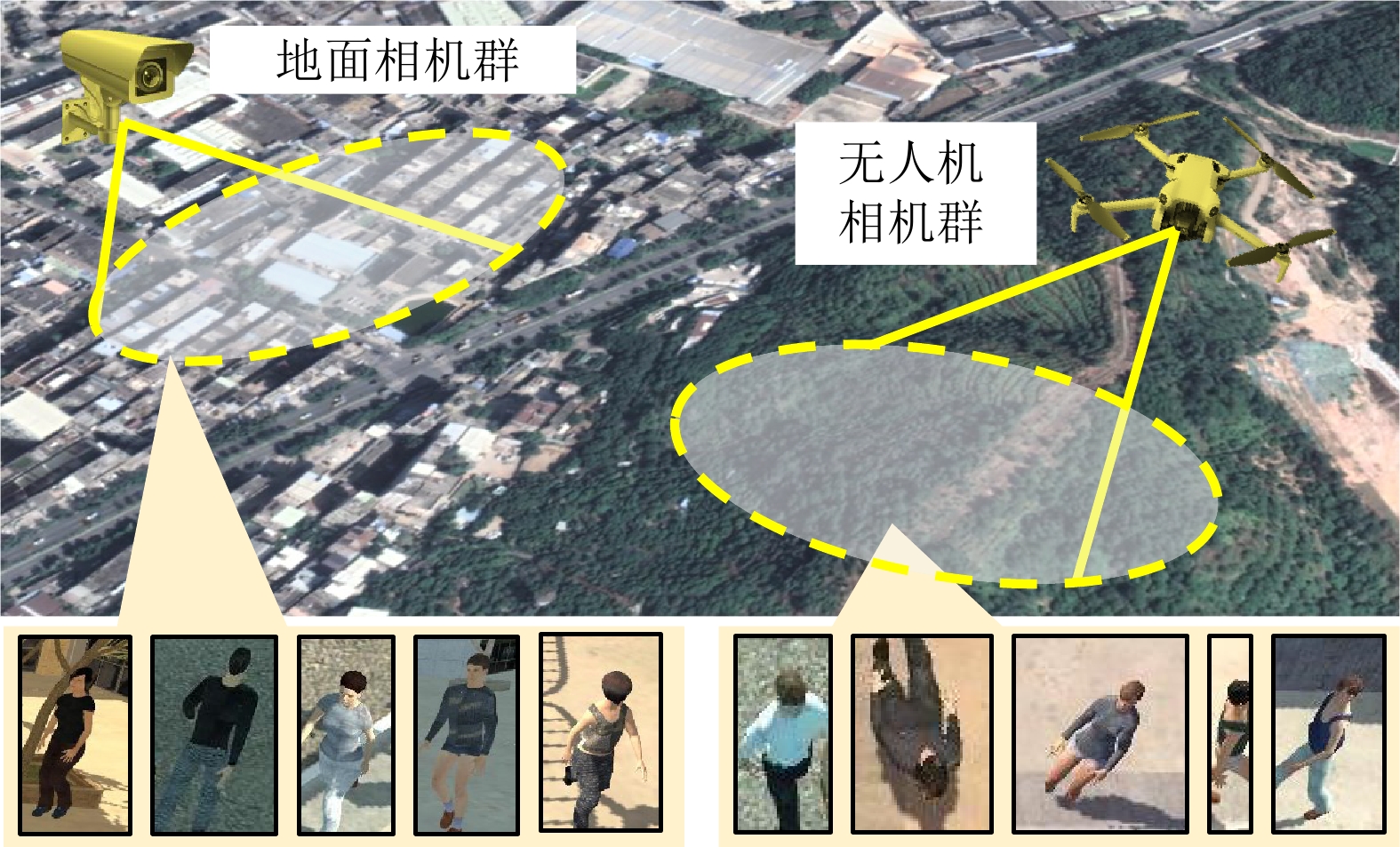

Abstract: Aerial-ground person re-identification (AGPReID) aims to accurately recognize a person of interest and associate that person across cameras in a surveillance network that spans both aerial and ground views. The distinctive challenge of this task is the large viewpoint gap between aerial and ground cameras, which interferes with learning discriminative identity features. Existing methods model person features inadequately because they do not fully exploit cross-view feature alignment to improve recognition and retrieval performance. To address this, we propose implicit decoder alignment (IDA) for AGPReID, with two main contributions. In terms of model design, we propose an implicit alignment framework based on a self-attention decoder. During decoding, the framework uses a set of learnable token features to locate discriminative person parts and to extract and align local person features, which enables discriminative person representation learning. In terms of optimization objectives, we propose an orthogonality loss and a consistency loss. The former constrains the token features to attend to diverse discriminative person parts, while the latter mitigates the biased distribution of cross-view feature representations. Experiments on CARGO, currently the largest publicly available aerial-ground re-identification dataset, show that our method outperforms existing re-identification methods in retrieval performance.
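The orthogonality constraint mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the formula, so the version below is an assumption rather than the authors' definition: it penalizes the squared cosine similarity between every pair of distinct token features, which is one common way to push a set of learnable tokens toward different parts. The function name `orthogonality_loss` and the plain-list input are hypothetical choices made for illustration.

```python
import math


def orthogonality_loss(tokens):
    """Mean squared cosine similarity over all pairs of distinct tokens.

    tokens: list of equal-length feature vectors (one per learnable token).
    Assumed formulation for illustration; the source does not give the formula.
    Returns 0.0 when all tokens are mutually orthogonal and 1.0 when all are
    collinear.
    """
    # L2-normalize each token so that dot products become cosine similarities.
    normed = []
    for t in tokens:
        norm = math.sqrt(sum(x * x for x in t)) or 1.0  # guard against zero vectors
        normed.append([x / norm for x in t])

    n = len(normed)
    if n < 2:
        return 0.0

    # Accumulate squared off-diagonal entries of the Gram matrix.
    total = 0.0
    for i in range(n):
        for j in range(n):
            if i != j:
                cos = sum(a * b for a, b in zip(normed[i], normed[j]))
                total += cos * cos
    return total / (n * (n - 1))
```

Under this assumed form, minimizing the loss drives the token features apart in direction, which matches the stated goal of having each token attend to a different discriminative part.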


Key words:

- Person re-identification

- image retrieval

- image recognition

- Transformer


Table 1 Commonly used symbols and their meanings in our work

| Symbol | Meaning |
| --- | --- |
| $ {\cal{D}},\;{\cal{D}}^{tr},\; {\cal{D}}^{te} $ | Aerial-ground re-identification dataset, training set, test set |
| $ B $ | Batch of data |
| $ x_i,\; y_i,\; v_i $ | Person image, identity label, view label |
| $ {\cal{F}},\;{\cal{F}}_e,\; {\cal{F}}_d $ | Network model, self-attention encoder, self-attention decoder |
| $ {\boldsymbol{Q}},\;{\boldsymbol{K}},\;{\boldsymbol{V}} $ | Query, key, and value of the attention operation |
| $ \theta,\;\theta_e,\; \theta_d $ | Learnable parameters of $ {\cal{F}},\;{\cal{F}}_e,\; {\cal{F}}_d $ |
| $ {\cal{T}}(\cdot) $ | Tokenization of the input |
| $ t_g,\; t_a $ | Learnable token features |
| $ {\boldsymbol{L}} $ | Local token feature matrix |
| $ {\boldsymbol{S}}_{g\leftrightarrow g},\; {\boldsymbol{S}}_{a\leftrightarrow a},\;{\boldsymbol{S}}_{a\leftrightarrow g} $ | Similarity matrices |
| $ {\cal{L}}_g^c,\; {\cal{L}}_g^t $ | Global feature loss functions |
| $ {\cal{L}}_a^c,\; {\cal{L}}_a^t,\; {\cal{L}}_a^o,\; {\cal{L}}_a^s $ | Local feature loss functions |
| $ \left\vert\cdot\right\vert $ | Cardinality of a set |

Table 2 Performance of mainstream methods under the four protocols of the CARGO dataset. Rank1, mAP, and mINP (%) are reported. The best results are shown in bold

| Method | ALL Rank1 | ALL mAP | ALL mINP | G$ \leftrightarrow $G Rank1 | G$ \leftrightarrow $G mAP | G$ \leftrightarrow $G mINP | A$ \leftrightarrow $A Rank1 | A$ \leftrightarrow $A mAP | A$ \leftrightarrow $A mINP | A$ \leftrightarrow $G Rank1 | A$ \leftrightarrow $G mAP | A$ \leftrightarrow $G mINP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PCB[25, 26] | 44.23 | 38.15 | 26.14 | 72.32 | 61.92 | 45.72 | 57.50 | 42.34 | 22.50 | 21.25 | 21.02 | 14.22 |
| SBS[20] | 50.32 | 43.09 | 29.76 | 73.21 | 62.99 | 48.24 | 67.50 | 49.73 | 29.32 | 31.25 | 29.00 | 18.71 |
| BoT[33] | 54.81 | 46.49 | 32.40 | 77.68 | 66.47 | 51.34 | 65.00 | 49.79 | 29.82 | 36.25 | 32.56 | 21.46 |
| MGN[31] | 54.49 | 46.58 | 33.55 | 82.14 | 69.31 | 53.60 | 65.00 | 48.86 | 27.42 | 32.50 | 30.44 | 21.53 |
| APNet[32] | 58.97 | 50.24 | 35.76 | 77.68 | 66.83 | 51.85 | 67.50 | 54.57 | 37.35 | 44.37 | 39.35 | 26.76 |
| VV[56] | 45.83 | 38.84 | 39.57 | 72.31 | 62.99 | 48.24 | 67.50 | 49.73 | 29.32 | 31.25 | 29.00 | 18.71 |
| AGW[2] | 60.26 | 53.44 | 40.22 | 81.25 | 71.66 | 58.09 | 67.50 | 56.48 | 40.40 | 43.57 | 40.90 | 29.39 |
| TransReID[35] | 60.90 | 53.17 | 39.57 | - | - | - | - | - | - | - | - | - |
| VDT[51] | 64.10 | 55.20 | 41.13 | 82.14 | 71.59 | 58.39 | **82.50** | 66.83 | 50.22 | 48.12 | 42.76 | 29.95 |
| Baseline | 61.54 | 53.54 | 39.62 | 82.14 | 71.34 | 57.55 | 80.00 | 64.47 | 47.07 | 43.13 | 40.11 | 28.20 |
| IDA | **64.42** | **58.17** | **46.17** | **83.04** | **77.04** | **67.50** | **82.50** | **69.65** | **54.58** | **48.75** | **45.13** | **33.92** |

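Table 3 Ablation study of the IDA framework. Rank1, mAP, and mINP (%) are reported. The best results are shown in bold

| $ {\cal{F}}(\cdot) $, $ {\cal{L}}_a^o $, $ {\cal{L}}_a^s $ | ALL Rank1 | ALL mAP | ALL mINP | G$ \leftrightarrow $G Rank1 | G$ \leftrightarrow $G mAP | G$ \leftrightarrow $G mINP | A$ \leftrightarrow $A Rank1 | A$ \leftrightarrow $A mAP | A$ \leftrightarrow $A mINP | A$ \leftrightarrow $G Rank1 | A$ \leftrightarrow $G mAP | A$ \leftrightarrow $G mINP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| $\checkmark$ | 60.26 | 53.89 | 41.36 | 81.25 | 73.66 | 62.70 | 75.00 | 63.94 | 47.10 | 43.75 | 40.33 | 28.71 |
| $\checkmark$ $\checkmark$ | 63.78 | 57.55 | 45.69 | **83.93** | **77.33** | **68.21** | 77.50 | 64.55 | 47.52 | 46.25 | 43.97 | 32.88 |
| $\checkmark$ $\checkmark$ | 61.22 | 55.76 | 44.22 | 82.14 | 75.53 | 66.28 | 80.00 | 69.36 | **55.21** | 44.37 | 41.82 | 30.88 |
| $\checkmark$ $\checkmark$ $\checkmark$ | **64.42** | **58.17** | **46.17** | 83.04 | 77.04 | 67.50 | **82.50** | **69.65** | 54.58 | **48.75** | **45.13** | **33.92** |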
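The three metrics reported in Tables 2 and 3 can be made concrete with a short sketch. It assumes the usual retrieval definitions: Rank1 checks whether the top-ranked gallery item is a correct match, average precision averages the precision at each correct match, and the inverse negative penalty (INP) divides the number of correct matches by the rank of the hardest (last) correct match, following the definition in the survey cited as [18]. The function names and the 0/1 list input are hypothetical, and the toy queries are not taken from CARGO.

```python
def query_metrics(matches):
    """Return (Rank1, AP, INP) for a single query.

    matches: list of 0/1 flags over the gallery, already sorted by
    descending similarity to the query (1 = same identity).
    """
    hit_ranks = [rank for rank, m in enumerate(matches, start=1) if m]
    if not hit_ranks:
        raise ValueError("query has no correct match in the gallery")

    rank1 = 1.0 if matches[0] else 0.0
    # Precision at the k-th correct match is k / (rank of that match).
    ap = sum(k / rank for k, rank in enumerate(hit_ranks, start=1)) / len(hit_ranks)
    # INP: number of correct matches over the rank of the last correct match.
    inp = len(hit_ranks) / hit_ranks[-1]
    return rank1, ap, inp


def evaluate(all_matches):
    """Average the per-query metrics and report them in percent."""
    per_query = [query_metrics(m) for m in all_matches]
    n = len(per_query)
    return tuple(100.0 * sum(q[i] for q in per_query) / n for i in range(3))


if __name__ == "__main__":
    # Two toy queries over a four-image gallery.
    rank1, mean_ap, mean_inp = evaluate([[1, 0, 1, 0], [0, 1, 0, 0]])
    print(f"Rank1={rank1:.2f} mAP={mean_ap:.2f} mINP={mean_inp:.2f}")
```

Because INP depends on the hardest correct match, mINP drops sharply when even one true match is ranked late, which is why it can separate methods whose Rank1 values are close.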

[1] Ye Yu, Wang Zheng, Liang Chao, Han Zhen, Chen Jun, Hu Rui-Min. A survey on multi-source person re-identification. Acta Automatica Sinica, 2020, 46(9): 1869−1884

[2] Ye M., Chen S., Li C., Zheng WS., Crandall D., Du B. Transformer for object re-identification: A survey. International Journal of Computer Vision, 2024, 1−31

[3] Zhang Q., Lai J., Xie X., Jin X., Huang S. Separable Spatial-Temporal Residual Graph for Cloth-Changing Group Re-Identification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(8): 5791−5805 doi: 10.1109/TPAMI.2024.3369483

[4] Zhang Q., Lai J., Feng Z., Xie X. Uncertainty Modeling for Group Re-Identification. International Journal of Computer Vision, 2024, 132: 3046−3066 doi: 10.1007/s11263-024-02013-x

[5] Luo Hao, Jiang Wei, Fan Xing, Zhang Si-Peng. A Survey on Deep Learning Based Person Re-identification. Acta Automatica Sinica, 2019, 45(11): 2032−2049

[6] Zhang Yongfei, Yang Hangyuan, Zhang Yujia, Dou Zhaopeng, Liao Shengcai, Zheng Weishi, et al. Recent progress in person re-ID. Journal of Image and Graphics, 2023, 28(06): 1829−1862 doi: 10.11834/jig.230022

[7] Sarker P. K., Zhao Q., Uddin M. K. Transformer-Based Person Re-Identification: A Comprehensive Review. IEEE Transactions on Intelligent Vehicles, 2024, 9(7): 5222−5239 doi: 10.1109/TIV.2024.3350669

[8] Yang X., Liu H., Wang N., Gao X. Image-Level Adaptive Adversarial Ranking for Person Re-Identification. IEEE Transactions on Image Processing, 2024, 33: 5172−5182 doi: 10.1109/TIP.2024.3456000

[9] Cui Z., Zhou J., Peng Y. DMA: Dual Modality-Aware Alignment for Visible-Infrared Person Re-Identification. IEEE Transactions on Information Forensics and Security, 2024, 19: 2696−2708 doi: 10.1109/TIFS.2024.3352408

[10] He W., Deng Y., Tang S., et al. Instruct-ReID: A Multi-purpose Person Re-identification Task with Instructions. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, 17521−17531

[11] Ye M., Shen W., Zhang J., Yang Y., Du B. SecureReID: Privacy-Preserving Anonymization for Person Re-Identification. IEEE Transactions on Information Forensics and Security, 2024, 19: 2840−2853 doi: 10.1109/TIFS.2024.3356233

[12] Zhang Q., Lai J., Feng Z., Xie X. Seeing Like a Human: Asynchronous Learning With Dynamic Progressive Refinement for Person Re-Identification. IEEE Transactions on Image Processing, 2022, 31: 352−365 doi: 10.1109/TIP.2021.3128330

[13] Nguyen H., Nguyen K., Sridharan S., Fookes C. Aerial-Ground Person Re-ID. IEEE International Conference on Multimedia and Expo, 2023, 2585−2590

[14] Wang L., Zhang Q., Qiu J., Lai J. Rotation Exploration Transformer for Aerial Person Re-identification. IEEE International Conference on Multimedia and Expo, 2024, 1−6

[15] Qiu J., Feng Z., Wang L., Lai J. Salient Part-Aligned and Keypoint Disentangling Transformer for Person Re-Identification in Aerial Imagery. IEEE International Conference on Multimedia and Expo, 2024, 1−6

[16] Khaldi K., Nguyen V. D., Mantini P., Shah S. Unsupervised Person Re-Identification in Aerial Imagery. IEEE/CVF Winter Conference on Applications of Computer Vision, 2024, 260−269

[17] Zhang S., Zhang Q., Yang Y., Wei X., Wang P., Jiao B., Zhang Y. Person Re-Identification in Aerial Imagery. IEEE Transactions on Multimedia, 2020, 23: 281−291

[18] Ye M., Shen J., Lin G., Xiang T., Shao L., Hoi S. C. H. Deep Learning for Person Re-Identification: A Survey and Outlook. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(6): 2872−2893 doi: 10.1109/TPAMI.2021.3054775

[19] Luo H., Gu Y., Liao X., Lai S., Jiang W. Bag of Tricks and a Strong Baseline for Deep Person Re-Identification. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2019, 1−9

[20] He L., Liao X., Liu W., Liu X., Cheng P., Mei T. FastReID: A PyTorch Toolbox for General Instance Re-Identification. ACM International Conference on Multimedia, 2023, 9664−9667

[21] Farenzena M., Bazzani L., Perina A., Murino V., Cristani M. Person Re-Identification by Symmetry-Driven Accumulation of Local Features. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2010, 2360−2367

[22] Gheissari N., Sebastian T. B., Hartley R. Person Reidentification Using Spatiotemporal Appearance. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2006, 1528−1535

[23] Layne R., Hospedales T. M., Gong S., Mary Q. Person Re-Identification by Attributes. British Machine Vision Conference, 2012, 2(3): 1−11

[24] Liu C., Gong S., Loy C. C., Lin X. Person Re-Identification: What Features Are Important? European Conference on Computer Vision Workshops, 2012, 391−401

[25] Sun Y., Zheng L., Yang Y., Tian Q., Wang S. Beyond Part Models: Person Retrieval with Refined Part Pooling (and a Strong Convolutional Baseline). Proceedings of the European Conference on Computer Vision, 2018, 480−496

[26] Sun Y., Zheng L., Li Y., Yang Y., Tian Q., Wang S. Learning Part-Based Convolutional Features for Person Re-Identification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(3): 902−917 doi: 10.1109/TPAMI.2019.2938523

[27] Zhou K., Yang Y., Cavallaro A., Xiang T. Omni-Scale Feature Learning for Person Re-Identification. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, 3702−3712

[28] Zhou K., Yang Y., Cavallaro A., Xiang T. Learning Generalisable Omni-Scale Representations for Person Re-Identification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(9): 5056−5069 doi: 10.1109/TPAMI.2021.3069237

[29] He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. IEEE Conference on Computer Vision and Pattern Recognition, 2016, 770−778

[30] Zhang H., Wu C., Zhang Z., Zhu Y., Lin H., Zhang Z., Sun Y., He T., Mueller J., Manmatha R., Li M. ResneSt: Split-attention networks. 2022: 2736−2746

[31] Wang G., Yuan Y., Chen X., Li J., Zhou X. Learning Discriminative Features with Multiple Granularities for Person Re-Identification. ACM International Conference on Multimedia, 2018, 274−282

[32] Chen G., Gu T., Lu J., Bao JA., Zhou J. Person re-identification via attention pyramid. IEEE Transactions on Image Processing, 2021, 30: 7663−76 doi: 10.1109/TIP.2021.3107211

[33] Luo H., Gu Y., Liao X., Lai S., Jiang W. Bag of Tricks and a Strong Baseline for Deep Person Re-Identification. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2019, 1−9

[34] Luo H., Jiang W., Zhang X., Fan X., Qian J., Zhang C. AlignedReID++: Dynamically Matching Local Information for Person Re-Identification. Pattern Recognition, 2019, 94: 53−61 doi: 10.1016/j.patcog.2019.05.028

[35] He S., Luo H., Wang P., Wang F., Li H., Jiang W. TransReID: Transformer-Based Object Re-Identification. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, 15013−15022

[36] Yang Q., Chen Y., Peng D., Peng X., Zhou J. T., Hu P. Noisy-correspondence learning for text-to-image person re-identification. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, 27197−27206

[37] Zhu J., Wu H., Chen Y., Xu H., Fu Y., Zeng H., Liu L., Lei Z. Cross-Modal Group-Relation Optimization for Visible–Infrared Person Re-Identification. Neural Networks, 2024, 179: 106576 doi: 10.1016/j.neunet.2024.106576

[38] Zhu H., Budhwant P., Zheng Z., Nevatia R. SEAS: ShapE-Aligned Supervision for Person Re-Identification. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, 164−174

[39] Bai S., Chang H., Ma B. Incorporating Texture and Silhouette for Video-Based Person Re-Identification. Pattern Recognition, 2024, 156: 110759 doi: 10.1016/j.patcog.2024.110759

[40] Yu Z., Li L., Xie J., Wang C., Li W., Ning X. Pedestrian 3D Shape Understanding for Person Re-Identification via Multi-View Learning. IEEE Transactions on Circuits and Systems for Video Technology, 2024, 34(7): 5589−5602 doi: 10.1109/TCSVT.2024.3358850

[41] Yang B., Chen J., Ye M. Shallow-Deep Collaborative Learning for Unsupervised Visible-Infrared Person Re-Identification. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, 16870−16879

[42] Nguyen V. D., Khaldi K., Nguyen D., Mantini P., Shah S. Unsupervised person re-identification in aerial imagery. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2024, 1041−1049

[43] Liu M., Wang F., Wang X., Wang Y., Roy-Chowdhury A. K. A Two-Stage Noise-Tolerant Paradigm for Label Corrupted Person Re-Identification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(7): 4944−4956 doi: 10.1109/TPAMI.2024.3361491

[44] Huang Y., Wu Q., Zhang Z., Shan C., Zhong Y., Wang L. Meta Clothing Status Calibration for Long-Term Person Re-Identification. IEEE Transactions on Image Processing, 2024, 33: 2334−2346 doi: 10.1109/TIP.2024.3374634

[45] Rami H., Giraldo J. H., Winckler N., Lathuilière S. Source-guided similarity preservation for online person re-identification. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2024, 1711−1720

[46] Nguyen V. D., Mantini P., Shah S. K. Temporal 3D Shape Modeling for Video-based Cloth-Changing Person Re-Identification. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision Workshops, 2024, 173−182

[47] Liu X., Zhang P., Yu C., Qian X., Yang X., Lu H. A Video Is Worth Three Views: Trigeminal Transformers for Video-Based Person Re-Identification. IEEE Transactions on Intelligent Transportation Systems, 2024, 25(9): 12818−12828 doi: 10.1109/TITS.2024.3386914

[48] Zhang S., Zhang Q., Yang Y., Wei X., Wang P., Jiao B., Zhang Y. Person Re-Identification in Aerial Imagery. IEEE Transactions on Multimedia, 2020, 23: 281−291

[49] Li T., Liu J., Zhang W., Ni Y., Wang W., Li Z. UAV-Human: A Large Benchmark for Human Behavior Understanding with Unmanned Aerial Vehicles. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, 16266−16275

[50] Nguyen H., Nguyen K., Sridharan S., Fookes C. Aerial-Ground Person Re-ID. IEEE International Conference on Multimedia and Expo, 2023, 2585−2590

[51] Zhang Q., Wang L., Patel V. M., Xie X., Lai J. View-decoupled Transformer for Person Re-identification under Aerial-ground Camera Network. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, 22000−22009

[52] Vaswani A., Shazeer N., Parmar N., Uszkoreit J., Jones L., Gomez A. N., Kaiser L., Polosukhin I. Attention is All You Need. Advances in Neural Information Processing Systems, 2017, 30: 1−11

[53] Dosovitskiy A., Beyer L., Kolesnikov A., Weissenborn D., Zhai X., Unterthiner T., Dehghani M., Minderer M., Heigold G., Gelly S., Uszkoreit J., Houlsby N. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. International Conference on Learning Representations, 2021, 1−21

[54] Wu J., Liu H., Su Y., Shi W., Tang H. Learning Concordant Attention via Target-Aware Alignment for Visible-Infrared Person Re-Identification. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, 11122−11131

[55] Chen S., Ye M., Du B. Rotation Invariant Transformer for Recognizing Object in UAVs. Proceedings of the 30th ACM International Conference on Multimedia, 2022, 2565−2574

[56] Kumar R., Weill E., Aghdasi F., Sriram P. Vehicle Re-Identification: An Efficient Baseline Using Triplet Embedding. International Joint Conference on Neural Networks, 2019, 1−9

[57] Deng J., Dong W., Socher R., Li L.-J., Li K., Fei-Fei L. ImageNet: A Large-Scale Hierarchical Image Database. IEEE Conference on Computer Vision and Pattern Recognition, 2009, 248−255

[58] Robbins H., Monro S. A Stochastic Approximation Method. The Annals of Mathematical Statistics, 1951, 22(3): 400−407 doi: 10.1214/aoms/1177729586

[59] Paszke A., Gross S., Massa F., Lerer A., Bradbury J., Chanan G., Killeen T., Lin Z., Gimelshein N., Antiga L., Desmaison A. PyTorch: An Imperative Style, High-Performance Deep Learning Library. Advances in Neural Information Processing Systems, 2019, 32: 1−12
