A Micro-expression Recognition Method Based on Multi-level Information Fusion Network


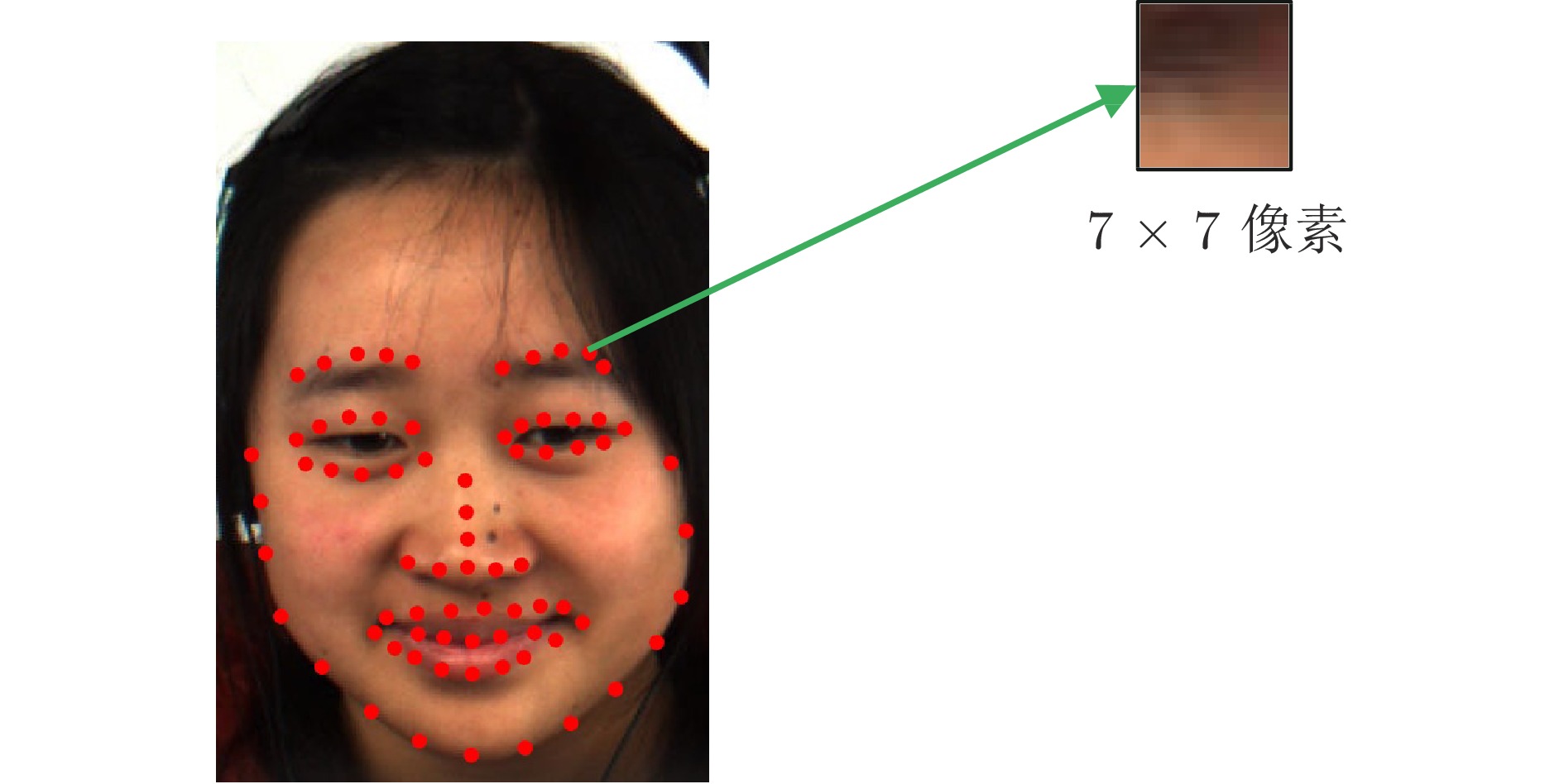

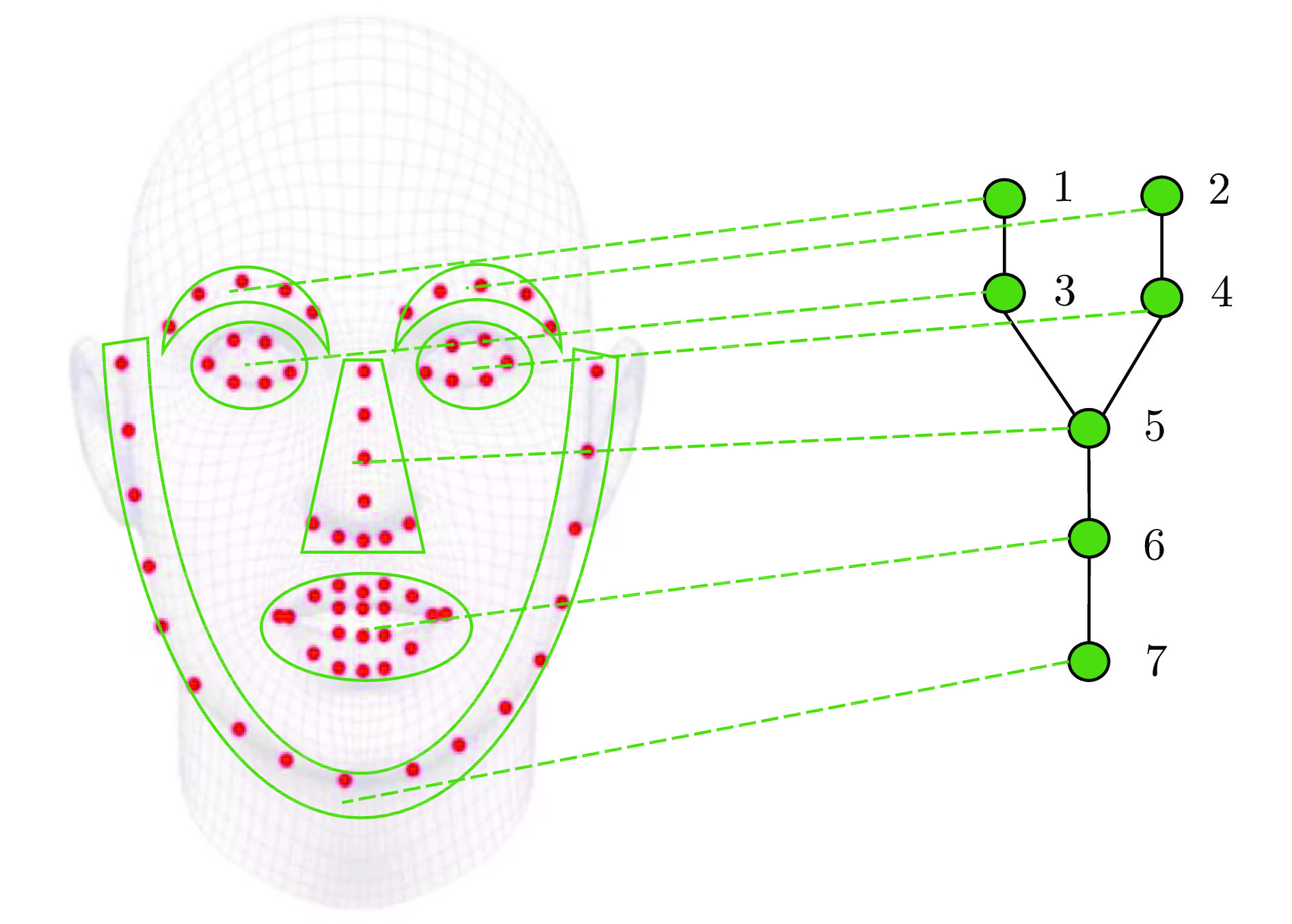

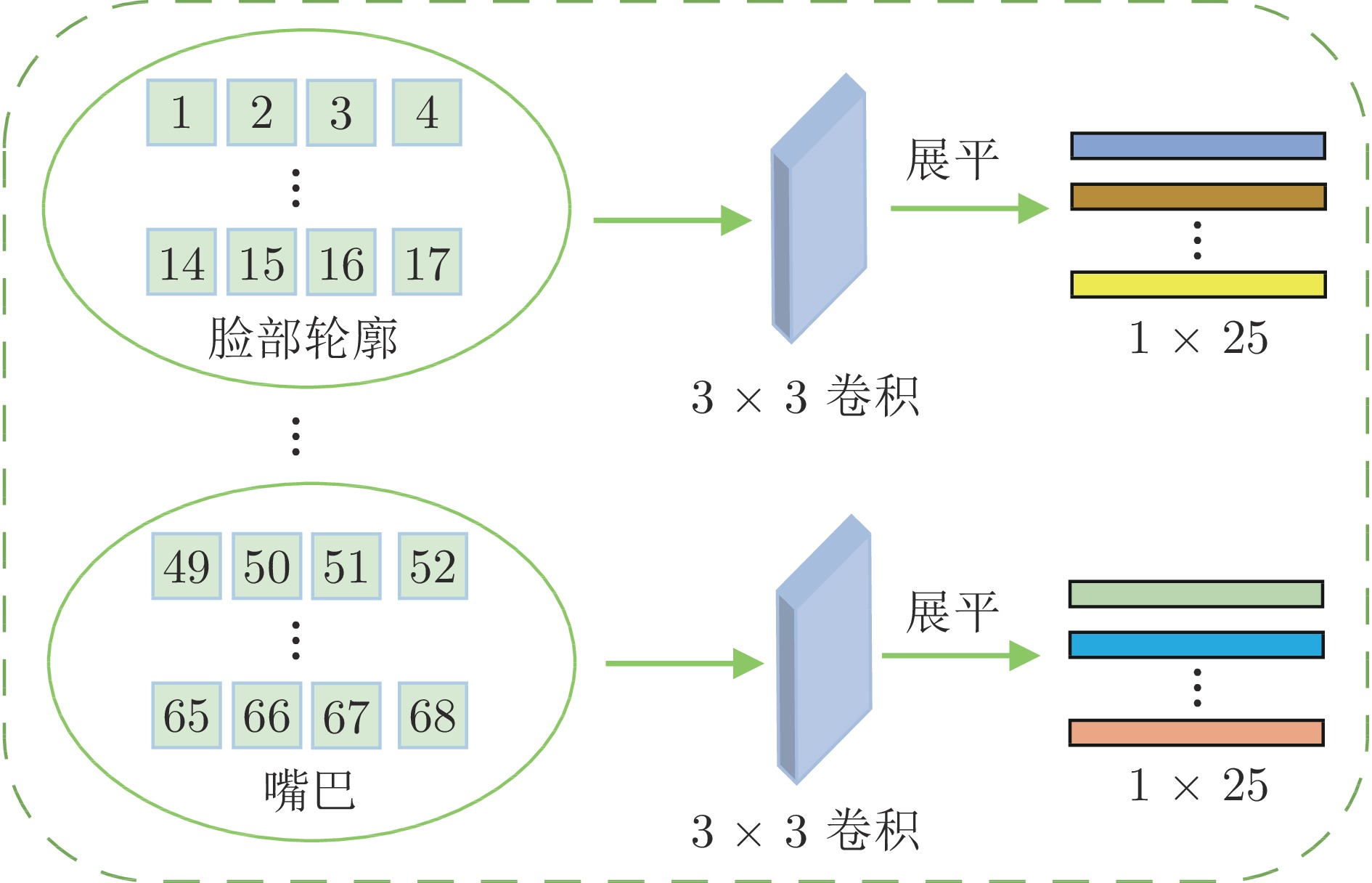

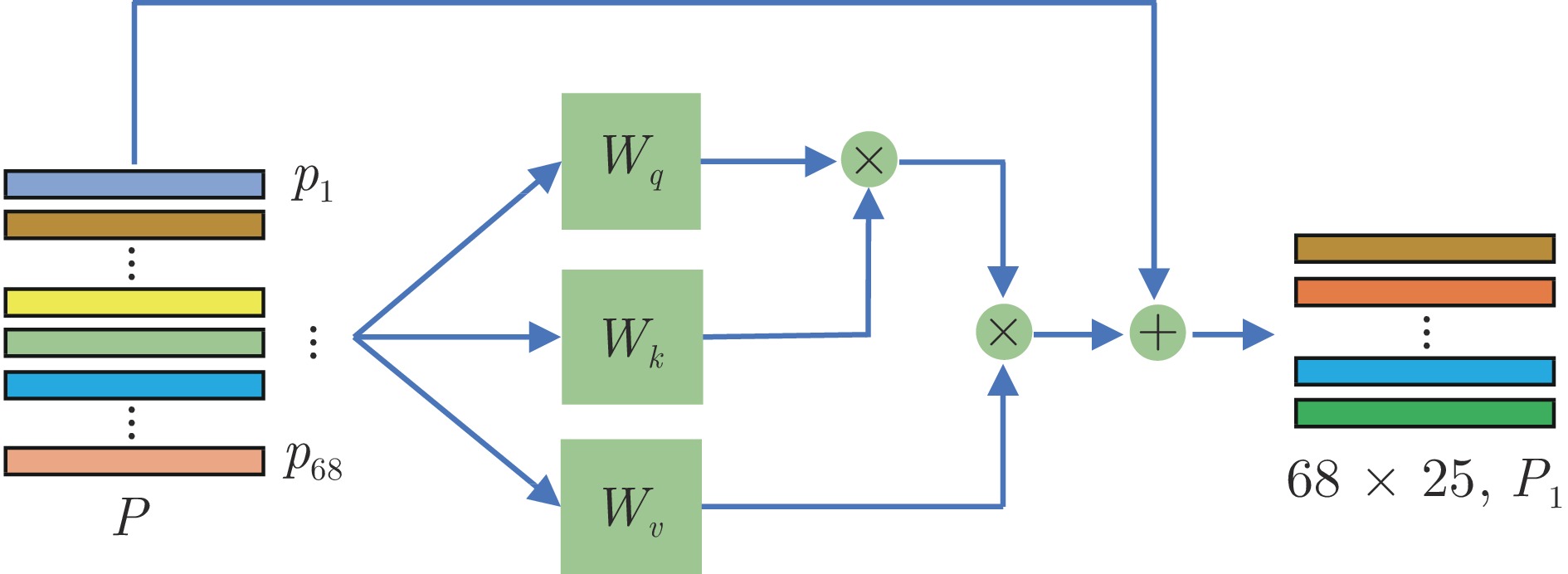

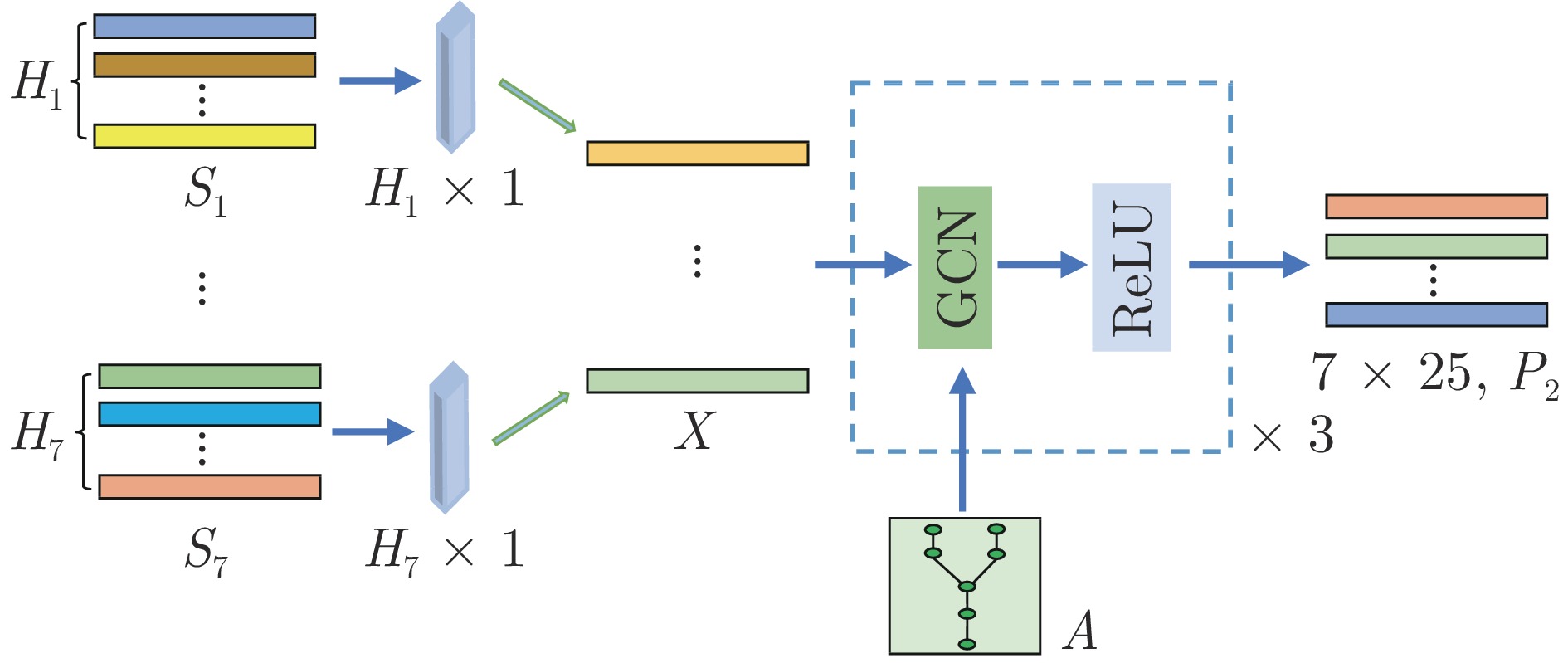

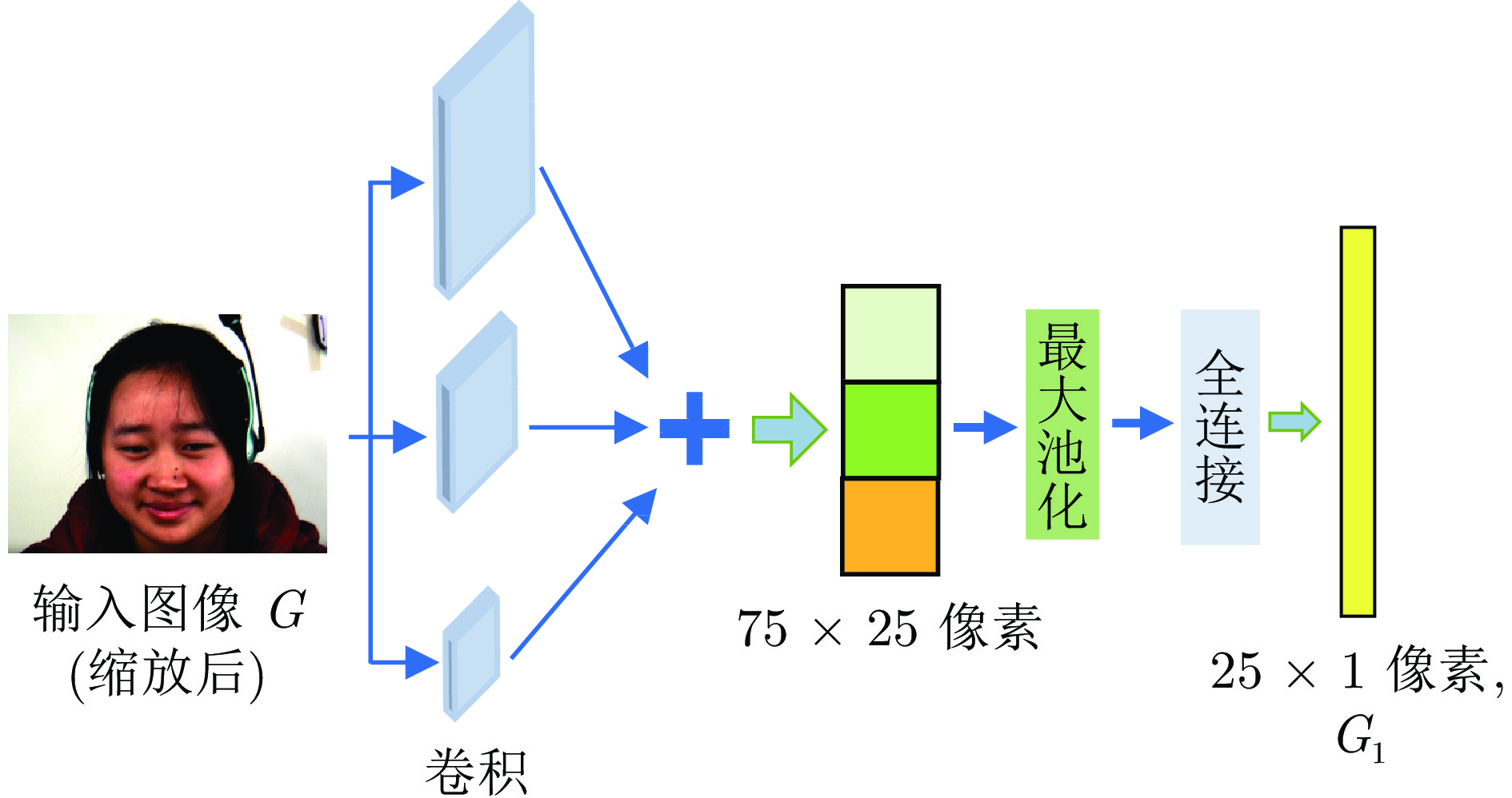

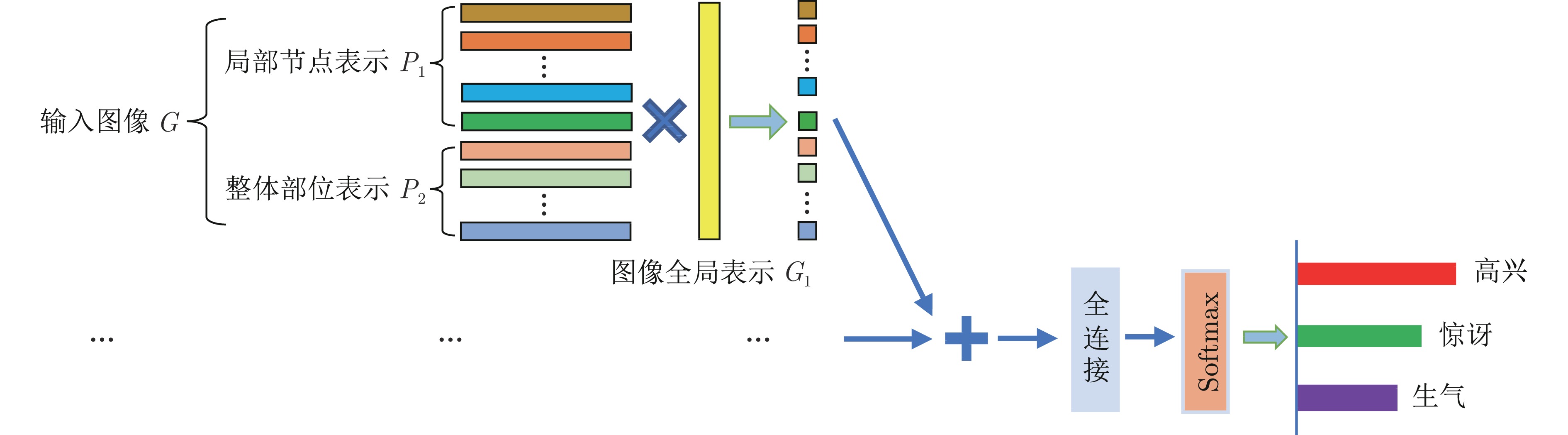

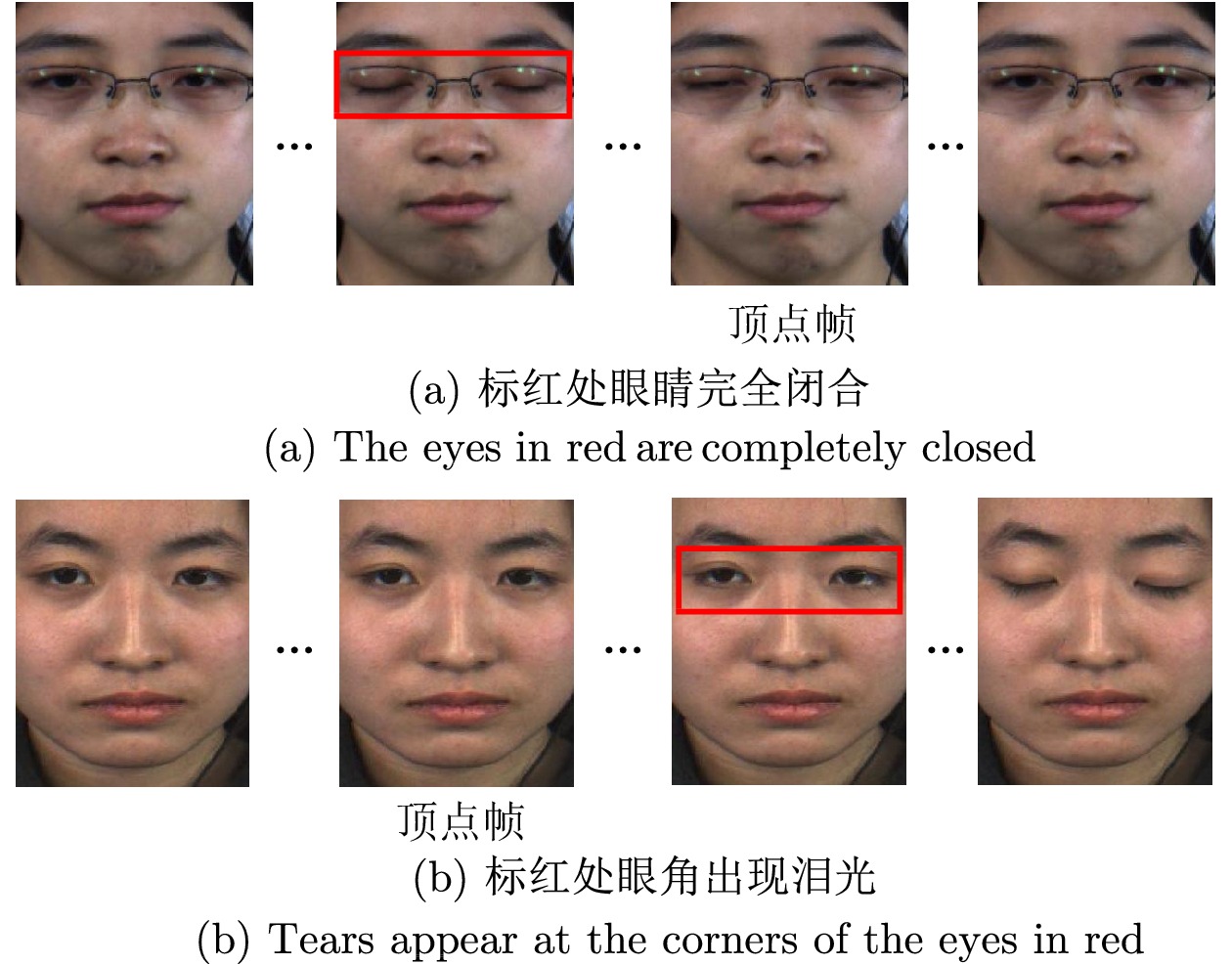

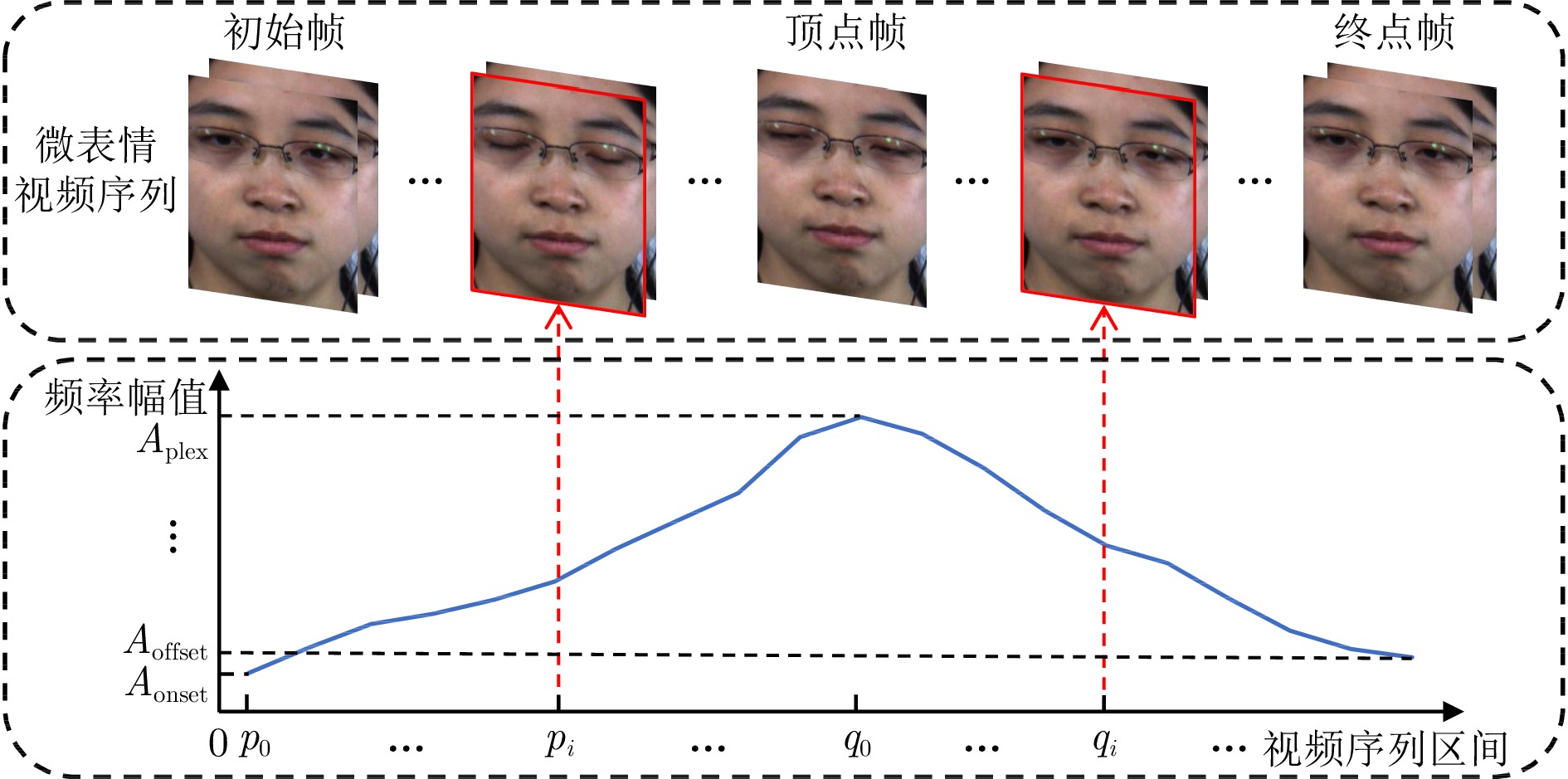

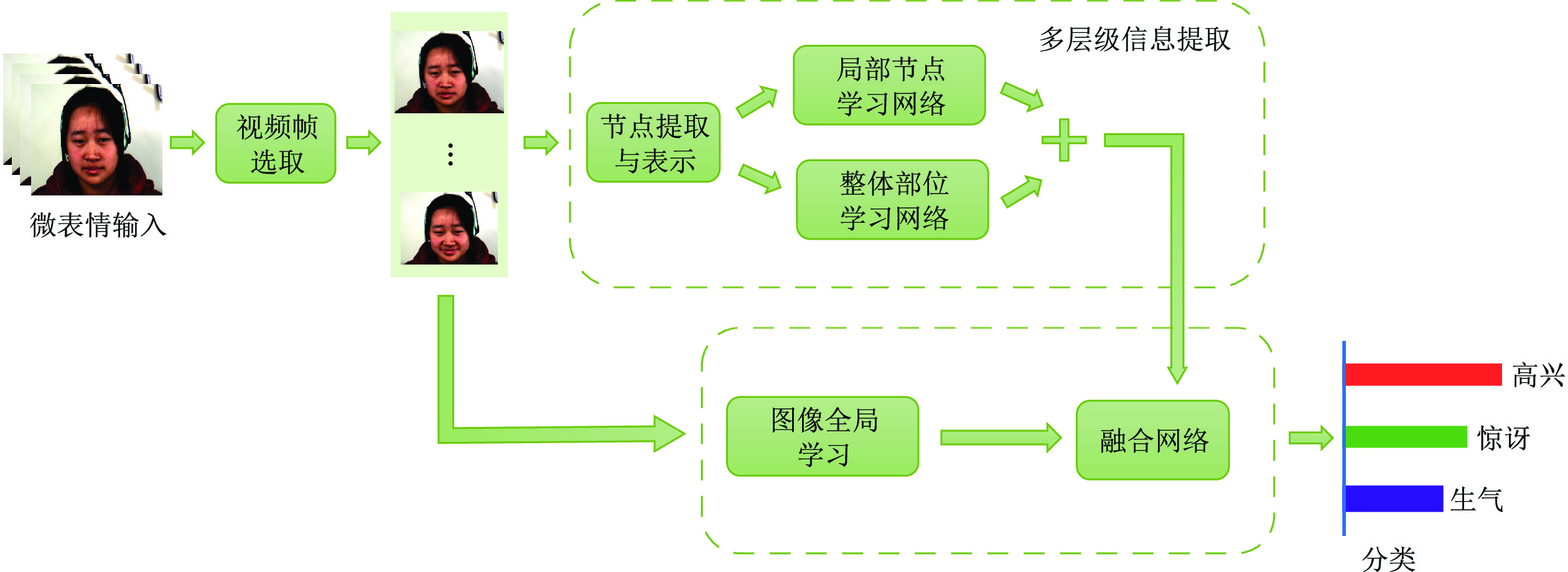

Abstract: Micro-expressions are subtle, involuntary facial changes that occur during emotional expression, and recognizing them accurately and efficiently is important for the early diagnosis and treatment of mental illness. Most existing methods do not model the relationships among key facial regions while a micro-expression occurs. As a result, they struggle to capture subtle changes in a small-sample image space, which leads to low recognition rates. To address this, a micro-expression recognition method based on a multi-level information fusion network is proposed. The method has three components: a video frame selection strategy based on frequency amplitude, which selects frames containing high-intensity expression information from micro-expression videos; a multi-level information extraction network built on self-attention mechanisms and graph convolutional networks; and a fusion network that incorporates global image information. Together, these components capture subtle facial micro-expression changes at different levels and improve the discriminability of specific categories. Experiments on public datasets show that the method effectively improves micro-expression recognition accuracy and performs better than other advanced methods.
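The abstract states only that frames are chosen by frequency amplitude, so what follows is a minimal sketch of one plausible reading, not the authors' implementation. It assumes grayscale, face-aligned frames with the onset frame at index 0, scores each frame by the summed 2D FFT amplitude of its difference from the onset (ignoring the DC term, i.e. a uniform brightness shift), and keeps the `k` highest-scoring frames in temporal order. The scoring rule and the names `frequency_amplitude_scores` and `select_frames` are hypothetical.

```python
import numpy as np


def frequency_amplitude_scores(frames: np.ndarray) -> np.ndarray:
    """Score frames by the spectral amplitude of their change from the onset.

    Hypothetical scoring rule (not taken from the paper). `frames` has
    shape (T, H, W); frame 0 is assumed to be the onset frame.
    """
    frames = frames.astype(np.float64)
    diffs = frames - frames[0]                          # change relative to the onset
    spectra = np.abs(np.fft.fft2(diffs, axes=(1, 2)))   # per-frame 2D amplitude spectrum
    spectra[:, 0, 0] = 0.0                              # ignore the DC term
    return spectra.sum(axis=(1, 2))


def select_frames(frames: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k highest-scoring frames, returned in temporal order."""
    scores = frequency_amplitude_scores(frames)
    top = np.argsort(scores)[::-1][:k]
    return np.sort(top)


# Synthetic 10-frame clip: frame 3 has a weak local change, frame 5 a strong one.
video = np.zeros((10, 32, 32))
video[3, 10:14, 10:14] = 1.0
video[5, 8:20, 8:20] = 5.0
print(select_frames(video, k=2))  # → [3 5]
```

Scoring the difference from the onset keeps static facial appearance out of the ranking, so only motion intensity matters; which reference frame the paper actually uses is not stated here.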


Table 1 Sample distribution for the 3-class task on SMIC, CASME II and SAMM

| Class | SMIC | CASME II | SAMM |
|---|---|---|---|
| Negative | 70 | 88 | 92 |
| Positive | 51 | 32 | 26 |
| Surprise | 43 | 25 | 15 |
| Total | 164 | 145 | 133 |

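Table 2 Sample distribution for the 5-class task on CASME II, SAMM and MMEW

| Class | CASME II | SAMM | MMEW |
|---|---|---|---|
| Happiness | 32 | 26 | 36 |
| Surprise | 25 | 15 | 89 |
| Disgust | 63 | – | 72 |
| Fear | – | – | 16 |
| Repression | 27 | – | – |
| Anger | – | 57 | – |
| Contempt | – | 12 | – |
| Others | 99 | 26 | 66 |
| Total | 246 | 136 | 279 |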

Table 3 Performance comparison for different numbers of frames

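| $N$ | SAMM (3-class) Accuracy (%) | SAMM (3-class) UF1 | MMEW (5-class) Accuracy (%) | MMEW (5-class) UF1 |
|---|---|---|---|---|
| 0 | 75.94 | 0.6462 | 68.45 | 0.5732 |
| 1 | 84.96 | 0.7842 | 75.26 | 0.6927 |
| 2 | 89.47 | 0.8356 | 81.36 | 0.7834 |
| 3 | 81.20 | 0.7381 | 78.85 | 0.7225 |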

Table 4 Performance comparison with different numbers of convolutional kernels

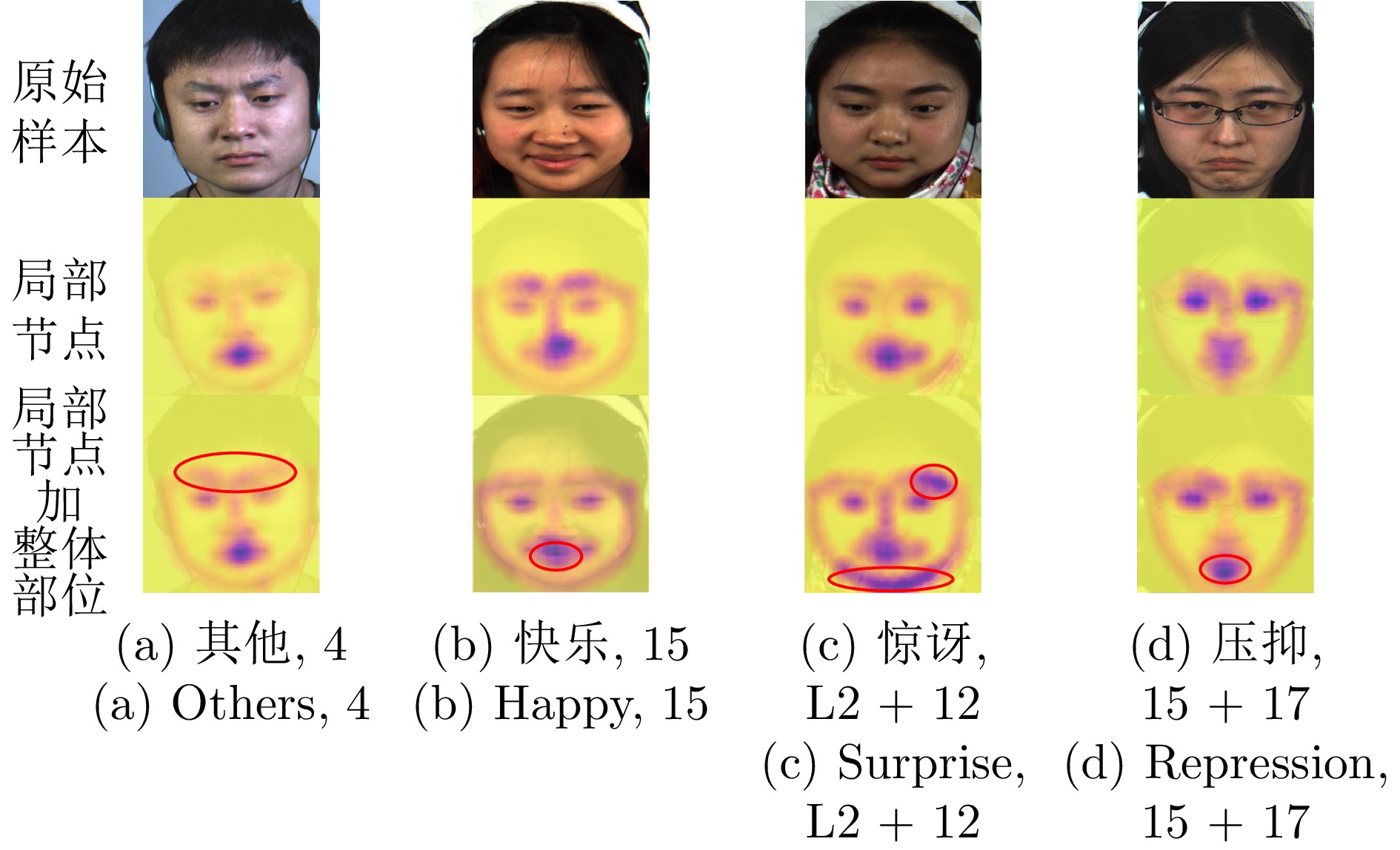

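| Number of kernels | SAMM (3-class) Accuracy (%) | SAMM (3-class) UF1 | MMEW (5-class) Accuracy (%) | MMEW (5-class) UF1 |
|---|---|---|---|---|
| 0 | 83.46 | 0.7429 | 72.75 | 0.6341 |
| 7 | 89.47 | 0.8356 | 81.36 | 0.7834 |
| 68 | 87.22 | 0.8247 | 77.42 | 0.7323 |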

Table 5 Ablation studies of each network branch in our model

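| Network branches | SAMM (3-class) Accuracy (%) | SAMM (3-class) UF1 | MMEW (5-class) Accuracy (%) | MMEW (5-class) UF1 |
|---|---|---|---|---|
| Local nodes | 80.45 | 0.7252 | 73.12 | 0.6968 |
| Local nodes + whole facial regions | 86.47 | 0.8021 | 80.65 | 0.7626 |
| Local nodes + whole facial regions + global image | 89.47 | 0.8356 | 81.36 | 0.7834 |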
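The abstract says the multi-level extraction network combines graph convolution and self-attention, and in Table 5 the local-node branch alone reaches 80.45% accuracy on SAMM. The following is a minimal sketch of those two generic building blocks applied to a set of local nodes, not the paper's architecture: a graph convolution with the usual symmetric normalization $\hat{D}^{-1/2}(A+I)\hat{D}^{-1/2}$ (Kipf and Welling style), followed by single-head scaled dot-product self-attention (Vaswani et al.). It assumes the nodes are facial key points with a hand-chosen adjacency; the node count, feature sizes, mean pooling, and names such as `local_node_branch` and `params` are hypothetical.

```python
import numpy as np


def normalized_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetric GCN normalization D^-1/2 (A + I) D^-1/2."""
    a_hat = adj + np.eye(adj.shape[0])            # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(x, adj_norm, weight):
    """One graph convolution: ReLU(A_norm @ X @ W)."""
    return np.maximum(adj_norm @ x @ weight, 0.0)


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over node features."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    scores -= scores.max(axis=1, keepdims=True)   # numerical stability for softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)       # row-wise softmax
    return attn @ v


def local_node_branch(x, adj, params):
    """Hypothetical branch: GCN over nodes, self-attention, then mean pooling."""
    h = gcn_layer(x, normalized_adjacency(adj), params["w_gcn"])
    h = self_attention(h, params["wq"], params["wk"], params["wv"])
    return h.mean(axis=0)                         # one feature vector per sample


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 2))                       # 4 nodes, 2 input features each
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)       # chain graph over the nodes
params = {"w_gcn": rng.normal(size=(2, 8)),
          "wq": rng.normal(size=(8, 8)),
          "wk": rng.normal(size=(8, 8)),
          "wv": rng.normal(size=(8, 8))}
feature = local_node_branch(x, adj, params)
print(feature.shape)  # → (8,)
```

The graph convolution mixes information only between connected nodes, while the attention step lets every node attend to every other node, which is one way the two mechanisms can complement each other.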

Table 6 Performance validation of ICE

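| Loss function | $w$ | SAMM (3-class) Accuracy (%) | SAMM (3-class) UF1 | MMEW (5-class) Accuracy (%) | MMEW (5-class) UF1 |
|---|---|---|---|---|---|
| CE | – | 85.71 | 0.7982 | 79.93 | 0.7463 |
| ICE | 0.1 | 87.96 | 0.8236 | 80.28 | 0.7732 |
| ICE | 0.3 | 89.47 | 0.8356 | 79.56 | 0.7635 |
| ICE | 0.5 | 88.72 | 0.8262 | 80.64 | 0.7582 |
| ICE | 1.0 | 87.22 | 0.8194 | 81.36 | 0.7834 |
| ICE | 2.0 | 86.47 | 0.8124 | 78.85 | 0.7281 |
| ICE | 5.0 | 89.47 | 0.8293 | 80.28 | 0.7546 |
| ICE | 10.0 | 87.96 | 0.8178 | 81.00 | 0.7782 |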

Table 7 Performance comparison for the 3-class task on the CASME II and SAMM datasets

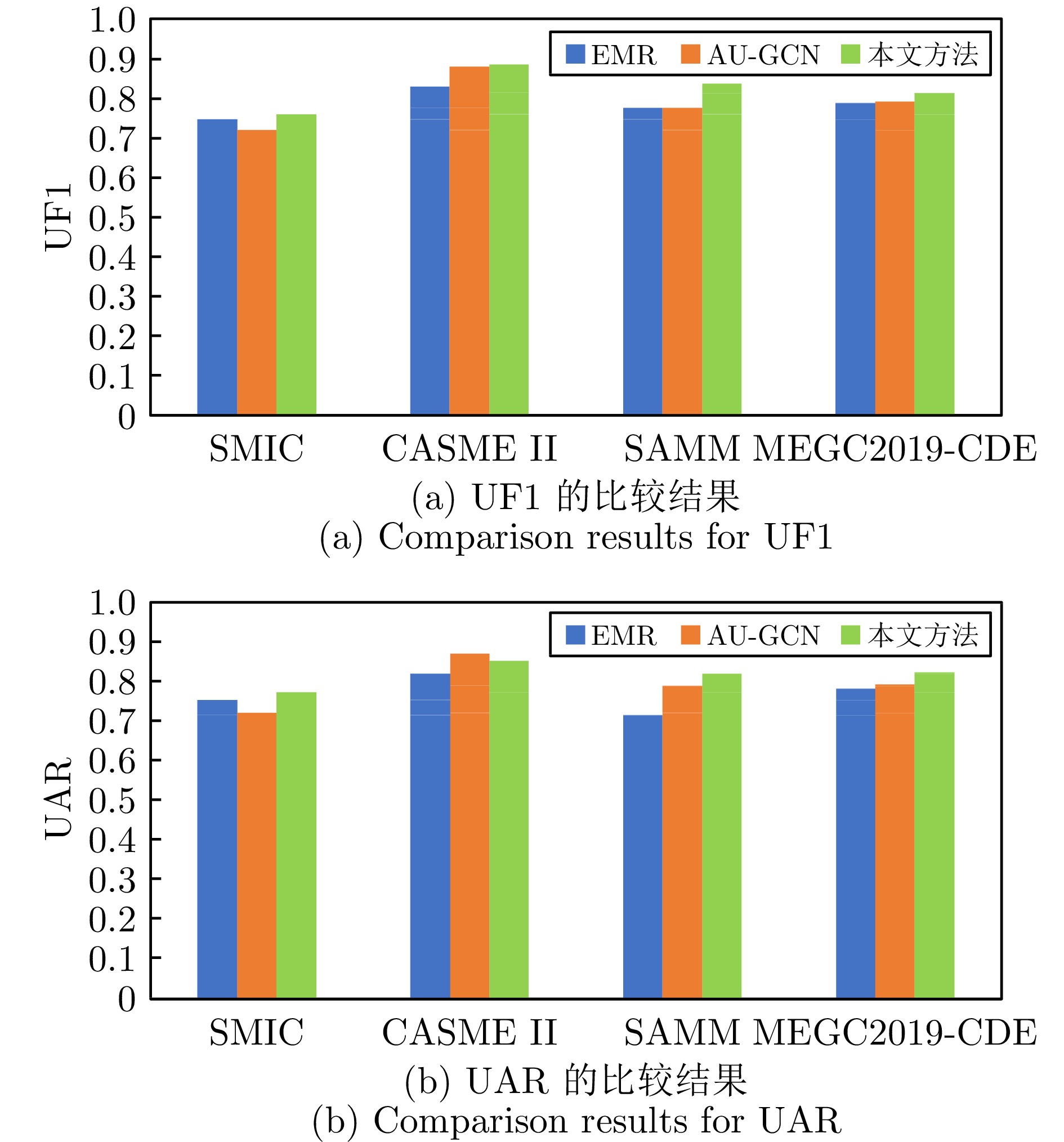

| Method | CASME II Accuracy (%) | CASME II UF1 | SAMM Accuracy (%) | SAMM UF1 |
|---|---|---|---|---|
| Bi-WOOF | 58.80 | 0.6100 | 58.30 | 0.3970 |
| OFF-ANet | 88.28 | 0.8697 | 68.18 | 0.5423 |
| STST-Net | 86.86 | 0.8382 | 68.10 | 0.6588 |
| Graph-TCN | 71.20 | 0.3550 | 70.20 | 0.4330 |
| GACNN | 89.66 | 0.8695 | 88.72 | 0.8188 |
| Ours | 91.03 | 0.8849 | 89.47 | 0.8356 |

Table 8 Performance comparison under the MEGC2019-CDE protocol

| Method | SMIC UF1 | SMIC UAR | CASME II UF1 | CASME II UAR | SAMM UF1 | SAMM UAR | MEGC2019-CDE UF1 | MEGC2019-CDE UAR |
|---|---|---|---|---|---|---|---|---|
| Bi-WOOF | 0.5727 | 0.5829 | 0.7805 | 0.8027 | 0.5211 | 0.5139 | 0.6296 | 0.6227 |
| OFF-ANet | 0.6817 | 0.6695 | 0.8764 | 0.8681 | 0.5409 | 0.5392 | 0.7196 | 0.7096 |
| DIN | 0.6645 | 0.6726 | 0.8621 | 0.8560 | 0.5868 | 0.5663 | 0.7322 | 0.7278 |
| STST-Net | 0.6801 | 0.7013 | 0.8382 | 0.8686 | 0.6588 | 0.6810 | 0.7353 | 0.7605 |
| EMR | 0.7461 | 0.7530 | 0.8293 | 0.8209 | 0.7754 | 0.7152 | 0.7885 | 0.7824 |
| AU-GCN | 0.7192 | 0.7215 | 0.8798 | 0.8710 | 0.7751 | 0.7890 | 0.7914 | 0.7933 |
| ME-PLAN | 0.7127 | 0.7256 | 0.8632 | 0.8778 | 0.7164 | 0.7418 | 0.7715 | 0.7864 |
| Ours | 0.7583 | 0.7741 | 0.8849 | 0.8532 | 0.8356 | 0.8194 | 0.8124 | 0.8231 |
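Table 8 reports UF1 and UAR, the class-balanced metrics used in the MEGC 2019 composite-dataset evaluation. Given the class imbalance visible in Tables 1 and 2, they weight every class equally rather than every sample: $\mathrm{UF1}=\frac{1}{C}\sum_{c}\frac{2TP_c}{2TP_c+FP_c+FN_c}$ and $\mathrm{UAR}=\frac{1}{C}\sum_{c}\frac{TP_c}{N_c}$, where $N_c$ is the number of samples of class $c$. A minimal sketch of these standard definitions, assuming integer labels and counts pooled over all evaluation folds (e.g. every leave-one-subject-out fold) before averaging; the function name `uf1_uar` is hypothetical.

```python
import numpy as np


def uf1_uar(y_true, y_pred, num_classes):
    """Unweighted F1 (macro F1) and unweighted average recall.

    Assumes counts are pooled over all samples (e.g. all folds)
    before the per-class scores are averaged.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    f1s, recalls = [], []
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)      # per-class F1
        n_c = tp + fn                                     # samples of class c
        recalls.append(tp / n_c if n_c else 0.0)          # per-class recall
    return float(np.mean(f1s)), float(np.mean(recalls))


scores = uf1_uar([0, 0, 0, 1, 1, 2], [0, 0, 1, 1, 1, 2], num_classes=3)
print(tuple(round(v, 4) for v in scores))  # → (0.8667, 0.8889)
```

Because each class contributes equally, a model that ignores a rare class (for example Surprise in SAMM, with 15 samples) is penalized far more by UF1 and UAR than by plain accuracy.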

Table 9 Performance comparison for the 5-class task on the CASME II and SAMM datasets

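| Method | CASME II Accuracy (%) | CASME II UF1 | SAMM Accuracy (%) | SAMM UF1 |
|---|---|---|---|---|
| DSSN | 71.19 | 0.7297 | 57.35 | 0.4644 |
| Graph-TCN | 73.98 | 0.7246 | 75.00 | 0.6985 |
| SMA-STN | 82.59 | 0.7946 | 77.20 | 0.7033 |
| MERSiamC3D | 81.89 | 0.8300 | 68.75 | 0.6400 |
| AU-GCN | 74.27 | 0.7047 | 74.26 | 0.7045 |
| GACNN | 81.30 | 0.7090 | 88.24 | 0.8279 |
| AMAN | 75.40 | 0.7100 | 68.85 | 0.6700 |
| Ours | 83.67 | 0.8428 | 81.62 | 0.7523 |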

