A Survey on Vision-based Sensing and Pose Estimation Methods for UAV Autonomous Landing


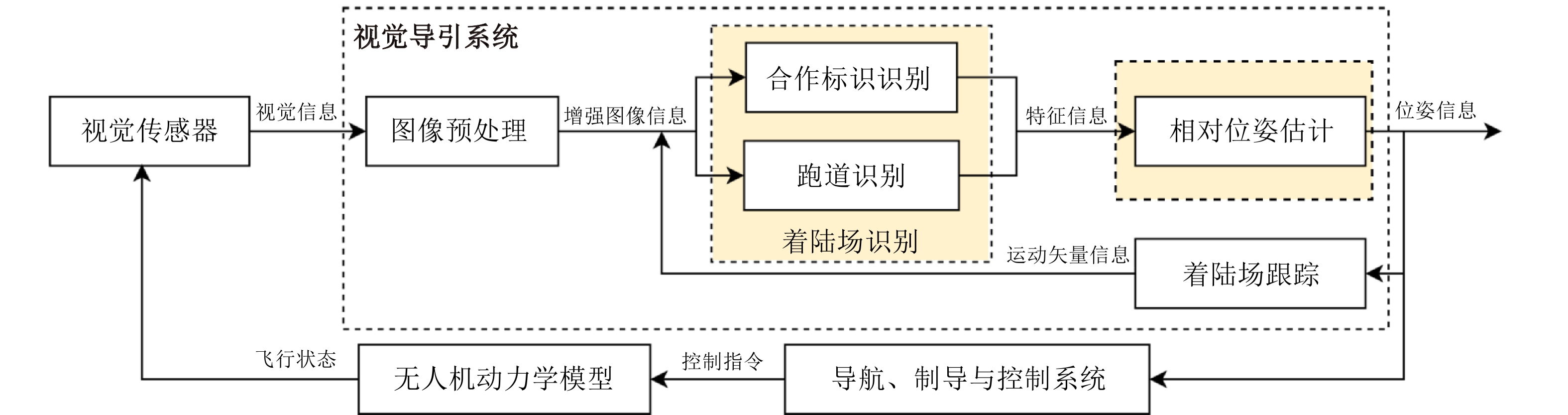

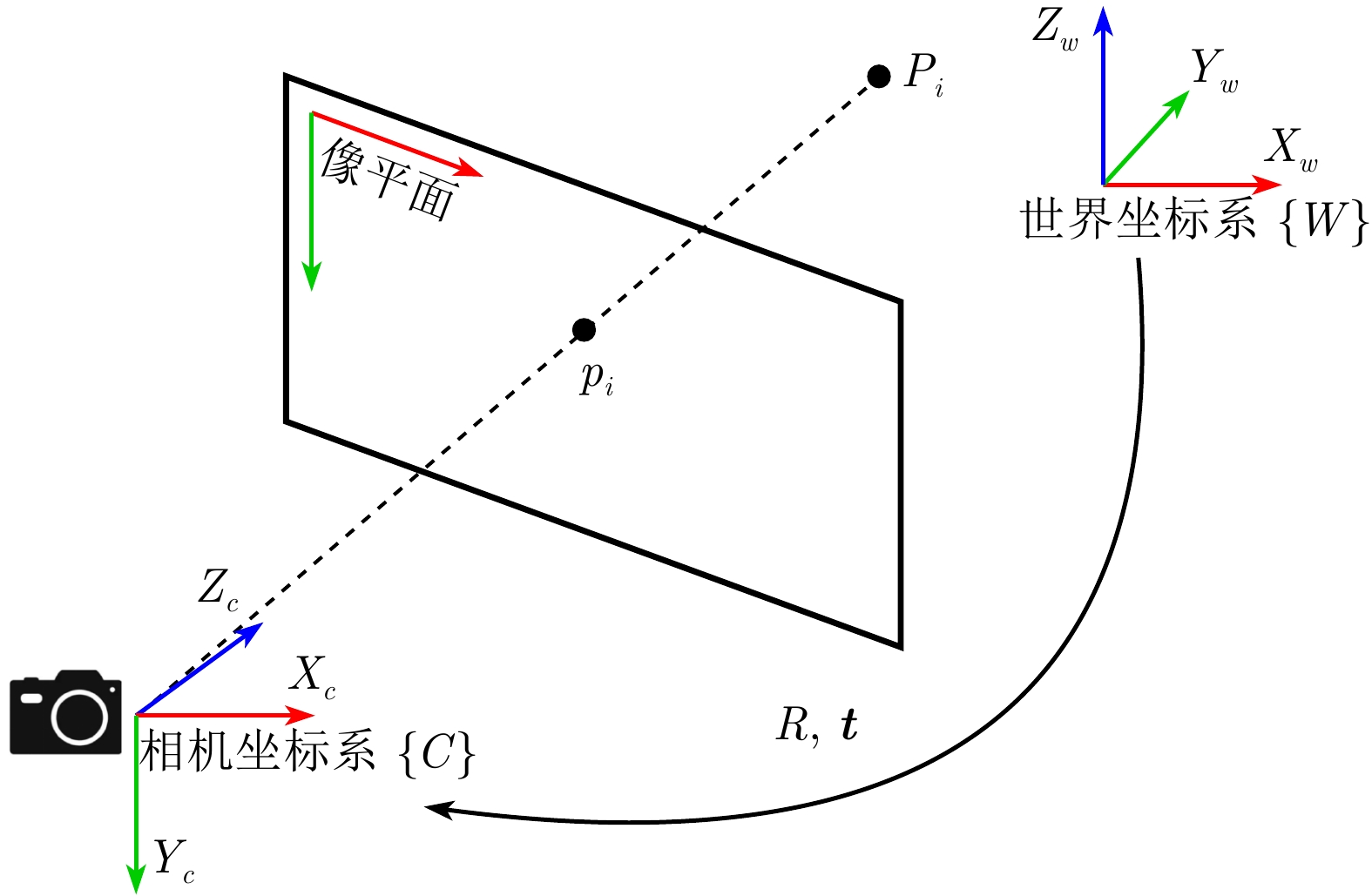

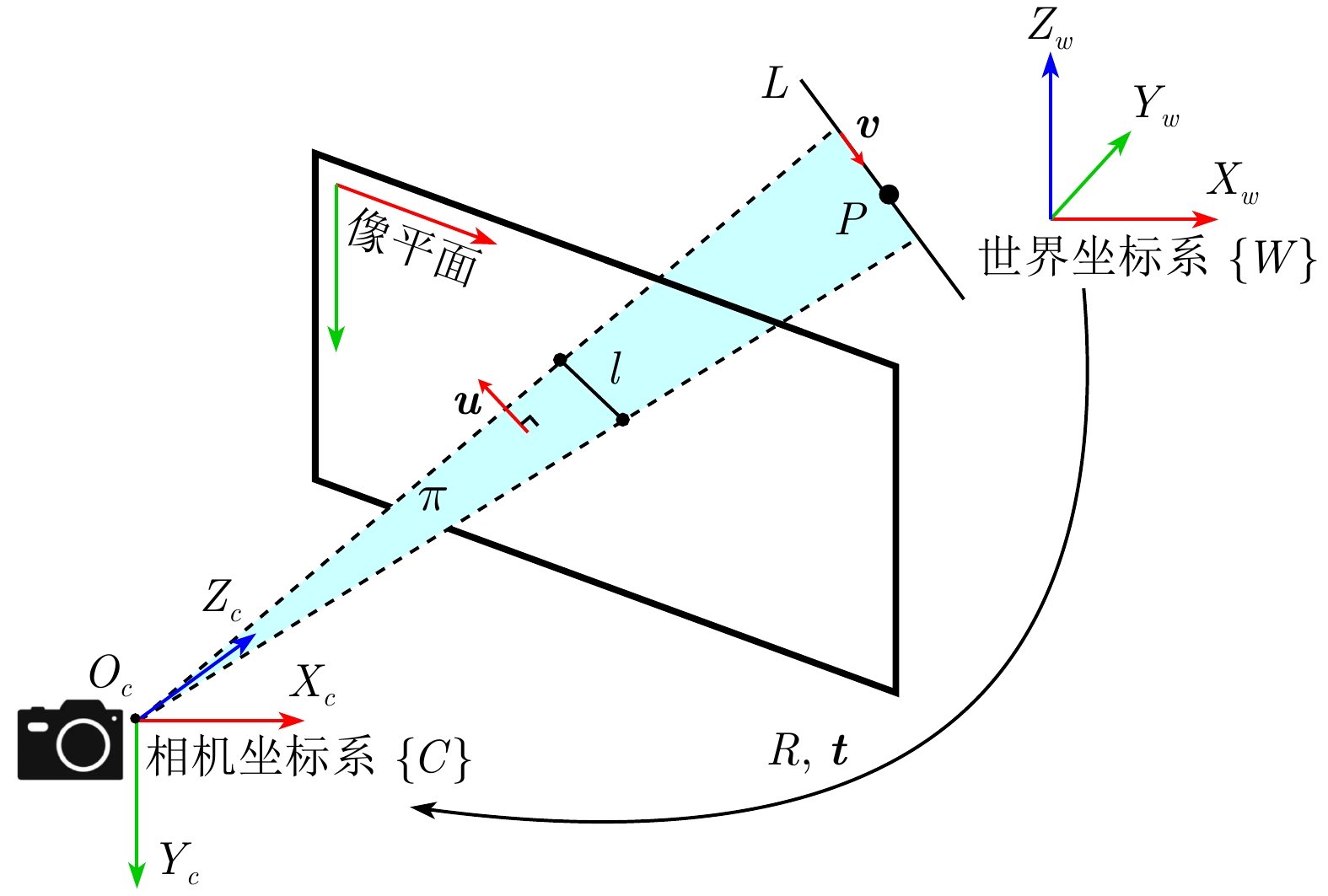

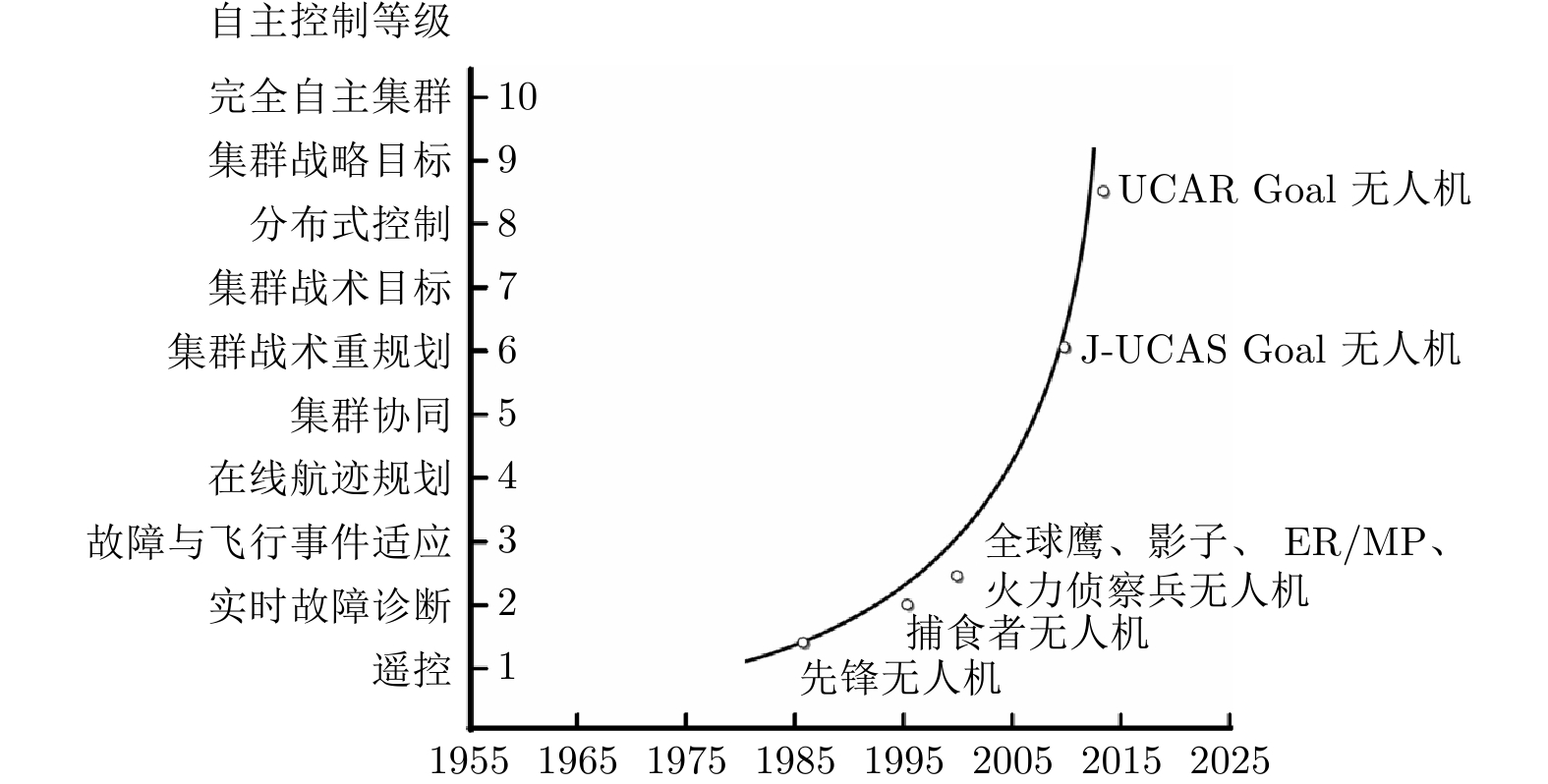

Abstract: Autonomous landing is one of the most challenging technologies limiting how far the autonomy level of unmanned aerial vehicles (UAVs) can be raised. Motivated by the future development needs of vision-based UAV autonomous landing, this paper focuses on its core problem, landing site detection and pose estimation, and summarizes the worldwide research progress of the past decade on vision-based landing site detection and pose estimation methods for UAV autonomous landing. First, based on an analysis of the application requirements of UAV autonomous landing, the advantages of machine vision in this field are identified and the open scientific problems are distilled. Second, landing site detection algorithms are reviewed by application scenario. Third, research results on pose estimation based on pure vision and on multi-source information fusion are summarized separately. Finally, the difficulties that remain to be solved in this field are summarized, and future technical development trends are discussed.


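Table 1 The FAA precision approach and landing standard

| Landing category | Decision height (m) | Horizontal accuracy (m) | Vertical accuracy (m) | Angular tolerance (%) |
| --- | --- | --- | --- | --- |
| Category I | 60 | 9.1 | 3.0 | 7.5 |
| Category II | 30 | 4.6 | 1.4 | 4.0 |
| Category III | 15 | 4.1 | 0.5 | 4.0 |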
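To make the accuracy columns of Table 1 concrete, the Python sketch below checks which category a single pair of position errors would satisfy. It is only an illustration: the function name `strictest_category` is hypothetical, only the horizontal and vertical accuracy columns are used (decision height and angular tolerance are ignored), and a single error sample stands in for however the standard actually aggregates errors over many approaches.

```python
# Horizontal and vertical accuracy limits (m) taken from Table 1,
# ordered from the strictest category to the loosest.
FAA_LIMITS = [
    ("Category III", 4.1, 0.5),
    ("Category II", 4.6, 1.4),
    ("Category I", 9.1, 3.0),
]


def strictest_category(horizontal_err_m, vertical_err_m):
    """Return the strictest category whose horizontal AND vertical accuracy
    limits are both met by a single error sample, or None if none is met."""
    for name, h_limit, v_limit in FAA_LIMITS:
        if abs(horizontal_err_m) <= h_limit and abs(vertical_err_m) <= v_limit:
            return name
    return None


print(strictest_category(3.0, 0.4))  # Category III
print(strictest_category(5.0, 1.0))  # Category I (5.0 m exceeds the 4.6 m limit of Category II)
```

The same check makes it clear that the vertical limit is the binding one for Category III: a 0.6 m height error already rules it out even when the horizontal error is small.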

Table 2 Measurement principles and characteristics of several machine vision sensors

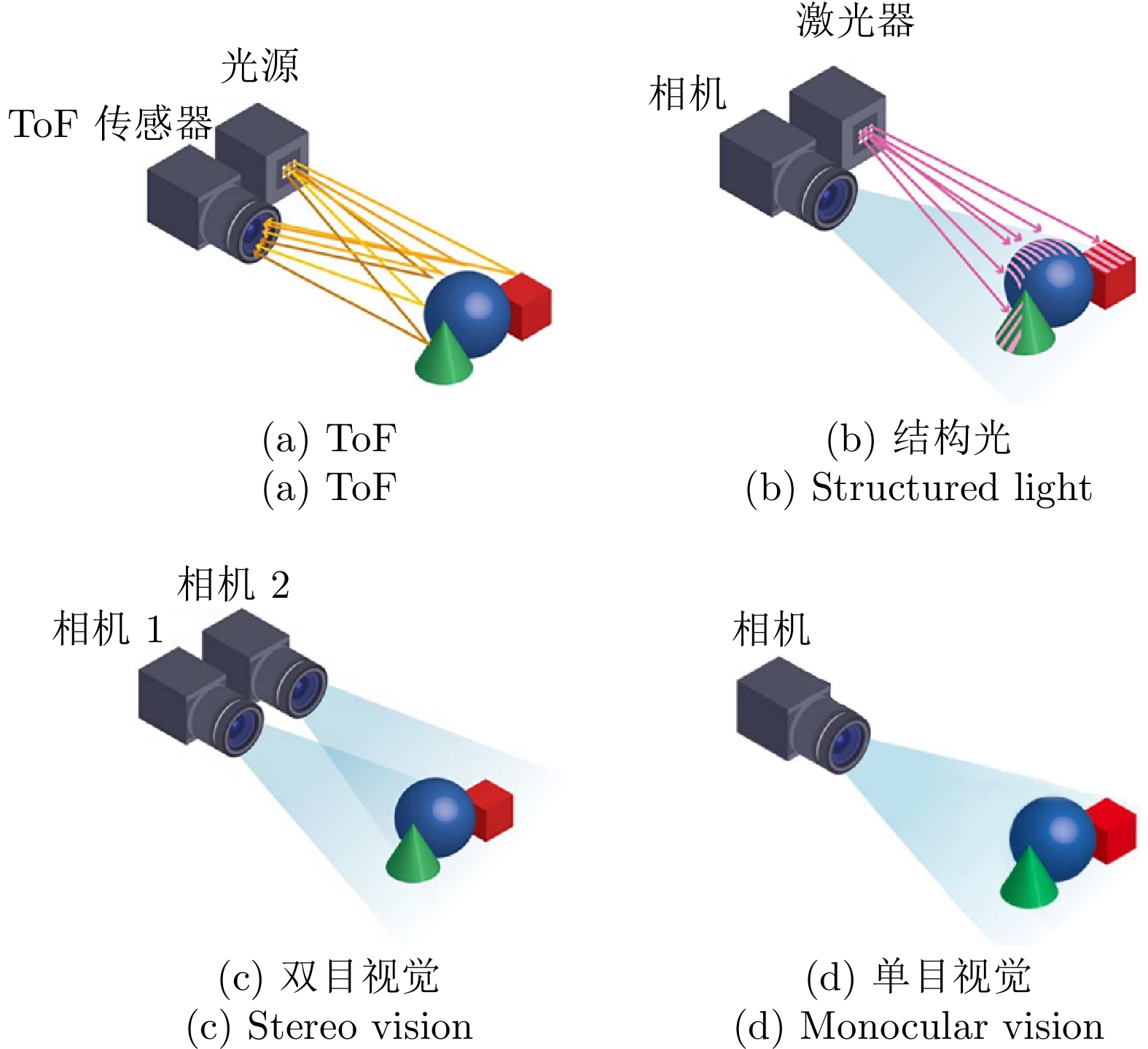

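| Sensor type | Measurement mode | Measurement principle | Effective range (m) | Accuracy |
| --- | --- | --- | --- | --- |
| ToF | Active vision | An infrared emitter sends modulated light pulses; a receiver captures the pulses reflected by the target, and the distance to the target is computed from the round-trip time of the pulses | $ 0.1 \sim 10 $ | Centimeter level |
| Structured light | Active vision | An infrared laser projects light with a known structural pattern onto the object; a dedicated infrared camera captures the reflected pattern, and depth is computed by triangulation | $ 0.1 \sim 6 $ | Millimeter level |
| Binocular (stereo) vision | Passive vision | No active light source is required; images are acquired by a left and a right camera, and the position and depth of the target are obtained by solving for the disparity | $ 0.3 \sim 25 $ | Centimeter level |
| Monocular vision | Passive vision | No active light source is required; the relative pose of the target is obtained indirectly by processing the images of a single camera through a chain of image algorithms such as enhancement, target detection and tracking, and pose estimation | $0.3 \sim 20\, 000$ | N/A |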
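The range and accuracy figures in Table 2 follow from the measurement principles in its third column. The Python sketch below writes out two of them under idealized assumptions: the ToF range is half the round-trip distance of the light pulse, and for a rectified stereo pair modeled as two pinhole cameras, depth is $Z = fB/d$, with focal length $f$ in pixels, baseline $B$ and disparity $d$. The helper names and the example numbers (focal length 700 px, baseline 0.12 m) are hypothetical. The third helper shows why stereo range is limited: differentiating $Z = fB/d$ gives $|\Delta Z| \approx Z^2 \Delta d / (fB)$, so a fixed disparity error produces a depth error that grows quadratically with distance.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance(round_trip_time_s):
    """ToF: the pulse travels to the target and back, so range = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def stereo_depth(focal_px, baseline_m, disparity_px):
    """Rectified pinhole stereo pair: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(focal_px, baseline_m, depth_m, disparity_err_px=1.0):
    """First-order depth error caused by a disparity error: dZ = Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


print(f"{tof_distance(20e-9):.3f} m")                  # 2.998 m
print(f"{stereo_depth(700, 0.12, 4.2):.1f} m")         # 20.0 m
print(f"{stereo_depth_error(700, 0.12, 20.0):.2f} m")  # 4.76 m per pixel of disparity error
```

With these hypothetical parameters, one pixel of disparity error at 20 m already costs several meters of depth, which is consistent with the limited effective range listed for stereo vision in Table 2.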

Table 3 A summary of typical landing site detection methods

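| Detection scheme | Ref. | Landing site type | Landing site detection method | Special application scenarios (moving platform, complex background, incomplete imaging, illumination change) | Experimental results |
| --- | --- | --- | --- | --- | --- |
| Cooperative markers: typical features | [33] | "H"-shaped | Affine-invariant moments and Harris corner detection | $\checkmark$ $\checkmark$ | Average detection accuracy 95% |
| | [36] | "H"-shaped | DLS-based ellipse fitting | $\checkmark$ | Average detection accuracy 92% |
| | [37] | "H"-shaped | Improved YOLOv3-tiny network based on hierarchical features and a feature pyramid | $\checkmark$ | Average detection accuracy 85.88%, average detection speed 17 Hz |
| | [38] | "H"-shaped | Improved SSD network fusing high- and low-level features | $\checkmark$ $\checkmark$ | Average detection accuracy 85.88%, average detection speed 17 Hz |
| | [41] | "H"-shaped | Improved SSD network based on a deep residual network and a feature pyramid | $\checkmark$ $\checkmark$ | Average detection accuracy about 78% |
| | [34] | "T"-shaped | Canny edge detection and Hu moment matching | | Average detection speed 33 Hz |
| | [35] | 3D marker | Canny and SURF features | $\checkmark$ | Average detection error about 3 px, average detection speed 63 Hz |
| | [39] | Rectangular | YOLOv3-tiny network and improved TLD | $\checkmark$ $\checkmark$ | Average detection accuracy 98.5%, average detection speed 53 Hz |
| | [42] | Circular | VGG-M model with active reinforcement learning | $\checkmark$ | Average detection error 13.55 px |
| Cooperative markers: image segmentation | [49] | Circular | Automatic threshold segmentation | $\checkmark$ $\checkmark$ | Average detection accuracy 66% |
| | [50] | Circular | HSV segmentation and Canny edge detection | $\checkmark$ $\checkmark$ | Average detection error about 5 px |
| | [51] | Circular | LLIE-Net image enhancement network and FastMBD-based image segmentation | $\checkmark$ | Average detection accuracy 88%, average detection speed 21 Hz |
| | [52] | Rectangular | Improved ERFNet | $\checkmark$ | Average detection accuracy 76.35%, average detection speed 45 Hz |
| Cooperative markers: fiducial marker systems | [44] | AprilTag | Improved AprilTag algorithm based on ROI and CMT | $\checkmark$ | Autonomous landing accuracy 0.3 m |
| | [43] | AprilTag | Two-stage cascaded improved MobileNet | $\checkmark$ | Average detection accuracy 98%, average detection speed 31 Hz |
| | [45] | AprilTag | ROI-based AprilTag algorithm | $\checkmark$ | Average detection accuracy 100% |
| | [47] | AprilTag | Improved AprilTag recognition algorithm based on local search and resolution adjustment | $\checkmark$ | Average detection speed 20 Hz |
| | [48] | AprilTag | AprilTag encoding and thresholding | $\checkmark$ $\checkmark$ $\checkmark$ | Average detection accuracy 98.8% |
| | [46] | ArUco | HOG features and improved KCF within the TLD framework | $\checkmark$ | Average detection accuracy 82.24%, average detection speed 31.47 Hz |
| Runways: geometric features | [55] | Ship-based runway | EDline line-feature detection | $\checkmark$ | Average detection accuracy 65.5% |
| | [57] | Ship-based runway | Morphological features and edge detection | $\checkmark$ | Average detection error about 7 px |
| | [53] | Land-based runway | Improved LSD-based FDCM edge detection | | Average detection error about 10 px, average detection speed 13 Hz |
| | [54] | Land-based runway | Pruned YOLOv3 model and probabilistic Hough transform | $\checkmark$ $\checkmark$ | Average detection accuracy about 70%, average detection speed 16 Hz |
| | [56] | Land-based runway | Frequency-domain (spectral) residual method and SIFT features | $\checkmark$ | Average detection accuracy 94% |
| | [58] | Land-based runway | Segmentation-based region competition with minimization of a specific energy function | $\checkmark$ $\checkmark$ | Average detection error about 8 px, average detection speed at least 20 Hz |
| | [59] | Land-based runway | SIFT features and CSRT tracking | $\checkmark$ | Average detection accuracy 94.89%, average detection speed 4.3 Hz |
| Runways: deep features | [61] | Land-based runway | Improved YOLOv3 based on corner regression | | Average detection accuracy 98.3%, average detection speed 25 Hz |
| | [62] | Land-based runway | RunwayNet | | Average detection accuracy 90% |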
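Several detectors in Table 3 match a segmented marker shape against a template using moment invariants; [34], for example, pairs Canny edge detection with Hu moment matching. The NumPy sketch below computes the first two of Hu's seven invariants from a binary mask and checks that they do not change when a synthetic "H" marker is shifted or rotated by 90°. It assumes the marker has already been segmented into a binary mask; `make_h_marker` and its dimensions are hypothetical, and this is not the implementation used in the cited works. For rotations by arbitrary angles the invariants hold only approximately, because the rotated shape must be resampled onto the pixel grid.

```python
import numpy as np


def hu_phi1_phi2(mask):
    """First two Hu moment invariants of a binary mask (nonzero pixels = marker)."""
    ys, xs = np.nonzero(mask)
    m00 = float(xs.size)                      # zeroth-order moment = area in pixels
    if m00 == 0:
        raise ValueError("mask contains no marker pixels")
    dx, dy = xs - xs.mean(), ys - ys.mean()   # centring gives translation invariance
    mu20, mu02, mu11 = np.sum(dx * dx), np.sum(dy * dy), np.sum(dx * dy)
    # Normalized central moments eta_pq = mu_pq / m00**(1 + (p + q) / 2) give
    # scale invariance; for second-order moments the exponent is 2.
    eta20, eta02, eta11 = mu20 / m00**2, mu02 / m00**2, mu11 / m00**2
    phi1 = eta20 + eta02
    phi2 = (eta20 - eta02) ** 2 + 4.0 * eta11 ** 2
    return float(phi1), float(phi2)


def make_h_marker(size=60, offset=(0, 0)):
    """Hypothetical synthetic 'H' landing marker on a 200 x 200 canvas."""
    img = np.zeros((200, 200), dtype=np.uint8)
    r0, c0 = 40 + offset[0], 40 + offset[1]
    bar = size // 5
    img[r0:r0 + size, c0:c0 + bar] = 1                   # left post
    img[r0:r0 + size, c0 + size - bar:c0 + size] = 1     # right post
    mid = r0 + size // 2
    img[mid - bar // 2:mid + bar // 2, c0:c0 + size] = 1  # crossbar
    return img


h = make_h_marker()
print(np.allclose(hu_phi1_phi2(h), hu_phi1_phi2(make_h_marker(offset=(50, 30)))))  # True
print(np.allclose(hu_phi1_phi2(h), hu_phi1_phi2(np.rot90(h))))                    # True
```

In a detector, the invariants of each candidate contour would be compared with those of the reference marker; OpenCV's `matchShapes`, for instance, compares log-scaled Hu invariants because the higher-order ones span several orders of magnitude.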

Table 4 A summary of typical monocular vision-based pose estimation methods

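| Category | Ref. | Pose estimation method | Measurement range | Experimental results |
| --- | --- | --- | --- | --- |
| Single frame | [65] | Orthogonal iteration | Within a 500 m flight radius | Maximum position error 5 m, maximum attitude error $ 2^\circ $ |
| | [66] | Powell's dogleg algorithm | Within a 3 m flight radius | Mean position errors on the three axes 17.95 cm, 11.50 cm and 3.65 cm; mean attitude errors on the three axes $ 8.43^\circ $, $ 9.11^\circ $ and $ 0.56^\circ $ |
| | [67] | POSIT algorithm | Within a 400 m flight radius | Mean position errors on the three axes 0.5 m, 0.8 m and 2.5 m; mean yaw error $ 0.1^\circ $ |
| | [68] | Homography decomposition | Within a 3 m flight radius | Position RMSE 0.0138 m; attitude RMSE on the three axes $ 1.98^\circ $, $ 1.41^\circ $ and $ 0.22^\circ $ |
| | [69] | Ma.Y.B coding and L-M algorithm | Within a 2 m flight radius | Mean pitch, roll and yaw errors $ 0.36^\circ $, $ 0.40^\circ $ and $ 0.38^\circ $ |
| | [70] | L-M algorithm | Within a 2 km flight radius | Mean position error below 10 m, mean attitude error below $ 2^\circ $ |
| | [71] | Improved relative pose estimation algorithm based on circle and corner features | Within 20 m altitude | Mean horizontal position and altitude errors 0.005 m and 0.054 m; mean yaw error $ 1.6^\circ $ |
| | [72] | Target-center constraint and least squares | Within 1 m altitude | Mean position error below 20 mm, mean attitude error below $ 0.5^\circ $ |
| | [74] | L-M algorithm and single-state Kalman filter | Within a 250 m flight radius | Mean position error no more than 0.7 m |
| | [78] | Least squares | Within 60 m altitude | Maximum position error 6.52 m, maximum attitude error $ 0.08^\circ $ |
| Consecutive frames | [79] | Multi-point observation | Within 10 m altitude | Mean position error 1.47 m, mean attitude error $ 1^\circ $ |
| | [80] | SURF and homography decomposition | Within a 0.72 m flight radius | Maximum position error below 0.05 m |
| | [81] | SPoseNet | Within an 80 m flight radius | Position RMSE below 7 m, yaw RMSE below $ 6^\circ $ |
| | [82] | Optimization algorithm with moving-point rejection | Within a 120 m flight radius | Mean position error about 2 cm |
| | [83] | P4P and UKF | Within 0.26 m altitude | Mean position error 2.4% |
| | [84] | BA | Within 3 m altitude | Mean position error 4 mm |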
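Homography decomposition, listed in Table 4 for [68] and [80], exploits the fact that a landing marker is planar. With the marker on the plane $Z = 0$ and a calibrated camera with intrinsic matrix $K$, image points satisfy $s\,[u, v, 1]^{\mathrm T} = K\,[r_1\; r_2\; t]\,[X, Y, 1]^{\mathrm T}$, so the $3 \times 3$ homography $H$ is proportional to $K[r_1\; r_2\; t]$. The NumPy sketch below estimates $H$ from four marker corners with the direct linear transform (DLT), using Hartley coordinate normalization for numerical conditioning, and then recovers $R$ and $t$. It is a textbook illustration rather than the cited authors' method: the intrinsics, the 1 m marker and the pose are hypothetical, and lens distortion and detection noise are ignored. With real detections the closed-form result would normally be refined iteratively, for example with the L-M algorithm that Table 4 lists for [69], [70] and [74].

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: move the centroid to the origin, mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    scale = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - centroid, axis=1))
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))]) @ T.T
    return pts_h[:, :2], T


def estimate_homography(obj_xy, img_uv):
    """DLT: solve A h = 0 for H with [u, v, 1] ~ H [X, Y, 1] from >= 4 point pairs."""
    obj_n, T_obj = _normalize(np.asarray(obj_xy, dtype=float))
    img_n, T_img = _normalize(np.asarray(img_uv, dtype=float))
    rows = []
    for (X, Y), (u, v) in zip(obj_n, img_n):
        rows.append([-X, -Y, -1.0, 0.0, 0.0, 0.0, u * X, u * Y, u])
        rows.append([0.0, 0.0, 0.0, -X, -Y, -1.0, v * X, v * Y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H_n = vt[-1].reshape(3, 3)                 # null vector of A, defined up to scale
    H = np.linalg.inv(T_img) @ H_n @ T_obj     # undo the normalization
    return H / H[2, 2]


def pose_from_homography(H, K):
    """Recover the marker pose (R, t) in the camera frame from H ~ K [r1 r2 t]."""
    M = np.linalg.solve(K, H)
    lam = 2.0 / (np.linalg.norm(M[:, 0]) + np.linalg.norm(M[:, 1]))
    if lam * M[2, 2] < 0:                      # pick the sign that gives t_z > 0
        lam = -lam
    r1, r2, t = lam * M[:, 0], lam * M[:, 1], lam * M[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)                # snap to the nearest rotation matrix
    return U @ Vt, t


def rot_x(angle_rad):
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


# Hypothetical camera and pose, used only to generate synthetic corner detections.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
R_true = rot_x(np.deg2rad(170.0))              # marker tilted 10 degrees from facing the camera
t_true = np.array([0.2, -0.1, 3.0])            # marker centre 3 m in front of the camera
corners = np.array([[-0.5, -0.5], [0.5, -0.5], [0.5, 0.5], [-0.5, 0.5]])  # 1 m square

P_cam = corners @ R_true[:, :2].T + t_true     # Z = 0, so only r1 and r2 contribute
uvw = P_cam @ K.T
uv = uvw[:, :2] / uvw[:, 2:]

R_est, t_est = pose_from_homography(estimate_homography(corners, uv), K)
print(np.allclose(R_est, R_true, atol=1e-6), np.allclose(t_est, t_true, atol=1e-6))  # True True
```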

Table 5 Characteristics of different information fusion levels

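| | Pixel-level fusion | Feature-level fusion | Pose-level fusion |
| --- | --- | --- | --- |
| Information loss | Least | Moderate | Most |
| Dependence on sensors | Highest | Moderate | Lowest |
| Algorithm complexity | Highest | Moderate | Lowest |
| System openness | Lowest | Moderate | Highest |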
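Pose-level fusion, the right-hand column of Table 5, combines the outputs of independent estimators rather than their raw pixels or features, which is why it depends least on any particular sensor and keeps the system open. One common way to do this, sketched below in Python, is inverse-covariance (information) weighting, assuming the estimates are unbiased, have known covariances and have independent errors. Only positions are fused here; orientations live on a rotation manifold and need different treatment, and when the error correlation between sources is unknown, covariance intersection is the safer choice. The function name and all numbers are hypothetical.

```python
import numpy as np


def fuse_pose_estimates(estimates, covariances):
    """Inverse-covariance fusion of independent estimates of the same quantity:
    P = (sum_i P_i^-1)^-1 and x = P @ sum_i P_i^-1 @ x_i."""
    info_matrix = np.zeros_like(np.asarray(covariances[0], dtype=float))
    info_vector = np.zeros_like(np.asarray(estimates[0], dtype=float))
    for x_i, P_i in zip(estimates, covariances):
        P_inv = np.linalg.inv(P_i)
        info_matrix += P_inv
        info_vector += P_inv @ x_i
    P_fused = np.linalg.inv(info_matrix)
    return P_fused @ info_vector, P_fused


# Hypothetical example: a vision estimate that is accurate laterally but poor in depth,
# and a second source (e.g. a range sensor) with the opposite error profile.
x_vision = np.array([1.0, 2.0, 10.0])
P_vision = np.diag([0.01, 0.01, 1.0])
x_range = np.array([1.5, 2.5, 9.0])
P_range = np.diag([1.0, 1.0, 0.01])

x_fused, P_fused = fuse_pose_estimates([x_vision, x_range], [P_vision, P_range])
print(np.round(x_fused, 3))           # close to [1.005, 2.005, 9.010]
print(np.round(np.diag(P_fused), 4))  # close to [0.0099, 0.0099, 0.0099]
```

Each fused axis leans toward the source with the smaller variance on that axis, and the fused variance is lower than that of either input, which is the complementarity that motivates multi-source fusion.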

Table 6 A summary of typical pose estimation methods based on visual-inertial fusion

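| Category | Ref. | Information sources | Fusion method | Measurement range | Experimental results |
| --- | --- | --- | --- | --- | --- |
| Filtering | [87] | Vision/inertial | Kalman filter and Mean Shift | Within a 4 m flight radius | Velocity RMSE 0.04 m/s, position RMSE 0.02 m |
| | [88] | Vision/inertial/altimeter | Kalman filter | Within 100 m altitude | Mean position error below 0.4 m, mean attitude error below $ 1^\circ $ |
| | [89] | Vision/inertial | EKF | Within a 500 m flight radius | Mean position error below 2 m |
| | [90] | Vision/inertial | SR-UKF | Within a 150 m flight radius | Mean position error 0.0531 m, mean attitude error $0.020\,3^\circ$ |
| | [91] | Vision/inertial | UKF | Within about 5 m of the deck | Mean position error 0.23 m, mean attitude error $ 5^\circ $ |
| | [92] | Vision/inertial/barometer | ESKF | Within 20 m altitude | Mean position error below 5 m, velocity error below 2 m/s |
| | [93] | Vision/inertial/radar | Federated filter | Within 400 m of the deck | Mean longitudinal position error 1.6 m, mean lateral position error 0.8 m, mean heading error $ 0.1^\circ $ |
| | [94] | Vision/inertial | Ambiguity correction algorithm | Within a 30 m flight radius | Mean position error about 2 cm |
| | [95] | Vision/inertial | Improved particle filter | Within a 0.4 m flight radius | Mean position error about 0.87 cm |
| | [96] | Vision/inertial | Time-delay filter | Within an 800 m flight radius | Maximum position error 15 m |
| Optimization | [98] | Vision/inertial | Manifold optimization | Within 4 m altitude | Mean position error below 0.2 m |
| | [99] | Vision/inertial/laser rangefinder | Factor graph optimization | Within 300 m altitude | Mean position error about 3 m |
| Deep learning | [102] | Vision/inertial | ResNet18 and LSTM | Within a 20 m flight radius | Mean position error 0.08 m |
| | [103] | Vision/inertial | CNN and BiLSTM | Within a 160 m flight radius | Mean altitude error below 1.0567 m |
| | [104] | Vision/inertial | FlowNet and LSTM | Within 3 m altitude | Mean position error 0.28 m, mean attitude error $ 38^\circ $ |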
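Most of the filtering entries in Table 6 share the same predict/correct structure: the high-rate IMU propagates the state, and the lower-rate vision pose corrects the accumulated drift. The Python sketch below shows that structure in its simplest linear, loosely coupled form along a single axis, with IMU acceleration as the control input and a vision-derived position as the measurement. It is not any of the cited filters: the class name, noise levels and sensor rates are hypothetical, and it ignores attitude, IMU bias and the image latency that [92] and [96] address. Full 3-D systems such as the EKF of [89] or the UKF of [91] linearize or sample a nonlinear model instead.

```python
import numpy as np


class VisualInertialKF1D:
    """Linear Kalman filter along one axis. State x = [position, velocity]."""

    def __init__(self, accel_noise_std=0.2, vision_noise_std=0.05):
        self.x = np.zeros(2)
        self.P = np.eye(2)                            # initial state uncertainty
        self.q = accel_noise_std ** 2                 # IMU acceleration noise variance
        self.R = np.array([[vision_noise_std ** 2]])  # vision position noise variance
        self.H = np.array([[1.0, 0.0]])               # vision observes position only

    def predict(self, accel, dt):
        """Propagate with the measured acceleration treated as a control input."""
        F = np.array([[1.0, dt], [0.0, 1.0]])
        B = np.array([0.5 * dt ** 2, dt])
        self.x = F @ self.x + B * accel
        self.P = F @ self.P @ F.T + self.q * np.outer(B, B)

    def update_vision(self, measured_position):
        """Correct the state with a vision-derived position fix."""
        y = measured_position - self.H @ self.x       # innovation, shape (1,)
        S = self.H @ self.P @ self.H.T + self.R       # innovation covariance, shape (1, 1)
        K = self.P @ self.H.T @ np.linalg.inv(S)      # Kalman gain, shape (2, 1)
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P


# Hypothetical simulation: 200 Hz IMU, 20 Hz vision, 10 s of motion.
rng = np.random.default_rng(0)
dt, steps = 0.005, 2000
kf = VisualInertialKF1D()
p_true, v_true = 0.0, 0.0
for k in range(steps):
    a_true = 0.5 * np.sin(k * dt)
    p_true += v_true * dt + 0.5 * a_true * dt ** 2
    v_true += a_true * dt
    kf.predict(a_true + rng.normal(0.0, 0.2), dt)         # noisy IMU sample
    if k % 10 == 0:
        kf.update_vision(p_true + rng.normal(0.0, 0.05))  # noisy vision fix
print(f"final position error: {abs(kf.x[0] - p_true):.3f} m")
```

Without the `update_vision` calls the same loop reduces to IMU dead reckoning, whose position error grows without bound; the periodic vision fixes are what keep it bounded.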

[1] Zhao Liang-Yu, Li Dan, Zhao Chen-Yue, Jiang Fei. Some achievements on detection methods of UAV autonomous landing markers. Acta Aeronautica et Astronautica Sinica, 2022, 43(9): 266−281

[2] Tang Da-Quan, Bi Bo, Wang Xu-Shang, Li Fei, Shen Ning. Summary on technology of automatic landing/carrier landing. Journal of Chinese Inertial Technology, 2010, 18(5): 550−555

[3] Zhen Zi-Yang. Research development in autonomous carrier-landing/ship-recovery guidance and control of unmanned aerial vehicles. Acta Automatica Sinica, 2019, 45(4): 669−681

[4] Chen H, Wang X M, Li Y. A survey of autonomous control for UAV. In: Proceedings of the International Conference on Artificial Intelligence and Computational Intelligence. Shanghai, China: IEEE, 2009. 267−271

[5] Grlj C G, Krznar N, Pranjić M. A decade of UAV docking stations: A brief overview of mobile and fixed landing platforms. Drones, 2022, 6(1): Article No. 17 doi: 10.3390/drones6010017

[6] Sarigul-Klijn N, Sarigulklijn M M. A novel sea launch and recovery concept for fixed wing UAVs. In: Proceedings of the 54th AIAA Aerospace Sciences Meeting. San Diego, USA: AIAA, 2016. 15−27

[7] EASA. Artificial Intelligence Roadmap: A Human-centric Approach to AI in Aviation. Cologne: European Union Aviation Safety Agency (EASA), 2020.

[8] Braff R. Description of the FAA's local area augmentation system (LAAS). Navigation, 1997, 44(4): 411−423 doi: 10.1002/j.2161-4296.1997.tb02357.x

[9] Shen Lin-Cheng, Kong Wei-Wei, Niu Yi-Feng. Ground- and ship-based guidance approaches for autonomous landing of UAV. Journal of Beijing University of Aeronautics and Astronautics, 2021, 47(2): 187−196

[10] Jantz J D, West J C, Mitchell T, Johnson D, Ambrose G. Airborne measurement of instrument landing system signals using a UAV. In: Proceedings of the IEEE International Symposium on Antennas and Propagation and USNC-URSI Radio Science Meeting. Atlanta, USA: IEEE, 2019. 2131−2132

[11] Chen Xiao-Fei, Dong Yan-Fei. Review of precision approach radar guide unmanned aerial vehicle (UAV) automatic landing. Aeronautical Science & Technology, 2014, 25(1): 69−72

[12] Kang Y, Park B J, Cho A, Yoo C S, Kim Y, Choi S, et al. A precision landing test on motion platform and shipboard of a tilt-rotor UAV based on RTK-GNSS. International Journal of Aeronautical and Space Sciences, 2018, 19(4): 994−1005 doi: 10.1007/s42405-018-0081-8

[13] Parfiriev A V, Dushkin A V, Ischuk I N. Model of inertial navigation system for unmanned aerial vehicle based on MEMS. Journal of Physics: Conference Series, 2019, 1353: Article No. 012019

[14] Trefilov P M, Iskhakov A Y, Meshcheryakov R V, Jharko E P, Mamchenko M V. Simulation modeling of strapdown inertial navigation systems functioning as a means to ensure cybersecurity of unmanned aerial vehicles navigation systems for dynamic objects in various correction modes. In: Proceedings of the 7th International Conference on Control, Decision and Information Technologies (CoDIT). Prague, Czech Republic: IEEE, 2020. 1046−1051

[15] Zhuravlev V P, Perelvaev S E, Bodunov B P, Bodunov S B. New-generation small-size solid-state wave gyroscope for strapdown inertial navigation systems of unmanned aerial vehicle. In: Proceedings of the 26th Saint Petersburg International Conference on Integrated Navigation Systems (ICINS). St. Petersburg, Russia: IEEE, 2019. 1−3

[16] Wierzbanowski S. Collaborative operations in denied environment (CODE) [Online], available: https://www.darpa.mil/program/collaborative-operations-in-denied-environment, March 19, 2016

[17] Patterson T, McClean S, Morrow P, Parr G. Utilizing geographic information system data for unmanned aerial vehicle position estimation. In: Proceedings of the Canadian Conference on Computer and Robot Vision. St. John's, Canada: IEEE, 2011. 8−15

[18] Santos N P, Lobo V, Bernardino A. Autoland project: Fixed-wing UAV landing on a fast patrol boat using computer vision. In: Proceedings of the OCEANS MTS/IEEE SEATTLE. Seattle, USA: IEEE, 2019. 1−5

[19] Airbus. Airbus concludes ATTOL with fully autonomous flight tests [Online], available: https://www.airbus.com/en/newsroom/press-releases/2020-06-airbus-concludes-attol-with-fully-autonomous-flight-tests, November 15, 2020

[20] Scherer S, Mishra C, Holzapfel F. Extension of the capabilities of an automatic landing system with procedures motivated by visual-flight-rules. In: Proceedings of the 33rd Congress of the International Council of the Aeronautical Sciences (ICAS). Stockholm, Sweden: International Council of the Aeronautical Sciences, 2022. 5421−5431

[21] Gyagenda N, Hatilima J V, Roth H, Zhmud V. A review of GNSS-independent UAV navigation techniques. Robotics and Autonomous Systems, 2022, 152: Article No. 104069 doi: 10.1016/j.robot.2022.104069

[22] Fu Lu-Hua, Feng Fei, Wang Peng, Sun Chang-Ku. Design of visual pose measuring system based on grid-structured light. Laser & Optoelectronics Progress, 2023, 60(5): Article No. 0512003

[23] Sefidgar M, Landry Jr R. Unstable landing platform pose estimation based on camera and range sensor homogeneous fusion (CRHF). Drones, 2022, 6(3): Article No. 60 doi: 10.3390/drones6030060

[24] Wang Y S, Liu J Y, Zeng Q H, Liu S. Visual pose measurement based on structured light for MAVs in non-cooperative environments. Optik, 2015, 126(24): 5444−5451 doi: 10.1016/j.ijleo.2015.09.041

[25] Müller M G, Steidle F, Schuster M J, Lutz P, Maier M, Stoneman S, et al. Robust visual-inertial state estimation with multiple odometries and efficient mapping on an MAV with ultra-wide FOV stereo vision. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Madrid, Spain: IEEE, 2018. 3701−3708

[26] Peng J Q, Xu W F, Yuan H. An efficient pose measurement method of a space non-cooperative target based on stereo vision. IEEE Access, 2017, 5: 22344−22362 doi: 10.1109/ACCESS.2017.2759798

[27] Thurrowgood S, Moore R J D, Soccol D, Knight M, Srinivasan M V. A biologically inspired, vision-based guidance system for automatic landing of a fixed-wing aircraft. Journal of Field Robotics, 2014, 31(4): 699−727 doi: 10.1002/rob.21527

[28] Al-Kaff A, García F, Martín D, Escalera A D L, Armingol J M. Obstacle detection and avoidance system based on monocular camera and size expansion algorithm for UAVs. Sensors, 2017, 17(5): Article No. 1061 doi: 10.3390/s17051061

[29] Reibel Y, Taalat R, Brunner A, Rubaldo L, Augey T, Kerlain A, et al. Infrared SWAP detectors: Pushing the limits. In: Proceedings of the Infrared Technology and Applications XLI. Baltimore, USA: SPIE, 2015. Article No. 945110

[30] Yang Z G, Li C J. Review on vision-based pose estimation of UAV based on landmark. In: Proceedings of the 2nd International Conference on Frontiers of Sensors Technologies (ICFST). Shenzhen, China: IEEE, 2017. 453−457

[31] Zhang Li-Lian, Qu Hao, Mao Jun, Hu Xiao-Ping. A survey of intelligence science and technology integrated navigation technology. Navigation Positioning and Timing, 2020, 7(4): 50−63

[32] Kalaitzakis M, Cain B, Carroll S, Ambrosi A, Whitehead C, Vitzilaios N. Fiducial markers for pose estimation: Overview, applications and experimental comparison of the ARTag, AprilTag, ArUco and STag markers. Journal of Intelligent & Robotic Systems, 2021, 101(4): Article No. 71

[33] Shi Yang-Yang. Research on Target Recognition for Autonomous Landing of Ship-board Rotor Unmanned Aerial Vehicles [Master thesis], Nanjing University of Aeronautics and Astronautics, China, 2013.

[34] Shi Lin-Jie. Research on UAV Autonomous Landing Algorithm Based on Vision-guided [Master thesis], Xidian University, China, 2015.

[35] Wei Xiang-Hui. Research on Visual Detection of Landing Area and Autonomous Landing Guidance of UAV [Master thesis], Nanjing University of Aeronautics and Astronautics, China, 2019.

[36] Jung Y, Lee D, Bang H. Close-range vision navigation and guidance for rotary UAV autonomous landing. In: Proceedings of the IEEE International Conference on Automation Science and Engineering (CASE). Gothenburg, Sweden: IEEE, 2015. 342−347

[37] Ding Sha. Research on Object Detection Method for Low-altitude Unmanned Airship [Master thesis], Huazhong University of Science and Technology, China, 2019.

[38] Li J H, Wang X H, Cui H R, Ma Z Y. Research on detection technology of autonomous landing based on airborne vision. IOP Conference Series: Earth and Environmental Science, 2020, 440(4): Article No. 042093

[39] Cai Bing-Feng. Research on Vision Based Adaptive Landing of UAV on a Moving Platform [Master thesis], Zhejiang University, China, 2020.

[40] Yuan Jun. Target Tracking and Landing Pose Estimation Based on UAV Platform [Master thesis], Harbin Institute of Technology, China, 2020.

[41] Wu Peng-Fei, Shi Zhang-Song, Huang Jun, Fu Bing. Landing mark identification method based on improved SSD network. Electronics Optics & Control, 2022, 29(1): 88−92

[42] Wang Xin-Ran. UAV First View Landmark Localization With Active Reinforcement Learning Study [Master thesis], China University of Petroleum (East China), China, 2020.

[43] Yang Jian-Ye. Design of UAV Autonomous Landing System Based on Visual Servo on Mobile Platform [Master thesis], Nanjing University of Science and Technology, China, 2018.

[44] Wang Zhao-Zhe. Research on Autonomous Landing Technology of Four-rotor UAV Mobile Platform Based on Visual Positioning [Master thesis], Harbin Institute of Technology, China, 2019.

[45] Xu Zhe-Cheng. Research on Vision-guided UAV-USV Autonomous Dynamic Landing [Master thesis], Huazhong University of Science and Technology, China, 2021.

[46] Chen Fei-Yu. Research on Image Processing Technology of UAV Autonomous and Precise Landing [Master thesis], Shandong University, China, 2020.

[47] Zhang Hang. Research on Recovery Technology of Small UAV Collision Net Based on Visual Guidance [Master thesis], Nanjing University of Aeronautics and Astronautics, China, 2021.

[48] Guo Jia-Qian. Research on Autonomous Precision Mobile Platform Landing Technology for Agricultural UAV [Master thesis], Northwest A&F University, China, 2021.

[49] Guo Tong-Qing. Research on the Key Technologies of Autonomous Deck-landing of Unmanned Helicopter [Master thesis], University of Electronic Science and Technology of China, China, 2013.

[50] Xi Zhi-Peng. Research on Several Key Issues of Autonomous Flight of UAV [Master thesis], Zhejiang University, China, 2019.

[51] Guo Yan-Hui. Research on Key Technologies of UAV-USV Collaboration Based on the Assistance of Computer Vision [Master thesis], Huazhong University of Science and Technology, China, 2019.

[52] Liu Jian, Zhang Xiang-Fu, Yu Zhi-Jun, Wu Zhong-Hong. Semantic segmentation of landing environment for unmanned helicopter based on improved ERFNet. Telecommunication Engineering, 2020, 60(1): 40−46

[53] Wang J Z, Cheng Y, Xie J C, Niu W S. A real-time sensor guided runway detection method for forward-looking aerial images. In: Proceedings of the 11th International Conference on Computational Intelligence and Security (CIS). Shenzhen, China: IEEE, 2015. 150−153

[54] Li Can. Small Fixed-wing UAV Vision Navigation Algorithm Research [Master thesis], Shanghai Jiao Tong University, China, 2020.

[55] Zhang L, Cheng Y, Zhai Z J. Real-time accurate runway detection based on airborne multi-sensors fusion. Defence Science Journal, 2017, 67(5): 542−550 doi: 10.14429/dsj.67.10439

[56] Zhang Z Y, Cao Y F, Ding M, Zhuang L K, Tao J. Vision-based guidance for fixed-wing unmanned aerial vehicle autonomous carrier landing. Proceedings of the Institution of Mechanical Engineers, Part G: Journal of Aerospace Engineering, 2019, 233(8): 2894−2913 doi: 10.1177/0954410018788003

[57] Nagarani N, Venkatakrishnan P, Balaji N. Unmanned aerial vehicle's runway landing system with efficient target detection by using morphological fusion for military surveillance system. Computer Communications, 2020, 151: 463−472 doi: 10.1016/j.comcom.2019.12.039

[58] Abu-Jbara K, Sundaramoorthi G, Claudel C. Fusing vision and inertial sensors for robust runway detection and tracking. Journal of Guidance, Control, and Dynamics, 2018, 41(9): 1929−1946 doi: 10.2514/1.G002898

[59] Tsapparellas K, Jelev N, Waters J, Brunswicker S, Mihaylova L S. Vision-based runway detection and landing for unmanned aerial vehicle enhanced autonomy. In: Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA). Harbin, China: IEEE, 2023. 239−246

[60] Quessy A D, Richardson T S, Hansen M. Vision based semantic runway segmentation from simulation with deep convolutional neural networks. In: Proceedings of the AIAA SCITECH Forum. San Diego, USA: AIAA, 2022. Article No. 0680

[61] Liang Jie, Ren Jun, Li Lei, Qi Hang, Zhou Hong-Li. Airport runway detection algorithm based on accurate regression of typical geometric shapes. Acta Armamentarii, 2020, 41(10): 2045−2054

[62] Shang K J, Li X X, Liu C L, Ming L, Hu G F. An integrated navigation method for UAV autonomous landing based on inertial and vision sensors. In: Proceedings of the 2nd International Conference on Artificial Intelligence. Beijing, China: Springer, 2022. 182−193

[63] Shang Ke-Jun, Zheng Xin, Wang Liu-Jun, Hu Guang-Feng, Liu Chong-Liang. Image semantic segmentation-based navigation method for UAV auto-landing. Journal of Chinese Inertial Technology, 2020, 28(5): 586−594

[64] Wang Ping, Zhou Xue-Feng, An Ai-Min, He Qian-Yu, Zhang Ai-Hua. Robust and linear solving method for Perspective-n-point problem. Chinese Journal of Scientific Instrument, 2020, 41(9): 271−280

[65] Zhang Zhou-Yu. Research on Multi-source Information Based Guidance Technology for Unmanned Aerial Vehicle Autonomous Carrier-landing [Master thesis], Nanjing University of Aeronautics and Astronautics, China, 2017.

[66] Jiang Teng. Research on Position and Attitude Measurement Method of Multi Rotor UAV Based on Vision [Master thesis], Harbin Institute of Technology, China, 2017.

[67] Meng Y, Wang W, Ding Z X. Research on the visual/inertial integrated carrier landing guidance algorithm. International Journal of Advanced Robotic Systems, 2018, 15 (2

[68] Patruno C, Nitti M, Petitti A, Stella E, D'Orazio T. A vision-based approach for unmanned aerial vehicle landing. Journal of Intelligent & Robotic Systems, 2019, 95(2): 645−664

[69] Ren Li-Jun. Research on UAV Attitude Angle Measurement System Based on Airborne Single Camera [Master thesis], Hefei University of Technology, China, 2019.

[70] Pieniazek J. Measurement of aircraft approach using airfield image. Measurement, 2019, 141: 396−406 doi: 10.1016/j.measurement.2019.03.074

[71] Huang Wan-Ting. Estimation of Relative Motion Parameters Based on Visual Feature Information of Cooperative Targets [Master thesis], National University of Defense Technology, China, 2019.

[72] Li Jia-Huan. Research on Landing Guidance Technology of Rotor UAV Based on Airborne Vision [Master thesis], Nanjing University of Aeronautics and Astronautics, China, 2020.

[73] Shi Jia-Chen. Research on Monocular Vision Pose Estimation Method Based on Angle Parameterization [Master thesis], Nanjing University of Aeronautics and Astronautics, China, 2021.

[74] Lee B, Saj V, Kalathil D, Benedict M. Intelligent vision-based autonomous ship landing of VTOL UAVs. Journal of the American Helicopter Society, 2023, 68(2): 113−126 doi: 10.4050/JAHS.68.022010

[75] Wei Zhen-Zhong, Feng Guang-Kun, Zhou Dan-Ya, Ma Yue-Ming, Liu Ming-Kun, Luo Qi-Feng, et al. A review of position and orientation visual measurement methods and applications. Laser & Optoelectronics Progress, 2023, 60(3): Article No. 0312010

[76] Lecrosnier L, Boutteau R, Vasseur P, Savatier X, Fraundorfer F. Camera pose estimation based on PnL with a known vertical direction. IEEE Robotics and Automation Letters, 2019, 4(4): 3852−3859 doi: 10.1109/LRA.2019.2929982

[77] Wang Ping, He Wei-Long, Zhang Ai-Hua, Yao Peng-Peng, Xu Gui-Li. EPnL: An efficient and accurate algorithm to the PnL problem. Acta Automatica Sinica, 2022, 48(10): 2600−2610

[78] Jia Ning. An Independently Carrier Landing Method Research Using Point and Line Features for UAVs [Master thesis], National University of Defense Technology, China, 2016.

[79] Fang Dong-Fei. Research on Precise Localization Algorithm for Ground Target Based on Vision-based UAV [Master thesis], Harbin Institute of Technology, China, 2018.

[80] Brockers R, Bouffard P, Ma J, Matthies L, Tomlin C. Autonomous landing and ingress of micro-air-vehicles in urban environments based on monocular vision. In: Proceedings of the Micro- and Nanotechnology Sensors, Systems, and Applications III. Orlando, USA: SPIE, 2011. Article No. 803111

[81] Tang Deng-Qing. Image Sequence-based Target Pose Estimation Method and Application for Unmanned Aerial Vehicle [Ph.D. dissertation], National University of Defense Technology, China, 2019.

[82] Li Peng-Na. The Research on Pose Estimation Algorithm Based on Visual Features [Master thesis], Hubei University, China, 2022.

[83] Liu Chuan-Ge. Research on Autonomous Landing Technology of UAV for Mobile Platform [Master thesis], University of Electronic Science and Technology of China, China, 2022.

[84] Jing Jiang. The Research of Large Space Vision Shape Measurement Technology Based on UAV [Master thesis], Tianjin University, China, 2016.

[85] Hiba A, Gáti A, Manecy A. Optical navigation sensor for runway relative positioning of aircraft during final approach. Sensors, 2021, 21(6): Article No. 2203 doi: 10.3390/s21062203

[86] Li Kang-Yi. Research on Collision Recovery Technology of Shipborne UAV Based on Multi Guidance System [Master thesis], Nanjing University of Aeronautics and Astronautics, China, 2021.

[87] Deng H, Arif U, Fu Q, Xi Z Y, Quan Q, Cai K Y. Visual-inertial estimation of velocity for multicopters based on vision motion constraint. Robotics and Autonomous Systems, 2018, 107: 262−279 doi: 10.1016/j.robot.2018.06.010

[88] Wang Ni. Vision Navigation Technology Based on Multi-source Information Fusion for UAV [Master thesis], Xidian University, China, 2019.

[89] Kong Wei-Wei. Multi-sensor Based Autonomous Landing Guidance and Control System of a Fixed-wing Unmanned Aerial Vehicle [Ph.D. dissertation], National University of Defense Technology, China, 2017.

[90] Zhang L, Zhai Z J, He L, Wen P C, Niu W S. Infrared-inertial navigation for commercial aircraft precision landing in low visibility and GPS-denied environments. Sensors, 2019, 19(2): Article No. 408 doi: 10.3390/s19020408

[91] Nicholson D, Hendrick C M, Jaques E R, Horn J, Langelaan J W, Sydney A J. Scaled experiments in vision-based approach and landing in high sea states. In: Proceedings of the AIAA AVIATION Forum. Chicago, USA: AIAA, 2022. Article No. 3279

[92] Gróf T, Bauer P, Watanabe Y. Positioning of aircraft relative to unknown runway with delayed image data, airdata and inertial measurement fusion. Control Engineering Practice, 2022, 125: Article No. 105211 doi: 10.1016/j.conengprac.2022.105211

[93] Meng Y, Wang W, Han H, Zhang M Y. A vision/radar/INS integrated guidance method for shipboard landing. IEEE Transactions on Industrial Electronics, 2019, 66(11): 8803−8810 doi: 10.1109/TIE.2019.2891465

[94] Shang Ke-Jun, Zheng Xin, Wang Liu-Jun, Hu Guang-Feng, Liu Chong-Liang. Pose ambiguity correction algorithm for UAV mobile platform landing. Journal of Chinese Inertial Technology, 2020, 28(4): 462−468

[95] Huang Wei-Hua, He Jia-Le, Chen Yang, Zhang Zheng, Guo Qing-Rui. UAV vision/INS navigation algorithm based on grey model and improved particle filter. Journal of Chinese Inertial Technology, 2021, 29(4): 459−466

[96] Hecker P, Angermann M, Bestmann U, Dekiert A, Wolkow S. Optical aircraft positioning for monitoring of the integrated navigation system during landing approach. Gyroscopy and Navigation, 2019, 10(4): 216−230 doi: 10.1134/S2075108719040084

[97] Dai Bo, He Yu-Qing, Gu Feng, Yang Li-Ying, Xu Wei-Liang. Multi-sensor fusion for unmanned aerial vehicles based on the combination of filtering and optimization. Scientia Sinica Informationis, 2020, 50(12): 1919−1931 doi: 10.1360/SSI-2019-0237

[98] Dinh T H, Hong H L T, Dinh T N. State estimation in visual inertial autonomous helicopter landing using optimisation on manifold. arXiv preprint arXiv: 1907.06247, 2019.

[99] Wang Da-Yuan, Li Tao, Zhuang Guang-Chen, Li Zhi. A high-altitude scene scale error estimation method based on inertial/laser ranging/visual odometry combination. Navigation Positioning and Timing, 2022, 9(4): 70−76

[100] Clark R, Wang S, Wen H K, Markham A, Trigoni N. VINet: Visual-inertial odometry as a sequence-to-sequence learning problem. In: Proceedings of the 31st AAAI Conference on Artificial Intelligence. San Francisco, USA: AAAI, 2017. 3995−4001

[101] Greff K, Srivastava R K, Koutník J, Steunebrink B R, Schmidhuber J. LSTM: A search space odyssey. IEEE Transactions on Neural Networks and Learning Systems, 2017, 28(10): 2222−2232 doi: 10.1109/TNNLS.2016.2582924

[102] Baldini F, Anandkumar A, Murray R M. Learning pose estimation for UAV autonomous navigation and landing using visual-inertial sensor data. In: Proceedings of the American Control Conference (ACC). Denver, USA: IEEE, 2020. 2961−2966

[103] Zhang X P, He Z Z, Ma Z, Jun P, Yang K. VIAE-Net: An end-to-end altitude estimation through monocular vision and inertial feature fusion neural networks for UAV autonomous landing. Sensors, 2021, 21(18): Article No. 6302 doi: 10.3390/s21186302

[104] Wang Hao. Research on the Design of Simulation Platform and Pose Estimation Algorithm in Asteroid Probe Landing Period [Master thesis], Southeast University, China, 2021.
