Relative Pose Estimation Algorithm for Unmanned Aerial Vehicles Based on Weighted Fusion of Multiple Keypoint Detection


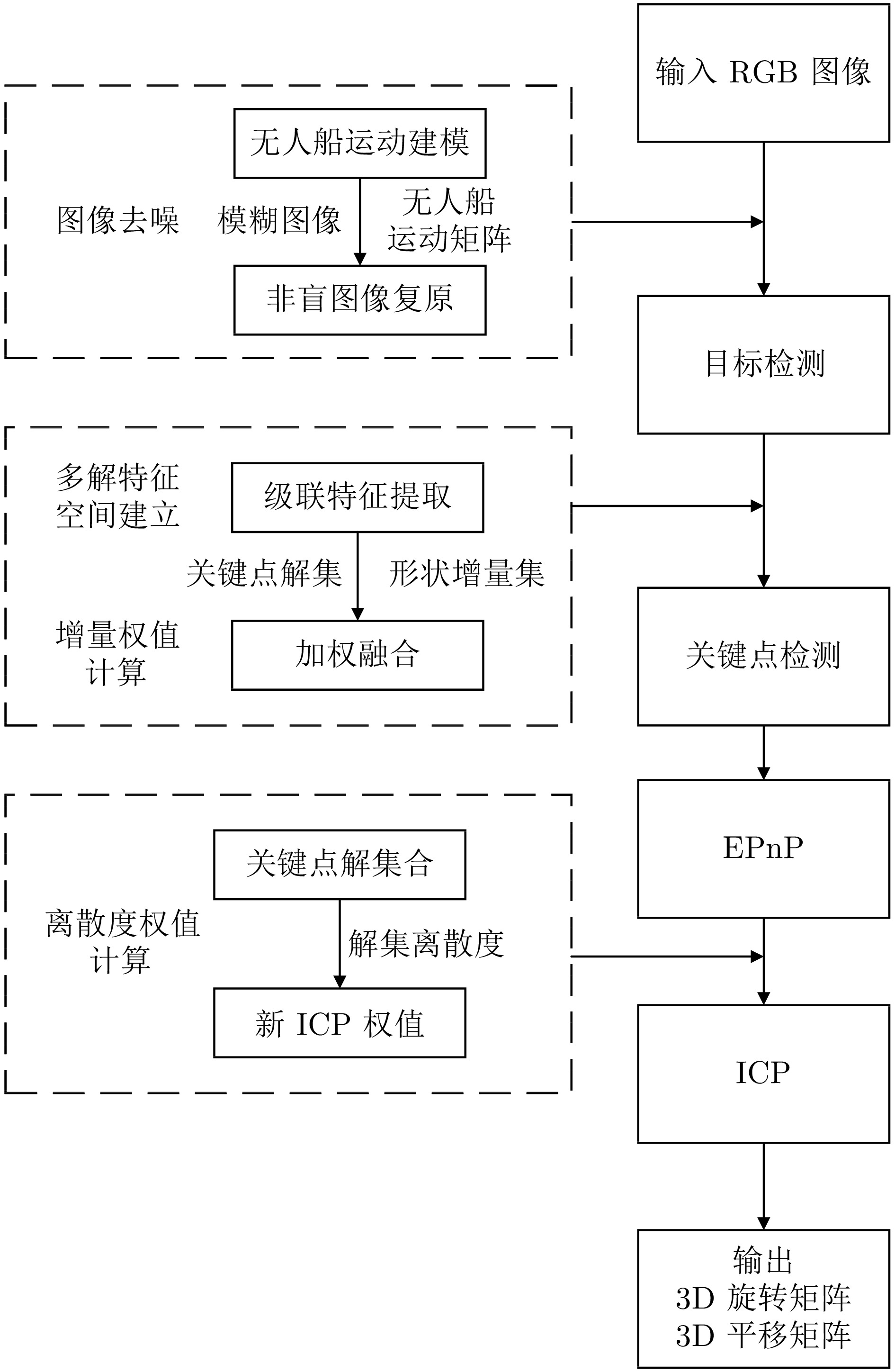

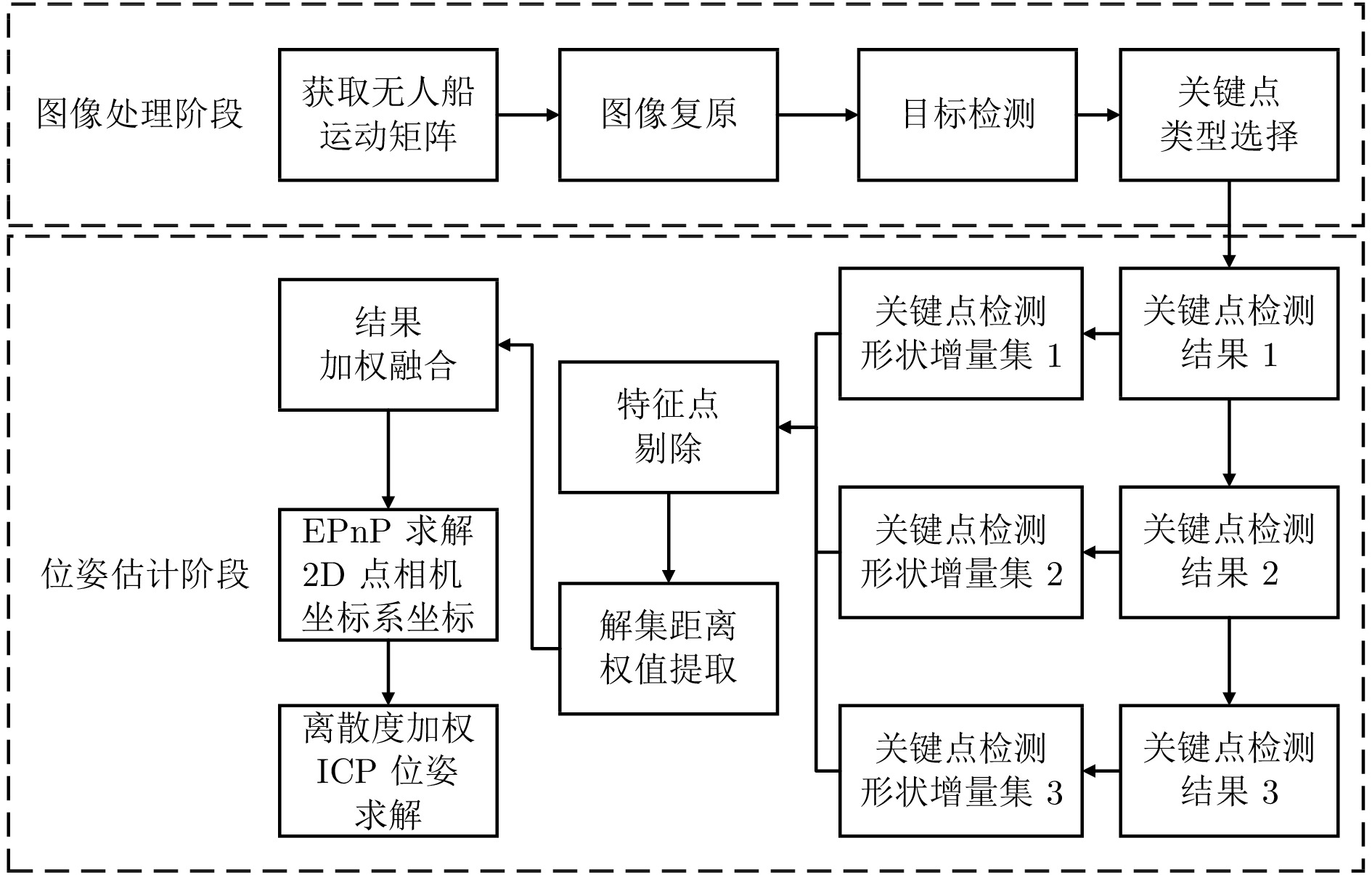

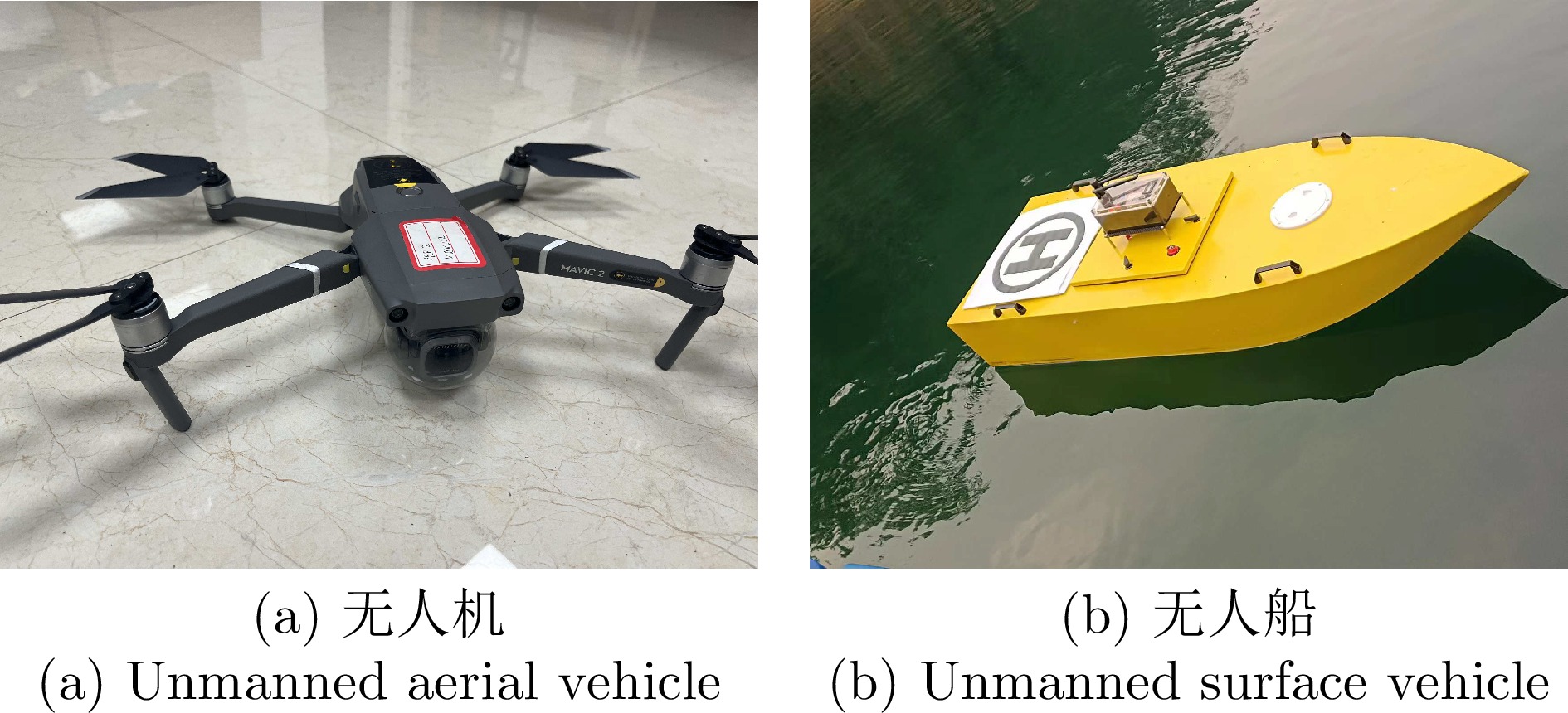

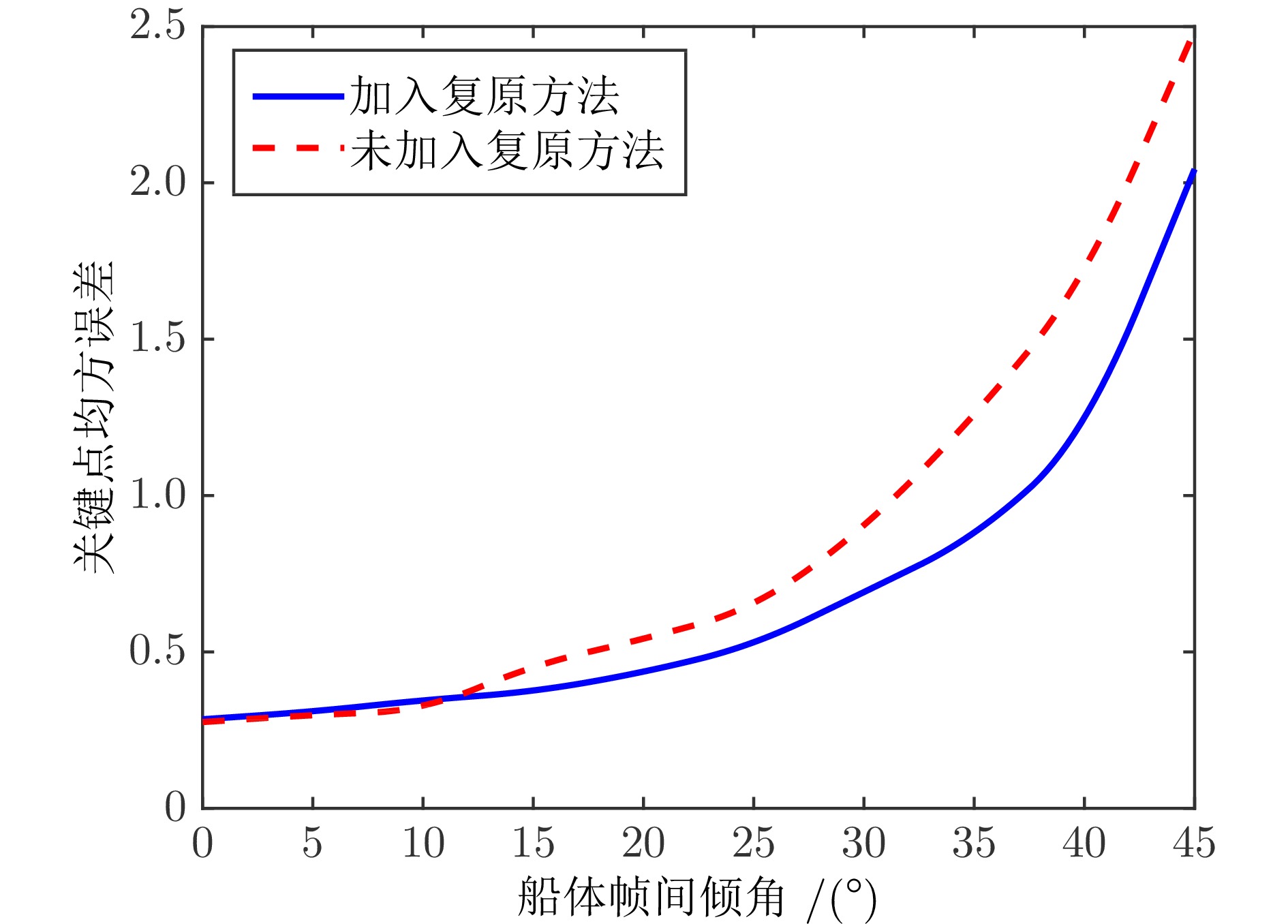

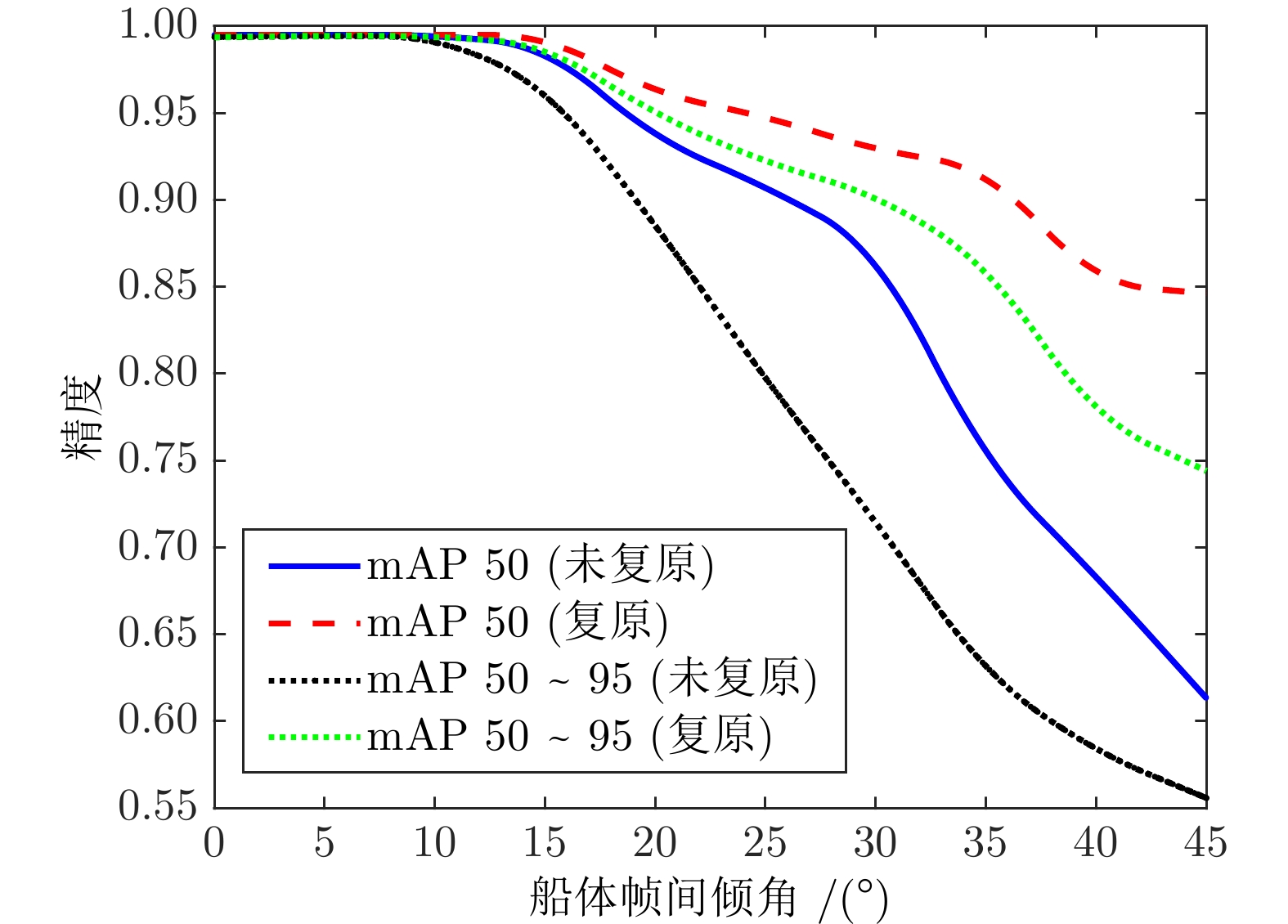

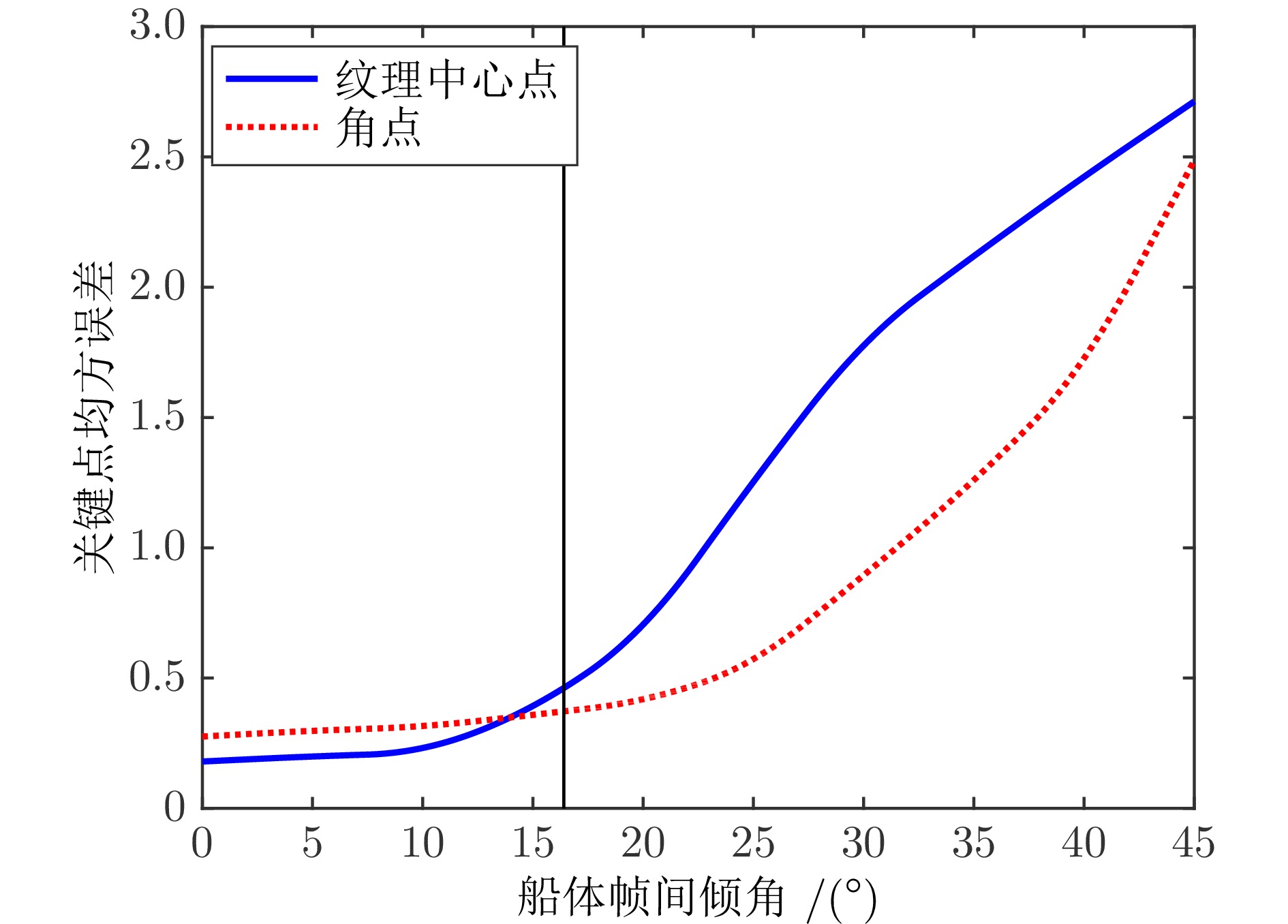

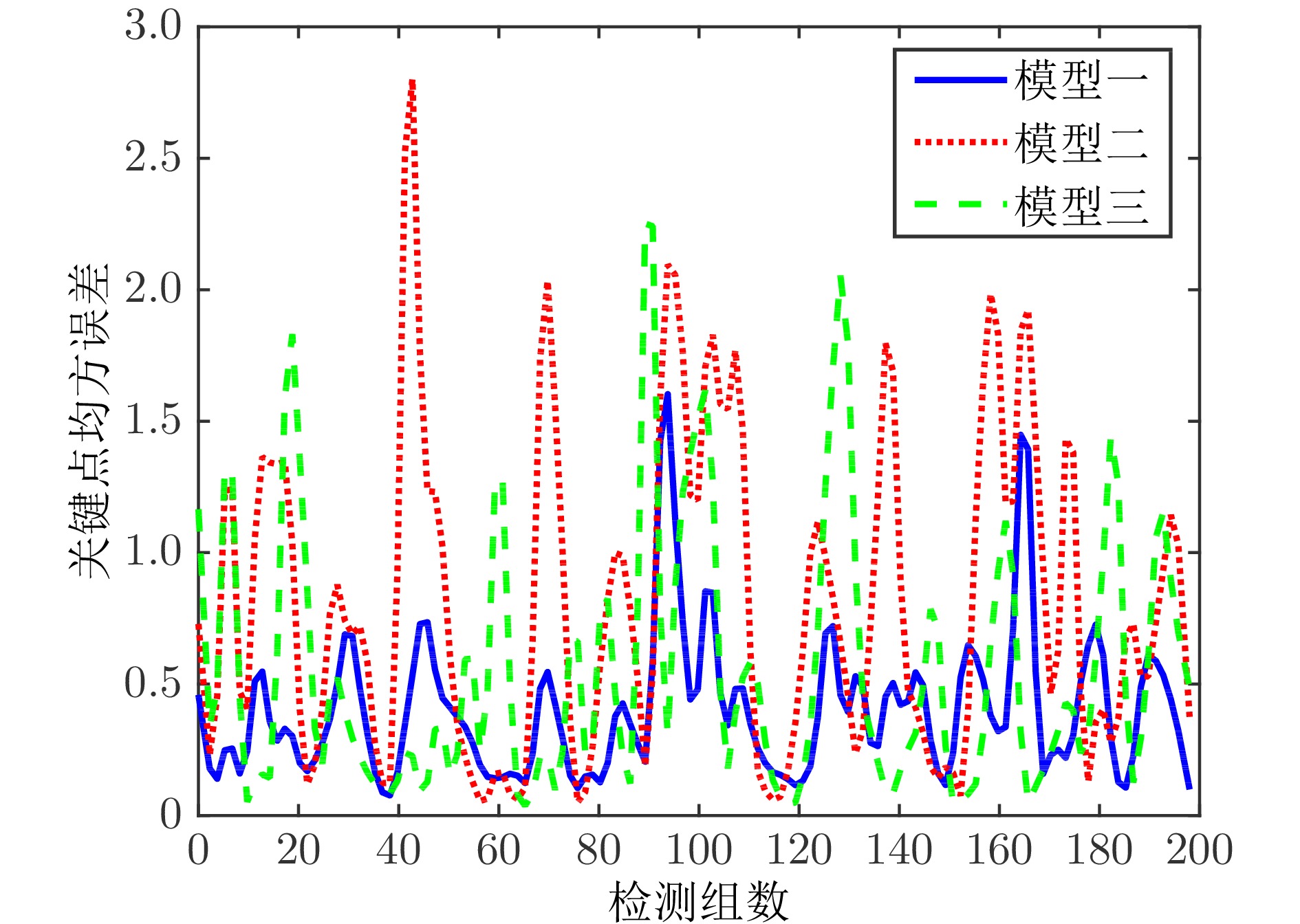

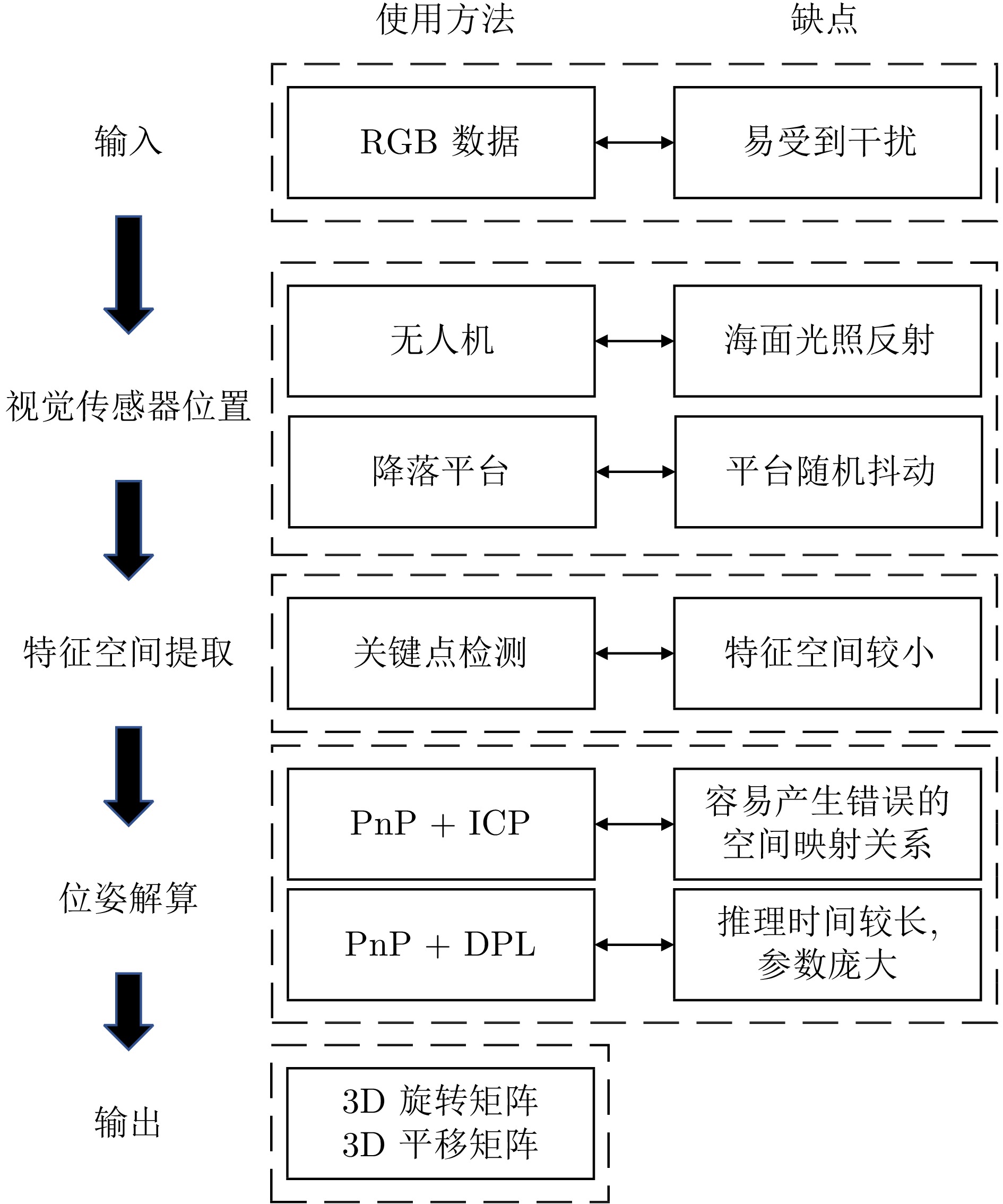

Abstract: When an unmanned aerial vehicle (UAV) lands on an unmanned surface vessel, water-surface waves move the vessel and cause motion blur in the images captured by its onboard camera. As a result, the relative pose of the UAV is estimated with low accuracy and poor robustness. To address this problem, a 6D object pose estimation algorithm based on weighted fusion of keypoints from multiple models is proposed to improve the accuracy and robustness of pose estimation. First, an inter-frame jitter model is designed from the motion information measured by the vessel's gyroscope; it restores image information and thereby reduces image noise. Then, a multi-model cascaded-regression feature extraction algorithm is designed: several models detect the images acquired by the shipborne vision system, which increases the diversity of the feature space. Meanwhile, the set of keypoint-localization shape increments produced during detection is used as the fusion weights for the models, which improves the robustness of the feature space. Next, efficient perspective-n-point (EPnP) is used to compute the keypoint coordinates in the camera coordinate system, which converts the perspective-n-point (PnP) problem into an iterative closest point (ICP) problem. Finally, each keypoint is weighted according to the dispersion of its solution set, and the pose is solved with the ICP algorithm to weaken the influence of depth information on the pose. Simulation results show that the algorithm builds a more accurate feature space, reduces the feature-mapping loss during pose solving, and ultimately improves the accuracy of pose estimation.
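The cascaded-regression step can be pictured with a short sketch in the style of the supervised descent method. It assumes linear stage regressors `(R, b)` and a hypothetical shape-indexed feature extractor `extract_features`; the paper's actual features and regressors are not described here. What the sketch shows is the mechanism the abstract relies on: each stage adds a shape increment ΔS_t to the current keypoint estimate, and the recorded increments form the "shape increment set" later reused as fusion weights.

```python
import numpy as np


def cascaded_regression(image, init_shape, stages, extract_features):
    """Refine K keypoints with a cascade of linear regressors (SDM-style sketch).

    init_shape       -- (K, 2) initial keypoint coordinates
    stages           -- list of (R, b); R has shape (2K, F), b has shape (2K,)
    extract_features -- hypothetical callable (image, shape) -> (F,) features
    Returns the final shape and the list of per-stage increments (the
    "shape increment set").
    """
    shape = np.asarray(init_shape, dtype=float).copy()
    increments = []
    for R, b in stages:
        phi = extract_features(image, shape)        # shape-indexed features
        delta = (R @ phi + b).reshape(shape.shape)  # predicted increment dS_t
        shape = shape + delta                       # S_t = S_{t-1} + dS_t
        increments.append(delta)
    return shape, increments


# Toy usage: the "features" are just the current coordinates, and every stage
# moves the shape halfway towards a fixed target, so the increments shrink.
target = np.array([[10.0, 20.0], [30.0, 40.0]])
init = np.zeros_like(target)
n = target.size
stage = (-0.5 * np.eye(n), 0.5 * target.ravel())
final_shape, incs = cascaded_regression(
    None, init, [stage] * 4, lambda img, s: s.ravel()
)
```

In the toy run the residual halves at every stage, so after four stages the shape sits at 1/16 of the initial error and the increment magnitudes decrease monotonically, which is the kind of convergence signal a weighting rule can exploit.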


Table 1 Proportion of minimum detection loss for each keypoint type

| Keypoint detection type | Corner detection | Texture center-point detection | Selection-algorithm detection |
|---|---|---|---|
| Optimal proportion | 54.7% | 45.3% | 90.6% |

Table 2 Mean loss and variance of different models

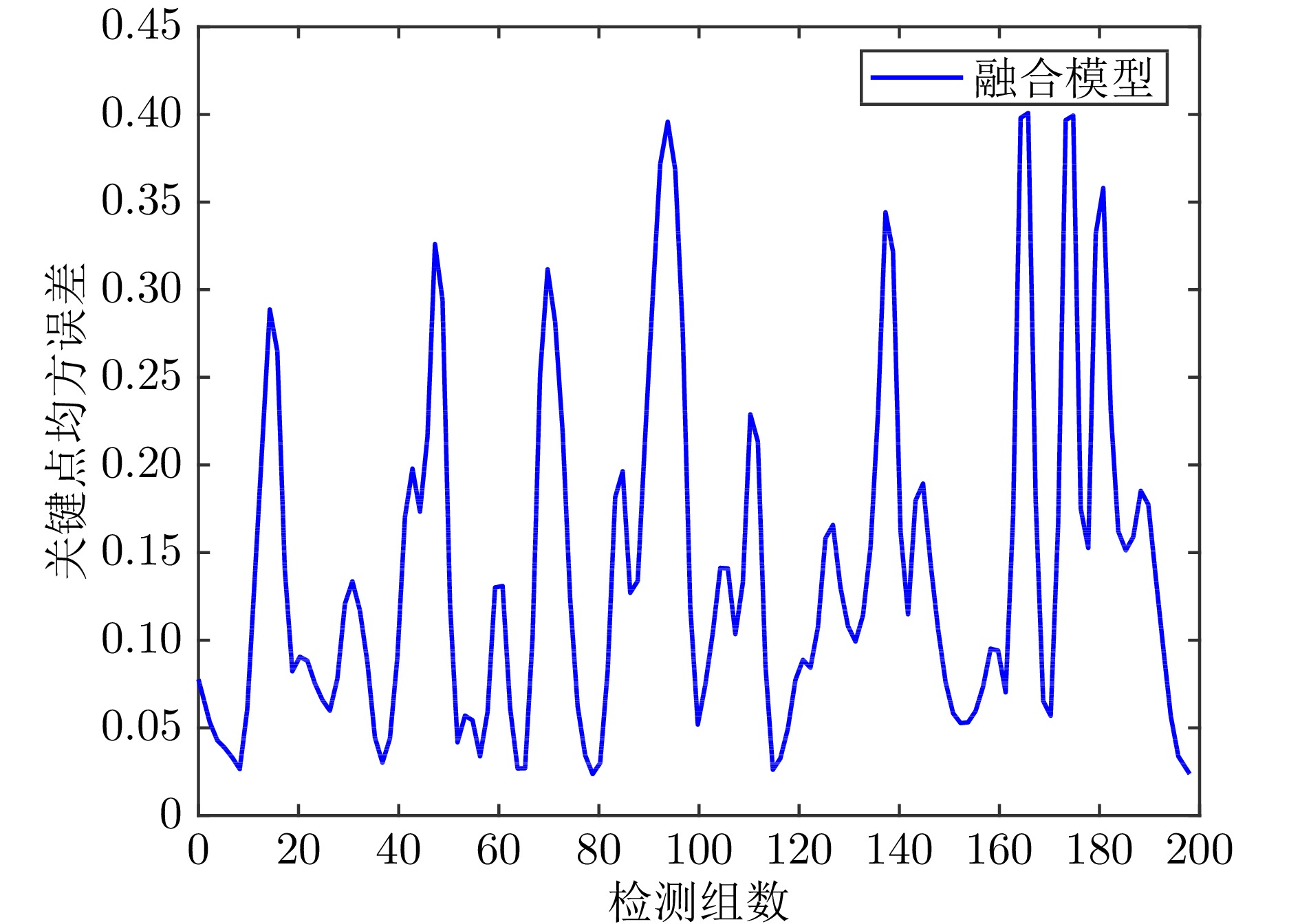

模型名称 均值损失 方差 模型一 0.4001 0.1184 模型二 0.8213 0.5607 模型三 0.5640 0.4226 加权融合模型 0.1401 0.0157 表 3 不同距离中两种ICP算法的均值精度和方差
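Table 2 reports a lower mean loss and variance for the weighted fusion model than for any single model. A minimal sketch of keypoint-wise fusion follows. The weighting rule is an assumption made for illustration only: each model's estimate of a keypoint is weighted by the inverse magnitude of its final shape increment, on the reasoning that a regressor still taking large steps has not converged. The exact mapping from the increment set to weights used in the paper is not given in this section.

```python
import numpy as np


def fuse_keypoints(shapes, last_increments, eps=1e-6):
    """Keypoint-wise weighted fusion of M models' outputs (illustrative rule).

    shapes          -- (M, K, 2) final keypoint estimates of M models
    last_increments -- (M, K, 2) final-stage shape increment of each model
    Assumed rule: w[m, k] is proportional to 1 / (|dS[m, k]| + eps), normalised
    over the models, so a model that has settled on keypoint k counts more.
    """
    shapes = np.asarray(shapes, dtype=float)
    mags = np.linalg.norm(np.asarray(last_increments, dtype=float), axis=2)  # (M, K)
    w = 1.0 / (mags + eps)
    w = w / w.sum(axis=0, keepdims=True)         # weights sum to 1 per keypoint
    fused = (w[..., None] * shapes).sum(axis=0)  # (K, 2) fused keypoints
    return fused, w


# Two models, one keypoint: model A's last step was half as large as model B's,
# so A gets twice B's weight.
shapes = [[[0.0, 0.0]], [[3.0, 0.0]]]
last_steps = [[[1.0, 0.0]], [[0.0, 2.0]]]
fused, weights = fuse_keypoints(shapes, last_steps, eps=0.0)
```

Weighting per keypoint rather than per model lets one model dominate where it is reliable while another dominates elsewhere; a single scalar weight per model would be the simpler alternative.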

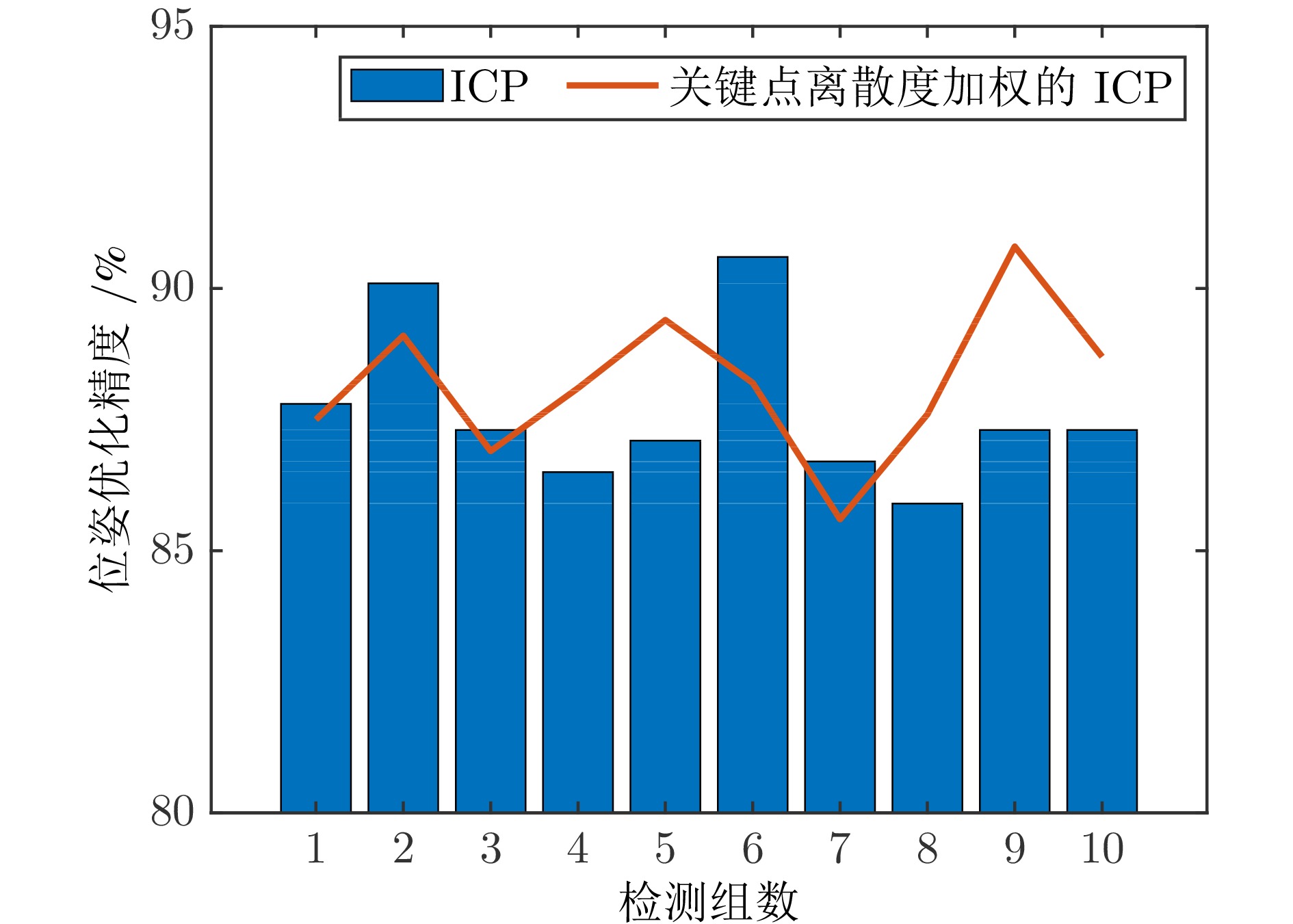

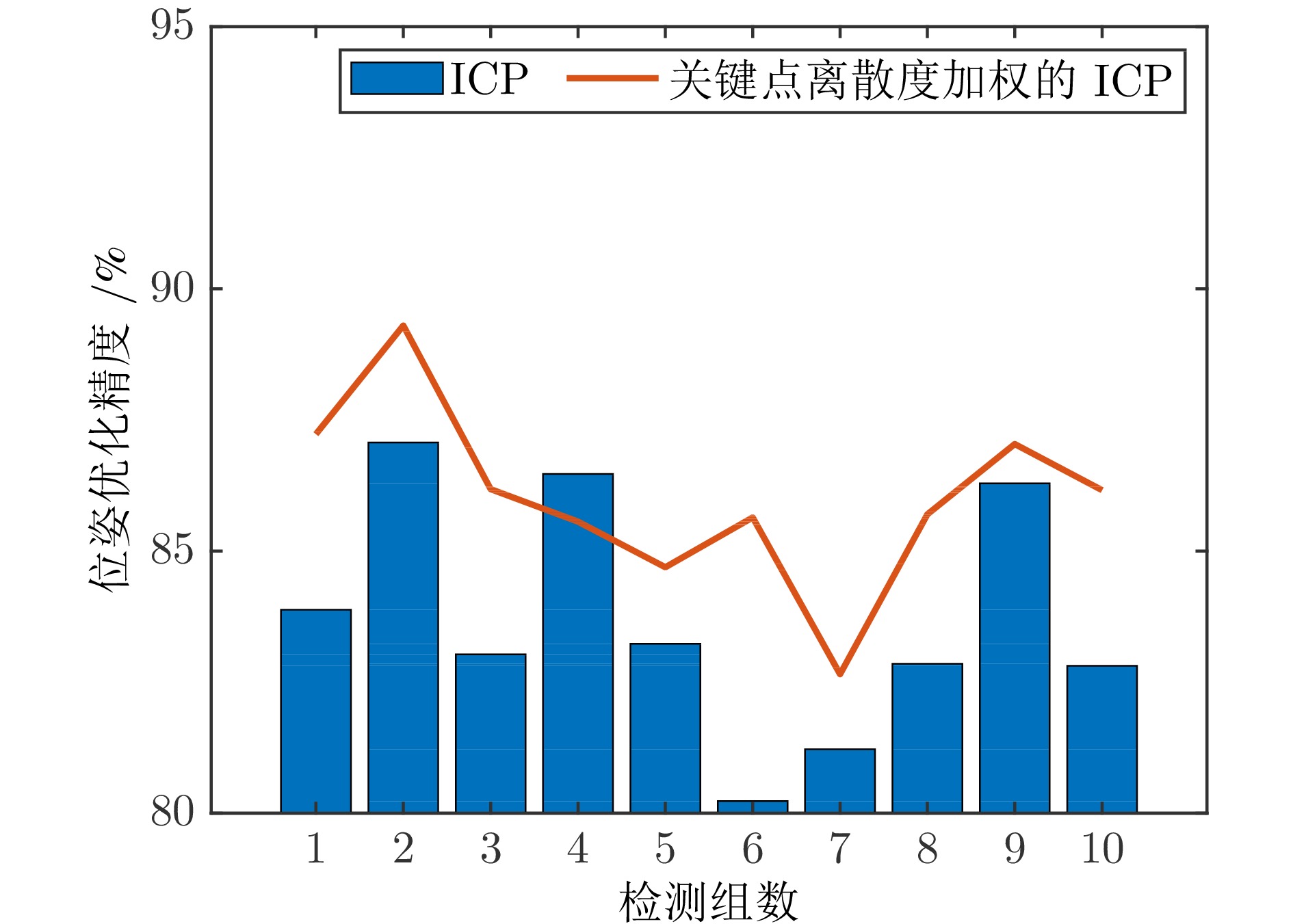

Table 3 Mean accuracy and variance of two ICP algorithms in different distances

距离 算法 均值精度 方差 近距离(5 m) ICP 87.34% 2.30 近距离(5 m) 关键点离散度加权的ICP 88.08% 2.06 远距离(15 m) ICP 83.71% 5.13 远距离(15 m) 关键点离散度加权的ICP 86.05% 2.98 表 4 不同船体帧间倾角下的各算法位姿估计精度 (%)
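The dispersion-weighted ICP compared in Table 3 can be sketched as follows, under stated assumptions. Each keypoint is assumed to have several camera-frame estimates (for example, EPnP solutions obtained from the different models); its dispersion is taken as the mean squared distance of those estimates from their centroid, and its weight as the normalized inverse dispersion. These choices are illustrative, not the paper's. Because keypoint correspondences are known, each ICP iteration reduces to a closed-form weighted rigid alignment (weighted Kabsch via SVD), which is what `weighted_rigid_align` computes.

```python
import numpy as np


def dispersion_weights(estimates, eps=1e-9):
    """estimates: (N, K, 3) array holding N camera-frame estimates of K keypoints.

    Returns the mean position of each keypoint (K, 3) and normalised weights (K,)
    inversely proportional to each keypoint's dispersion (assumed rule).
    """
    est = np.asarray(estimates, dtype=float)
    mean = est.mean(axis=0)                                # (K, 3)
    disp = ((est - mean) ** 2).sum(axis=2).mean(axis=0)    # mean squared spread, (K,)
    w = 1.0 / (disp + eps)
    return mean, w / w.sum()


def weighted_rigid_align(model_pts, cam_pts, weights):
    """Weighted Kabsch: R, t minimising sum_i w_i * |R p_i + t - q_i|^2."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(cam_pts, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    p_bar, q_bar = w @ P, w @ Q                            # weighted centroids
    H = (P - p_bar).T @ (w[:, None] * (Q - q_bar))         # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))                 # avoid a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_bar - R @ p_bar
    return R, t


# Synthetic check: 5 model keypoints (object frame, zero centroid), a known pose,
# and 3 estimates per keypoint; keypoint 4's estimates are scattered and biased.
c, s = np.cos(np.deg2rad(30)), np.sin(np.deg2rad(30))
R_true = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.2, 10.0])
P = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [-1, -1, 0], [0, 0, -1]], dtype=float)
Q = P @ R_true.T + t_true
estimates = np.repeat(Q[None], 3, axis=0)
estimates[:, 4, 0] += [1.0, 3.0, 5.0]

mean_pts, w = dispersion_weights(estimates)
R_w, t_w = weighted_rigid_align(P, mean_pts, w)           # dispersion-weighted
R_u, t_u = weighted_rigid_align(P, mean_pts, np.ones(5))  # uniform weights
```

In this synthetic case the scattered keypoint receives a negligible weight, so the weighted solve recovers the true pose, while uniform weights let its biased mean shift the translation by 0.6 along x.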

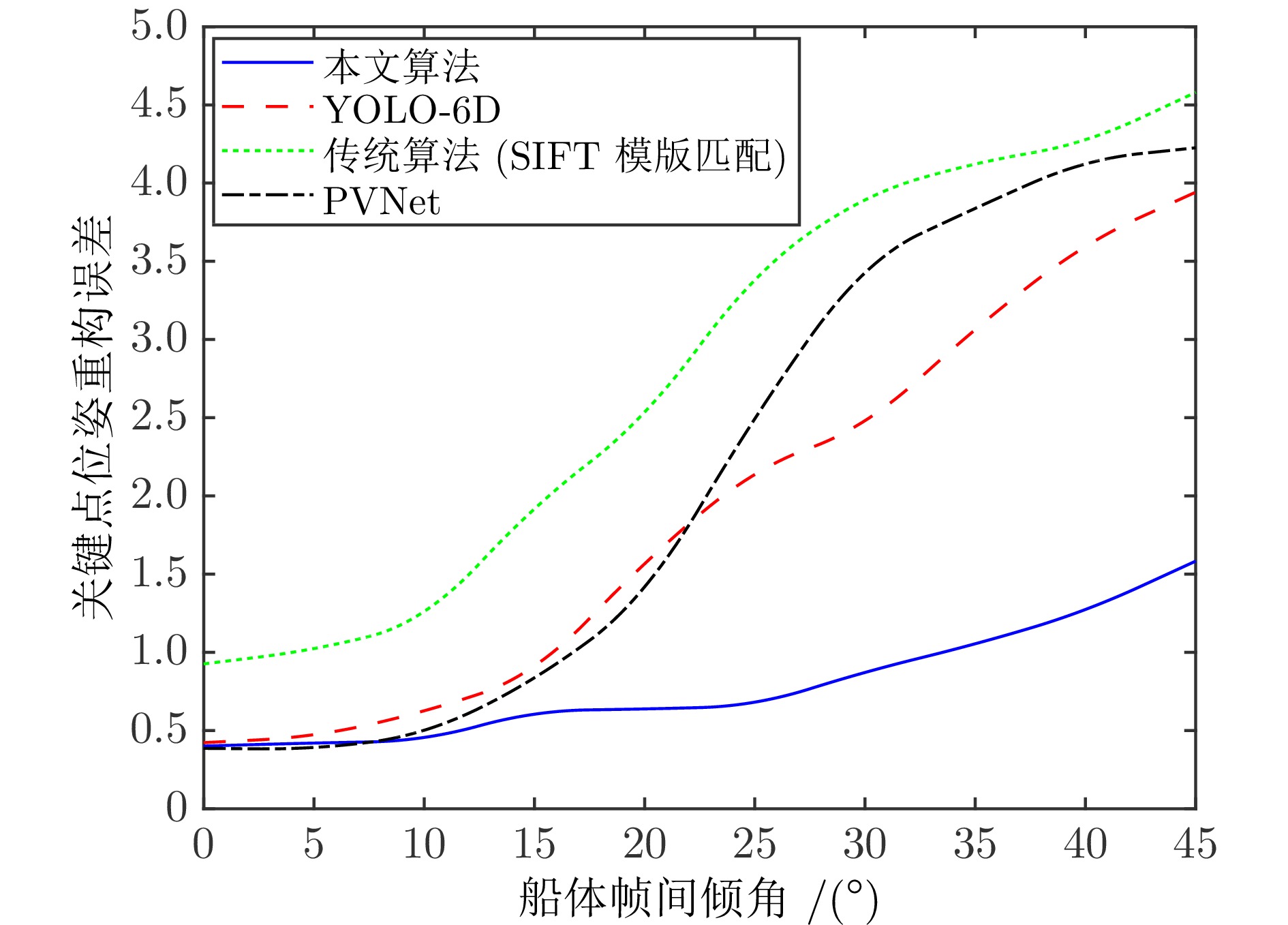

Table 4 Pose estimation accuracy of various algorithms under different ship hull inter-frame angles (%)

帧间倾角 $ 0^{\circ } $ $ 5^{\circ } $ $ 10^{\circ } $ $ 15^{\circ } $ $ 20^{\circ } $ $ 25^{\circ } $ $ 30^{\circ } $ $ 35^{\circ } $ $ 40^{\circ } $ $ 45^{\circ } $ 本文算法 94.2 93.0 90.4 89.1 88.4 85.3 81.2 82.3 78.3 72.4 PVNet 93.3 94.7 91.3 82.6 71.3 56.7 45.3 44.0 41.3 36.0 YOLO-6D 92.0 92.7 88.7 81.3 72.7 63.3 55.3 50.7 46.7 39.3 传统算法(SIFT模版匹配) 83.4 83.7 81.0 73.8 64.8 54.0 45.3 42.6 39.6 33.9 -
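Table 4 sweeps the inter-frame tilt of the hull. A minimal sketch of gyroscope-based inter-frame jitter compensation is given below. It assumes the camera is rigidly mounted on the vessel, that the inter-frame motion is a pure rotation R obtained by integrating the gyroscope (translation negligible relative to the viewing distance), and that the intrinsics K are known. Under these assumptions a pixel in the jittered frame maps back to the reference frame through the homography H = K Rᵀ K⁻¹. The paper's jitter model also restores blurred image content; that deblurring part is not sketched, and the intrinsics and angles below are hypothetical.

```python
import numpy as np


def rotation_from_rpy(roll, pitch, yaw):
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    Ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    Rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx


def jitter_homography(K, R):
    """Map pixels of the rotated (jittered) frame back to the reference frame.

    R rotates reference-camera coordinates into current-camera coordinates
    (assumed convention, e.g. integrated from gyroscope rates).
    """
    return K @ R.T @ np.linalg.inv(K)


def warp_points(H, pts):
    """Apply a 3x3 homography to an (N, 2) array of pixel coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


# Synthetic check with hypothetical intrinsics and a 5 / -3 / 1 degree jitter.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
R = rotation_from_rpy(np.deg2rad(5), np.deg2rad(-3), np.deg2rad(1))
X_ref = np.array([[0.5, -0.2, 10.0], [-1.0, 0.8, 12.0], [0.0, 0.0, 8.0]])


def project(X):
    """Pinhole projection of (N, 3) camera-frame points to pixels."""
    x = X @ K.T
    return x[:, :2] / x[:, 2:3]


x_ref = project(X_ref)          # pixels before the jitter
x_jit = project(X_ref @ R.T)    # same points seen after rotation R
x_fixed = warp_points(jitter_homography(K, R), x_jit)
```

Warping whole images instead of points would apply the same H per pixel (for example with an image-warping routine); the point version is used here so the result can be checked exactly.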

