Robot Perception, Planning, and Control Technologies for Intelligent Biochemical Laboratories


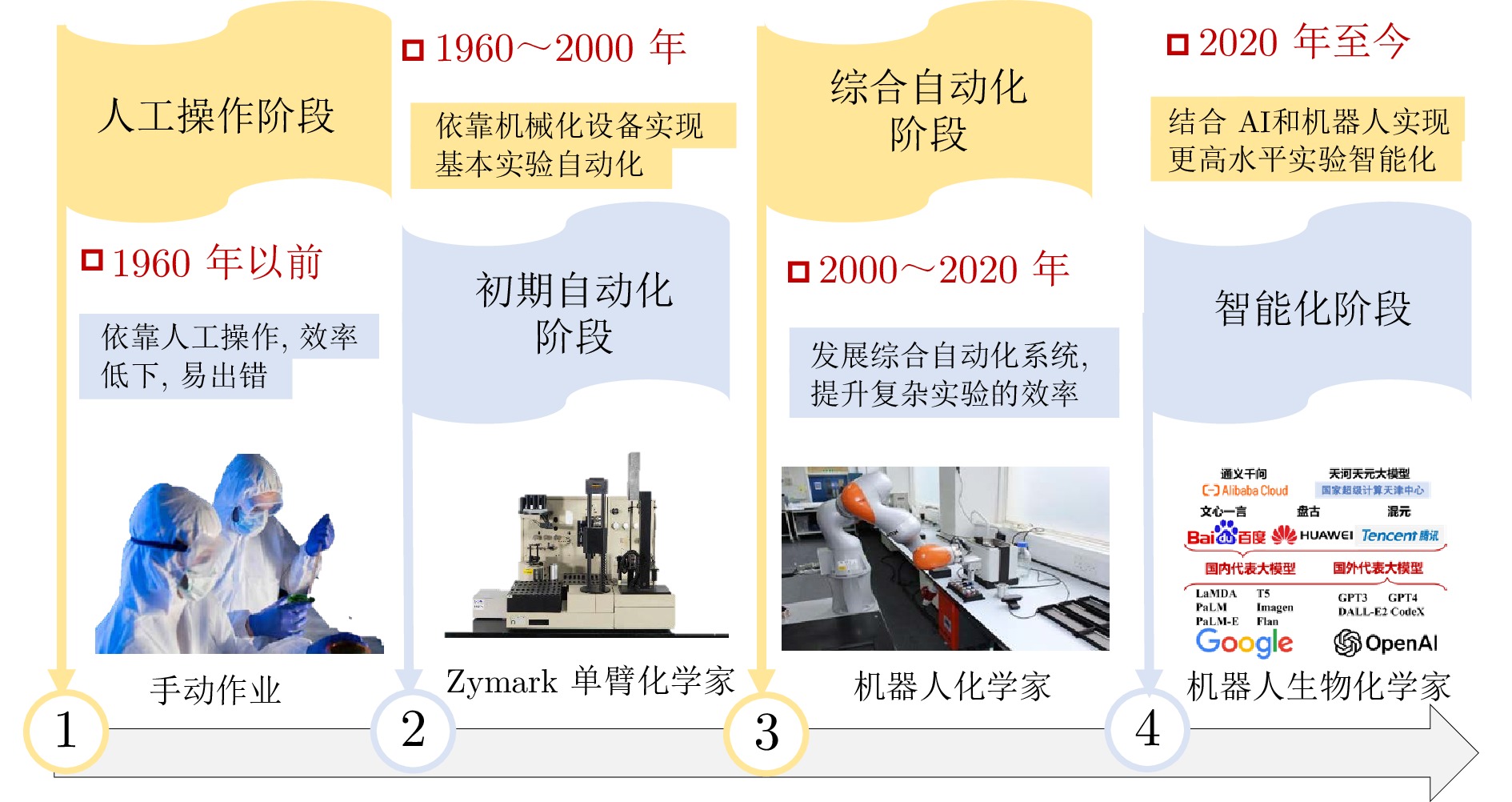

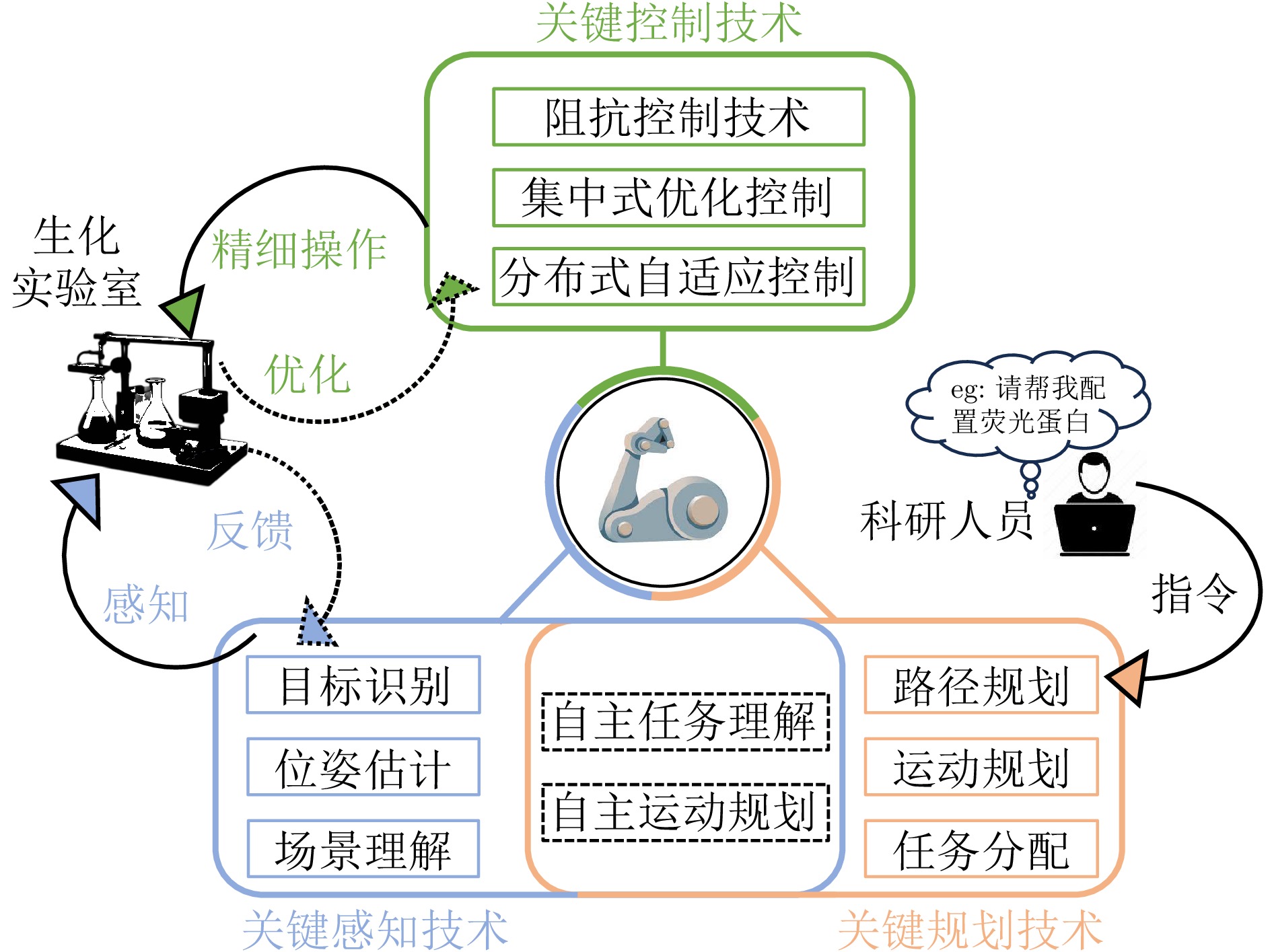

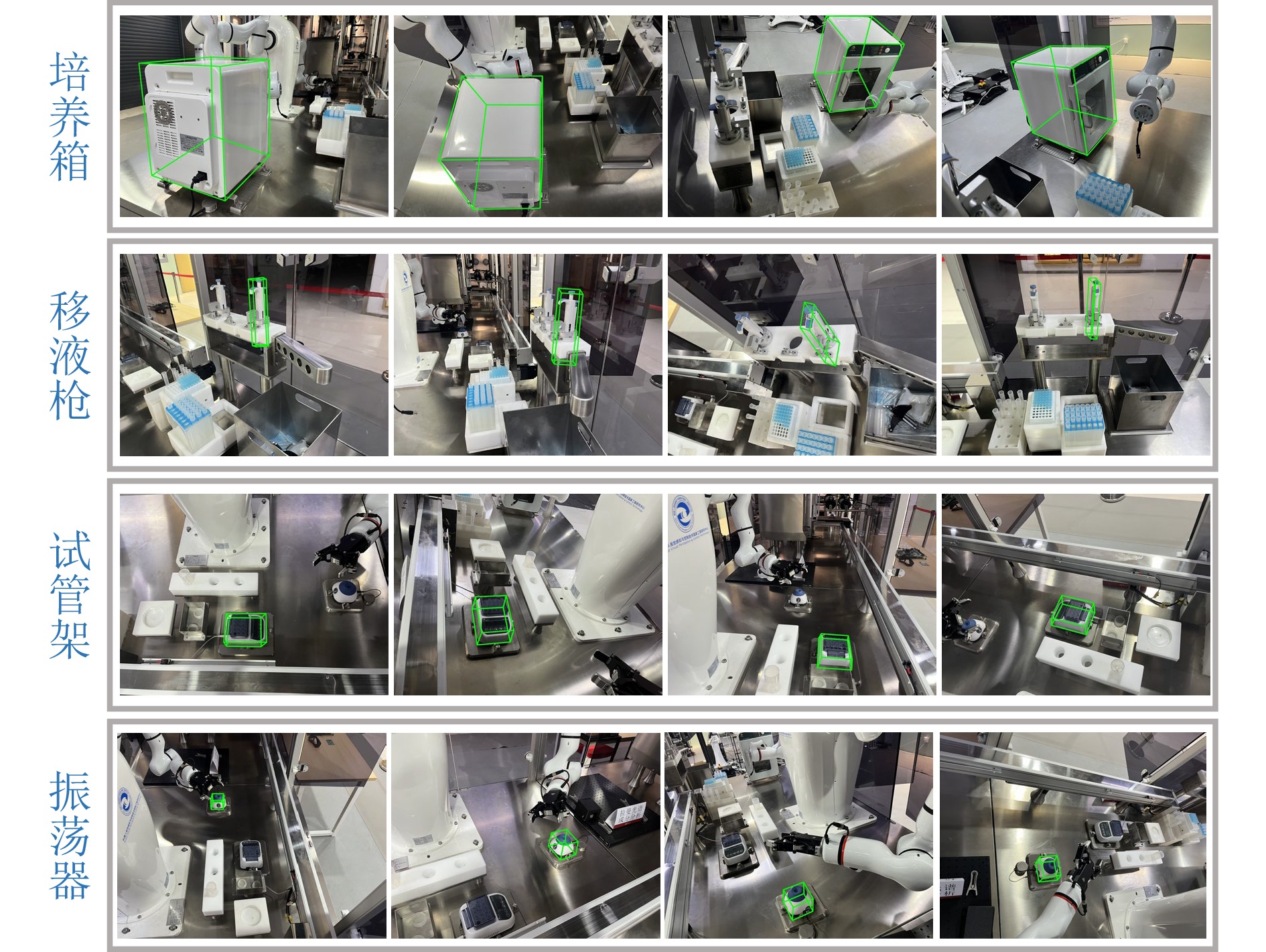

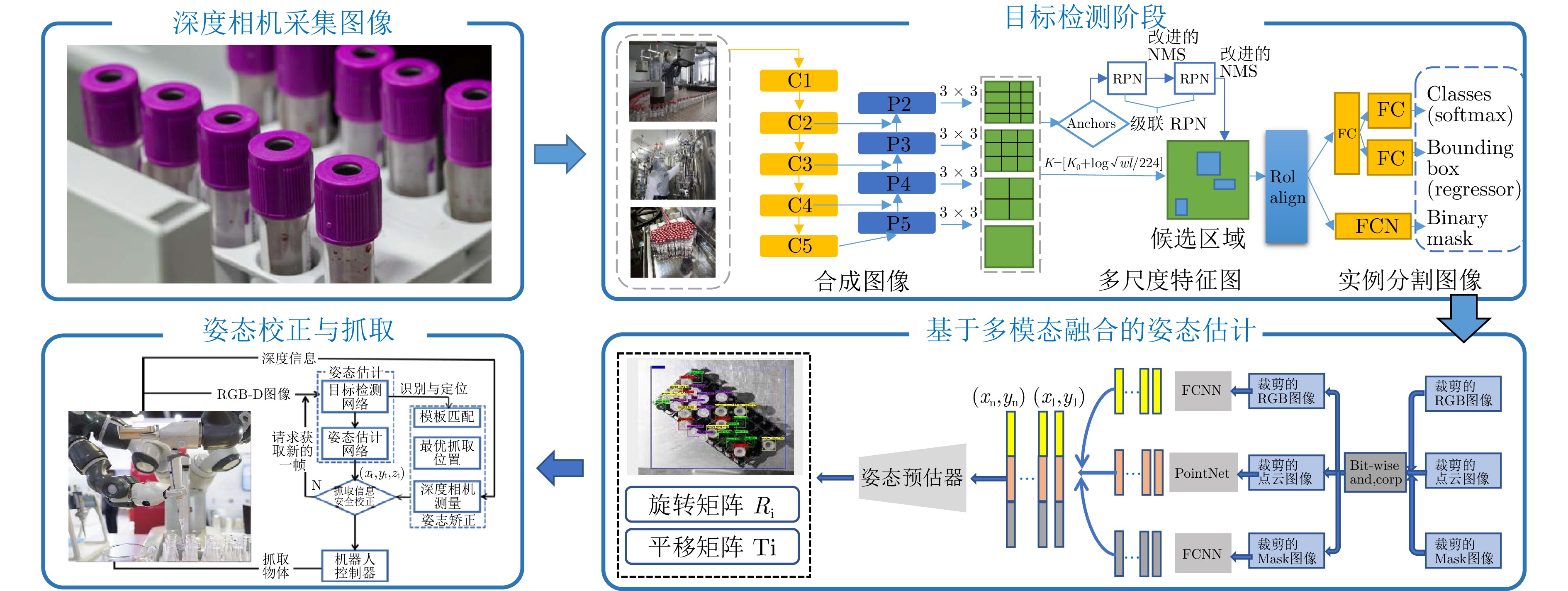

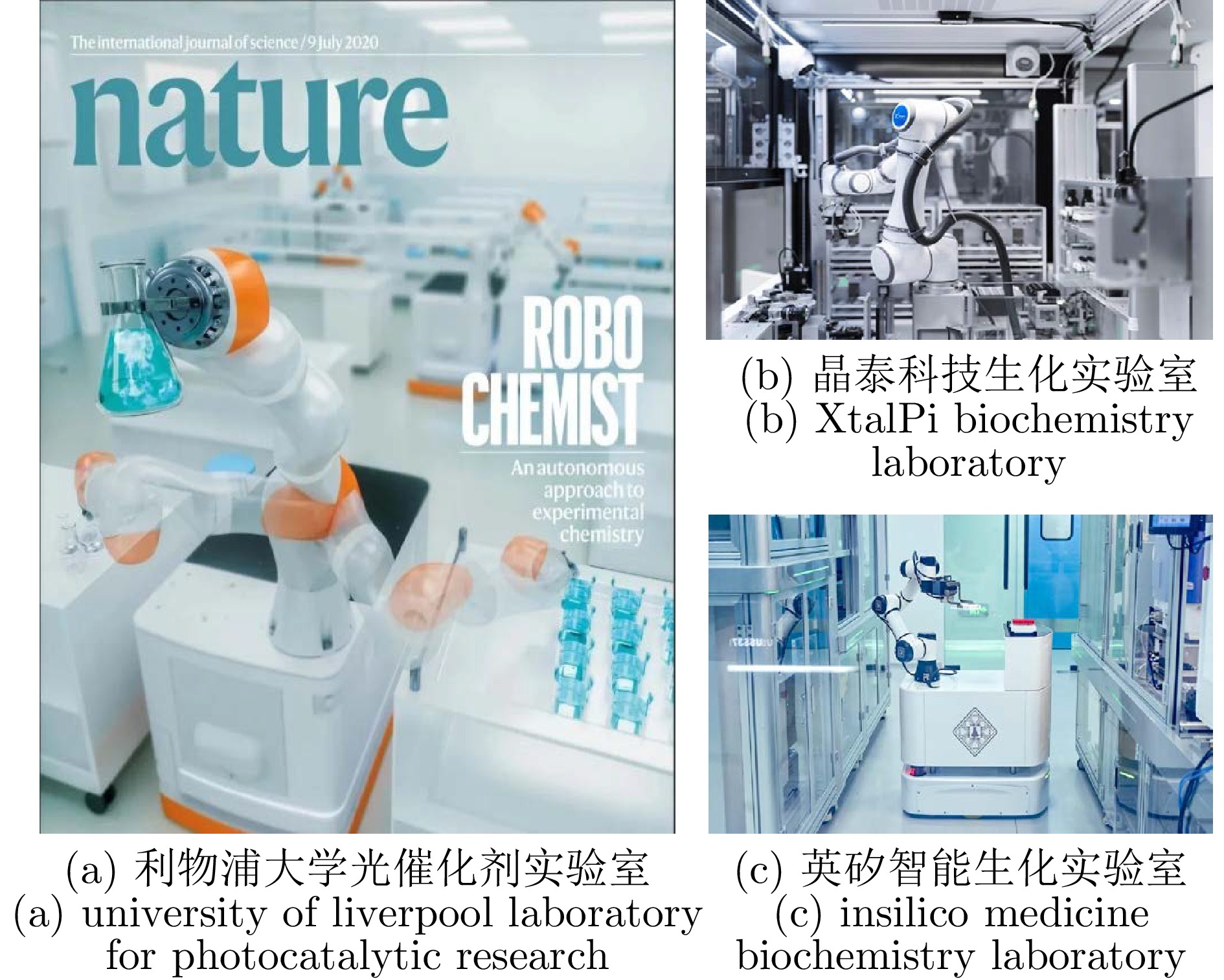

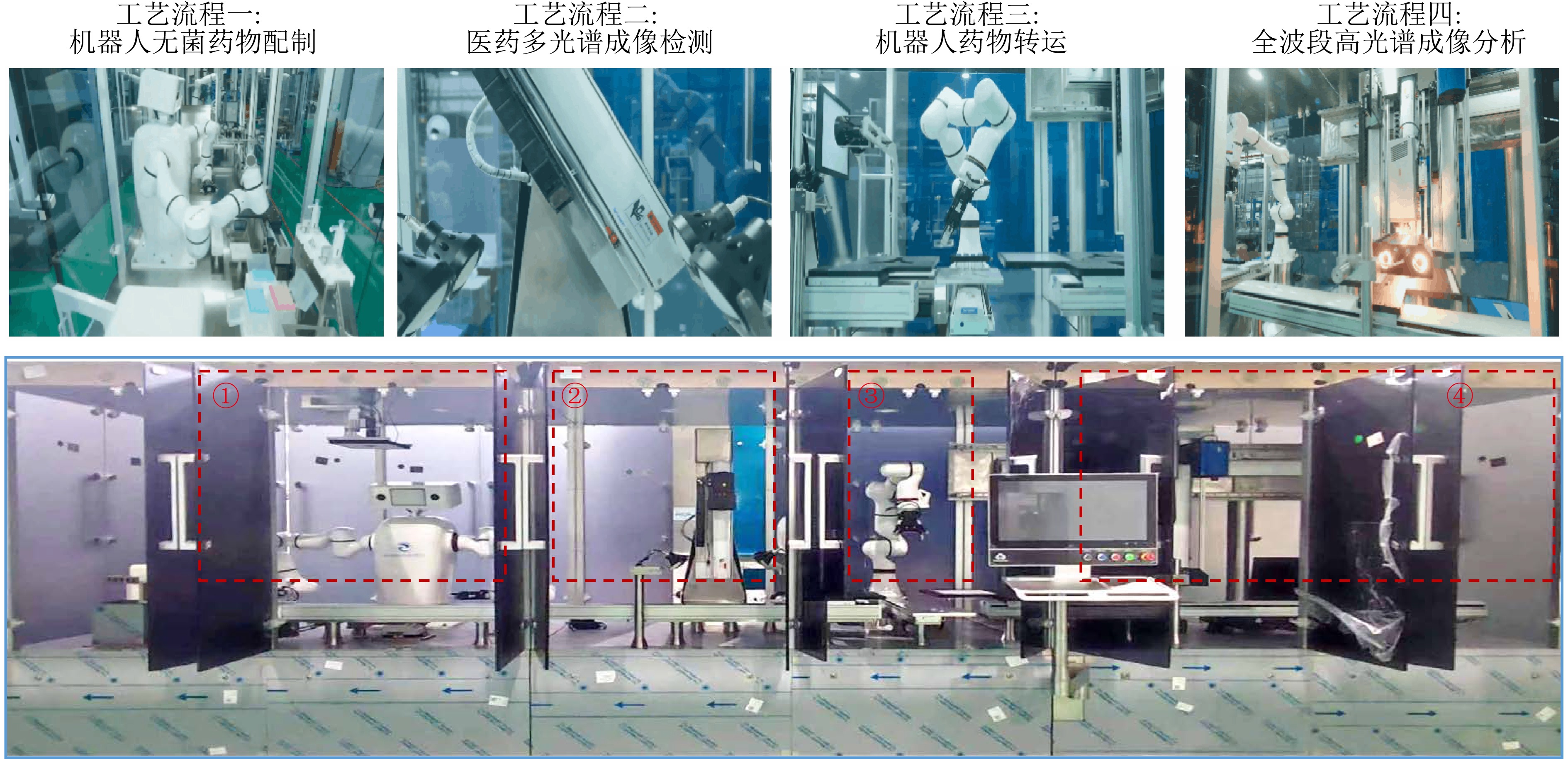

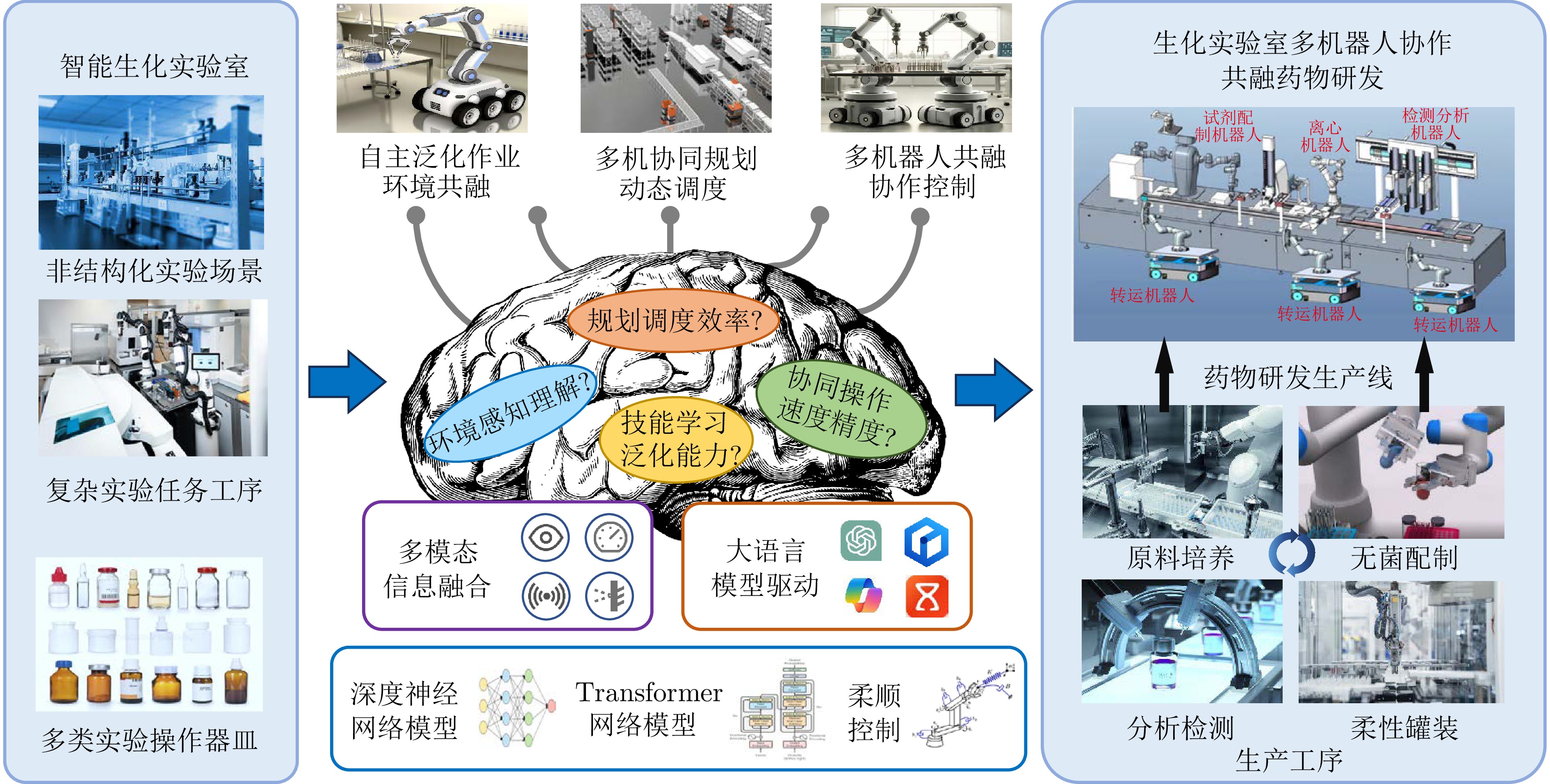

Abstract: Biopharmaceuticals play a crucial role in safeguarding public welfare and national security, and accelerating the deep integration of robotics, artificial intelligence, and biomedicine is important for improving the efficiency of new drug development and responding to public health crises. In biochemical laboratories, new drug preparation processes are becoming increasingly complex, and robots play a vital role in key operations such as high-precision liquid handling, sample analysis, and experiment automation. However, existing robotic technologies remain limited in environmental perception, collaborative operation, and dynamic adaptability. In recent years, rapid advances in deep learning, cross-modal perception, and large models have broadened the prospects for deploying robots in complex biochemical laboratory settings. Starting from the specific requirements of intelligent biochemical laboratories, this paper reviews the latest progress in key robotic technologies, namely environmental perception, task and motion planning, and collaborative control. It then presents application cases of intelligent biochemical laboratories in China and abroad and analyzes in depth the current state of robotics in laboratory environments. Finally, it summarizes the technological trends and open challenges of intelligent biochemical laboratories to inform future research.


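Table 1 Terminology explanation

| Term | Explanation |
|---|---|
| Reinforcement learning | A machine learning approach that learns policies through trial and error |
| Deep learning | Techniques that use neural networks to extract features from data |
| Large model | A complex neural network model trained on large-scale data |
| Robotic system | An intelligent automated system that performs tasks |
| Environmental perception | Techniques for acquiring and understanding information about the surrounding environment |
| Task planning | Techniques for determining how tasks are allocated to robots and in what order they are executed |
| Motion planning | Techniques for designing robot paths and motions |
| Interaction control | Techniques for adjusting robot motions to adapt to interactions |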

Table 2 Comparison of multimodal fusion algorithms

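| Fusion method | Fusion stage | Advantages | Disadvantages |
|---|---|---|---|
| Early fusion | Data input | High information utilization and computational efficiency; suited to real-time perception tasks | Sensitive to noise; depends on data quality |
| Feature fusion | Intermediate model layers | Strong semantic correlation and rich feature representation; suited to semantic understanding tasks | High computational complexity; requires large-scale annotated data |
| Late fusion | Decision | High flexibility and robustness; suited to perception tasks that fuse heterogeneous information from different modalities | Difficult to fully exploit low-level information |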
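To make the three fusion stages in Table 2 concrete, the following is a minimal Python sketch. The "models" are toy callables standing in for trained networks, and the camera/force-torque modality pair and all function names are assumptions for illustration, not taken from any surveyed method. The only difference between the three functions is where the two modalities meet: at the raw input, at intermediate features, or at the final scores.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Model = Callable[[Vector], float]      # toy stand-in: feature vector -> score
Encoder = Callable[[Vector], Vector]   # toy stand-in: raw input -> features


def early_fusion(rgb: Sequence[float], force: Sequence[float], head: Model) -> float:
    """Data-level fusion: concatenate raw inputs and feed ONE model."""
    return head(list(rgb) + list(force))


def feature_fusion(rgb: Sequence[float], force: Sequence[float],
                   enc_rgb: Encoder, enc_force: Encoder, head: Model) -> float:
    """Feature-level fusion: encode each modality separately, then
    concatenate the intermediate features before a shared head."""
    return head(enc_rgb(list(rgb)) + enc_force(list(force)))


def late_fusion(rgb: Sequence[float], force: Sequence[float],
                model_rgb: Model, model_force: Model,
                trust: Tuple[float, float] = (0.5, 0.5)) -> float:
    """Decision-level fusion: each modality has its own model and only the
    final scores are combined, here by a trust-weighted average."""
    s_rgb = model_rgb(list(rgb))
    s_force = model_force(list(force))
    return (trust[0] * s_rgb + trust[1] * s_force) / (trust[0] + trust[1])


if __name__ == "__main__":
    rgb, force = [1.0, 2.0], [3.0]  # hypothetical camera and force/torque features
    print(early_fusion(rgb, force, head=sum))
    print(feature_fusion(rgb, force,
                         enc_rgb=lambda x: [x[0] + x[1]],
                         enc_force=lambda x: [2.0 * x[0]],
                         head=sum))
    print(late_fusion(rgb, force, model_rgb=sum,
                      model_force=lambda x: 2.0 * x[0]))
```

In late fusion the per-modality trust weights can be changed without retraining either model, which reflects the flexibility noted in Table 2; the cost is that the final combiner never sees low-level cross-modal correlations.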

Table 3 Representative large models

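| Model | Developer | Year | Parameters | Training data scale | Pre-training task | Application |
|---|---|---|---|---|---|---|
| CLIP[2] | OpenAI | 2021 | N/A | 400 million image-text pairs | Image-text alignment | Image-text retrieval |
| DALL-E[49] | OpenAI | 2021 | 12 billion | 250 million image-text pairs | Image generation | Text-to-image generation |
| Flamingo[62] | DeepMind | 2022 | 3 billion, 9 billion, and 80 billion variants | N/A | Image-text alignment | Visual question answering |
| Point-BERT[50] | Tsinghua University | 2022 | N/A | 50,000 point cloud models | Masked point modeling | 3D point cloud classification |
| GPT-4V(ision)[47] | OpenAI | 2023 | N/A | N/A | Image-text alignment | Image caption generation |
| Qwen-VL[48] | Alibaba | 2023 | 9.6 billion | 5 billion image-text pairs | Image-text alignment | Image content understanding |
| SAM[61] | Meta AI | 2023 | 632 million | 11 million images with 1.1 billion masks | Image segmentation | General-purpose segmentation |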

Table 4 Perception algorithm applications in biochemical laboratories

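Table 5 Comparison of task planning algorithms

| Planning method | Advantages | Disadvantages |
|---|---|---|
| Predefined methods | Stable and easy to implement | Struggle to cope with environmental changes |
| Reinforcement-learning-based methods | Highly adaptive | Long training time; dependent on data |
| Large-model-based methods | Flexible and highly adaptive | Planning results are less stable |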
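As a minimal sketch of the "predefined methods" row in Table 5, the Python code below encodes a few hypothetical pipetting operators in STRIPS-style precondition/add/delete form (the action model underlying PDDL) and finds a plan by breadth-first forward search. The domain, the fact names, and the helpers `op` and `plan` are illustrative assumptions, not an implementation from the surveyed works.

```python
from collections import deque
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Optional, Sequence


@dataclass(frozen=True)
class Action:
    """A hand-written STRIPS-style operator (hypothetical lab action)."""
    name: str
    pre: FrozenSet[str]     # facts that must hold before execution
    add: FrozenSet[str]     # facts made true by the action
    delete: FrozenSet[str]  # facts made false by the action


def op(name: str, pre=(), add=(), delete=()) -> Action:
    """Convenience constructor for Action."""
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def plan(init: Iterable[str], goal: Iterable[str],
         actions: Sequence[Action]) -> Optional[List[str]]:
    """Breadth-first forward search: returns a shortest action sequence
    reaching the goal, or None if the goal is unreachable."""
    start, target = frozenset(init), frozenset(goal)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, path = frontier.popleft()
        if target <= state:            # every goal fact holds
            return path
        for a in actions:
            if a.pre <= state:         # action is applicable here
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, path + [a.name]))
    return None


LAB_ACTIONS = [
    op("attach_tip", pre={"no_tip"}, add={"tip_on"}, delete={"no_tip"}),
    op("aspirate", pre={"tip_on"}, add={"liquid_in_tip"}),
    op("open_lid", pre={"lid_closed"}, add={"lid_open"}, delete={"lid_closed"}),
    op("dispense", pre={"liquid_in_tip", "lid_open"},
       add={"sample_in_tube"}, delete={"liquid_in_tip"}),
    op("close_lid", pre={"lid_open"}, add={"lid_closed"}, delete={"lid_open"}),
]

if __name__ == "__main__":
    print(plan({"no_tip", "lid_closed"}, {"sample_in_tube", "lid_closed"}, LAB_ACTIONS))
```

Because the plan is derived entirely from the hand-written operators, it is repeatable, but the planner cannot handle a state the model does not describe: started from `{"no_tip"}` alone, with no lid fact, `plan` returns `None`, which mirrors the limitation listed in the table.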

Table 6 Application of LLM-based task planning algorithms in biochemical laboratories

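Table 7 Comparison of centralized control and distributed control

| Aspect | Centralized control | Distributed control |
|---|---|---|
| Control structure | Unified command by a central controller | Each robot makes decisions autonomously |
| Information sharing | All information is processed centrally | Information is shared through local communication |
| Coordination efficiency | Highly coordinated, but dependent on the central controller | Flexible coordination, dependent on local communication |
| System complexity | Complex control algorithms that require central coordination | Relatively simple, with control distributed across the robots |
| Typical application scenarios | Well-defined operations that require tight coordination | Operations in complex environments with changing tasks |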
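Table 7 contrasts processing all information centrally with sharing it through local communication. The minimal Python sketch below illustrates that difference using the classic linear average-consensus update as a stand-in for distributed coordination (an assumed textbook example, not a scheme from the surveyed works): a central controller simply averages every robot's value, whereas in the distributed version each robot repeatedly moves its value toward those of its communication neighbors. The four-robot line topology, the step size `eps`, and the readings are hypothetical.

```python
from typing import Dict, List


def centralized_average(values: List[float]) -> float:
    """Centralized: one controller collects every robot's value."""
    return sum(values) / len(values)


def consensus_step(values: List[float], neighbors: Dict[int, List[int]],
                   eps: float) -> List[float]:
    """One synchronous round of linear average consensus.

    Robot i reads only its own value and those of its neighbors:
    x_i <- x_i + eps * sum_{j in N(i)} (x_j - x_i).
    """
    return [x + eps * sum(values[j] - x for j in neighbors[i])
            for i, x in enumerate(values)]


def distributed_average(values: List[float], neighbors: Dict[int, List[int]],
                        eps: float = 0.3, rounds: int = 200) -> List[float]:
    """Distributed: repeat local exchanges. On a connected undirected graph
    with 0 < eps < 1 / max_degree, every x_i converges to the global mean."""
    x = list(values)
    for _ in range(rounds):
        x = consensus_step(x, neighbors, eps)
    return x


if __name__ == "__main__":
    # Hypothetical: four robots in a line 0-1-2-3, each talking only to adjacent robots.
    line = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
    readings = [0.0, 4.0, 8.0, 12.0]  # e.g. local estimates of a shared quantity
    print(centralized_average(readings))
    print(distributed_average(readings, line))
```

The distributed version needs many rounds and a connected communication graph, but no single robot has to see all the data or becomes a single point of failure, which matches the trade-off summarized in the table.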

[1] Wang Wen-Sheng, Tan Ning, Huang Kai, Zhang Yu-Nong, Zheng Wei-Shi, Sun Fu-Chun. Embodied intelligence systems based on large models: A survey. Acta Automatica Sinica, 2025, 51(1): 1−19

[2] Radford A, Kim J W, Hallacy C, Ramesh A, Goh G, Agarwal S, et al. Learning transferable visual models from natural language supervision. In: Proceedings of the 38th International Conference on Machine Learning. Virtual Event: PMLR, 2021. 8748−8763

[3] Lu Jing-Wei, Guo Chao, Dai Xing-Yuan, Miao Qing-Hai, Wang Xing-Xia, Yang Jing, et al. The ChatGPT after: Opportunities and challenges of very large scale pre-trained models. Acta Automatica Sinica, 2023, 49(4): 705−717

[4] Wu T Y, He S Z, Liu J P, Sun S Q, Liu K, Han Q L, et al. A brief overview of ChatGPT: The history, status quo and potential future development. IEEE/CAA Journal of Automatica Sinica, 2023, 10(5): 1122−1136 doi: 10.1109/JAS.2023.123618

[5] Bai J R, Cao L W, Mosbach S, Akroyd J, Lapkin A A, Kraft M. From platform to knowledge graph: Evolution of laboratory automation. JACS Au, 2022, 2(2): 292−309 doi: 10.1021/jacsau.1c00438

[6] Bogue R. Robots in the laboratory: A review of applications. Industrial Robot: An International Journal, 2012, 39(2): 113−119 doi: 10.1108/01439911211203382

[7] Burger B, Maffettone P M, Gusev V V, Aitchison C M, Bai Y, Wang X Y, et al. A mobile robotic chemist. Nature, 2020, 583(7815): 237−241 doi: 10.1038/s41586-020-2442-2

[8] Shen Y L, Song K T, Tan X, Li D S, Lu W M, Zhuang Y T. HuggingGPT: Solving AI tasks with ChatGPT and its friends in Hugging Face. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. 38154−38180

[9] Bran A M, Cox S, Schilter O, Baldassari C, White A D, Schwaller P. Augmenting large language models with chemistry tools. Nature Machine Intelligence, 2024, 6(5): 525−535 doi: 10.1038/s42256-024-00832-8

[10] O'Neill S. AI-driven robotic laboratories show promise. Engineering, 2021, 7(10): 1351−1353 doi: 10.1016/j.eng.2021.08.006

[11] Firoozi R, Tucker J, Tian S, Majumdar A, Sun J K, Liu W Y, et al. Foundation models in robotics: Applications, challenges, and the future. The International Journal of Robotics Research, 2025, 44(5): 701−739 doi: 10.1177/02783649241281508

[12] Chen W L, Zhang H, Jiang Y M, Chen Y R, Chen B, Huang C Q. An iterative attention fusion network for 6D object pose estimation. In: Proceedings of China Automation Congress. Chongqing, China: IEEE, 2023. 9300−9305

[13] Ullman S. Three-dimensional object recognition based on the combination of views. Cognition, 1998, 67(1−2): 21−44 doi: 10.1016/S0010-0277(98)00013-4

[14] Lowe D G. Object recognition from local scale-invariant features. In: Proceedings of the 7th IEEE International Conference on Computer Vision. Kerkyra, Greece: IEEE, 1999. 1150−1157

[15] Fischler M A, Bolles R C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM, 1981, 24(6): 381−395 doi: 10.1145/358669.358692

[16] Lepetit V, Fua P. Monocular model-based 3D tracking of rigid objects: A survey. Foundations and Trends® in Computer Graphics and Vision, 2005, 1(1): 1−89

[17] Yang Xu-Sheng, Wang Shuai-Yang, Zhang Wen-An, Qiu Xiang. Research on pose estimation method of medicine box based on secondary key point matching. Journal of Computer-Aided Design & Computer Graphics, 2022, 34(4): 570−580

[18] Olson C F, Huttenlocher D P. Automatic target recognition by matching oriented edge pixels. IEEE Transactions on Image Processing, 1997, 6(1): 103−113 doi: 10.1109/83.552100

[19] Hinterstoisser S, Cagniart C, Ilic S, Sturm P, Navab N, Fua P, et al. Gradient response maps for real-time detection of textureless objects. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012, 34(5): 876−888 doi: 10.1109/TPAMI.2011.206

[20] Liu J, Sun W, Yang H, Liu C P, Zhang X, Mian A. Domain-generalized robotic picking via contrastive learning-based 6-D pose estimation. IEEE Transactions on Industrial Informatics, 2024, 20(6): 8650−8661 doi: 10.1109/TII.2024.3366248

[21] Xiang Y, Schmidt T, Narayanan V, Fox D. PoseCNN: A convolutional neural network for 6D object pose estimation in cluttered scenes. In: Proceedings of the 14th Robotics: Science and Systems. Pittsburgh, USA, 2018.

[22] Liu J, Sun W, Liu C P, Zhang X, Fan S M, Wu W. HFF6D: Hierarchical feature fusion network for robust 6D object pose tracking. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(11): 7719−7731 doi: 10.1109/TCSVT.2022.3181597

[23] Wang H, Sridhar S, Huang J W, Valentin J, Song S R, Guibas L J. Normalized object coordinate space for category-level 6D object pose and size estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2637−2646

[24] Wang C, Xu D F, Zhu Y K, Martín-Martín R, Lu C W, Fei-Fei L, et al. DenseFusion: 6D object pose estimation by iterative dense fusion. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 3338−3347

[25] Le-Khac P H, Healy G, Smeaton A F. Contrastive representation learning: A framework and review. IEEE Access, 2020, 8: 193907−193934 doi: 10.1109/ACCESS.2020.3031549

[26] Shorten C, Khoshgoftaar T M. A survey on image data augmentation for deep learning. Journal of Big Data, 2019, 6(1): Article No. 60 doi: 10.1186/s40537-019-0197-0

[27] Ren Y, Chen R H, Cong Y. Autonomous manipulation learning for similar deformable objects via only one demonstration. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Vancouver, Canada: IEEE, 2023. 17069−17078

[28] Zhao Yi-Ming, Li Yan-Hua, Shang Ya-Nan, Li Jing, Yu Yong, Li Liang-Hai. Application and development direction of Lidar. Journal of Telemetry, Tracking and Command, 2014, 35(5): 4−22

[29] Opromolla R, Fasano G, Rufino G, Grassi M. Uncooperative pose estimation with a LIDAR-based system. Acta Astronautica, 2015, 110: 287−297 doi: 10.1016/j.actaastro.2014.11.003

[30] Pramatarov G, Gadd M, Newman P, De Martini D. That's my point: Compact object-centric LiDAR pose estimation for large-scale outdoor localisation. In: Proceedings of the IEEE International Conference on Robotics and Automation. Yokohama, Japan: IEEE, 2024. 12276−12282

[31] Pensado E A, de Santos L M G, Jorge H G, Sanjurjo-Rivo M. Deep learning-based target pose estimation using LiDAR measurements in active debris removal operations. IEEE Transactions on Aerospace and Electronic Systems, 2023, 59(5): 5658−5670

[32] Bimbo J, Luo S, Althoefer K, Liu H B. In-hand object pose estimation using covariance-based tactile to geometry matching. IEEE Robotics and Automation Letters, 2016, 1(1): 570−577 doi: 10.1109/LRA.2016.2517244

[33] Sodhi P, Kaess M, Mukadam M, Anderson S. Learning tactile models for factor graph-based estimation. In: Proceedings of the IEEE International Conference on Robotics and Automation. Xi'an, China: IEEE, 2021. 13686−13692

[34] Lin Q G, Yan C J, Li Q, Ling Y G, Zheng Y, Lee W W, et al. Tactile-based object pose estimation employing extended Kalman filter. In: Proceedings of the IEEE International Conference on Advanced Robotics and Mechatronics. Sanya, China: IEEE, 2023. 118−123

[35] Rosinol A, Violette A, Abate M, Hughes N, Chang Y, Shi J N, et al. Kimera: From SLAM to spatial perception with 3D dynamic scene graphs. The International Journal of Robotics Research, 2021, 40(12−14): 1510−1546 doi: 10.1177/02783649211056674

[36] Zhang Y F, Sidibé D, Morel O, Mériaudeau F. Deep multimodal fusion for semantic image segmentation: A survey. Image and Vision Computing, 2021, 105: Article No. 104042 doi: 10.1016/j.imavis.2020.104042

[37] Alaba S Y, Gurbuz A C, Ball J E. Emerging trends in autonomous vehicle perception: Multimodal fusion for 3D object detection. World Electric Vehicle Journal, 2024, 15(1): Article No. 20 doi: 10.3390/wevj15010020

[38] Zhou Y J, Mei G F, Wang Y M, Poiesi F, Wan Y. Attentive multimodal fusion for optical and scene flow. IEEE Robotics and Automation Letters, 2023, 8(10): 6091−6098 doi: 10.1109/LRA.2023.3300252

[39] Zhai M L, Xiang X Z, Lv N, Kong X D. Optical flow and scene flow estimation: A survey. Pattern Recognition, 2021, 114: Article No. 107861 doi: 10.1016/j.patcog.2021.107861

[40] Ngiam J, Khosla A, Kim M, Nam J, Lee H, Ng A Y. Multimodal deep learning. In: Proceedings of the 28th International Conference on Machine Learning. Bellevue, USA: Omnipress, 2011. 689−696

[41] Pan J Y, Wang Z P, Wang L. Co-Occ: Coupling explicit feature fusion with volume rendering regularization for multi-modal 3D semantic occupancy prediction. IEEE Robotics and Automation Letters, 2024, 9(6): 5687−5694 doi: 10.1109/LRA.2024.3396092

[42] Qian K, Zhu S L, Zhang X Y, Li L E. Robust multimodal vehicle detection in foggy weather using complementary Lidar and radar signals. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Nashville, USA: IEEE, 2021. 444−453

[43] Sargin M E, Yemez Y, Erzin E, Tekalp A M. Audiovisual synchronization and fusion using canonical correlation analysis. IEEE Transactions on Multimedia, 2007, 9(7): 1396−1403 doi: 10.1109/TMM.2007.906583

[44] Inceoglu A, Aksoy E E, Ak A C, Sariel S. FINO-Net: A deep multimodal sensor fusion framework for manipulation failure detection. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems. Prague, Czech Republic: IEEE, 2021. 6841−6847

[45] Zhang H, Liang Z C, Li C, Zhong H, Liu L, Zhao C Y, et al. A practical robotic grasping method by using 6-D pose estimation with protective correction. IEEE Transactions on Industrial Electronics, 2022, 69(4): 3876−3886 doi: 10.1109/TIE.2021.3075836

[46] Brown T B, Mann B, Ryder N, Subbiah M, Kaplan J, Dhariwal P, et al. Language models are few-shot learners. In: Proceedings of the 34th International Conference on Neural Information Processing Systems. Vancouver, Canada: Curran Associates Inc., 2020. Article No. 159

[47] Achiam J, Adler S, Agarwal S, Ahmad L, Akkaya I, Aleman F L, et al. GPT-4 technical report. arXiv preprint arXiv: 2303.08774, 2023.

[48] Bai J Z, Bai S, Yang S S, Wang S J, Tan S N, Wang P, et al. Qwen-VL: A versatile vision-language model for understanding, localization, text reading, and beyond. arXiv preprint arXiv: 2308.12966, 2023.

[49] Ramesh A, Pavlov M, Goh G, Gray S, Voss C, Radford A, et al. Zero-shot text-to-image generation. In: Proceedings of the 38th International Conference on Machine Learning. Virtual Event: PMLR, 2021. 8821−8831

[50] Yu X M, Tang L L, Rao Y M, Huang T J, Zhou J, Lu J W. Point-BERT: Pre-training 3D point cloud transformers with masked point modeling. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. New Orleans, USA: IEEE, 2022. 19291−19300

[51] Gu X Y, Lin T Y, Kuo W C, Cui Y. Open-vocabulary object detection via vision and language knowledge distillation. In: Proceedings of the 10th International Conference on Learning Representations. ICLR, 2022.

[52] Zhang R R, Guo Z Y, Zhang W, Li K C, Miao X P, Cui B, et al. PointCLIP: Point cloud understanding by CLIP. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. New Orleans, USA: IEEE, 2022. 8542−8552

[53] Cao Y, Zeng Y H, Xu H, Xu D. CoDA: Collaborative novel box discovery and cross-modal alignment for open-vocabulary 3D object detection. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 3145

[54] Liu M H, Shi R X, Kuang K M, Zhu Y H, Li X L, Han S Z, et al. OpenShape: Scaling up 3D shape representation towards open-world understanding. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 1944

[55] Takmaz A, Fedele E, Sumner R W, Pollefeys M, Tombari F, Engelmann F. OpenMask3D: Open-vocabulary 3D instance segmentation. In: Proceedings of the 37th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2023. Article No. 2989

[56] Lu S Y, Chang H N, Jing E P, Boularias A, Bekris K. OVIR-3D: Open-vocabulary 3D instance retrieval without training on 3D data. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023. 1610−1620

[57] Nguyen P, Ngo T D, Kalogerakis E, Gan C, Tran A, Pham C, et al. Open3DIS: Open-vocabulary 3D instance segmentation with 2D mask guidance. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, USA: IEEE, 2024. 4018−4028

[58] Mildenhall B, Srinivasan P P, Tancik M, Barron J T, Ramamoorthi R, Ng R. NeRF: Representing scenes as neural radiance fields for view synthesis. Communications of the ACM, 2021, 65(1): 99−106

[59] Kerr J, Kim C M, Goldberg K, Kanazawa A, Tancik M. LERF: Language embedded radiance fields. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Paris, France: IEEE, 2023. 19672−19682

[60] Shen Q H, Yang X Y, Wang X C. Anything-3D: Towards single-view anything reconstruction in the wild. arXiv preprint arXiv: 2304.10261, 2023.

[61] Kirillov A, Mintun E, Ravi N, Mao H Z, Rolland C, Gustafson L, et al. Segment anything. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Paris, France: IEEE, 2023. 3992−4003

[62] Alayrac J B, Donahue J, Luc P, Miech A, Barr I, Hasson Y, et al. Flamingo: A visual language model for few-shot learning. In: Proceedings of the 36th International Conference on Neural Information Processing Systems. New Orleans, USA: Curran Associates Inc., 2022. Article No. 1723

[63] Zhang Y, Pan Y W, Yao T, Huang R, Mei T, Chen C W. Learning to generate language-supervised and open-vocabulary scene graph using pre-trained visual-semantic space. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Vancouver, Canada: IEEE, 2023. 2915−2924

[64] Koch S, Hermosilla P, Vaskevicius N, Colosi M, Ropinski T. Lang3DSG: Language-based contrastive pre-training for 3D Scene Graph prediction. In: Proceedings of the International Conference on 3D Vision. Davos, Switzerland: IEEE, 2024. 1037−1047

[65] Chen L G X, Wang X J, Lu J L, Lin S H, Wang C B, He G Q. CLIP-driven open-vocabulary 3D scene graph generation via cross-modality contrastive learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, USA: IEEE, 2024. 27863−27873

[66] Gu Q, Kuwajerwala A, Morin S, Jatavallabhula K M, Sen B, Agarwal A, et al. ConceptGraphs: Open-vocabulary 3D scene graphs for perception and planning. In: Proceedings of IEEE International Conference on Robotics and Automation. Yokohama, Japan: IEEE, 2024. 5021−5028

[67] Sajjan S, Moore M, Pan M, Nagaraja G, Lee J, Zeng A, et al. Clear grasp: 3D shape estimation of transparent objects for manipulation. In: Proceedings of the IEEE International Conference on Robotics and Automation. Paris, France: IEEE, 2020. 3634−3642

[68] Eppel S, Xu H P, Bismuth M, Aspuru-Guzik A. Computer vision for recognition of materials and vessels in chemistry lab settings and the Vector-LabPics data set. ACS Central Science, 2020, 6(10): 1743−1752 doi: 10.1021/acscentsci.0c00460

[69] Hatakeyama-Sato K, Ishikawa H, Takaishi S, Igarashi Y, Nabae Y, Hayakawa T. Semiautomated experiment with a robotic system and data generation by foundation models for synthesis of polyamic acid particles. Polymer Journal, 2024, 56(11): 977−986 doi: 10.1038/s41428-024-00930-9

[70] El-Khawaldeh R, Guy M, Bork F, Taherimakhsousi N, Jones K N, Hawkins J M, et al. Keeping an “eye” on the experiment: Computer vision for real-time monitoring and control. Chemical Science, 2024, 15(4): 1271−1282 doi: 10.1039/D3SC05491H

[71] Zepel T, Lai V, Yunker L P E, Hein J E. Automated liquid-level monitoring and control using computer vision. ChemRxiv, to be published. doi: 10.26434/chemrxiv.12798143.v1

[72] Darvish K, Skreta M, Zhao Y C, Yoshikawa N, Som S, Bogdanovic M, et al. ORGANA: A robotic assistant for automated chemistry experimentation and characterization. Matter, 2025, 8(2): Article No. 101897 doi: 10.1016/j.matt.2024.10.015

[73] Helmert M. Concise finite-domain representations for PDDL planning tasks. Artificial Intelligence, 2009, 173(5−6): 503−535 doi: 10.1016/j.artint.2008.10.013

[74] Kovacs D L. A multi-agent extension of PDDL3.1. In: Proceedings of the 3rd Workshop on the International Planning Competition. Atibaia, Brazil: ICAPS, 2012. 19−27

[75] Buehler J, Pagnucco M. A framework for task planning in heterogeneous multi robot systems based on robot capabilities. In: Proceedings of the 28th AAAI Conference on Artificial Intelligence. Quebec City, Canada: AAAI, 2014. 2527−2533

[76] Yu P, Dimarogonas D V. Distributed motion coordination for multirobot systems under LTL specifications. IEEE Transactions on Robotics, 2022, 38(2): 1047−1062 doi: 10.1109/TRO.2021.3088764

[77] Luo X S, Zavlanos M M. Temporal logic task allocation in heterogeneous multirobot systems. IEEE Transactions on Robotics, 2022, 38(6): 3602−3621 doi: 10.1109/TRO.2022.3181948

[78] Luo X S, Xu S J, Liu R X, Liu C L. Decomposition-based hierarchical task allocation and planning for multi-robots under hierarchical temporal logic specifications. IEEE Robotics and Automation Letters, 2024, 9(8): 7182−7189 doi: 10.1109/LRA.2024.3412589

[79] Sun Chang-Yin, Mu Chao-Xu. Important scientific problems of multi-agent deep reinforcement learning. Acta Automatica Sinica, 2020, 46(7): 1301−1312

[80] Zhang K Q, Yang Z R, Başar T. Multi-agent reinforcement learning: A selective overview of theories and algorithms. In: Handbook of Reinforcement Learning and Control. Cham: Springer, 2021. 321−384

[81] Son K, Kim D, Kang W J, Hostallero D E, Yi Y. QTRAN: Learning to factorize with transformation for cooperative multi-agent reinforcement learning. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 5887−5896

[82] Chen G. A new framework for multi-agent reinforcement learning–centralized training and exploration with decentralized execution via policy distillation. In: Proceedings of the 19th International Conference on Autonomous Agents and Multiagent Systems. Auckland, New Zealand: International Foundation for Autonomous Agents and Multiagent Systems, 2020. 1801−1803

[83] Li S, Gupta J K, Morales P, Allen R, Kochenderfer M J. Deep implicit coordination graphs for multi-agent reinforcement learning. In: Proceedings of the 20th International Conference on Autonomous Agents and Multiagent Systems. Virtual Event: International Foundation for Autonomous Agents and Multiagent Systems, 2021. 764−772

[84] Gu H T, Guo X, Wei X L, Xu R Y. Mean-field multiagent reinforcement learning: A decentralized network approach. Mathematics of Operations Research, 2025, 50(1): 506−536 doi: 10.1287/moor.2022.0055

[85] Du W, Ding S F. A survey on multi-agent deep reinforcement learning: From the perspective of challenges and applications. Artificial Intelligence Review, 2021, 54(5): 3215−3238 doi: 10.1007/s10462-020-09938-y

[86] Lanctot M, Zambaldi V, Gruslys A, Lazaridou A, Tuyls K, Pérolat J, et al. A unified game-theoretic approach to multiagent reinforcement learning. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: Curran Associates Inc., 2017. 4193−4206

[87] Gleave A, Dennis M, Wild C, Kant N, Levine S, Russell S. Adversarial policies: Attacking deep reinforcement learning. In: Proceedings of the 8th International Conference on Learning Representations. Addis Ababa, Ethiopia: ICLR, 2020. 1−16

[88] Kannan S S, Venkatesh V L N, Min B C. SMART-LLM: Smart multi-agent robot task planning using large language models. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems. Abu Dhabi, United Arab Emirates: IEEE, 2024. 12140−12147

[89] Mandi Z, Jain S, Song S R. RoCo: Dialectic multi-robot collaboration with large language models. In: Proceedings of the IEEE International Conference on Robotics and Automation. Yokohama, Japan: IEEE, 2024. 286−299

[90] Wang J, He G C, Kantaros Y. Probabilistically correct language-based multi-robot planning using conformal prediction. IEEE Robotics and Automation Letters, 2025, 10(1): 160−167 doi: 10.1109/LRA.2024.3504233

[91] Wang S, Han M Z, Jiao Z Y, Zhang Z Y, Wu Y N, Zhu S C, et al. LLM^3: Large language model-based task and motion planning with motion failure reasoning. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems. Abu Dhabi, United Arab Emirates: IEEE, 2024. 12086−12092

[92] Bai D, Singh I, Traum D, Thomason J. TwoStep: Multi-agent task planning using classical planners and large language models. arXiv preprint arXiv: 2403.17246, 2025.

[93] Shirai K, Beltran-Hernandez C C, Hamaya M, Hashimoto A, Tanaka S, Kawaharazuka K, et al. Vision-language interpreter for robot task planning. In: Proceedings of the IEEE International Conference on Robotics and Automation. Yokohama, Japan: IEEE, 2024. 2051−2058

[94] Liang J, Huang W L, Xia F, Xu P, Hausman K, Ichter B, et al. Code as policies: Language model programs for embodied control. In: Proceedings of the IEEE International Conference on Robotics and Automation. London, UK: IEEE, 2023. 9493−9500

[95] Song C H, Sadler B M, Wu J M, Chao W L, Washington C, Su Y. LLM-planner: Few-shot grounded planning for embodied agents with large language models. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Paris, France: IEEE, 2023. 2986−2997

[96] Yoshikawa N, Skreta M, Darvish K, Arellano-Rubach S, Ji Z, Kristensen L B, et al. Large language models for chemistry robotics. Autonomous Robots, 2023, 47(8): 1057−1086 doi: 10.1007/s10514-023-10136-2

[97] Ye J J, Chen X T, Xu N, Zu C, Shao Z K, Liu S C, et al. A comprehensive capability analysis of GPT-3 and GPT-3.5 series models. arXiv preprint arXiv: 2303.10420, 2023.

[98] Song T, Luo M, Zhang X L, Chen L J, Huang Y, Cao J Q, et al. A multiagent-driven robotic AI chemist enabling autonomous chemical research on demand. Journal of the American Chemical Society, 2025, 147(15): 12534−12545 doi: 10.1021/jacs.4c17738

[99] Grattafiori A, Dubey A, Jauhri A, Pandey A, Kadian A, Al-Dahle A, et al. The Llama 3 herd of models. arXiv preprint arXiv: 2407.21783, 2024.

[100] Hart P E, Nilsson N J, Raphael B. A formal basis for the heuristic determination of minimum cost paths. IEEE Transactions on Systems Science and Cybernetics, 1968, 4(2): 100−107 doi: 10.1109/TSSC.1968.300136

[101] Lee D, Nahrendra I M A, Oh M, Yu B, Myung H. TRG-Planner: Traversal risk graph-based path planning in unstructured environments for safe and efficient navigation. IEEE Robotics and Automation Letters, 2025, 10(2): 1736−1743 doi: 10.1109/LRA.2024.3524912

[102] Sahin A, Bhattacharya S. Topo-geometrically distinct path computation using neighborhood-augmented graph, and its application to path planning for a tethered robot in 3-D. IEEE Transactions on Robotics, 2025, 41: 20−41 doi: 10.1109/TRO.2024.3492386

[103] LaValle S M. Rapidly-exploring random trees: A new tool for path planning. The Annual Research Report, 1998

[104] Kuffner J J, LaValle S M. RRT-connect: An efficient approach to single-query path planning. In: Proceedings of the IEEE International Conference on Robotics and Automation. San Francisco, USA: IEEE, 2000. 995−1001

[105] Rivière B, Lathrop J, Chung S J. Monte Carlo tree search with spectral expansion for planning with dynamical systems. Science Robotics, 2024, 9(97): Article No. eado1010 doi: 10.1126/scirobotics.ado1010

[106] Pomerleau D A. ALVINN: An autonomous land vehicle in a neural network. In: Proceedings of the 2nd International Conference on Neural Information Processing Systems. Cambridge, USA: MIT Press, 1988. 305−313

[107] Ross S, Gordon G, Bagnell D. A reduction of imitation learning and structured prediction to no-regret online learning. In: Proceedings of the 14th International Conference on Artificial Intelligence and Statistics. Fort Lauderdale, USA: JMLR Workshop and Conference Proceedings, 2011. 627−635

[108] Ng A Y, Russell S J. Algorithms for inverse reinforcement learning. In: Proceedings of the 17th International Conference on Machine Learning. San Francisco, USA: Morgan Kaufmann Publishers Inc., 2000. 663−670

[109] Ho J, Ermon S. Generative adversarial imitation learning. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: Curran Associates Inc., 2016. 4572−4580

[110] Ijspeert A J, Nakanishi J, Hoffmann H, Pastor P, Schaal S. Dynamical movement primitives: Learning attractor models for motor behaviors. Neural Computation, 2013, 25(2): 328−373 doi: 10.1162/NECO_a_00393

[111] Huang H F, Zeng C, Cheng L, Yang C G. Toward generalizable robotic dual-arm flipping manipulation. IEEE Transactions on Industrial Electronics, 2024, 71(5): 4954−4962 doi: 10.1109/TIE.2023.3288189

[112] Zhang H Y, Cheng L, Zhang Y. Learning robust point-to-point motions adversarially: A stochastic differential equation approach. IEEE Robotics and Automation Letters, 2023, 8(4): 2357−2364 doi: 10.1109/LRA.2023.3251190

[113] Brohan A, Brown N, Carbajal J, Chebotar Y, Dabis J, Finn C, et al. RT-1: Robotics transformer for real-world control at scale. In: Proceedings of the 19th Robotics: Science and Systems. Daegu, Republic of Korea, 2023.

[114] Zitkovich B, Yu T H, Xu S C, Xu P, Xiao T, Xia F, et al. RT-2: Vision-language-action models transfer web knowledge to robotic control. In: Proceedings of the 7th Conference on Robot Learning. Atlanta, USA: PMLR, 2023. 2165−2183

[115] Shridhar M, Manuelli L, Fox D. CLIPort: What and where pathways for robotic manipulation. In: Proceedings of the 5th Conference on Robot Learning. London, UK: PMLR, 2022. 894−906

[116] Bucker A, Figueredo L, Haddadin S, Kapoor A, Ma S, Vemprala S, et al. LATTE: LAnguage trajectory TransformEr. In: Proceedings of the IEEE International Conference on Robotics and Automation. London, UK: IEEE, 2023. 7287−7294

[117] Ren Y, Liu Y C, Jin M H, Liu H. Biomimetic object impedance control for dual-arm cooperative 7-DOF manipulators. Robotics and Autonomous Systems, 2016, 75: 273−287 doi: 10.1016/j.robot.2015.09.018

[118] Naseri Soufiani B, Adli M A. An expanded impedance control scheme for slosh-free liquid transfer by a dual-arm cooperative robot. Journal of Vibration and Control, 2021, 27(23−24): 2793−2806 doi: 10.1177/1077546320966208

[119] Nicolis D, Allevi F, Rocco P. Operational space model predictive sliding mode control for redundant manipulators. IEEE Transactions on Robotics, 2020, 36(4): 1348−1355 doi: 10.1109/TRO.2020.2974092

[120] Li De-Yun, Xu De-Gang, Gui Wei-Hua. Coordinated impedance control for dual-arm robots based on time delay estimation and adaptive fuzzy sliding mode controller. Control and Decision, 2021, 36(6): 1311−1323

[121] Bouyarmane K, Chappellet K, Vaillant J, Kheddar A. Quadratic programming for multirobot and task-space force control. IEEE Transactions on Robotics, 2019, 35(1): 64−77 doi: 10.1109/TRO.2018.2876782

[122] Djeha M, Gergondet P, Kheddar A. Robust task-space quadratic programming for kinematic-controlled robots. IEEE Transactions on Robotics, 2023, 39(5): 3857−3874 doi: 10.1109/TRO.2023.3286069

[123] Ren Y, Zhou Z H, Xu Z W, Yang Y, Zhai G Y, Leibold M, et al. Enabling versatility and dexterity of the dual-arm manipulators: A general framework toward universal cooperative manipulation. IEEE Transactions on Robotics, 2024, 40: 2024−2045 doi: 10.1109/TRO.2024.3370048

[124] Fan Y W, Li X G, Zhang K H, Qian C, Zhou F H, Li T F, et al. Learning robust skills for tightly coordinated arms in contact-rich tasks. IEEE Robotics and Automation Letters, 2024, 9(3): 2973−2980 doi: 10.1109/LRA.2024.3359542

[125] Lin Y J, Church A, Yang M, Li H R, Lloyd J, Zhang D D, et al. Bi-Touch: Bimanual tactile manipulation with sim-to-real deep reinforcement learning. IEEE Robotics and Automation Letters, 2023, 8(9): 5472−5479 doi: 10.1109/LRA.2023.3295991

[126] Luck K S, Ben Amor H. Extracting bimanual synergies with reinforcement learning. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems. Vancouver, Canada: IEEE, 2017. 4805−4812

[127] He Z P, Ciocarlie M. Discovering synergies for robot manipulation with multi-task reinforcement learning. In: Proceedings of the IEEE International Conference on Robotics and Automation. Philadelphia, USA: IEEE, 2022. 2714−2721

[128] Lin Y J, Huang J C, Zimmer M, Guan Y S, Rojas J, Weng P. Invariant transform experience replay: Data augmentation for deep reinforcement learning. IEEE Robotics and Automation Letters, 2020, 5(4): 6615−6622 doi: 10.1109/LRA.2020.3013937

[129] Culbertson P, Slotine J J, Schwager M. Decentralized adaptive control for collaborative manipulation of rigid bodies. IEEE Transactions on Robotics, 2021, 37(6): 1906−1920 doi: 10.1109/TRO.2021.3072021

[130] Li Xiao, Li Shi-Qi, Han Ke, Li Zhuo, Xiong You-Jun, Xie Zheng. Real-time self-collision avoidance-oriented torque control strategy for dual-arm robot. Information and Control, 2023, 52(2): 211−219

[131] Jing X, Roveda L, Li J F, Wang Y B, Gao H B. An adaptive impedance control for dual-arm manipulators incorporated with the virtual decomposition control. Journal of Vibration and Control, 2024, 30(11−12): 2647−2660 doi: 10.1177/10775463231182462

[132] Sewlia M, Verginis C K, Dimarogonas D V. Cooperative object manipulation under signal temporal logic tasks and uncertain dynamics. IEEE Robotics and Automation Letters, 2022, 7(4): 11561−11568 doi: 10.1109/LRA.2022.3200760

[133] Abbas M, Dwivedy S K. Adaptive control for networked uncertain cooperative dual-arm manipulators: An event-triggered approach. Robotica, 2022, 40(6): 1951−1978 doi: 10.1017/S0263574721001478

[134] Chen B, Zhang H, Zhang F F, Jiang Y M, Miao Z Q, Yu H N, et al. DIBNN: A dual-improved-BNN based algorithm for multi-robot cooperative area search in complex obstacle environments. IEEE Transactions on Automation Science and Engineering, 2025, 22: 2361−2374 doi: 10.1109/TASE.2024.3379166

[135] Zhang L, Sun Y F, Barth A, Ma O. Decentralized control of multi-robot system in cooperative object transportation using deep reinforcement learning. IEEE Access, 2020, 8: 184109−184119 doi: 10.1109/ACCESS.2020.3025287

[136] Liu L Y, Liu Q Y, Song Y, Pang B, Yuan X F, Xu Q Y. A collaborative control method of dual-arm robots based on deep reinforcement learning. Applied Sciences, 2021, 11(4): Article No. 1816 doi: 10.3390/app11041816

[137] Yu C, Cao J Y, Rosendo A. Learning to climb: Constrained contextual Bayesian optimisation on a multi-modal legged robot. IEEE Robotics and Automation Letters, 2022, 7(4): 9881−9888 doi: 10.1109/LRA.2022.3192798

[138] Fan Y W, Li X G, Zhang K H, Qian C, Zhou F H, Li T F, et al. Learning robust skills for tightly coordinated arms in contact-rich tasks. IEEE Robotics and Automation Letters, 2024, 9(3): 2973−2980
Learning robust skills for tightly coordinated arms in contact-rich tasks. IEEE Robotics and Automation Letters, 2024, 9(3): 2973−2980(查阅网上资料, 本条文献与第124条文献重复, 请核对) [139] Sun G, Cong Y, Dong J H, Liu Y Y, Ding Z M, Yu H B. What and how: Generalized lifelong spectral clustering via dual memory. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(7): 3895−3908 doi: 10.1109/TPAMI.2021.3058852 [140] Dong J H, Li H L, Cong Y, Sun G, Zhang Y L, Van Gool L. No one left behind: Real-world federated class-incremental learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(4): 2054−2070 doi: 10.1109/TPAMI.2023.3334213 [141] Zhu Q, Huang Y, Zhou D L, Zhao L Y, Guo L L, Yang R Y, et al. Automated synthesis of oxygen-producing catalysts from Martian meteorites by a robotic AI chemist. Nature Synthesis, 2024, 3(3): 319−328 [142] Chen Y R, Wang Y N, Zhang H. Prior image guided snapshot compressive spectral imaging. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(9): 11096−11107 doi: 10.1109/TPAMI.2023.3265749 [143] Chen Y R, Zhang H, Wang Y N, Yang Y M, Wu J. Flex-DLD: Deep low-rank decomposition model with flexible priors for hyperspectral image denoising and restoration. IEEE Transactions on Image Processing, 2024, 33: 1211−1226 doi: 10.1109/TIP.2024.3360902 [144] Yin A T, Wang Y N, Chen Y R, Zeng K, Zhang H, Mao J X. SSAPN: Spectral–spatial anomaly perception network for unsupervised vaccine detection. IEEE Transactions on Industrial Informatics, 2023, 19(4): 6081−6092 doi: 10.1109/TII.2022.3195168 [145] Hao K R. IBM has built a new drug-making lab entirely in the cloud [Online], available: https://www.technologyreview.com/2020/08/28/1007737/ibm-ai-robot-drug-making-lab-in-the-cloud/, July 10, 2024. [146] Probst D, Manica M, Nana Teukam Y G, Castrogiovanni A, Paratore F, Laino T. Biocatalysed synthesis planning using data-driven learning. Nature Communications, 2022, 13(1): Article No. 
964 doi: 10.1038/s41467-022-28536-w [147] Ross J, Belgodere B, Chenthamarakshan V, Padhi I, Mroueh Y, Das P. Large-scale chemical language representations capture molecular structure and properties. Nature Machine Intelligence, 2022, 4(12): 1256−1264 doi: 10.1038/s42256-022-00580-7 [148] XtalPi, ChemArt.TM [Online], available: https://www.xtalpi.com/chemical-synthesis-services, July 10, 2024. [149] Kamya P, Ozerov I V, Pun F W, Tretina K, Fokina T, Chen S, et al. PandaOmics: An AI-driven platform for therapeutic target and biomarker discovery. Journal of Chemical Information and Modeling, 2024, 64(10): 3961−3969 doi: 10.1021/acs.jcim.3c01619 [150] Lim C M, González Díaz A, Fuxreiter M, Pun F W, Zhavoronkov A, Vendruscolo M. Multiomic prediction of therapeutic targets for human diseases associated with protein phase separation. Proceedings of the National Academy of Sciences of the United States of America, 2023, 120(40): Article No. e2300215120 [151] Kadurin A, Nikolenko S, Khrabrov K, Aliper A, Zhavoronkov A. druGAN: An advanced generative adversarial autoencoder model for de novo generation of new molecules with desired molecular properties in silico. Molecular Pharmaceutics, 2017, 14(9): 3098−3104 doi: 10.1021/acs.molpharmaceut.7b00346 [152] Putin E, Asadulaev A, Ivanenkov Y, Aladinskiy V, Sanchez-Lengeling B, Aspuru-Guzik A, et al. Reinforced adversarial neural computer for de Novo molecular design. Journal of Chemical Information and Modeling, 2018, 58(6): 1194−1204 doi: 10.1021/acs.jcim.7b00690 [153] Aliper A, Kudrin R, Polykovskiy D, Kamya P, Tutubalina E, Chen S, et al. Prediction of clinical trials outcomes based on target choice and clinical trial design with multi-modal artificial intelligence. Clinical Pharmacology & Therapeutics, 2023, 114(5): 972−980 -
