A Feature Pyramid Optical Flow Estimation Method Based on Multi-scale Deformable Convolution

-

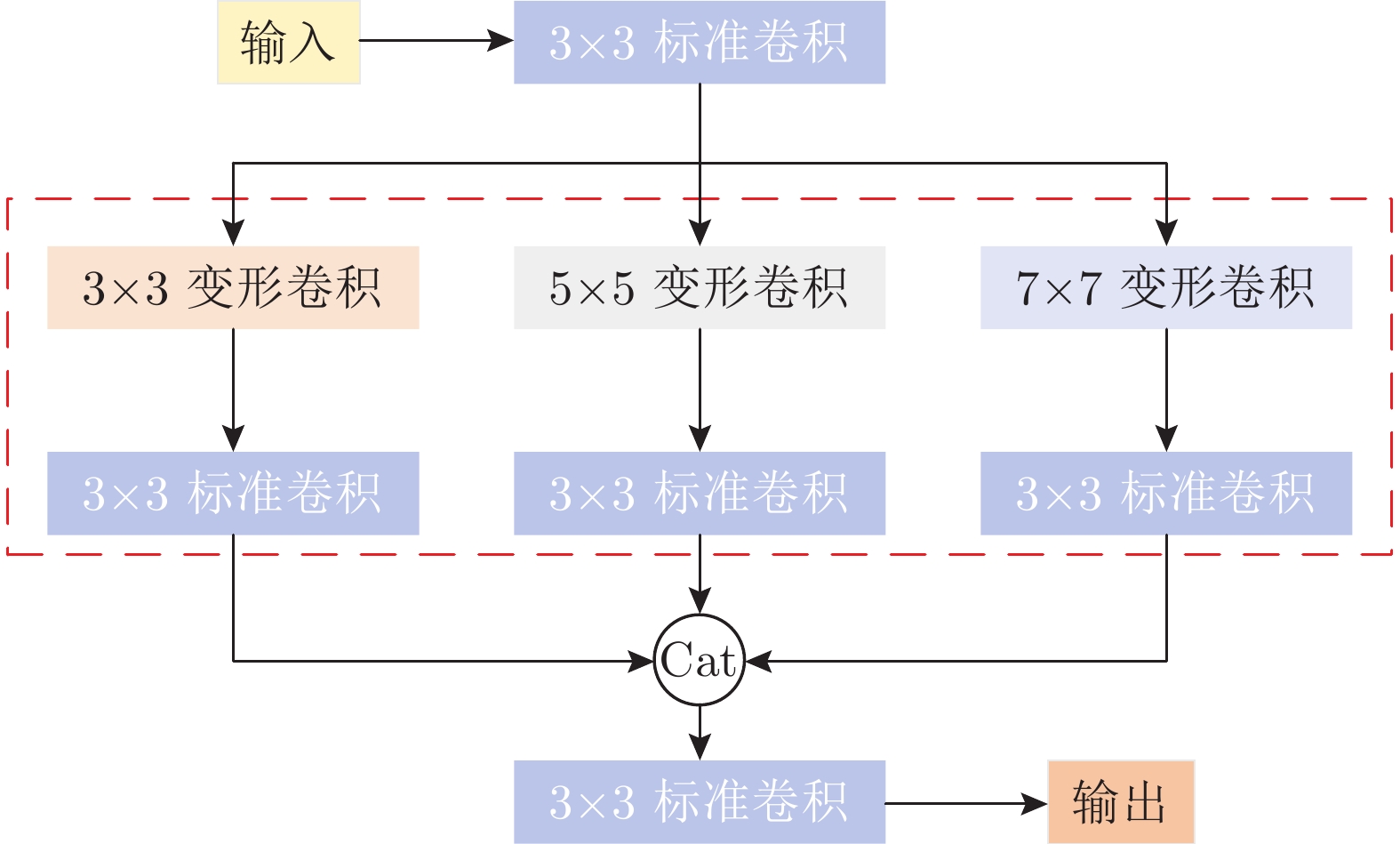

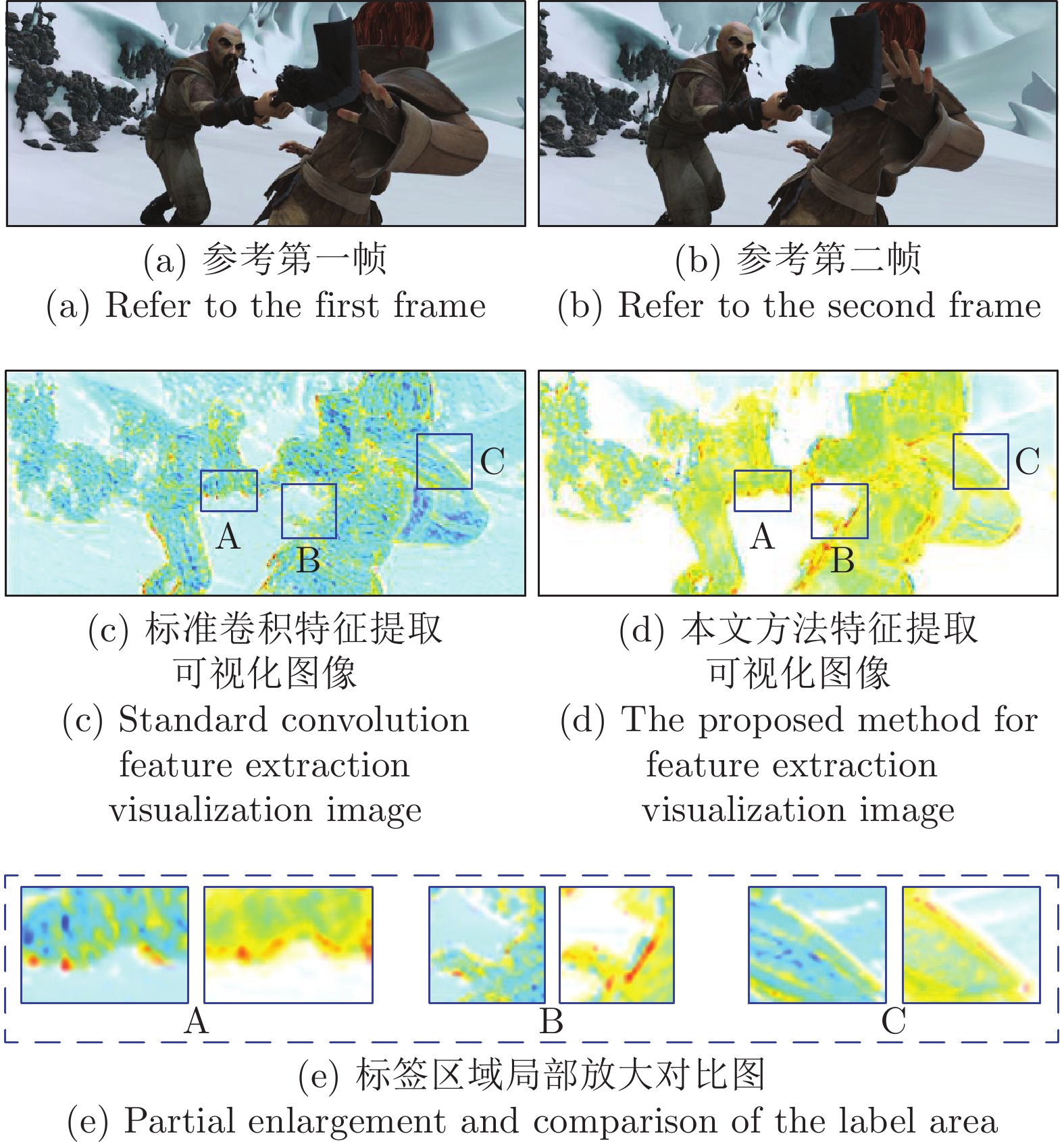

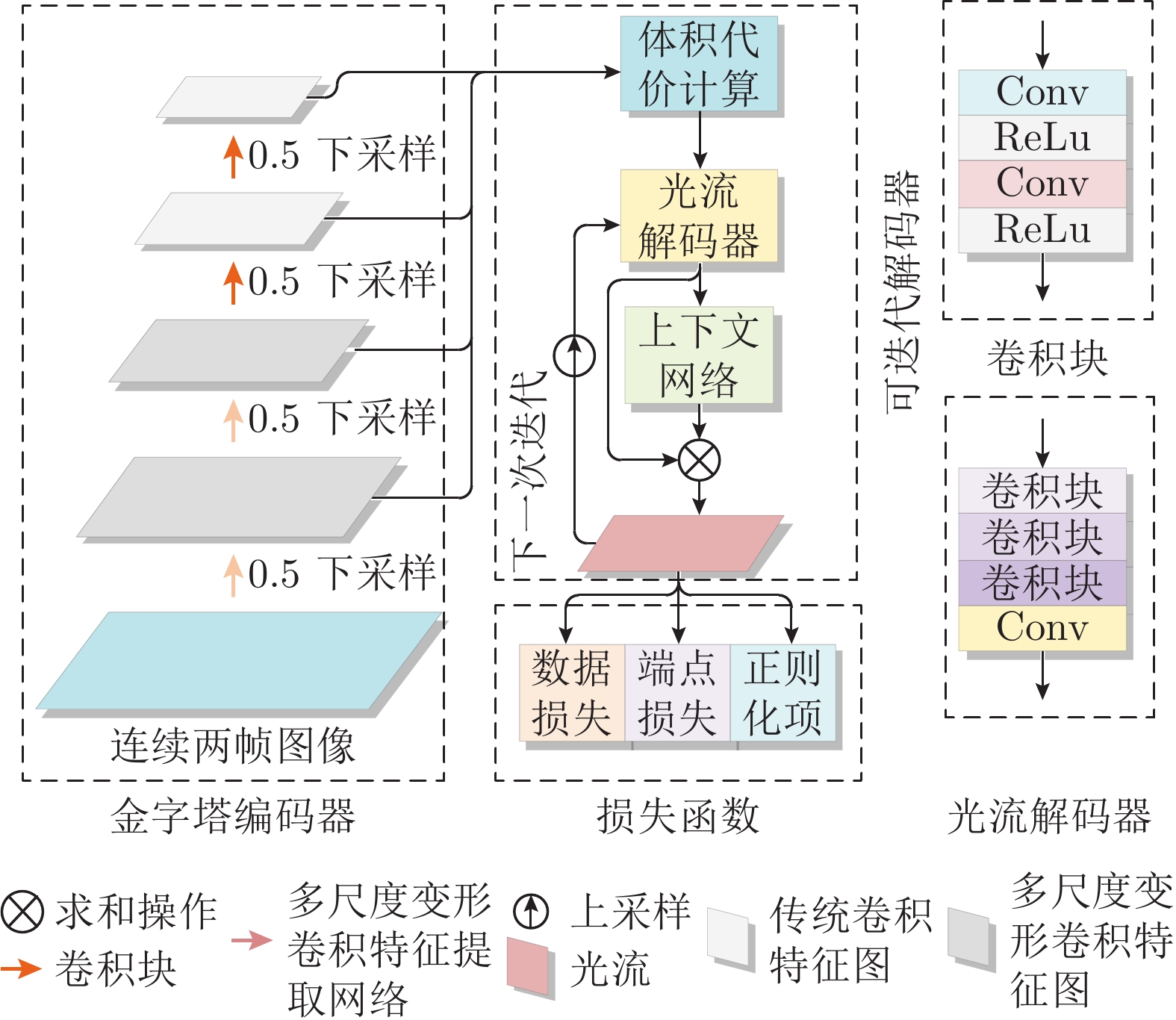

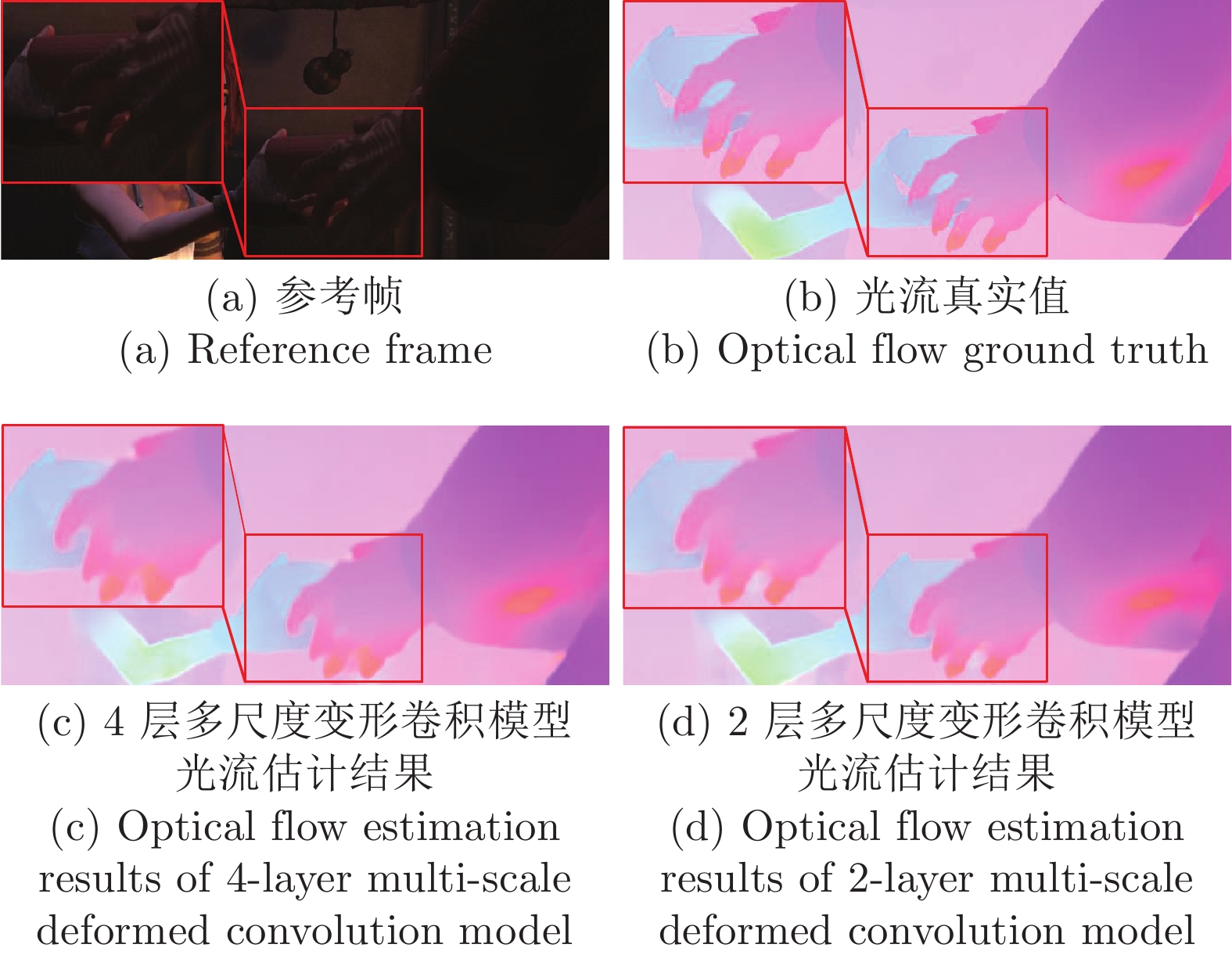

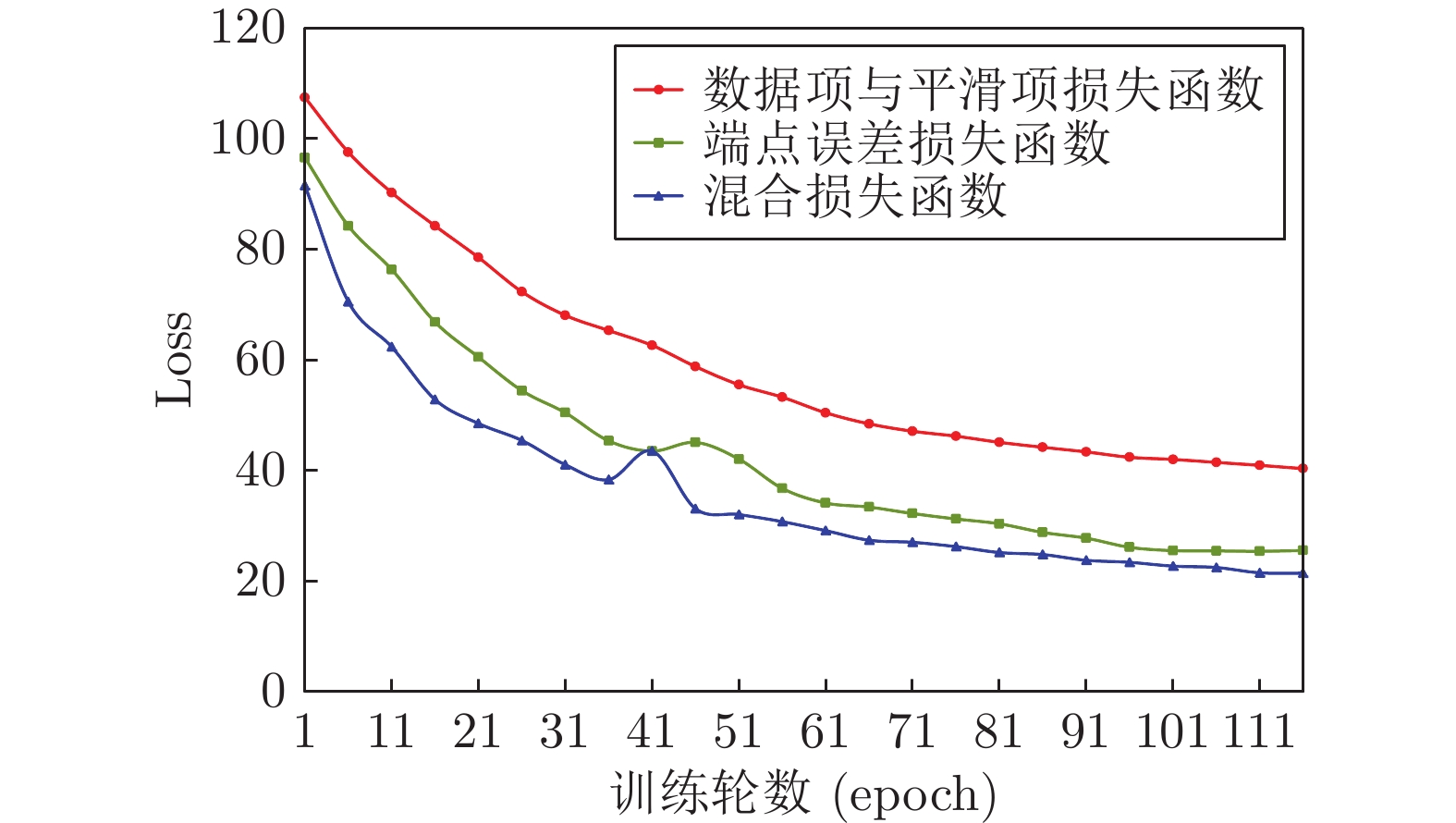

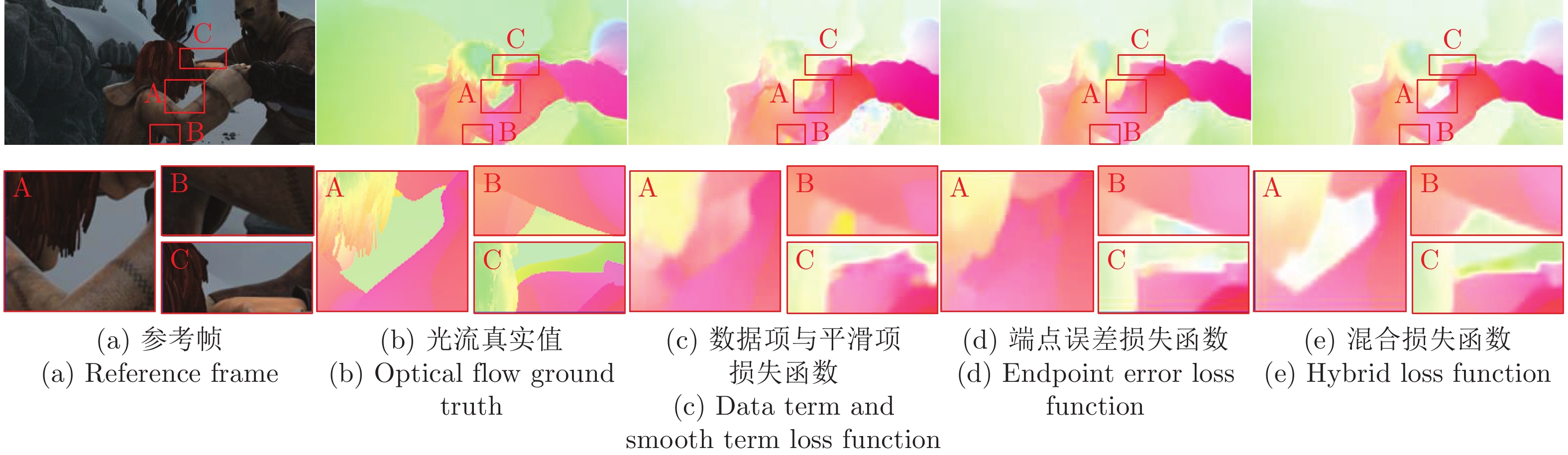

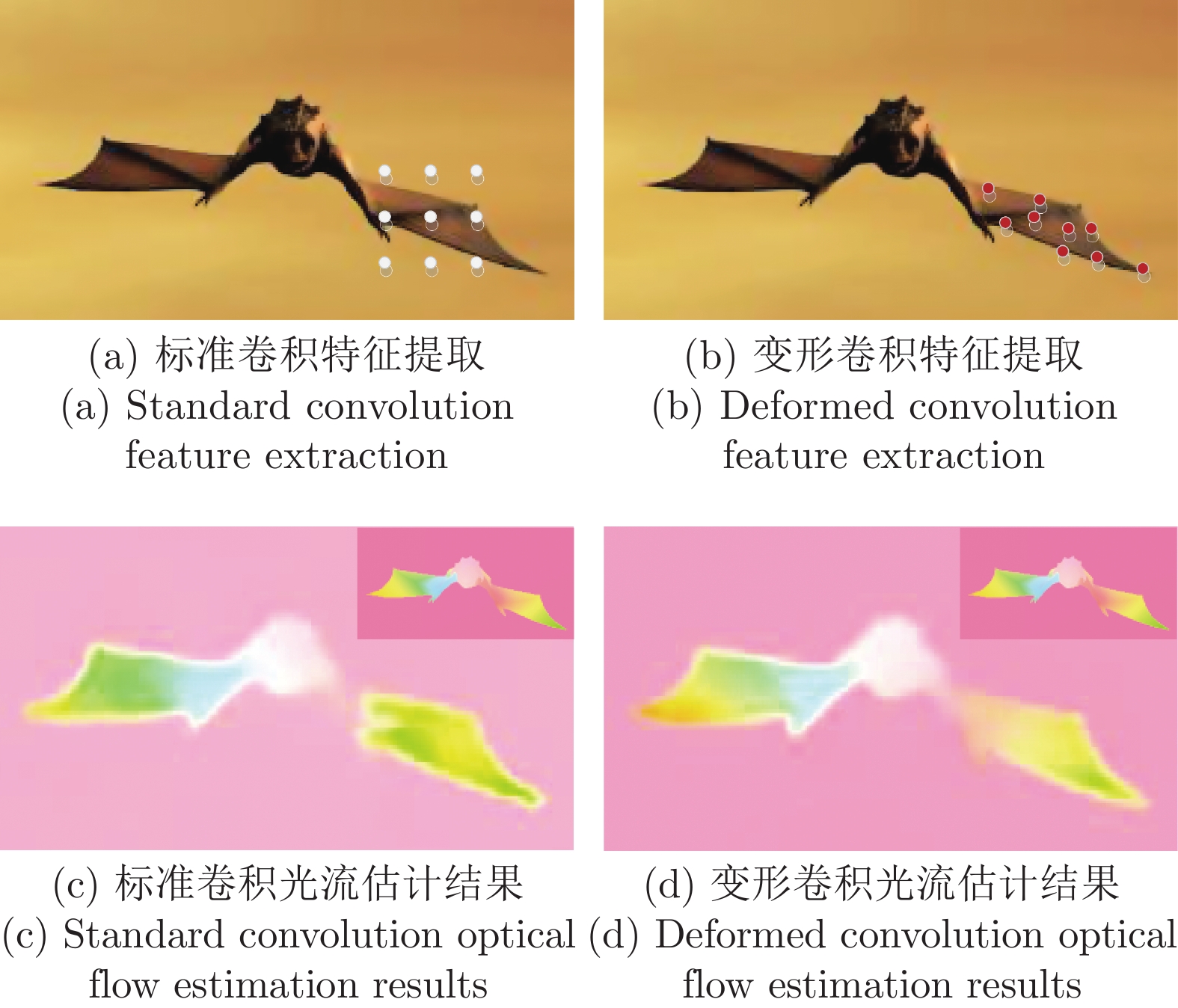

Abstract: To address the motion-edge blurring produced by existing deep-learning-based optical flow estimation methods, this paper proposes a feature pyramid optical flow estimation method based on multi-scale deformable convolution. First, a feature extraction module based on multi-scale deformable convolution is constructed to improve the accuracy of feature extraction in image edge regions. Second, this module is coupled with a feature-pyramid-based optical flow estimation network to form a feature pyramid optical flow estimation model based on multi-scale deformable convolution. Third, a hybrid loss function combining image-edge and motion-edge constraints is designed; it addresses edge blurring by guiding the model to learn more accurate edge information. Finally, the MPI-Sintel and KITTI2015 test datasets are used to compare the proposed method comprehensively with representative deep-learning-based optical flow estimation methods. The experimental results indicate that the proposed method achieves higher optical flow estimation accuracy and effectively overcomes edge blurring in optical flow estimation.

Key words: optical flow, deep learning, deformable convolution, feature pyramid, edge-preserving
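As a rough illustration of the deformable convolution idea named in the abstract and key words, the sketch below evaluates a 3x3 deformable convolution at one output position in pure Python: each kernel tap is shifted by a per-tap offset and read with bilinear interpolation. This is a generic sketch, not the paper's module. The function names, the fixed (hand-supplied) offsets, and the use of several dilation rates to stand in for "multi-scale" are all assumptions made for illustration; in a trained network the offsets would be predicted by an additional convolution.

```python
import math

def bilinear(img, y, x):
    """Sample a 2-D list at fractional (y, x); positions outside the image read as 0."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                total += weight * img[yy][xx]
    return total

def deformable_conv_at(img, kernel, offsets, cy, cx, dilation=1):
    """3x3 deformable convolution response at output position (cy, cx).

    offsets[k] = (dy, dx) is the shift applied to kernel tap k (row-major order).
    Here the offsets are supplied by hand (assumption); a network would learn them.
    """
    out = 0.0
    k = 0
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            dy, dx = offsets[k]
            sample = bilinear(img, cy + i * dilation + dy, cx + j * dilation + dx)
            out += kernel[i + 1][j + 1] * sample
            k += 1
    return out

def multi_scale_response(img, kernel, offsets, cy, cx, dilations=(1, 2)):
    """Hypothetical multi-scale variant: the same taps evaluated at several dilation rates."""
    return [deformable_conv_at(img, kernel, offsets, cy, cx, d) for d in dilations]
```

With all offsets set to zero the function reduces to an ordinary (dilated) 3x3 convolution, which is a convenient sanity check for the sampling code.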

-

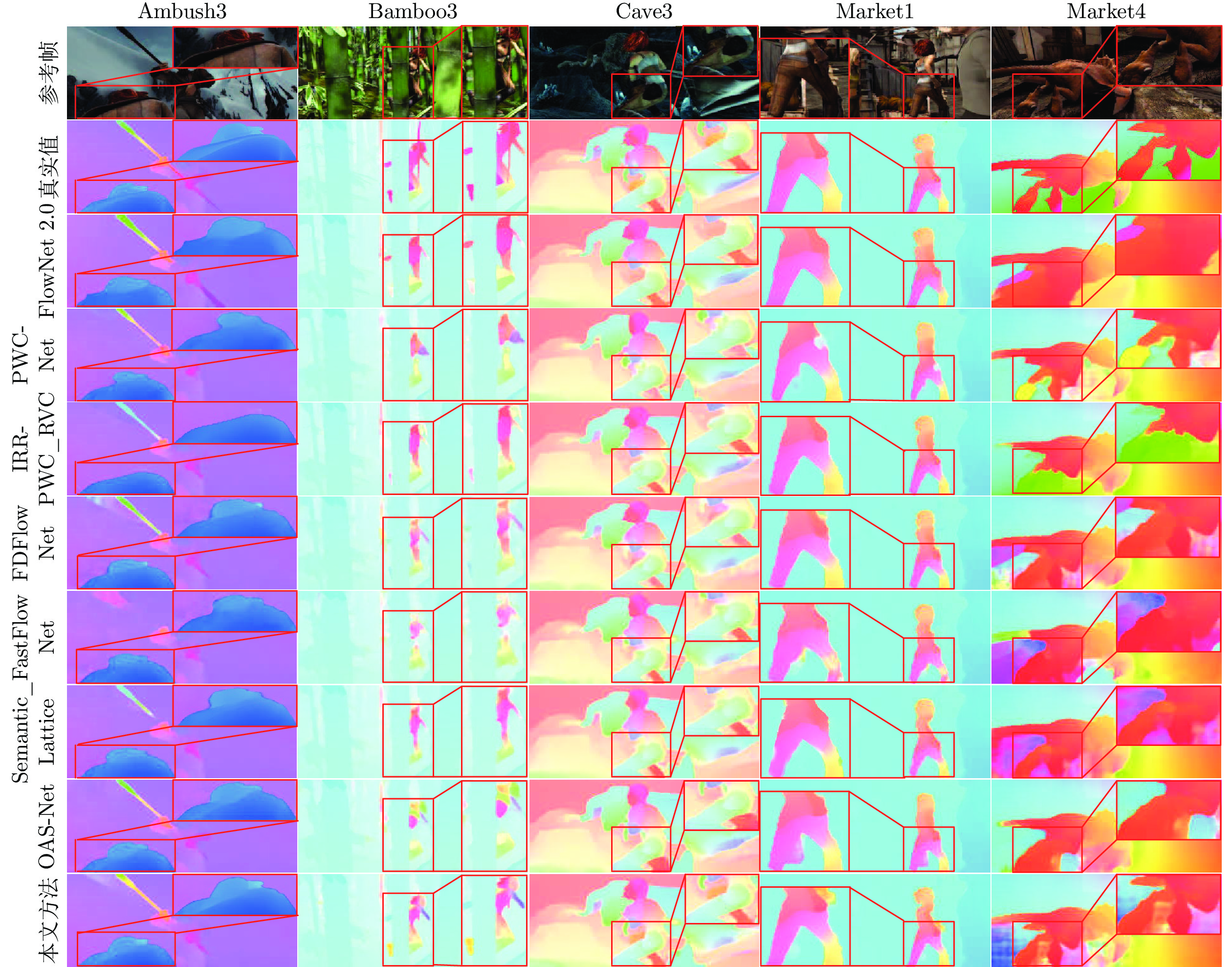

Table 1 Optical flow estimation results on image sequences of the MPI-Sintel dataset

| Method | Clean All | Clean Matched | Clean Unmatched | Final All | Final Matched | Final Unmatched |
| --- | --- | --- | --- | --- | --- | --- |
| FlowNet 2.0[15] | 4.16 | 1.56 | 25.40 | 5.74 | 2.75 | 30.11 |
| PWC-Net[16] | 4.39 | 1.72 | 26.17 | 5.04 | 2.45 | 26.22 |
| IRR-PWC_RVC[19] | 3.79 | 2.04 | 18.04 | 4.80 | 2.77 | 21.34 |
| FDFlowNet[31] | 3.71 | 1.54 | 21.38 | 5.11 | 2.52 | 26.23 |
| FastFlowNet[25] | 4.89 | 1.79 | 30.18 | 6.08 | 2.94 | 31.69 |
| Semantic_Lattice[28] | 3.84 | 1.70 | 21.30 | 4.89 | 2.46 | 24.70 |
| OAS-Net[29] | 3.65 | 1.49 | 21.32 | 5.04 | 2.46 | 25.86 |
| Proposed method | 3.43 | 1.31 | 20.79 | 4.78 | 2.32 | 24.77 |
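The All, Matched, and Unmatched columns of Table 1 follow the MPI-Sintel benchmark convention of reporting average end-point error (EPE) over all pixels, over pixels visible in both adjacent frames, and over pixels visible in only one of them. A minimal sketch of that computation is given below; the function name, the flat list-of-vectors layout, and the boolean visibility mask are assumptions made for illustration, not the benchmark's own evaluation code.

```python
import math

def average_epe(pred, gt, mask=None):
    """Mean Euclidean distance between predicted and ground-truth flow vectors.

    pred, gt: lists of (u, v) flow vectors, one per pixel.
    mask: optional list of bools; only pixels with a True entry are averaged.
    Returns NaN when no pixel is selected.
    """
    errors = [
        math.hypot(pu - gu, pv - gv)
        for i, ((pu, pv), (gu, gv)) in enumerate(zip(pred, gt))
        if mask is None or mask[i]
    ]
    return sum(errors) / len(errors) if errors else float("nan")

# Toy data (hypothetical): three pixels, the last one occluded in the next frame.
pred = [(1.0, 0.0), (0.0, 0.0), (3.0, 4.0)]
gt = [(1.0, 0.0), (3.0, 4.0), (0.0, 0.0)]
visible = [True, True, False]

epe_all = average_epe(pred, gt)
epe_matched = average_epe(pred, gt, visible)
epe_unmatched = average_epe(pred, gt, [not v for v in visible])
```

Lower values are better in every column, which is why the Unmatched figures, dominated by occluded regions, are an order of magnitude larger than the Matched ones.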

Table 2 Comparison of motion-edge and large-displacement metrics on the MPI-Sintel dataset

| Method | Clean ${\rm{d}}_{0-10}$ | Clean ${\rm{d}}_{10-60}$ | Clean ${\rm{d}}_{60-140}$ | Clean ${\rm{s}}_{0-10}$ | Clean ${\rm{s}}_{10-40}$ | Clean ${\rm{s}}_{40+}$ | Final ${\rm{d}}_{0-10}$ | Final ${\rm{d}}_{10-60}$ | Final ${\rm{d}}_{60-140}$ | Final ${\rm{s}}_{0-10}$ | Final ${\rm{s}}_{10-40}$ | Final ${\rm{s}}_{40+}$ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FlowNet 2.0[15] | 3.09 | 1.32 | 0.92 | 0.64 | 1.90 | 25.42 | 5.14 | 2.79 | 2.10 | 1.24 | 4.03 | 34.51 |
| PWC-Net[16] | 4.28 | 1.66 | 0.67 | 0.61 | 2.07 | 28.79 | 4.64 | 2.09 | 1.48 | 0.80 | 2.99 | 31.07 |
| IRR-PWC_RVC[19] | 4.05 | 1.70 | 1.04 | 0.68 | 2.11 | 23.23 | 5.06 | 2.55 | 1.66 | 0.81 | 3.20 | 28.45 |
| FDFlowNet[31] | 3.81 | 1.42 | 0.69 | 0.84 | 2.20 | 21.63 | 4.67 | 2.17 | 1.64 | 1.03 | 3.12 | 30.16 |
| FastFlowNet[25] | 4.25 | 1.64 | 0.91 | 0.81 | 2.36 | 31.24 | 5.20 | 2.56 | 2.04 | 1.07 | 3.41 | 37.44 |
| Semantic_Lattice[28] | 3.86 | 1.43 | 0.80 | 0.60 | 2.00 | 24.40 | 4.60 | 2.08 | 1.53 | 0.80 | 3.02 | 29.65 |
| OAS-Net[29] | 3.81 | 1.39 | 0.59 | 0.75 | 2.13 | 21.78 | 4.54 | 2.05 | 1.57 | 0.88 | 2.91 | 30.63 |
| Proposed method | 3.15 | 1.15 | 0.59 | 0.64 | 1.78 | 21.33 | 4.13 | 1.87 | 1.59 | 0.85 | 2.60 | 29.51 |
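In the MPI-Sintel benchmark, the s columns of Table 2 report end-point error restricted to pixels whose ground-truth speed falls in a band (0-10, 10-40, and above 40 pixels per frame), while the d columns restrict it to bands of distance from the nearest occlusion boundary. The sketch below shows the speed-banded variant only. The function name, the half-open band boundaries, and the list-of-vectors layout are assumptions for illustration; the distance-banded variant would bin on a precomputed distance map instead of the speed.

```python
import math

def epe_by_speed(pred, gt, edges=(0.0, 10.0, 40.0, math.inf)):
    """Average end-point error per ground-truth speed band [edges[k], edges[k+1]).

    pred, gt: lists of (u, v) flow vectors, one per pixel.
    Returns one value per band, or None for a band that contains no pixels.
    """
    n_bands = len(edges) - 1
    sums = [0.0] * n_bands
    counts = [0] * n_bands
    for (pu, pv), (gu, gv) in zip(pred, gt):
        speed = math.hypot(gu, gv)  # ground-truth displacement magnitude
        for k in range(n_bands):
            if edges[k] <= speed < edges[k + 1]:
                sums[k] += math.hypot(pu - gu, pv - gv)
                counts[k] += 1
                break
    return [s / c if c else None for s, c in zip(sums, counts)]
```

For example, ground-truth vectors with speeds 5, 20, and 50 pixels per frame land in the first, second, and third band respectively, so each band's value is simply that pixel's error.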

Table 3 Optical flow estimation results on the KITTI2015 dataset

| Method | Fl-bg | Fl-fg | Fl-all | Time (s) |
| --- | --- | --- | --- | --- |
| FlowNet 2.0[15] | 10.75% | 8.75% | 10.41% | 0.12 |
| PWC-Net[16] | 9.66% | 9.31% | 9.60% | 0.07 |
| IRR-PWC_RVC[19] | 7.61% | 12.22% | 8.38% | 0.18 |
| LiteFlowNet[26] | 9.66% | 7.99% | 9.38% | 0.09 |
| FlowNet3[27] | 9.82% | 10.91% | 10.00% | 0.09 |
| LSM_RVC[30] | 7.33% | 13.06% | 8.28% | 0.25 |
| FDFlowNet[31] | 9.31% | 9.71% | 9.38% | 0.05 |
| Proposed method | 7.25% | 10.06% | 7.72% | 0.13 |
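The Fl columns of Table 3 are outlier percentages as defined by the KITTI 2015 flow benchmark: a pixel counts as correct when its end-point error is below 3 pixels or below 5% of the ground-truth flow magnitude, and Fl-bg, Fl-fg, and Fl-all apply this to background, foreground, and all pixels. A minimal sketch follows; the function name and data layout are assumptions for illustration, and the treatment of values exactly on a threshold is a guess rather than a reading of the official development kit.

```python
import math

def fl_outlier_rate(pred, gt, abs_thresh=3.0, rel_thresh=0.05):
    """Percentage of pixels whose flow estimate is an outlier.

    A pixel is an outlier when its end-point error is at least abs_thresh pixels
    AND at least rel_thresh times the ground-truth magnitude (boundary handling
    is an assumption).
    """
    outliers = 0
    for (pu, pv), (gu, gv) in zip(pred, gt):
        err = math.hypot(pu - gu, pv - gv)
        mag = math.hypot(gu, gv)
        if err >= abs_thresh and err >= rel_thresh * mag:
            outliers += 1
    return 100.0 * outliers / len(gt)
```

To obtain a foreground-only or background-only figure under the same assumptions, the function can be called on the subset of pixels selected by the corresponding object mask.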

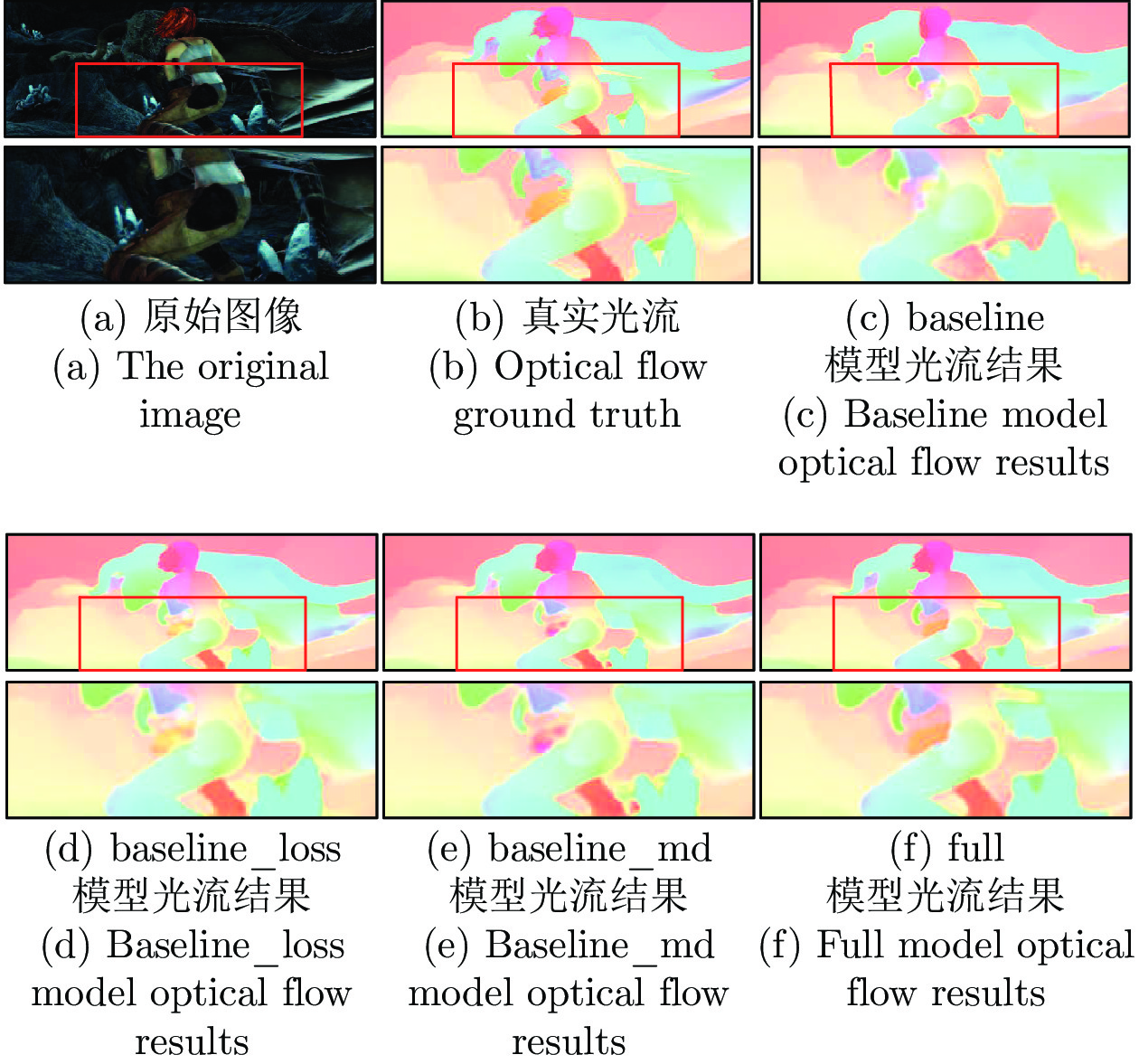

Table 4 Comparison of ablation experiment results on the MPI-Sintel dataset

| Ablation model | All | Matched | Unmatched | ${\rm{d}}_{0-10}$ | ${\rm{d}}_{10-60}$ | ${\rm{d}}_{60-140}$ |
| --- | --- | --- | --- | --- | --- | --- |
| baseline | 4.39 | 1.72 | 26.17 | 4.28 | 1.66 | 0.67 |
| baseline_loss | 4.03 | 1.63 | 23.76 | 3.17 | 1.25 | 0.97 |
| baseline_md | 4.19 | 1.69 | 24.58 | 3.32 | 1.35 | 0.98 |
| full model | 3.43 | 1.31 | 20.79 | 3.15 | 1.15 | 0.59 |
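To make the ablation in Table 4 easier to read, the short sketch below converts the "All" column into relative error reductions with respect to the baseline row. The numbers are copied from Table 4; the helper name and the choice to round to one decimal place are assumptions for illustration.

```python
def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    return 100.0 * (baseline - value) / baseline

# "All" column of Table 4 (lower is better).
ablation_all = {
    "baseline": 4.39,
    "baseline_loss": 4.03,
    "baseline_md": 4.19,
    "full model": 3.43,
}

gains = {
    name: round(relative_reduction(ablation_all["baseline"], value), 1)
    for name, value in ablation_all.items()
}
```

Under this arithmetic the full model lowers the overall error by roughly 22% relative to the baseline, more than the two single-component variants taken individually (about 8% and 5%).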

[1] Fu Jing-Yi, Yu Lei, Yang Wen, Lu Xin. Event-based continuous optical flow estimation. Acta Automatica Sinica, DOI: 10.16383/j.aas.c210242

[2] Mahapatra D, Ge Z Y. Training data independent image registration using generative adversarial networks and domain adaptation. Pattern Recognition, 2020, 100: Article No. 107109

[3] Zhang X S, Jia J P, Cheng Y H, Wang X S. Micro-expression recognition algorithm based on 3D convolutional neural network and optical flow fields from neighboring frames of apex frame. Pattern Recognition and Artificial Intelligence, 2021, 34(5): 423-433 doi: 10.16451/j.cnki.issn1003-6059.202105005

[4] Feng Cheng, Zhang Cong-Xuan, Chen Zhen, Li Bing, Li Ming. Occlusion detection based on optical flow and multiscale context aggregation. Acta Automatica Sinica, DOI: 10.16383/j.aas.c210324

[5] Bahraini M S, Zenati A, Aouf N. Autonomous cooperative visual navigation for planetary exploration robots. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Xi'an, China: IEEE, 2021. 9653-9658

[6] Zhai M L, Xiang X Z, Lv N, Kong X D. Optical flow and scene flow estimation: A survey. Pattern Recognition, 2021, 114: Article No. 107861

[7] Rao S N, Wang H Z. Robust optical flow estimation via edge preserving filtering. Signal Processing: Image Communication, 2021, 96: Article No. 116309

[8] Zhang C X, Chen Z, Wang M R, Li M, Jiang S F. Robust non-local TV-L1 optical flow estimation with occlusion detection. IEEE Transactions on Image Processing, 2017, 26: 4055-4067 doi: 10.1109/TIP.2017.2712279

[9] Mei L, Lai J H, Xie X H, Zhu J Y, Chen J. Illumination-invariance optical flow estimation using weighted regularization transform. IEEE Transactions on Circuits and Systems for Video Technology, 2020, 30(2): 495-508 doi: 10.1109/TCSVT.2019.2890861

[10] Chen J, Cai Z, Lai J H, Xie X H. Efficient segmentation-based PatchMatch for large displacement optical flow estimation. IEEE Transactions on Circuits and Systems for Video Technology, 2019, 29(12): 3595-3607 doi: 10.1109/TCSVT.2018.2885246

[11] Deng Y, Xiao J M, Zhou S Z, Feng J S. Detail preserving coarse-to-fine matching for stereo matching and optical flow. IEEE Transactions on Image Processing, 2021, 30: 5835-5847 doi: 10.1109/TIP.2021.3088635

[12] Zhang C X, Ge L Y, Chen Z, Li M, Liu W, Chen H. Refined TV-L1 optical flow estimation using joint filtering. IEEE Transactions on Multimedia, 2020, 22(2): 349-364 doi: 10.1109/TMM.2019.2929934

[13] Dong C, Wang Z S, Han J M, Xing C D, Tang S F. A non-local propagation filtering scheme for edge-preserving in variational optical flow computation. Signal Processing: Image Communication, 2021, 93: Article No. 116143

[14] Dosovitskiy A, Fischer P, Ilg E, Hausser P, Hazirbas C, Golkov V. FlowNet: Learning optical flow with convolutional networks. In: Proceedings of the International Conference on Computer Vision (ICCV). Santiago, Chile: IEEE, 2015. 2758-2766

[15] Ilg E, Mayer N, Saikia T, Keuper M, Dosovitskiy A, Brox T. FlowNet 2.0: Evolution of optical flow estimation with deep networks. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, USA: IEEE, 2017. 1647-1655

[16] Sun D Q, Yang X D, Liu M Y, Kautz J. PWC-Net: CNNs for optical flow using pyramid, warping, and cost volume. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 8934-8943

[17] Yu J J, Harley A W, Derpanis K G. Back to basics: Unsupervised learning of optical flow via brightness constancy and motion smoothness. In: Proceedings of the European Conference on Computer Vision (ECCV). Amsterdam, The Netherlands: Springer, 2016. 3-10

[18] Liu P P, King I, Lyu M R, Xu J. DDFlow: Learning optical flow with unlabeled data distillation. In: Proceedings of the AAAI Conference on Artificial Intelligence. Phoenix, USA: AAAI, 2019. 2-8

[19] Hur J, Roth S. Iterative residual refinement for joint optical flow and occlusion estimation. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 5747-5756

[20] Zhao S Y, Sheng Y L, Dong Y, Chang E I, Xu Y. MaskFlownet: Asymmetric feature matching with learnable occlusion mask. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 6277-6286

[21] Meister S, Hur J, Roth S. UnFlow: Unsupervised learning of optical flow with a bidirectional census loss. In: Proceedings of the AAAI Conference on Artificial Intelligence. New Orleans, USA: AAAI, 2018. 7251-7259

[22] Zhang C X, Zhou Z Z, Chen Z, Hu W M, Li M, Jiang S F. Self-attention-based multiscale feature learning optical flow with occlusion feature map prediction. IEEE Transactions on Multimedia, 2021: 3340-3354 doi: 10.1109/TMM.2021.3096083, to be published

[23] Butler D J, Wulff J, Stanley G B, Black M J. A naturalistic open source movie for optical flow evaluation. In: Proceedings of the European Conference on Computer Vision (ECCV). Florence, Italy: Springer, 2012. 611-625

[24] Menze M, Geiger A. Object scene flow for autonomous vehicles. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR). Boston, USA: IEEE, 2015. 3061-3070

[25] Kong L T, Shen C H, Yang J. FastFlowNet: A lightweight network for fast optical flow estimation. In: Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). Xi'an, China: IEEE, 2021. 10310-10316

[26] Hui T W, Tang X O, Loy C C. LiteFlowNet: A lightweight convolutional neural network for optical flow estimation. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR). Salt Lake City, USA: IEEE, 2018. 8981-8989

[27] Ilg E, Saikia T, Keuper M, Brox T. Occlusions, motion and depth boundaries with a generic network for disparity, optical flow or scene flow estimation. In: Proceedings of the European Conference on Computer Vision (ECCV). Munich, Germany: Springer, 2018. 626-643

[28] Wannenwetsch A S, Kiefel M, Gehler P V, Roth S. Learning task-specific generalized convolutions in the permutohedral lattice. In: Proceedings of the German Conference on Pattern Recognition (GCPR). Cham, Germany: Springer, 2019. 345-359

[29] Kong L T, Yang X H, Yang J. OAS-Net: Occlusion aware sampling network for accurate optical flow. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Toronto, Canada: IEEE, 2021. 2475-2479

[30] Tang C Z, Yuan L, Tan P. LSM: Learning subspace minimization for low-level vision. In: Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR). Seattle, USA: IEEE, 2020. 6234-6245

[31] Kong L T, Yang J. FDFlowNet: Fast optical flow estimation using a deep lightweight network. In: Proceedings of the IEEE International Conference on Image Processing (ICIP). Anchorage, USA: IEEE, 2020. 1501-1505
