
摘要:

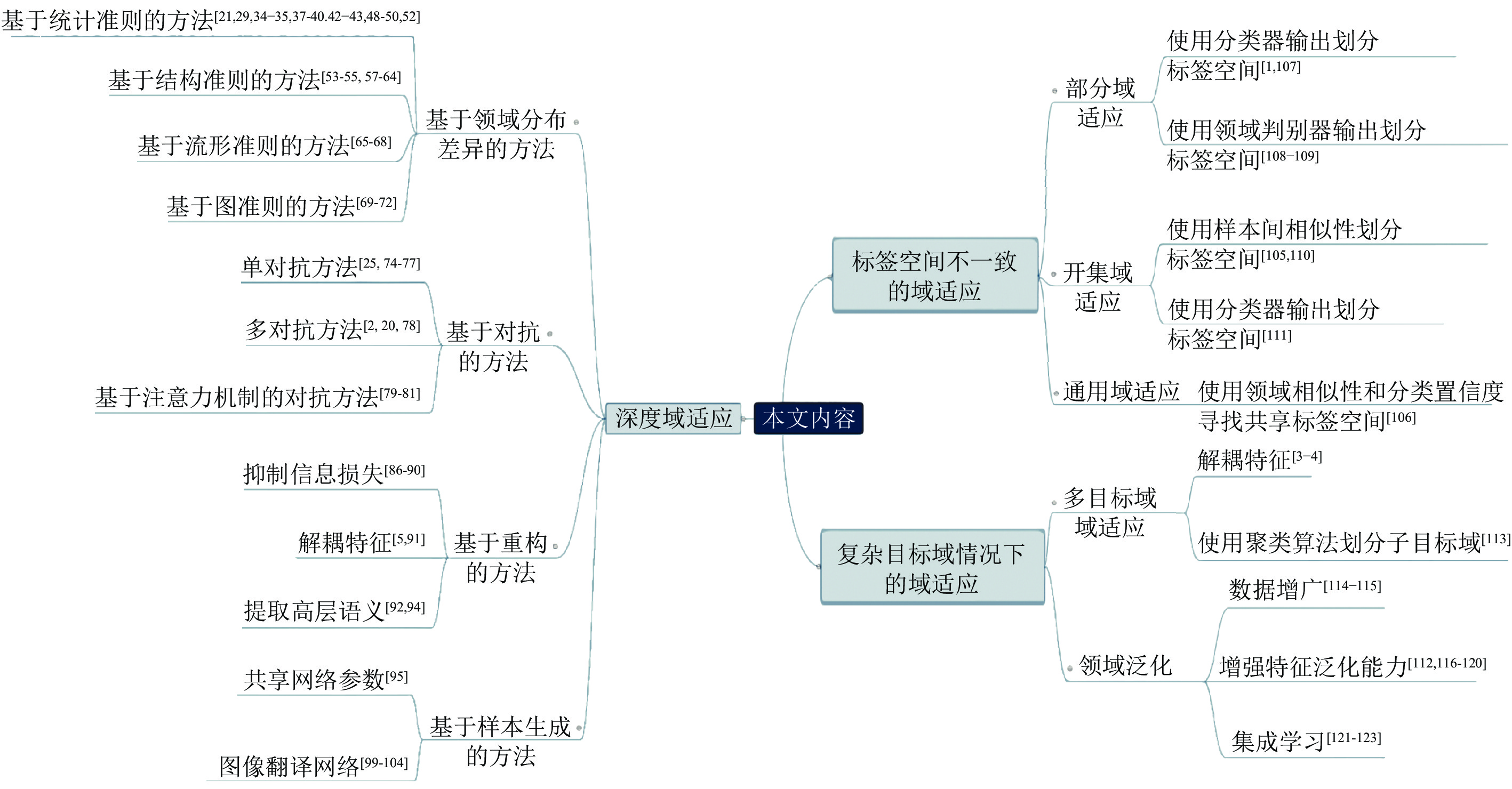

信息时代产生的大量数据使机器学习技术成功地应用于许多领域. 大多数机器学习技术需要满足训练集与测试集独立同分布的假设, 但在实际应用中这个假设很难满足. 域适应是一种在训练集和测试集不满足独立同分布条件下的机器学习技术. 一般情况下的域适应只适用于源域目标域特征空间与标签空间都相同的情况, 然而实际上这个条件很难满足. 为了增强域适应技术的适用性, 复杂情况下的域适应逐渐成为研究热点, 其中标签空间不一致和复杂目标域情况下的域适应技术是近年来的新兴方向. 随着深度学习技术的崛起, 深度域适应已经成为域适应研究领域中的主流方法. 本文对一般情况与复杂情况下的深度域适应的研究进展进行综述, 对其缺点进行总结, 并对其未来的发展趋势进行预测. 首先对迁移学习相关概念进行介绍, 然后分别对一般情况与复杂情况下的域适应、域适应技术的应用以及域适应方法性能的实验结果进行综述, 最后对域适应领域的未来发展趋势进行展望并对全文内容进行总结.

Abstract:The large amount of data generated in the information age enables machine learning to be successfully applied in many fields. Most machine learning techniques need to meet the assumption that the training set and the test set are independent and identically distributed, but in practice this assumption is difficult to meet. Domain adaptation is a machine learning technology in which the training set and test set do not need to satisfy the condition of independent and identical distribution. The general domain adaptation is only applicable to the case where feature space and label space of the source domain and target domain are the same, but in fact this condition is difficult to meet. In order to enhance the applicability of domain adaptation, domain adaptation under complex conditions has gradually become a research hotspot. Domain adaptation under the condition of inconsistent label space and complex target domain is an emerging direction in recent years. With the rise of deep learning technology, deep domain adaptation has become the mainstream method in the field of domain adaptation research. This article reviews the research progress of deep domain adaptation in general and complex situations, summarizes their shortcomings, and predicts their future development trends. This article firstly introduces the concepts of transfer learning, and then summarizes domain adaptation in general and complex situations, the application of domain adaptation technology and the performance of domain adaptation methods, finally prospects the development trend of the domain adaptation field and summarizes the content of the full text.


Key words: Domain adaptation; transfer learning; deep domain adaptation; deep learning; machine learning


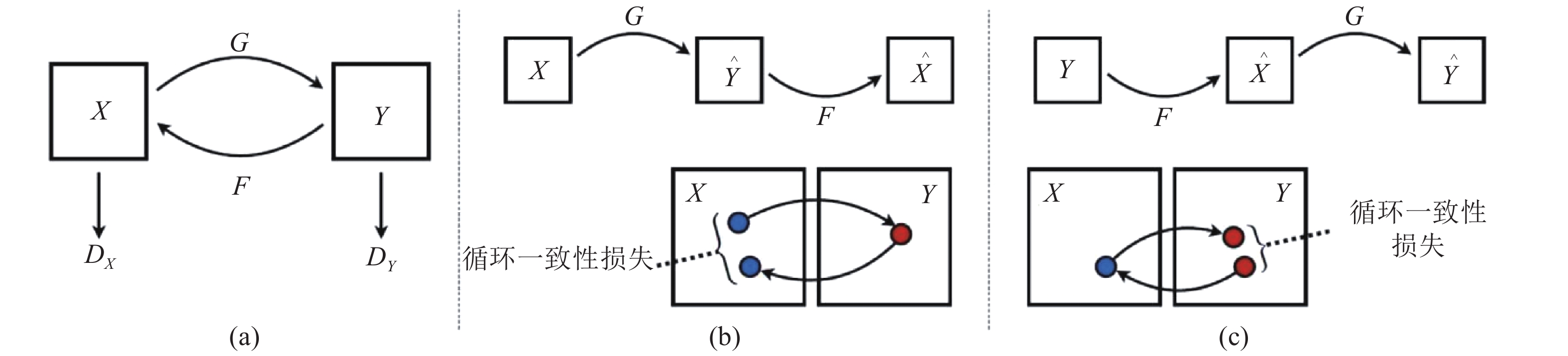

Fig. 7 The training process of CycleGAN ((a) source images are translated into the target domain by translation network G, and target images are translated into the source domain by translation network F; (b) the cycle-consistency loss is computed in the source domain; (c) the cycle-consistency loss is computed in the target domain)
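Panels (b) and (c) of Fig. 7 describe the two reconstruction cycles that CycleGAN [21] penalizes with an L1 distance. The following is a minimal sketch of the cycle-consistency term only. It assumes that images are flattened into plain Python lists of floats and that `G` and `F` are arbitrary callables standing in for the translation networks. The adversarial losses and the weighting coefficient of the full CycleGAN objective are left out, and all helper names are hypothetical.

```python
from typing import Callable, List, Sequence

Image = List[float]  # a flattened image; a simplification for illustration
Translator = Callable[[Image], Image]


def mean_abs_error(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute (L1) difference between two equally sized vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    source: Sequence[Image],
    target: Sequence[Image],
    G: Translator,  # hypothetical source -> target translator
    F: Translator,  # hypothetical target -> source translator
) -> float:
    # Panel (b): the source cycle x -> G(x) -> F(G(x)) should reconstruct x.
    source_term = sum(mean_abs_error(F(G(x)), x) for x in source) / len(source)
    # Panel (c): the target cycle y -> F(y) -> G(F(y)) should reconstruct y.
    target_term = sum(mean_abs_error(G(F(y)), y) for y in target) / len(target)
    return source_term + target_term
```

Suppose `F` exactly inverts `G`, for example `G(v) = 2v + 1` and `F(v) = (v - 1) / 2` applied element-wise. Then both cycles reconstruct their inputs and the loss is zero. Any mismatch between the two translators shows up as a positive loss.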

Table 1 Four kinds of methods for deep domain adaptation

Table 2 Domain adaptation with inconsistent label spaces
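The settings grouped under inconsistent label spaces differ only in how the source label set relates to the target label set. The helper below is a hypothetical illustration, not code from any cited method. It names the setting from two label sets and assumes both sets are known, although in practice the target labels are unavailable. It follows two common conventions: partial domain adaptation has target classes that form a proper subset of the source classes, and open set domain adaptation, as in OSBP [111] and STA [105], has target-only classes. The case with private classes on both sides is labelled "universal" because UAN [106] is designed to cope with it. Strictly, universal domain adaptation is defined by not knowing the relation in advance.

```python
from typing import AbstractSet, Hashable


def label_space_setting(
    source_labels: AbstractSet[Hashable], target_labels: AbstractSet[Hashable]
) -> str:
    """Name the domain adaptation setting implied by two label sets (illustrative only)."""
    if source_labels == target_labels:
        return "closed set"  # identical label spaces: general domain adaptation
    if target_labels < source_labels:
        return "partial"  # every target class exists in the source, not vice versa
    if source_labels < target_labels:
        return "open set"  # the target contains classes never seen in the source
    if source_labels & target_labels:
        return "universal"  # shared classes plus private classes on both sides
    return "no shared classes"  # nothing to transfer at the label level
```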

Table 3 Domain adaptation with complex target domains

Table 4 Accuracy of each deep domain adaptation method on the Office31 dataset (%)

| Method | A→W | D→W | W→D | A→D | D→A | W→A | Avg |
|---|---|---|---|---|---|---|---|
| ResNet50[26] | 68.4 | 96.7 | 99.3 | 68.9 | 62.5 | 60.7 | 76.1 |
| DAN[20] | 80.5 | 97.1 | 99.6 | 78.6 | 63.6 | 62.8 | 80.4 |
| DCORAL[42] | 79.0 | 98.0 | 100.0 | 82.7 | 65.3 | 64.5 | 81.6 |
| RTN[39] | 84.5 | 96.8 | 99.4 | 77.5 | 66.2 | 64.8 | 81.6 |
| DANN[27] | 82.0 | 96.9 | 99.1 | 79.7 | 68.2 | 67.4 | 82.2 |
| ADDA[75] | 86.2 | 96.2 | 98.4 | 77.8 | 69.5 | 68.9 | 82.9 |
| JAN[23] | 85.4 | 97.4 | 99.8 | 84.7 | 68.6 | 70.0 | 84.3 |
| MADA[2] | 90.1 | 97.4 | 99.6 | 87.8 | 70.3 | 66.4 | 85.2 |
| GTA[99] | 89.5 | 97.9 | 99.8 | 87.7 | 72.8 | 71.4 | 86.5 |
| CDAN[22] | 94.1 | 98.6 | 100.0 | 92.9 | 71.0 | 69.3 | 87.7 |

Table 5 Accuracy of each deep domain adaptation method on the OfficeHome dataset (%)

| Method | A→C | A→P | A→R | C→A | C→P | C→R | P→A | P→C | P→R | R→A | R→C | R→P | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ResNet50[26] | 34.9 | 50.0 | 58.0 | 37.4 | 41.9 | 46.2 | 38.5 | 31.2 | 60.4 | 53.9 | 41.2 | 59.9 | 46.1 |
| DAN[20] | 43.6 | 57.0 | 67.9 | 45.8 | 56.5 | 60.4 | 44.0 | 43.6 | 67.7 | 63.1 | 51.5 | 74.3 | 56.3 |
| DANN[27] | 45.6 | 59.3 | 70.1 | 47.0 | 58.5 | 60.9 | 46.1 | 43.7 | 68.5 | 63.2 | 51.8 | 76.8 | 57.6 |
| JAN[23] | 45.9 | 61.2 | 68.9 | 50.4 | 59.7 | 61.0 | 45.8 | 43.4 | 70.3 | 63.9 | 52.4 | 76.8 | 58.3 |
| CDAN[22] | 50.7 | 70.6 | 76.0 | 57.6 | 70.0 | 70.0 | 57.4 | 50.9 | 77.3 | 70.9 | 56.7 | 81.6 | 65.8 |
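The Avg column of Table 5 is the unweighted mean over all 12 OfficeHome transfer tasks. The snippet below is a quick sanity check. It assumes that the reported values are rounded to one decimal place and recomputes each method's mean from the per-task numbers. The dictionary layout and the function name are hypothetical.

```python
from typing import Dict, List, Tuple

# Per-task accuracies (%) copied from Table 5 in the column order
# A→C, A→P, A→R, C→A, C→P, C→R, P→A, P→C, P→R, R→A, R→C, R→P,
# each paired with the reported average.
TABLE_5: Dict[str, Tuple[List[float], float]] = {
    "ResNet50": ([34.9, 50.0, 58.0, 37.4, 41.9, 46.2, 38.5, 31.2, 60.4, 53.9, 41.2, 59.9], 46.1),
    "DAN": ([43.6, 57.0, 67.9, 45.8, 56.5, 60.4, 44.0, 43.6, 67.7, 63.1, 51.5, 74.3], 56.3),
    "DANN": ([45.6, 59.3, 70.1, 47.0, 58.5, 60.9, 46.1, 43.7, 68.5, 63.2, 51.8, 76.8], 57.6),
    "JAN": ([45.9, 61.2, 68.9, 50.4, 59.7, 61.0, 45.8, 43.4, 70.3, 63.9, 52.4, 76.8], 58.3),
    "CDAN": ([50.7, 70.6, 76.0, 57.6, 70.0, 70.0, 57.4, 50.9, 77.3, 70.9, 56.7, 81.6], 65.8),
}


def inconsistent_averages(
    table: Dict[str, Tuple[List[float], float]], tolerance: float = 0.05
) -> Dict[str, float]:
    """Return {method: recomputed mean} for rows whose reported average differs
    from the mean of the per-task accuracies by more than rounding allows."""
    mismatches: Dict[str, float] = {}
    for method, (tasks, reported) in table.items():
        recomputed = sum(tasks) / len(tasks)
        if abs(recomputed - reported) > tolerance + 1e-9:
            mismatches[method] = recomputed
    return mismatches
```

Calling `inconsistent_averages(TABLE_5)` returns an empty dictionary. Every reported average therefore matches the 12-task mean up to one-decimal rounding.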

Table 6 Accuracy of each partial domain adaptation method on the Office31 dataset (%)

| Method | A→W | D→W | W→D | A→D | D→A | W→A | Avg |
|---|---|---|---|---|---|---|---|
| ResNet50[26] | 75.5 | 96.2 | 98.0 | 83.4 | 83.9 | 84.9 | 87.0 |
| DAN[20] | 59.3 | 73.9 | 90.4 | 61.7 | 74.9 | 67.6 | 71.3 |
| DANN[27] | 73.5 | 96.2 | 98.7 | 81.5 | 82.7 | 86.1 | 86.5 |
| IWAN[109] | 89.1 | 99.3 | 99.3 | 90.4 | 95.6 | 94.2 | 94.6 |
| SAN[1] | 93.9 | 99.3 | 99.3 | 94.2 | 94.1 | 88.7 | 94.9 |
| PADA[107] | 86.5 | 99.3 | 100.0 | 82.1 | 92.6 | 95.4 | 92.6 |
| ETN[108] | 94.5 | 100.0 | 100.0 | 95.0 | 96.2 | 94.6 | 96.7 |

Table 7 Accuracy of each open set domain adaptation method on the Office31 dataset (%)

| Method | A→W OS | A→W OS\* | A→D OS | A→D OS\* | D→W OS | D→W OS\* | W→D OS | W→D OS\* | D→A OS | D→A OS\* | W→A OS | W→A OS\* | Avg OS | Avg OS\* |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ResNet50[26] | 82.5 | 82.7 | 85.2 | 85.5 | 94.1 | 94.3 | 96.6 | 97.0 | 71.6 | 71.5 | 75.5 | 75.2 | 84.2 | 84.4 |
| RTN[39] | 85.6 | 88.1 | 89.5 | 90.1 | 94.8 | 96.2 | 97.1 | 98.7 | 72.3 | 72.8 | 73.5 | 73.9 | 85.4 | 86.8 |
| DANN[27] | 85.3 | 87.7 | 86.5 | 87.7 | 97.5 | 98.3 | 99.5 | 100.0 | 75.7 | 76.2 | 74.9 | 75.6 | 86.6 | 87.6 |
| OpenMax[145] | 87.4 | 87.5 | 87.1 | 88.4 | 96.1 | 96.2 | 98.4 | 98.5 | 83.4 | 82.1 | 82.8 | 82.8 | 89.0 | 89.3 |
| ATI-$\lambda$[110] | 87.4 | 88.9 | 84.3 | 86.6 | 93.6 | 95.3 | 96.5 | 98.7 | 78.0 | 79.6 | 80.4 | 81.4 | 86.7 | 88.4 |
| OSBP[111] | 86.5 | 87.6 | 88.6 | 89.2 | 97.0 | 96.5 | 97.9 | 98.7 | 88.9 | 90.6 | 85.8 | 84.9 | 90.8 | 91.3 |
| STA[105] | 89.5 | 92.1 | 93.7 | 96.1 | 97.5 | 96.5 | 99.5 | 99.6 | 89.1 | 93.5 | 87.9 | 87.4 | 92.9 | 94.1 |

OS: mean per-class accuracy over all classes, with all unknown-class samples counted as a single class. OS\*: mean per-class accuracy over the known classes only.
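The following is a minimal sketch of how the OS and OS\* columns of Table 7 are commonly computed from predictions. It makes three assumptions: class labels are integers, one label is reserved for every target sample of a class absent from the source, and the model outputs that same label when it rejects a sample as unknown. The function name and signature are hypothetical.

```python
from collections import Counter
from typing import Sequence, Tuple


def open_set_accuracies(
    y_true: Sequence[int], y_pred: Sequence[int], unknown: int
) -> Tuple[float, float]:
    """Return (OS, OS*) in percent.

    OS averages per-class accuracy over the known classes plus the single
    `unknown` class; OS* averages over the known classes only.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    total = Counter(y_true)  # number of samples per true class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    per_class = {c: correct[c] / n for c, n in total.items()}
    known = [acc for c, acc in per_class.items() if c != unknown]
    if not known:
        raise ValueError("at least one known class is required")
    os_score = 100.0 * sum(per_class.values()) / len(per_class)
    os_star = 100.0 * sum(known) / len(known)
    return os_score, os_star
```

Both metrics average per-class accuracies rather than per-sample accuracies, so a large unknown class cannot dominate the score. OS\* also ignores how well the unknown samples are rejected.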

Table 8 Accuracy of universal domain adaptation and other methods on the OfficeHome dataset (%)

| Method | A→C | A→P | A→R | C→A | C→P | C→R | P→A | P→C | P→R | R→A | R→C | R→P | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ResNet[26] | 59.4 | 76.6 | 87.5 | 69.9 | 71.1 | 81.7 | 73.7 | 56.3 | 86.1 | 78.7 | 59.2 | 78.6 | 73.2 |
| DANN[27] | 56.2 | 81.7 | 86.9 | 68.7 | 73.4 | 83.8 | 69.9 | 56.8 | 85.8 | 79.4 | 57.3 | 78.3 | 73.2 |
| RTN[39] | 50.5 | 77.8 | 86.9 | 65.1 | 73.4 | 85.1 | 67.9 | 45.2 | 85.5 | 79.2 | 55.6 | 78.8 | 70.9 |
| IWAN[109] | 52.6 | 81.4 | 86.5 | 70.6 | 71.0 | 85.3 | 74.9 | 57.3 | 85.1 | 77.5 | 59.7 | 78.9 | 73.4 |
| PADA[107] | 39.6 | 69.4 | 76.3 | 62.6 | 67.4 | 77.5 | 48.4 | 35.8 | 79.6 | 75.9 | 44.5 | 78.1 | 62.9 |
| ATI-$\lambda$[110] | 52.9 | 80.4 | 85.9 | 71.1 | 72.4 | 84.4 | 74.3 | 57.8 | 85.6 | 76.1 | 60.2 | 78.4 | 73.3 |
| OSBP[111] | 47.8 | 60.9 | 76.8 | 59.2 | 61.6 | 74.3 | 61.7 | 44.5 | 79.3 | 70.6 | 55.0 | 75.2 | 63.9 |
| UAN[106] | 63.0 | 82.8 | 87.9 | 76.9 | 78.7 | 85.4 | 78.2 | 58.6 | 86.8 | 83.4 | 63.2 | 79.4 | 77.0 |

Table 9 Accuracy of AMEAN and other methods on the Office31 dataset (%)

Table 10 Accuracy of DADA and other methods on the Office-Caltech10 dataset (%)

| Method | A→{C, D, W} | C→{A, D, W} | D→{A, C, W} | W→{A, C, D} | Avg |
|---|---|---|---|---|---|
| ResNet[26] | 90.5 | 94.3 | 88.7 | 82.5 | 89.0 |
| MCD[28] | 91.7 | 95.3 | 89.5 | 84.3 | 90.2 |
| DANN[27] | 91.5 | 94.3 | 90.5 | 86.3 | 90.6 |
| DADA[4] | 92.0 | 95.1 | 91.3 | 93.1 | 92.9 |

Table 11 Accuracy of domain generalization methods on the MNIST dataset (%)

| Source domains | Target domain | DAE | DICA | D-MTAE | MMD-AAE |
|---|---|---|---|---|---|
| $M_{15}, M_{30}, M_{45}, M_{60}, M_{75}$ | $M_{0}$ | 76.9 | 70.3 | 82.5 | 83.7 |
| $M_{0}, M_{30}, M_{45}, M_{60}, M_{75}$ | $M_{15}$ | 93.2 | 88.9 | 96.3 | 96.9 |
| $M_{0}, M_{15}, M_{45}, M_{60}, M_{75}$ | $M_{30}$ | 91.3 | 90.4 | 93.4 | 95.7 |
| $M_{0}, M_{15}, M_{30}, M_{60}, M_{75}$ | $M_{45}$ | 81.1 | 80.1 | 78.6 | 85.2 |
| $M_{0}, M_{15}, M_{30}, M_{45}, M_{75}$ | $M_{60}$ | 92.8 | 88.5 | 94.2 | 95.9 |
| $M_{0}, M_{15}, M_{30}, M_{45}, M_{60}$ | $M_{75}$ | 76.5 | 71.3 | 80.5 | 81.2 |
| Average | | 85.3 | 81.6 | 87.6 | 89.8 |
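Each row of Table 11 follows a leave-one-domain-out protocol. One domain is held out as the unseen target, and the other five serve as source domains. Here $M_{\theta}$ is read as MNIST rotated by θ degrees. The helper below enumerates these splits. It is a hypothetical illustration rather than code from the cited methods, and the string names such as `M15` simply mirror the table notation.

```python
from typing import List, Sequence, Tuple


def leave_one_domain_out(domains: Sequence[str]) -> List[Tuple[List[str], str]]:
    """Pair each held-out target domain with all remaining source domains."""
    return [([d for d in domains if d != target], target) for target in domains]


# Rotated-MNIST domains named as in the table: M0, M15, ..., M75.
ROTATED_MNIST = [f"M{angle}" for angle in range(0, 90, 15)]

for sources, target in leave_one_domain_out(ROTATED_MNIST):
    print(", ".join(sources), "->", target)
```

The first printed line is `M15, M30, M45, M60, M75 -> M0`, which corresponds to the first row of the table. A model is trained once per split and evaluated only on the held-out target, and the Average row is the mean over the six splits.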

[1] Cao Z J, Long M S, Wang J M, Jordan M I. Partial transfer learning with selective adversarial networks. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 2724−2732

[2] Pei Z Y, Cao Z J, Long M S, Wang J M. Multi-adversarial domain adaptation. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence. New Orleans, USA: AAAI, 2018. 3934−3941

[3] Gholami B, Sahu P, Rudovic O, Bousmalis K, Pavlovic V. Unsupervised multi-target domain adaptation: An information theoretic approach. IEEE Transactions on Image Processing, 2020, 29: 3993−4002 doi: 10.1109/TIP.2019.2963389

[4] Peng X C, Huang Z J, Sun X M, Saenko K. Domain agnostic learning with disentangled representations. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: ICML, 2019. 5102−5112

[5] Bousmalis K, Trigeorgis G, Silberman N, Krishnan D, Erhan D. Domain separation networks. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: NIPS, 2016. 343−351

[6] Pan S J, Yang Q. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 2010, 22(10): 1345−1359 doi: 10.1109/TKDE.2009.191

[7] Shao L, Zhu F, Li X L. Transfer learning for visual categorization: A survey. IEEE Transactions on Neural Networks and Learning Systems, 2015, 26(5): 1019−1034 doi: 10.1109/TNNLS.2014.2330900

[8] Liang H, Fu W L, Yi F J. A survey of recent advances in transfer learning. In: Proceedings of the 2019 IEEE 19th International Conference on Communication Technology. Xi'an, China: IEEE, 2019. 1516−1523

[9] Chu C H, Wang R. A survey of domain adaptation for neural machine translation. In: Proceedings of the 27th International Conference on Computational Linguistics. Santa Fe, New Mexico, USA: COLING, 2018. 1304−1319

[10] Ramponi A, Plank B. Neural unsupervised domain adaptation in NLP: A survey. In: Proceedings of the 28th International Conference on Computational Linguistics. Barcelona, Spain: COLING, 2020. 6838−6855

[11] Day O, Khoshgoftaar T M. A survey on heterogeneous transfer learning. Journal of Big Data, 2017, 4: Article No. 29 doi: 10.1186/s40537-017-0089-0

[12] Patel V M, Gopalan R, Li R N, Chellappa R. Visual domain adaptation: A survey of recent advances. IEEE Signal Processing Magazine, 2015, 32(3): 53−69 doi: 10.1109/MSP.2014.2347059

[13] Weiss K, Khoshgoftaar T M, Wang D D. A survey of transfer learning. Journal of Big Data, 2016, 3: Article No. 9 doi: 10.1186/s40537-016-0043-6

[14] Csurka G. Domain adaptation for visual applications: A comprehensive survey. arXiv: 1702.05374, 2017.

[15] Wang M, Deng W H. Deep visual domain adaptation: A survey. Neurocomputing, 2018, 312: 135−153 doi: 10.1016/j.neucom.2018.05.083

[16] Tan C Q, Sun F C, Kong T, Zhang W C, Yang C, Liu C F. A survey on deep transfer learning. In: Proceedings of the 27th International Conference on Artificial Neural Networks. Rhodes, Greece: Springer, 2018. 270−279

[17] Wilson G, Cook D J. A survey of unsupervised deep domain adaptation. ACM Transactions on Intelligent Systems and Technology, 2020, 11(5): Article No. 51

[18] Zhuang F Z, Qi Z Y, Duan K Y, Xi D B, Zhu Y C, Zhu H S, et al. A comprehensive survey on transfer learning. Proceedings of the IEEE, 2021, 109(1): 43−76 doi: 10.1109/JPROC.2020.3004555

[19] Ben-David S, Blitzer J, Crammer K, Kulesza A, Pereira F, Vaughan J W. A theory of learning from different domains. Machine Learning, 2010, 79(1-2): 151−175 doi: 10.1007/s10994-009-5152-4

[20] Long M S, Cao Y, Wang J M, Jordan M I. Learning transferable features with deep adaptation networks. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: ICML, 2015. 97−105

[21] Zhu J Y, Park T, Isola P, Efros A A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 2242−2251

[22] Long M S, Cao Z J, Wang J M, Jordan M I. Conditional adversarial domain adaptation. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada: NeurIPS, 2018. 1647−1657

[23] Long M S, Zhu H, Wang J M, Jordan M I. Deep transfer learning with joint adaptation networks. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: ICML, 2017. 2208−2217

[24] Zhao H, Des Combes R T, Zhang K, Gordon G J. On learning invariant representations for domain adaptation. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: ICML, 2019. 7523−7532

[25] Liu H, Long M S, Wang J M, Jordan M I. Transferable adversarial training: A general approach to adapting deep classifiers. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: ICML, 2019. 4013−4022

[26] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 770−778

[27] Ganin Y, Lempitsky V S. Unsupervised domain adaptation by backpropagation. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: ICML, 2015. 1180−1189

[28] Saito K, Watanabe K, Ushiku Y, Harada T. Maximum classifier discrepancy for unsupervised domain adaptation. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 3723−3732

[29] Wang Z R, Dai Z H, Póczos B, Carbonell J. Characterizing and avoiding negative transfer. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 11285−11294

[30] Chen C Q, Xie W P, Huang W B, Rong Y, Ding X H, Xu T Y, et al. Progressive feature alignment for unsupervised domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 627−636

[31] Tan B, Song Y Q, Zhong E H, Yang Q. Transitive transfer learning. In: Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Sydney, Australia: KDD, 2015. 1155−1164

[32] Tan B, Zhang Y, Pan S J, Yang Q. Distant domain transfer learning. In: Proceedings of the 31st AAAI Conference on Artificial Intelligence. San Francisco, USA: AAAI, 2017. 2604−2610

[33] Yosinski J, Clune J, Bengio Y, Lipson H. How transferable are features in deep neural networks? In: Proceedings of the 27th International Conference on Neural Information Processing Systems. Montreal, Canada: NIPS, 2014. 3320−3328

[34] Ghifary M, Kleijn W B, Zhang M J. Domain adaptive neural networks for object recognition. In: Proceedings of the 13th Pacific Rim International Conference on Artificial Intelligence. Gold Coast, Australia: Springer, 2014. 898−904

[35] Tzeng E, Hoffman J, Zhang N, Saenko K, Darrell T. Deep domain confusion: Maximizing for domain invariance. arXiv: 1412.3474, 2014.

[36] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems. Lake Tahoe, USA: NIPS, 2012. 1097−1105

[37] Zhang X, Yu F X, Chang S F, Wang S J. Deep transfer network: Unsupervised domain adaptation. arXiv: 1503.00591, 2015.

[38] Yan H L, Ding Y K, Li P H, Wang Q L, Xu Y, Zuo W M. Mind the class weight bias: Weighted maximum mean discrepancy for unsupervised domain adaptation. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 945−954

[39] Long M S, Zhu H, Wang J M, Jordan M I. Unsupervised domain adaptation with residual transfer networks. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: NIPS, 2016. 136−144

[40] Gao Jun, Huang Li-Li, Sun Chang-Yin. A local weighted mean based domain adaptation learning framework. Acta Automatica Sinica, 2013, 39(7): 1037−1052

[41] Sun B C, Feng J S, Saenko K. Return of frustratingly easy domain adaptation. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence. Phoenix, USA: AAAI, 2016. 2058−2065

[42] Sun B C, Saenko K. Deep CORAL: Correlation alignment for deep domain adaptation. In: Proceedings of the 2016 European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 443−450

[43] Chen C, Chen Z H, Jiang B Y, Jin X Y. Joint domain alignment and discriminative feature learning for unsupervised deep domain adaptation. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 3296−3303

[44] Li Y J, Swersky K, Zemel R S. Generative moment matching networks. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: ICML, 2015. 1718−1727

[45] Zellinger W, Grubinger T, Lughofer E, Natschläger T, Saminger-Platz S. Central moment discrepancy (CMD) for domain-invariant representation learning. In: Proceedings of the 5th International Conference on Learning Representations. Toulon, France: ICLR, 2017.

[46] Courty N, Flamary R, Tuia D, Rakotomamonjy A. Optimal transport for domain adaptation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(9): 1853−1865 doi: 10.1109/TPAMI.2016.2615921

[47] Courty N, Flamary R, Habrard A, Rakotomamonjy A. Joint distribution optimal transportation for domain adaptation. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: NIPS, 2017. 3733−3742

[48] Damodaran B B, Kellenberger B, Flamary R, Tuia D, Courty N. DeepJDOT: Deep joint distribution optimal transport for unsupervised domain adaptation. In: Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer, 2018. 467−483

[49] Lee C Y, Batra T, Baig M H, Ulbricht D. Sliced Wasserstein discrepancy for unsupervised domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 10277−10287

[50] Shen J, Qu Y R, Zhang W N, Yu Y. Wasserstein distance guided representation learning for domain adaptation. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence. New Orleans, USA: AAAI, 2018. 4058−4065

[51] Arjovsky M, Chintala S, Bottou L. Wasserstein generative adversarial networks. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: ICML, 2017. 214−223

[52] Herath S, Harandi M, Fernando B, Nock R. Min-max statistical alignment for transfer learning. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 9280−9289

[53] Rozantsev A, Salzmann M, Fua P. Beyond sharing weights for deep domain adaptation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2019, 41(4): 801−814 doi: 10.1109/TPAMI.2018.2814042

[54] Shu X B, Qi G J, Tang J H, Wang J D. Weakly-shared deep transfer networks for heterogeneous-domain knowledge propagation. In: Proceedings of the 23rd ACM International Conference on Multimedia. Brisbane, Australia: ACM, 2015. 35−44

[55] Xu Su-Hui, Mu Xiao-Dong, Chai Dong, Luo Chang. Domain adaption algorithm with ELM parameter transfer. Acta Automatica Sinica, 2018, 44(2): 311−317

[56] Ioffe S, Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: JMLR.org, 2015. 448−456

[57] Chang W G, You T, Seo S, Kwak S, Han B. Domain-specific batch normalization for unsupervised domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 7346−7354

[58] Roy S, Siarohin A, Sangineto E, Bulò S R, Sebe N, Ricci E. Unsupervised domain adaptation using feature-whitening and consensus loss. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 9463−9472

[59] Li Y H, Wang N Y, Shi J P, Liu J Y, Hou X D. Revisiting batch normalization for practical domain adaptation. In: Proceedings of the 5th International Conference on Learning Representations. Toulon, France: OpenReview.net, 2017.

[60] Ulyanov D, Vedaldi A, Lempitsky V. Improved texture networks: Maximizing quality and diversity in feed-forward stylization and texture synthesis. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 4105−4113

[61] Carlucci F M, Porzi L, Caputo B, Ricci E, Bulò S R. AutoDIAL: Automatic domain alignment layers. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 5077−5085

[62] Xiao T, Li H S, Ouyang W L, Wang X G. Learning deep feature representations with domain guided dropout for person re-identification. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. 1249−1258

[63] Wu S, Zhong J, Cao W M, Li R, Yu Z W, Wong H S. Improving domain-specific classification by collaborative learning with adaptation networks. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 5450−5457

[64] Zhang Y B, Tang H, Jia K, Tan M K. Domain-symmetric networks for adversarial domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 5026−5035

[65] Gopalan R, Li R N, Chellappa R. Domain adaptation for object recognition: An unsupervised approach. In: Proceedings of the 2011 International Conference on Computer Vision. Barcelona, Spain: IEEE, 2011. 999−1006

[66] Gong B Q, Shi Y, Sha F, Grauman K. Geodesic flow kernel for unsupervised domain adaptation. In: Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition. Providence, USA: IEEE, 2012. 2066−2073

[67] Chopra S, Balakrishnan S, Gopalan R. DLID: Deep learning for domain adaptation by interpolating between domains. International Conference on Machine Learning Workshop on Challenges in Representation Learning, 2013, 2(6

[68] Gong R, Li W, Chen Y H, van Gool L. DLOW: Domain flow for adaptation and generalization. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2472−2481

[69] Xu X, Zhou X, Venkatesan R, Swaminathan G, Majumder O. d-SNE: Domain adaptation using stochastic neighborhood embedding. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2492−2501

[70] Yang B Y, Yuen P C. Cross-domain visual representations via unsupervised graph alignment. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 5613−5620

[71] Ma X H, Zhang T Z, Xu C S. GCAN: Graph convolutional adversarial network for unsupervised domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 8258−8268

[72] Yang Z L, Zhao J J, Dhingra B, He K M, Cohen W W, Salakhutdinov R, et al. GLoMo: Unsupervised learning of transferable relational graphs. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada: NeurIPS, 2018. 8964−8975

[73] Goodfellow I J, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial nets. In: Proceedings of the 27th International Conference on Neural Information Processing Systems. Montreal, Canada: NIPS, 2014. 2672−2680

[74] Chen X Y, Wang S N, Long M S, Wang J M. Transferability vs. discriminability: Batch spectral penalization for adversarial domain adaptation. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 1081−1090

[75] Tzeng E, Hoffman J, Saenko K, Darrell T. Adversarial discriminative domain adaptation. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 2962−2971

[76] Volpi R, Morerio P, Savarese S, Murino V. Adversarial feature augmentation for unsupervised domain adaptation. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 5495−5504

[77] Vu T H, Jain H, Bucher M, Cord M, Pérez P. ADVENT: Adversarial entropy minimization for domain adaptation in semantic segmentation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2512−2521

[78] Tzeng E, Hoffman J, Darrell T, Saenko K. Simultaneous deep transfer across domains and tasks. In: Proceedings of the 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 4068−4076

[79] Kurmi V K, Kumar S, Namboodiri V P. Attending to discriminative certainty for domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 491−500

[80] Wang X M, Li L, Ye W R, Long M S, Wang J M. Transferable attention for domain adaptation. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 5345−5352

[81] Luo Y W, Zheng L, Guan T, Yu J Q, Yang Y. Taking a closer look at domain shift: Category-level adversaries for semantics consistent domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2502−2511

[82] Springenberg J T, Dosovitskiy A, Brox T, Riedmiller M A. Striving for simplicity: The all convolutional net. In: Proceedings of the 3rd International Conference on Learning Representations. San Diego, USA: ICLR, 2015.

[83] Selvaraju R R, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 618−626

[84] Chattopadhay A, Sarkar A, Howlader P, Balasubramanian V N. Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks. In: Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision. Lake Tahoe, USA: IEEE, 2018. 839−847

[85] Bengio Y. Learning deep architectures for AI. Foundations and Trends® in Machine Learning, 2009, 2(1): 1−127

[86] Glorot X, Bordes A, Bengio Y. Domain adaptation for large-scale sentiment classification: A deep learning approach. In: Proceedings of the 28th International Conference on Machine Learning. Bellevue, USA: Omnipress, 2011. 513−520

[87] Chen M M, Xu Z E, Weinberger K Q, Sha F. Marginalized denoising autoencoders for domain adaptation. In: Proceedings of the 29th International Conference on Machine Learning. Edinburgh, UK: Omnipress, 2012.

[88] Ghifary M, Kleijn W B, Zhang M J, Balduzzi D, Li W. Deep reconstruction-classification networks for unsupervised domain adaptation. In: Proceedings of the 14th European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 597−613

[89] Zhuang F Z, Cheng X H, Luo P, Pan S J, He Q. Supervised representation learning: Transfer learning with deep autoencoders. In: Proceedings of the 24th International Joint Conference on Artificial Intelligence. Buenos Aires, Argentina: AAAI, 2015. 4119−4125

[90] Sun R Q, Zhu X G, Wu C R, Huang C, Shi J P, Ma L Z. Not all areas are equal: Transfer learning for semantic segmentation via hierarchical region selection. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 4355−4364

[91] Tsai J C, Chien J T. Adversarial domain separation and adaptation. In: Proceedings of the 27th International Workshop on Machine Learning for Signal Processing. Tokyo, Japan: IEEE, 2017. 1−6

[92] Zhu P K, Wang H X, Saligrama V. Learning classifiers for target domain with limited or no labels. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 7643−7653

[93] Zheng H L, Fu J L, Mei T, Luo J B. Learning multi-attention convolutional neural network for fine-grained image recognition. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 5219−5227

[94] Zhao A, Ding M Y, Guan J C, Lu Z W, Xiang T, Wen J R. Domain-invariant projection learning for zero-shot recognition. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada: NeurIPS, 2018. 1027−1038

[95] Liu M Y, Tuzel O. Coupled generative adversarial networks. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: NIPS, 2016. 469−477

[96] He D, Xia Y C, Qin T, Wang L W, Yu N H, Liu T Y, et al. Dual learning for machine translation. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Barcelona, Spain: NIPS, 2016. 820−828

[97] Yi Z, Zhang H, Tan P, Gong M L. DualGAN: Unsupervised dual learning for image-to-image translation. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 2868−2876

[98] Kim T, Cha M, Kim H, Lee J K, Kim J. Learning to discover cross-domain relations with generative adversarial networks. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: PMLR, 2017. 1857−1865

[99] Sankaranarayanan S, Balaji Y, Castillo C D, Chellappa R. Generate to adapt: Aligning domains using generative adversarial networks. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 8503−8512

[100] Yoo D, Kim N, Park S, Paek A S, Kweon I S. Pixel-level domain transfer. In: Proceedings of the 14th European Conference on Computer Vision. Amsterdam, The Netherlands: Springer, 2016. 517−532

[101] Chen Y C, Lin Y Y, Yang M H, Huang J B. CrDoCo: Pixel-level domain transfer with cross-domain consistency. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 1791−1800

[102] Shrivastava A, Pfister T, Tuzel O, Susskind J, Wang W D, Webb R. Learning from simulated and unsupervised images through adversarial training. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 2242−2251

[103] Li Y S, Yuan L, Vasconcelos N. Bidirectional learning for domain adaptation of semantic segmentation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 6929−6938

[104] Bousmalis K, Silberman N, Dohan D, Erhan D, Krishnan D. Unsupervised pixel-level domain adaptation with generative adversarial networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 95−104

[105] Liu H, Cao Z J, Long M S, Wang J M, Yang Q. Separate to adapt: Open set domain adaptation via progressive separation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2922−2931

[106] You K C, Long M S, Cao Z J, Wang J M, Jordan M I. Universal domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2715−2724

[107] Cao Z J, Ma L J, Long M S, Wang J M. Partial adversarial domain adaptation. In: Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer, 2018. 139−155

[108] Cao Z J, You K C, Long M S, Wang J M, Yang Q. Learning to transfer examples for partial domain adaptation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2980−2989

[109] Zhang J, Ding Z W, Li W Q, Ogunbona P. Importance weighted adversarial nets for partial domain adaptation. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 8156−8164

[110] Busto P P, Gall J. Open set domain adaptation. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 754−763

[111] Saito K, Yamamoto S, Ushiku Y, Harada T. Open set domain adaptation by backpropagation. In: Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer, 2018. 156−171

[112] Li Y Y, Yang Y X, Zhou W, Hospedales T M. Feature-critic networks for heterogeneous domain generalization. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, USA: PMLR, 2019. 3915−3924

[113] Chen Z L, Zhuang J Y, Liang X D, Lin L. Blending-target domain adaptation by adversarial meta-adaptation networks. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2243−2252

[114] Shankar S, Piratla V, Chakrabarti S, Chaudhuri S, Jyothi P, Sarawagi S. Generalizing across domains via cross-gradient training. In: Proceedings of the 6th International Conference on Learning Representations. Vancouver, Canada: OpenReview.net, 2018.

[115] Volpi R, Namkoong H, Sener O, Duchi J, Murino V, Savarese S. Generalizing to unseen domains via adversarial data augmentation. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada: NeurIPS, 2018. 5339−5349

[116] Muandet K, Balduzzi D, Schölkopf B. Domain generalization via invariant feature representation. In: Proceedings of the 30th International Conference on Machine Learning. Atlanta, USA: JMLR.org, 2013. 10−18

[117] Li D, Yang Y X, Song Y Z, Hospedales T M. Learning to generalize: Meta-learning for domain generalization. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence. New Orleans, USA: AAAI, 2018. 3490−3497

[118] Li H L, Pan S J, Wang S Q, Kot A C. Domain generalization with adversarial feature learning. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 5400−5409

[119] Carlucci F M, D'Innocente A, Bucci S, Caputo B, Tommasi T. Domain generalization by solving jigsaw puzzles. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 2224−2233

[120] Ghifary M, Kleijn W B, Zhang M J, Balduzzi D. Domain generalization for object recognition with multi-task autoencoders. In: Proceedings of the 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 2551−2559

[121] Xu Z, Li W, Niu L, Xu D. Exploiting low-rank structure from latent domains for domain generalization. In: Proceedings of the 13th European Conference on Computer Vision. Zurich, Switzerland: Springer, 2014. 628−643

[122] Niu L, Li W, Xu D. Multi-view domain generalization for visual recognition. In: Proceedings of the 2015 IEEE International Conference on Computer Vision. Santiago, Chile: IEEE, 2015. 4193−4201

[123] Niu L, Li W, Xu D. Visual recognition by learning from web data: A weakly supervised domain generalization approach. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition.
Boston, USA: IEEE, 2015. 2774−2783 [124] Chen Y H, Li W, Sakaridis C, Dai D X, Van Gool L. Domain adaptive faster R-CNN for object detection in the wild. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 3339−3348 [125] Inoue N, Furuta R, Yamasaki T, Aizawa K. Cross-domain weakly-supervised object detection through progressive domain adaptation. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 5001−5009 [126] Xu Y H, Du B, Zhang L F, Zhang Q, Wang G L, Zhang L P. Self-ensembling attention networks: Addressing domain shift for semantic segmentation. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 5581−5588 [127] Chen C, Dou Q, Chen H, Qin J, Heng P A. Synergistic image and feature adaptation: Towards cross-modality domain adaptation for medical image segmentation. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 865−872 [128] Agresti G, Schaefer H, Sartor P, Zanuttigh P. Unsupervised domain adaptation for ToF data denoising with adversarial learning. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, USA: IEEE, 2019. 5579−5586 [129] Yoo J, Hong Y J, Noh Y, Yoon S. Domain adaptation using adversarial learning for autonomous navigation. arXiv: 1712.03742, 2017. [130] Choi D, An T H, Ahn K, Choi J. Driving experience transfer method for end-to-end control of self-driving cars. arXiv: 1809.01822, 2018. [131] Bąk S, Carr P, Lalonde J F. Domain adaptation through synthesis for unsupervised person re-identification. In: Proceedings of the 15th European Conference on Computer Vision. Munich, Germany: Springer, 2018. 193−209 [132] Deng W J, Zheng L, Ye Q X, Kang G L, Yang Y, Jiao J B. 
Image-image domain adaptation with preserved self-similarity and domain-dissimilarity for person re-identification. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 994−1003 [133] Li Y J, Yang F E, Liu Y C, Yeh Y Y, Du X F, Wang Y C F. Adaptation and re-identification network: An unsupervised deep transfer learning approach to person re-identification. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. Salt Lake City, USA: IEEE, 2018. 172−178 [134] 吴彦丞, 陈鸿昶, 李邵梅, 高超. 基于行人属性先验分布的行人再识别. 自动化学报, 2019, 45(5): 953−964Wu Yan-Cheng, Chen Hong-Chang, Li Shao-Mei, Gao Chao. Person re-identification using attribute priori distribution. Acta Automatica Sinica, 2019, 45(5): 953−964 [135] Côté-Allard U, Fall C L, Drouin A, Campeau-Lecours A, Gosselin C, Glette K, et al. Deep learning for electromyographic hand gesture signal classification using transfer learning. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2019, 27(4): 760−771 doi: 10.1109/TNSRE.2019.2896269 [136] Ren C X, Dai D Q, Huang K K, Lai Z R. Transfer learning of structured representation for face recognition. IEEE Transactions on Image Processing, 2014, 23(12): 5440−5454 doi: 10.1109/TIP.2014.2365725 [137] Shin H C, Roth H R, Gao M C, Lu L, Xu Z Y, Nogues I, et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Transactions on Medical Imaging, 2016, 35(5): 1285−1298 doi: 10.1109/TMI.2016.2528162 [138] Byra M, Wu M, Zhang X D, Jang H, Ma Y J, Chang E Y, et al. Knee menisci segmentation and relaxometry of 3D ultrashort echo time (UTE) cones MR imaging using attention U-Net with transfer learning. arXiv: 1908.01594, 2019. [139] Cao H L, Bernard S, Heutte L, Sabourin R. 
Improve the performance of transfer learning without fine-tuning using dissimilarity-based multi-view learning for breast cancer histology images. In: Proceedings of the 15th International Conference Image Analysis and Recognition. Póvoa de Varzim, Portugal: Springer, 2018. 779−787 [140] Kachuee M, Fazeli S, Sarrafzadeh M. ECG heartbeat classification: A deep transferable representation. In: Proceedings of the 2018 IEEE International Conference on Healthcare Informatics. New York, USA: IEEE, 2018. 443−444 [141] 贺敏, 汤健, 郭旭琦, 阎高伟. 基于流形正则化域适应随机权神经网络的湿式球磨机负荷参数软测量. 自动化学报, 2019, 45(2): 398−406He Min, Tang Jian, Guo Xu-Qi, Yan Gao-Wei. Soft sensor for ball mill load using damrrwnn model. Acta Automatica Sinica, 2019, 45(2): 398−406 [142] Zhao M M, Yue S C, Katabi D, Jaakkola T S, Bianchi M T. Learning sleep stages from radio signals: A conditional adversarial architecture. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: PMLR, 2017. 4100−4109 [143] Saenko K, Kulis B, Fritz M, Darrell T. Adapting visual category models to new domains. In: Proceedings of the 11th European Conference on Computer Vision. Crete, Greece: Springer. 2010. 213−226 [144] Venkateswara H, Eusebio J, Chakraborty S, Panchanathan S. Deep hashing network for unsupervised domain adaptation. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, USA: IEEE, 2017. 5385−5394 [145] Bendale A, Boult T E. Towards open set deep networks. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016. [146] Shu Y, Cao Z J, Long M S, Wang J M. Transferable curriculum for weakly-supervised domain adaptation. In: Proceedings of the 33rd AAAI Conference on Artificial Intelligence. Honolulu, USA: AAAI, 2019. 4951−4958 -
