
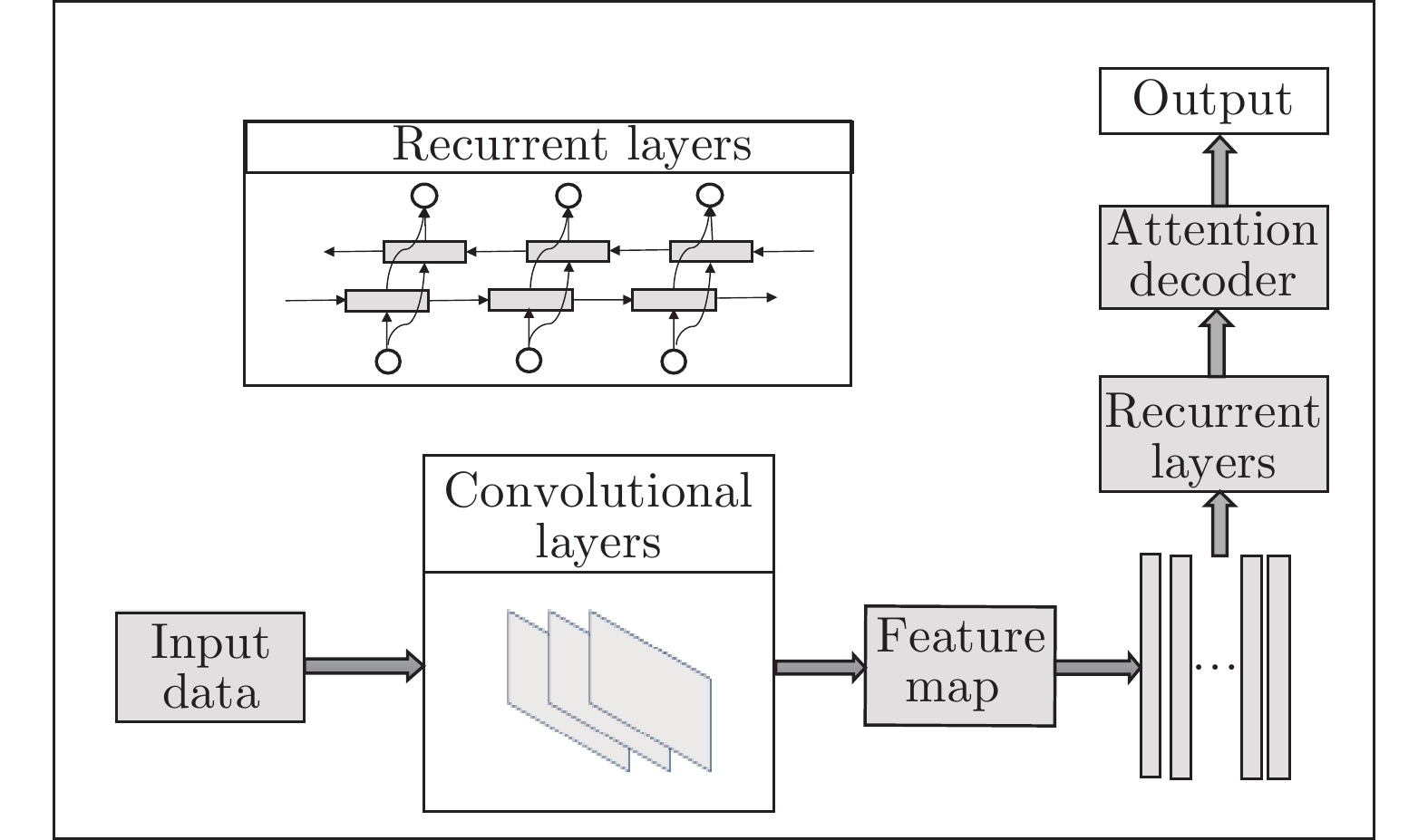

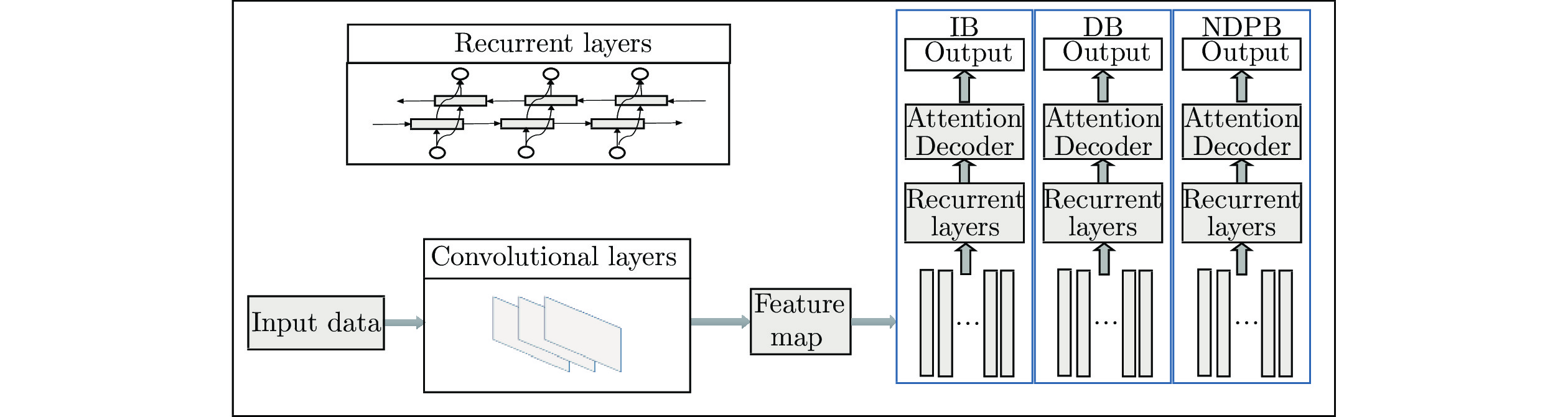

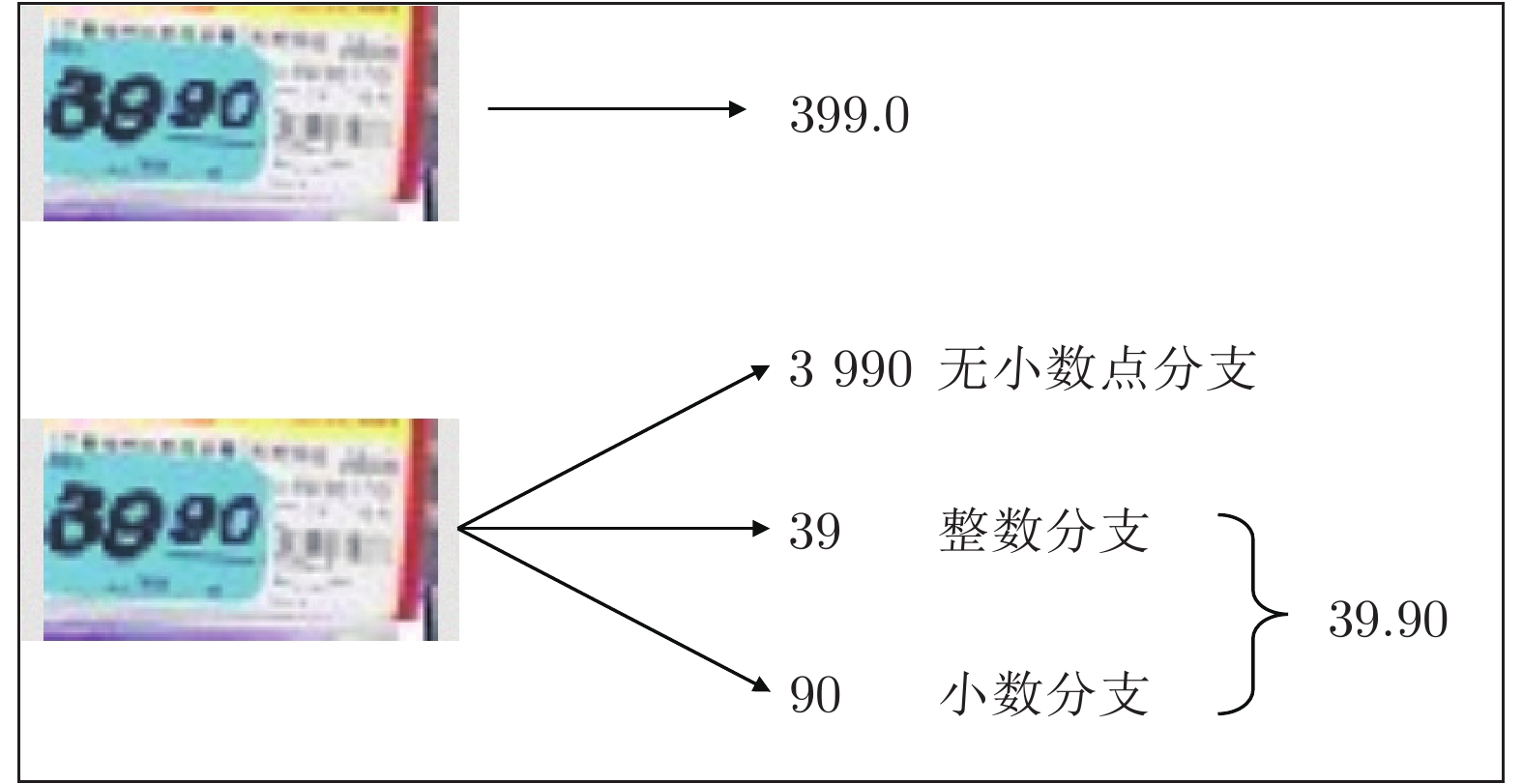

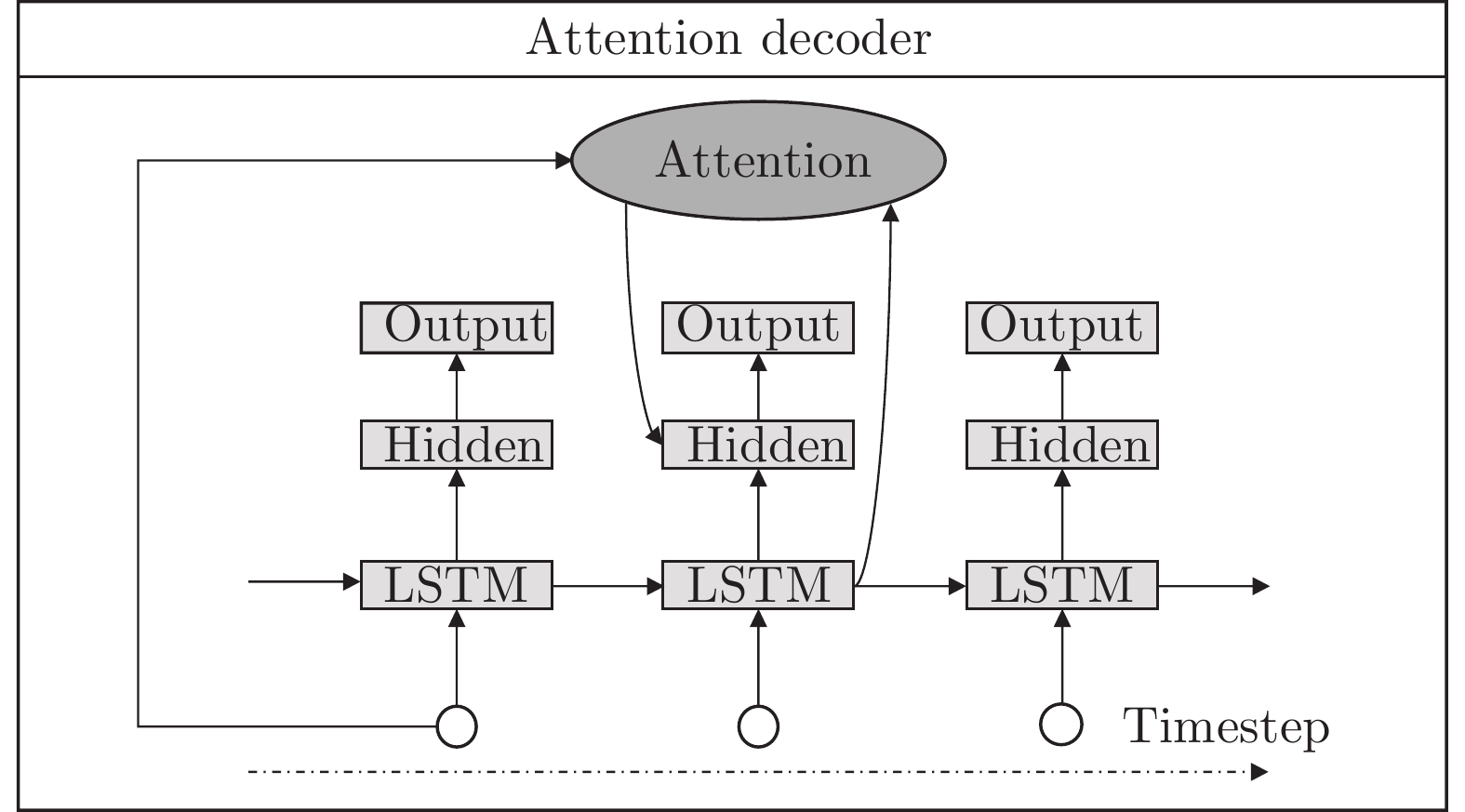

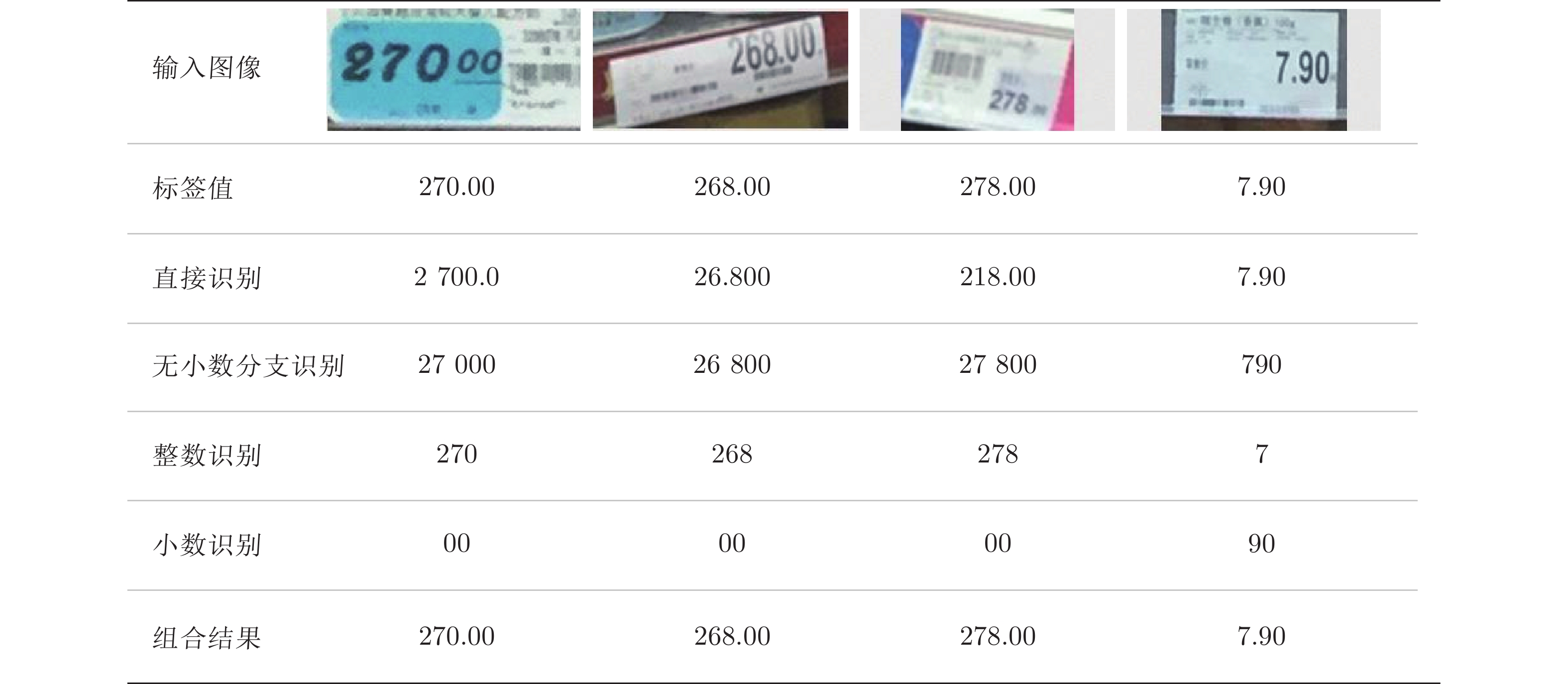

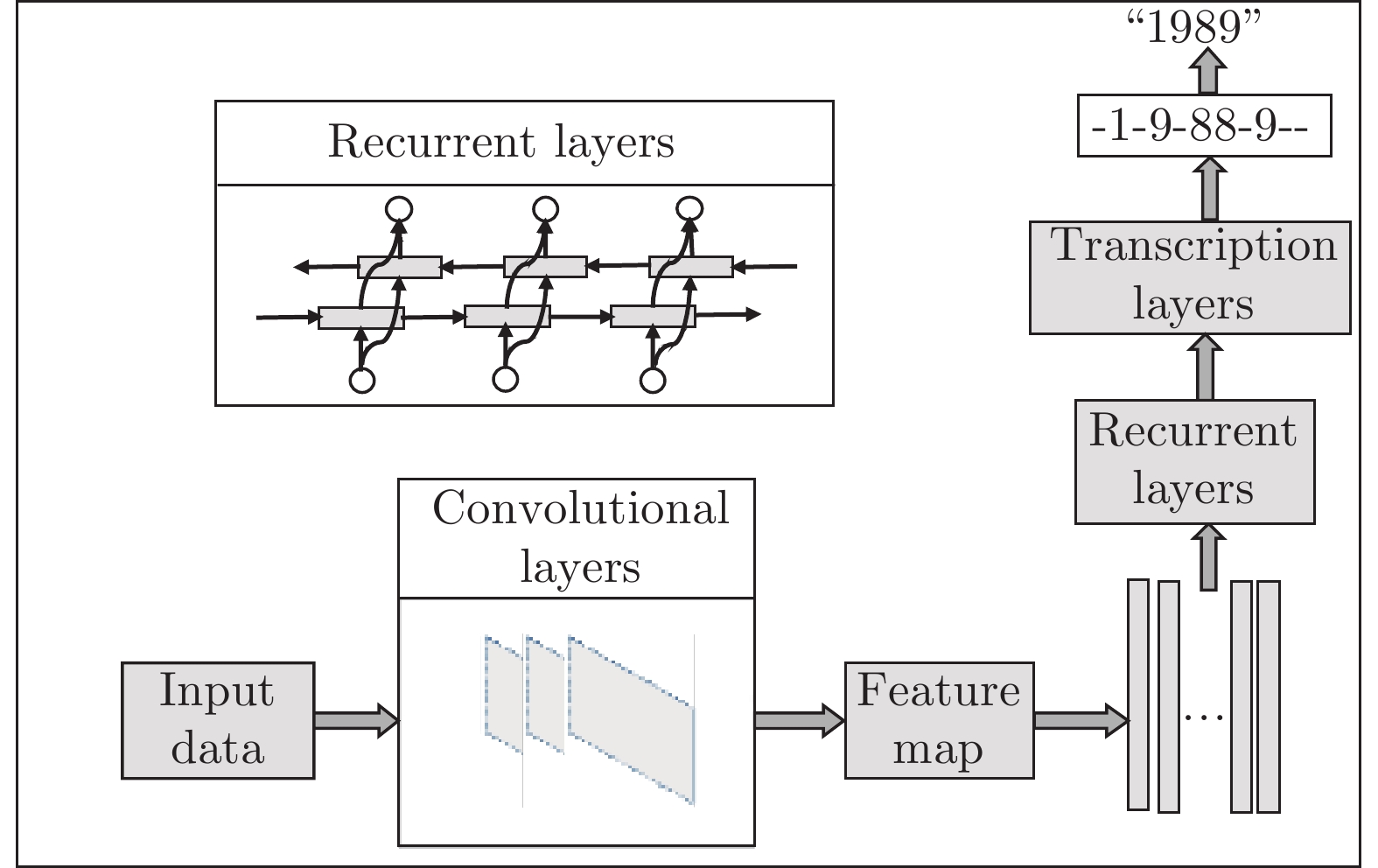

Abstract: To promote the development of smart new retail in offline scenarios, this paper studies price tag recognition. Price tags carry key sales information, and the goal is to improve their recognition accuracy and to solve the difficulty of locating the decimal point. A deep convolutional neural network extracts deep semantic features from the price tag image. The resulting feature maps are fed into a multi-task recurrent network layer for encoding, an attention mechanism in the decoding network then decodes the price digits, and finally the outputs of the multiple branches are integrated into the complete price. The proposed method effectively improves price tag recognition accuracy in offline retail scenarios and addresses domain-specific challenges such as locating the decimal point. In addition, to verify the generality of the method, comparative experiments were carried out on datasets from other scenarios, and the results also confirm its effectiveness.
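The abstract states that the outputs of several branches are integrated into the complete price, which is where the decimal point gets placed. The merging rule itself is not given here, so the following is only a minimal sketch under assumed inputs: a hypothetical integer-part branch, a hypothetical decimal-part branch, and an optional hypothetical branch that reads every digit while ignoring the decimal point. The function name `merge_price_branches` and its fallback rule are illustrative assumptions, not the authors' method.

```python
from typing import Optional


def merge_price_branches(int_digits: str, dec_digits: str,
                         full_digits: Optional[str] = None) -> str:
    """Merge hypothetical branch outputs into one price string.

    int_digits:  digits from an assumed integer-part branch, e.g. "12"
    dec_digits:  digits from an assumed decimal-part branch, e.g. "90"
                 (empty string if the tag shows no decimal part)
    full_digits: optional digits from an assumed branch that reads the whole
                 number while ignoring the decimal point, e.g. "1290"
    """
    # Every branch is assumed to emit digit characters only.
    for name, s in (("int_digits", int_digits),
                    ("dec_digits", dec_digits),
                    ("full_digits", full_digits or "")):
        if s and not s.isdigit():
            raise ValueError(f"{name} must contain only digits, got {s!r}")
    if not int_digits:
        raise ValueError("int_digits must not be empty")

    # If the full-number branch disagrees with integer + decimal, keep its
    # digit sequence and use the decimal branch only to decide how many
    # digits follow the decimal point.
    if (full_digits is not None
            and full_digits != int_digits + dec_digits
            and len(full_digits) > len(dec_digits)):
        split = len(full_digits) - len(dec_digits)
        int_digits, dec_digits = full_digits[:split], full_digits[split:]

    return f"{int_digits}.{dec_digits}" if dec_digits else int_digits
```

For example, `merge_price_branches("13", "90", "1290")` returns `"12.90"`: the assumed full-number branch overrides the disagreeing integer branch, while the decimal branch still fixes the position of the point.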


Table 1 Study of modules (%)

| Model | General-data | Hard-data |
|---|---|---|
| VGG-BiLSTM-CTC | 50.20 | 20.20 |
| VGG-BiLSTM-Attn | 61.20 | 38.60 |
| ResNet-BiLSTM-CTC | 55.60 | 28.80 |
| ResNet-BiLSTM-Attn | 68.10 | 41.40 |
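Table 1 compares CTC-based and attention-based decoders on VGG and ResNet backbones. To make the two decoding ideas concrete, here is a minimal pure-Python sketch of best-path (greedy) CTC decoding [6] and of a single dot-product attention step in the style of Luong et al. [3]. It is illustrative only: the blank symbol `-`, the function names, and the choice of dot-product scoring are assumptions, not the configuration used in the paper.

```python
import math
from typing import List, Optional, Sequence, Tuple

BLANK = "-"  # assumed CTC blank symbol for this illustration


def ctc_greedy_decode(frame_labels: Sequence[str], blank: str = BLANK) -> str:
    """Best-path CTC decoding: collapse repeated labels, then drop blanks."""
    out: List[str] = []
    prev: Optional[str] = None
    for label in frame_labels:
        # A label is emitted only when it differs from the previous frame
        # and is not the blank; a blank between two equal labels therefore
        # lets a repeated digit (e.g. the two 2s in "1229") survive.
        if label != prev and label != blank:
            out.append(label)
        prev = label
    return "".join(out)


def dot_attention(query: Sequence[float],
                  keys: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """One dot-product attention step over encoder feature vectors.

    Returns (weights, context): softmax-normalised query-key scores and the
    weighted sum of the encoder vectors (used as both keys and values here).
    """
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    m = max(scores)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [sum(w * key[d] for w, key in zip(weights, keys))
               for d in range(len(keys[0]))]
    return weights, context


if __name__ == "__main__":
    print(ctc_greedy_decode(["1", "1", "-", "2", "2", "-", "2", "9"]))  # 1229
    weights, context = dot_attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
    print([round(w, 3) for w in weights])
```

The CTC path emits each frame's label independently and needs the blank to separate repeated digits, whereas the attention step recomputes a weighted summary of all encoder features for every output symbol.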

Table 2 Results of the multi-task model (%)

| Model | General-data | Hard-data |
|---|---|---|
| Baseline[13] | 68.10 | 41.40 |
| NDPB&IB | 90.10 | 72.90 |
| NDPB&DB | 91.70 | 74.30 |
| IB&DB | 92.20 | 73.20 |
| NDPB&IB&DB | 93.20 | 75.20 |
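To read Table 2 as absolute improvement, the following sketch computes the gain in percentage points of each branch combination over Baseline[13], whose values coincide with the ResNet-BiLSTM-Attn row of Table 1. The numbers are copied from the table; the abbreviations NDPB, IB and DB are not expanded in this excerpt, so they are used only as labels.

```python
from typing import Dict

# Accuracies (%) copied from Table 2; Baseline[13] equals the
# ResNet-BiLSTM-Attn row of Table 1.
BASELINE: Dict[str, float] = {"General-data": 68.10, "Hard-data": 41.40}
MULTITASK: Dict[str, Dict[str, float]] = {
    "NDPB&IB":    {"General-data": 90.10, "Hard-data": 72.90},
    "NDPB&DB":    {"General-data": 91.70, "Hard-data": 74.30},
    "IB&DB":      {"General-data": 92.20, "Hard-data": 73.20},
    "NDPB&IB&DB": {"General-data": 93.20, "Hard-data": 75.20},
}


def gains_over_baseline(results: Dict[str, Dict[str, float]],
                        baseline: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """Absolute gain in percentage points for each model on each test set."""
    return {
        model: {split: round(acc - baseline[split], 2)
                for split, acc in accs.items()}
        for model, accs in results.items()
    }


if __name__ == "__main__":
    for model, gain in gains_over_baseline(MULTITASK, BASELINE).items():
        print(f"{model:<11} General-data +{gain['General-data']:.2f}  "
              f"Hard-data +{gain['Hard-data']:.2f}")
```

Every combination improves on the baseline; the full NDPB&IB&DB model gains 25.10 points on General-data and 33.80 points on Hard-data.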

[1] Shi B, Bai X, Yao C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 39(11): 2298−2304

[2] Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A N, et al. Attention is all you need. In: Proceedings of the Neural Information Processing Systems. San Diego, USA: MIT, 2017. 5998−6008

[3] Luong M T, Pham H, Manning C D. Effective approaches to attention-based neural machine translation [Online], available: https://arxiv.org/abs/1508.04025, Sep 20, 2015

[4] Li H, Wang P, Shen C. Towards end-to-end text spotting with convolutional recurrent neural networks. In: Proceedings of the IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 5238−5246

[5] Yuan X, He P, Li X A. Adaptive adversarial attack on scene text recognition [Online], available: http://export.arxiv.org/abs/1807.03326, Jul 9, 2018

[6] Graves A, Fernández S, Gomez F, Schmidhuber J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In: Proceedings of the 23rd International Conference on Machine Learning. Pittsburgh, Pennsylvania, USA: ACM, 2006. 369−376

[7] Sutskever I, Vinyals O, Le Q V. Sequence to sequence learning with neural networks. In: Proceedings of the Neural Information Processing Systems. Montréal, Canada: MIT, 2014. 3104−3112

[8] Lei Z, Zhao S, Song H, Shen J. Scene text recognition using residual convolutional recurrent neural network. Machine Vision and Applications, 29(5): 861−871

[9] Shi B, Yang M, Wang X, Lyu P, Yao C, Bai X. ASTER: An attentional scene text recognizer with flexible rectification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 41(9): 2035−2048

[10] Long M, Wang J. Learning multiple tasks with deep relationship networks [Online], available: https://arxiv.org/abs/1506.02117v1, Jul 6, 2015

[11] Veit A, Matera T, Neumann L, Matas J, Belongie S. COCO-Text: Dataset and benchmark for text detection and recognition in natural images [Online], available: https://arxiv.org/abs/1601.07140v1, Jan 26, 2016

[12] Karatzas D, Gomez-Bigorda L, Nicolaou A, Ghosh S, Bagdanov A, Iwamura M, Shafait F. ICDAR 2015 competition on robust reading. In: Proceedings of the 13th International Conference on Document Analysis and Recognition (ICDAR). Tunis, Tunisia: IEEE, 2015. 1156−1160

[13] Baek J, Kim G, Lee J, Park S, Han D. What is wrong with scene text recognition model comparisons? Dataset and model analysis [Online], available: https://arxiv.org/abs/1904.01906, Dec 18, 2019

[14] Bingel J, Søgaard A. Identifying beneficial task relations for multi-task learning in deep neural networks [Online], available: https://arxiv.org/abs/1702.08303, Feb 27, 2017

[15] Xie Z, Huang Y, Zhu Y, Jin L, Liu Y, Xie L. Aggregation cross-entropy for sequence recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Long Beach, CA, USA: IEEE, 2019. 6538−6547

[16] Li H, Wang P, Shen C. Toward end-to-end car license plate detection and recognition with deep neural networks. IEEE Transactions on Intelligent Transportation Systems, 2018, 20(3): 1126−1136

[17] Xu Z, Yang W, Meng A, Lu N, Huang H, Ying C, Huang L. Towards end-to-end license plate detection and recognition: A large dataset and baseline. In: Proceedings of the European Conference on Computer Vision (ECCV). Munich, Germany: Springer, 2018. 255−271
