
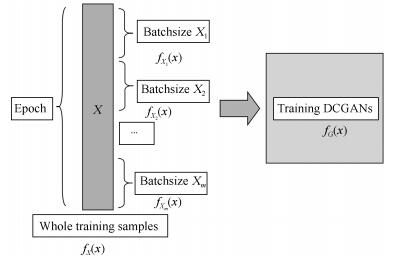

Abstract: Deep convolutional generative adversarial networks (DCGANs) are an improved form of generative adversarial networks (GANs). Although they generate considerably better images than traditional GANs, their training method still leaves room for improvement. This paper proposes a DCGAN training method based on constructing subsample sets from the training images. The relationship among the statistical distributions of the generated samples, the subsamples, and the whole sample set is derived; the result shows that the closer the distribution of the subsample sets is to that of the whole sample set, the closer the generated sample set is to the whole sample set. A subsample set construction method is then designed on a feature space formed by the first-order color moment and the sharpness of each sample. With an improved probability sampling method, the constructed subsample sets are approximately independent and identically distributed and approach the distribution of the whole sample set. To validate the method, a cartoon face image set and the Cifar10 image set are used to compare DCGAN training on the constructed subsample sets with training on randomly selected samples and with other training strategies. The results show that, at a Batchsize of about 2 000, the test error, KL divergence, and inception score all improve, so that better images are generated.

Key words:

- Deep convolutional generative adversarial networks (DCGANs)

- subsample set construction

- deep learning

- sample feature

- joint probability density
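The abstract names two sample features (first-order color moment and sharpness) and a probability-based sampling step, but this page does not give their exact definitions. The following is a minimal sketch under stated assumptions: the first-order color moment is taken as the per-channel mean, sharpness is approximated by the mean squared difference between adjacent gray levels, and the paper's improved probability sampling is replaced by plain proportional stratified sampling over feature cells. The names `color_moment_1`, `sharpness`, `feature_cell`, and `build_subsample`, as well as the bin settings, are hypothetical.

```python
import random
from collections import defaultdict


def color_moment_1(image):
    """First-order color moment: the mean of each RGB channel.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    n = sum(len(row) for row in image)
    sums = [0.0, 0.0, 0.0]
    for row in image:
        for r, g, b in row:
            sums[0] += r
            sums[1] += g
            sums[2] += b
    return tuple(s / n for s in sums)


def sharpness(image):
    """Assumed sharpness proxy (not the paper's definition): mean squared
    gray-level difference between horizontally and vertically adjacent pixels."""
    gray = [[(r + g + b) / 3.0 for r, g, b in row] for row in image]
    h, w = len(gray), len(gray[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                total += (gray[y][x + 1] - gray[y][x]) ** 2
                count += 1
            if y + 1 < h:
                total += (gray[y + 1][x] - gray[y][x]) ** 2
                count += 1
    return total / count if count else 0.0


def feature_cell(image, color_bins=4, sharp_edges=(10.0, 100.0, 1000.0)):
    """Map an image to a discrete cell of the (color, sharpness) feature space.

    The bin count and sharpness edges are illustrative values only.
    """
    mean_rgb = color_moment_1(image)
    color_idx = tuple(
        min(int(c * color_bins / 256), color_bins - 1) for c in mean_rgb
    )
    sharp_idx = sum(sharpness(image) >= edge for edge in sharp_edges)
    return color_idx + (sharp_idx,)


def build_subsample(images, size, rng):
    """Proportional stratified sampling over feature cells.

    Each cell contributes a quota proportional to its share of the whole
    set, so the subsample's cell histogram tracks the whole set's histogram.
    Rounding means the result may differ slightly from `size`.
    """
    cells = defaultdict(list)
    for idx, img in enumerate(images):
        cells[feature_cell(img)].append(idx)
    chosen = []
    for members in cells.values():
        quota = round(size * len(members) / len(images))
        chosen.extend(rng.sample(members, min(quota, len(members))))
    return chosen
```

Calling `build_subsample(images, 2000, random.Random(0))` would return the indices of one subsample set; repeated calls with different seeds give further sets drawn by the same rule. Stratifying by cell is one simple way to keep each subsample's feature distribution close to that of the whole set, which is the property the abstract argues for.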

1) Responsible associate editor for this paper: ZHANG Jun-Ping

Table 1 Total coverage rate under different Batchsize

| Dataset | Batchsize | Constructed sampling (%) | Random sampling (%) | Gap (%) |
|---|---|---|---|---|
| Cartoon face | 512 | 80.68 | 99.96 | 19.28 |
| Cartoon face | 1 024 | 89.20 | 99.96 | 10.76 |
| Cartoon face | 2 000 | 93.20 | 97.59 | 4.39 |
| Cifar10 | 512 | 78.57 | 99.33 | 20.76 |
| Cifar10 | 1 024 | 87.54 | 98.30 | 10.76 |
| Cifar10 | 2 048 | 92.52 | 98.30 | 5.78 |

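Table 2 $ KL(f_{X_i}(x)||f_X(x)) $ data under different Batchsize

| Dataset | Batchsize | Mean | Standard deviation | Minimum | Median | Maximum |
|---|---|---|---|---|---|---|
| Cartoon face | 128 | 1.3375 | 0.0805 | 1.1509 | 1.3379 | 1.6156 |
| Cartoon face | 1 024 | 0.3109 | 0.0147 | 0.2849 | 0.3110 | 0.3504 |
| Cartoon face | 1 024* | 0.2366 | 0.0084 | 0.2154 | 0.2365 | 0.2579 |
| Cartoon face | 2 000 | 0.1785 | 0.0089 | 0.1652 | 0.1778 | 0.1931 |
| Cartoon face | 2 000* | 0.1144 | 0.0042 | 0.1049 | 0.1150 | 0.1216 |
| Cifar10 | 128 | 1.4125 | 0.0772 | 1.1881 | 1.4155 | 1.6037 |
| Cifar10 | 1 024 | 0.3499 | 0.0155 | 0.3215 | 0.3475 | 0.3886 |
| Cifar10 | 1 024* | 0.2692 | 0.0063 | 0.2552 | 0.2687 | 0.2836 |
| Cifar10 | 2 048 | 0.1994 | 0.0085 | 0.1830 | 0.2004 | 0.2148 |
| Cifar10 | 2 048* | 0.1372 | 0.0040 | 0.1281 | 0.1372 | 0.1462 |

Entries marked with "*" are data for the constructed subsample sets; the same applies to the tables below.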
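The quantity $ KL(f_{X_i}(x)||f_X(x)) $ in Table 2 compares the distribution of one subsample set with that of the whole sample set. As a rough illustration, the sketch below estimates it from discrete feature cells by counting how often each cell occurs. The discretization into cells, the use of the natural logarithm, and the function name `kl_divergence` are assumptions; the page does not say how the densities were estimated.

```python
import math
from collections import Counter


def kl_divergence(sub_cells, all_cells):
    """Estimate KL(P || Q) from cell labels.

    P is the empirical distribution of the subsample (`sub_cells`),
    Q that of the whole sample set (`all_cells`). Cells absent from the
    subsample contribute zero, so only observed subsample cells are summed.
    """
    p_counts = Counter(sub_cells)
    q_counts = Counter(all_cells)
    n_p, n_q = len(sub_cells), len(all_cells)
    kl = 0.0
    for cell, count in p_counts.items():
        if q_counts[cell] == 0:
            # A subsample drawn from the whole set cannot contain a cell
            # that the whole set lacks; treat this as a caller error.
            raise ValueError(f"cell {cell!r} not present in the whole set")
        p = count / n_p
        q = q_counts[cell] / n_q
        kl += p * math.log(p / q)
    return kl
```

A subsample whose cell proportions match the whole set gives a value of zero, and the value grows as the subsample becomes less representative, which is consistent with the trend in Table 2 that larger Batchsize values give smaller divergences.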

Table 3 Comparison of experimental results on the cartoon face dataset

| Batchsize | epoch | Test error ($ \times10^{-3} $) | KL | IS ($ \sigma\times10^{-2} $) |
|---|---|---|---|---|
| 1 024 | 135 | 8.03 $ \pm $ 2.12 | 0.1710 | 3.97 $ \pm $ 2.62 |
| 1 024* | 135 | 8.23 $ \pm $ 2.10 | 0.1844 | 3.82 $ \pm $ 2.02 |
| 2 000 | 200 | 7.68 $ \pm $ 2.21 | 0.1077 | 3.95 $ \pm $ 2.32 |
| 2 000* | 200 | 7.18 $ \pm $ 2.13 | 0.0581 | 4.21 $ \pm $ 2.53 |

Table 4 Comparison of experimental results on the Cifar10 dataset

| Batchsize | epoch | Test error ($ \times10^{-2} $) | KL | IS ($ \sigma\times10^{-2} $) |
|---|---|---|---|---|
| 1 024 | 100 | 1.43 $ \pm $ 0.38 | 0.2146 | 5.44 $ \pm $ 6.40 |
| 1 024* | 100 | 1.48 $ \pm $ 0.35 | 0.2233 | 5.36 $ \pm $ 6.01 |
| 2 048 | 200 | 1.40 $ \pm $ 0.39 | 0.2095 | 5.51 $ \pm $ 5.83 |
| 2 048* | 200 | 1.35 $ \pm $ 0.37 | 0.1890 | 5.62 $ \pm $ 5.77 |

Table 5 Comparison of different strategies on the cartoon face dataset

| Batchsize | epoch | Test error ($ \times10^{-3} $) | KL | IS ($ \sigma\times10^{-2} $) |
|---|---|---|---|---|
| 1 024* | 135 | 8.23 $ \pm $ 2.10 | 0.1844 | 3.82 $ \pm $ 2.02 |
| 2 000* | 200 | 7.18 $ \pm $ 2.13 | 0.0581 | 4.21 $ \pm $ 2.53 |
| 128 (a) | 25 | 8.32 $ \pm $ 2.07 | 0.1954 | 3.62 $ \pm $ 2.59 |
| 128 (b) | 25 | 8.15 $ \pm $ 2.15 | 0.1321 | 3.92 $ \pm $ 4.59 |
| 128 (c) | 25 | 8.07 $ \pm $ 2.10 | 0.1745 | 3.89 $ \pm $ 4.45 |
| 128 (d) | 25 | 8.23 $ \pm $ 2.26 | 0.1250 | 4.02 $ \pm $ 3.97 |

Table 6 Comparison of different strategies on the Cifar10 dataset

| Batchsize | epoch | Test error ($ \times10^{-2} $) | KL | IS ($ \sigma\times10^{-2} $) |
|---|---|---|---|---|
| 1 024* | 100 | 1.48 $ \pm $ 0.35 | 0.2233 | 5.36 $ \pm $ 6.01 |
| 2 048* | 200 | 1.35 $ \pm $ 0.37 | 0.1890 | 5.62 $ \pm $ 5.77 |
| 128 (a) | 25 | 1.81 $ \pm $ 0.41 | 0.2813 | 4.44 $ \pm $ 3.66 |
| 128 (b) | 25 | 1.64 $ \pm $ 0.40 | 0.2205 | 4.61 $ \pm $ 3.80 |
| 128 (c) | 25 | 1.70 $ \pm $ 0.41 | 0.2494 | 4.62 $ \pm $ 4.80 |
| 128 (d) | 25 | 1.63 $ \pm $ 0.42 | 0.2462 | 4.94 $ \pm $ 5.79 |
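The IS column in Tables 3 to 6 is the inception score. For readers unfamiliar with it, the standard definition is $ \exp(\mathrm{E}_x[KL(p(y|x)||p(y))]) $, where $ p(y|x) $ is a classifier's class distribution for a generated image and $ p(y) $ is the average of those distributions. The sketch below computes that formula from a list of probability rows. It assumes the rows have already been produced by some pretrained classifier (usually an Inception network); the function name `inception_score` is hypothetical, and the page does not state how the reported means and deviations were obtained.

```python
import math


def inception_score(probs):
    """Inception score from class-probability rows.

    `probs[i][j]` is the predicted probability of class j for generated
    image i. Each row is assumed to sum to 1.
    """
    n = len(probs)
    k = len(probs[0])
    # Marginal class distribution p(y): the mean of all rows.
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    total_kl = 0.0
    for row in probs:
        for p, m in zip(row, marginal):
            if p > 0.0:  # terms with p == 0 contribute nothing
                total_kl += p * math.log(p / m)
    return math.exp(total_kl / n)
```

The score is 1 when every image receives the same class distribution and rises toward the number of classes when predictions are both confident and evenly spread over classes, which is why higher values in the tables are read as better generated images.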

