
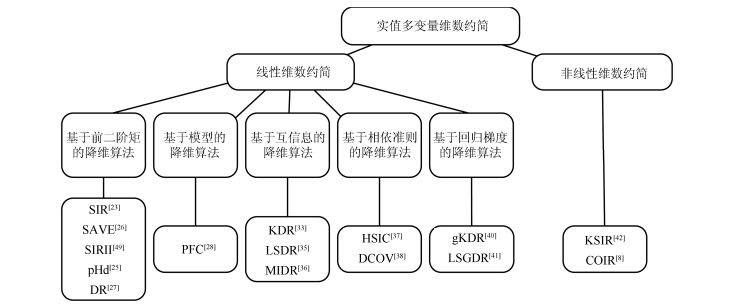

Abstract: Dimension reduction, one of the classical problems in machine learning, is used to cope with the curse of dimensionality, to speed up algorithms, to improve interpretability, and to visualize high-dimensional data. Traditional dimension reduction algorithms such as principal component analysis (PCA) and linear discriminant analysis are suited mainly to unlabeled data or classification data. When the response variables are univariate or multivariate continuous real-valued variables, however, these methods cannot guarantee that the reduced subspace predicts well. Over the past two decades, a series of studies has examined this problem from several viewpoints and produced a systematic body of results. Against this background, this paper surveys dimension reduction algorithms for regression problems, that is, real-valued multivariate dimension reduction. It introduces the basic concepts, algorithms, and theory closely related to real-valued multivariate dimension reduction and discusses some potential research directions.

1) Editorial board member responsible for this paper: Zhu Jun


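Table 1 Comparison of mutual information-based dimension reduction algorithms

| Objective function | Conditional independence | Representative methods | Interpretation |
|---|---|---|---|
| $\min_{{B}} {\rm E}_U \left[\mathbb{I}({Y \mid U};{V \mid U}) \right]$ | ${Y} \perp\!\!\!\perp {V} \mid {U}$ | KDR[32-33] | Removes the features of $X$ that are independent of $Y$, that is, features that provide no information for predicting $Y$. |
| $\max_{{B}} \mathbb{I}({Y};{U})$ | ${Y} \perp\!\!\!\perp {X} \mid {U}$ | LSDR[34-35], MIDR[36] | Searches among the factors related to $Y$ for a small subspace or subset. Features outside this subspace or subset do contribute to predicting $Y$, but once the subspace or subset is given, their information is not enough to add to or improve the prediction of $Y$. |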

Table 2 Summary of real-valued multivariate dimension reduction algorithms

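| Algorithm | Response variable | Solution type | Distributional assumption | Statistical moments | Model selection | Regression type | Number of parameters | Parameter list |
|---|---|---|---|---|---|---|---|---|
| SIR[23] | Univariate | Closed form | Elliptically symmetric | First order | None | Inverse regression | 1 | Number of slices $h$ |
| SAVE[26] | Univariate | Closed form | Elliptically symmetric | Second order | None | Inverse regression | 1 | $h$ |
| SIRII[49] | Univariate | Closed form | Elliptically symmetric | Second order | None | Inverse regression | 1 | $h$ |
| pHd[25] | Univariate | Closed form | Normal | Second order | - | Forward regression | 0 | - |
| DR[27] | Univariate | Closed form | Asymptotically normal | Second order | None | Inverse regression | 1 | $h$ |
| PFC[28] | Univariate | Closed form | Model assumption | Model dependent | None | Inverse regression | 1 | Degree $r$ |
| KDR[33] | Multivariate | Nonconvex optimization | None | Infinite order | None | Forward regression | 3 | Bandwidths $\sigma_x, \sigma_y$, $\epsilon$ |
| LSDR[35] | Multivariate | Nonconvex optimization | None | Infinite order | Cross-validation | Forward regression | 4 | $\sigma_x, \sigma_y$, $\lambda$, $b$ |
| MIDR[36] | Multivariate | Nonconvex optimization | None | Infinite order | - | Forward regression | 0 | - |
| HSIC[37] | Multivariate | Nonconvex optimization | None | Infinite order | None | Forward regression | 2 | $\sigma_x, \sigma_y$ |
| DCOV[38] | Multivariate | Nonconvex optimization | None | Second order | - | Forward regression | 0 | - |
| gKDR[40] | Multivariate | Closed form | None | Infinite order | None | Forward regression | 3 | $\sigma_x, \sigma_y, \epsilon$ |
| LSGDR[41] | Multivariate | Closed form | None | Infinite order | Cross-validation | Forward regression | $2D+1$ | $\{\sigma_x^{(j)}, \lambda^{(j)}\}_{j=1}^D, \sigma_y$ |
| KSIR[42] | Univariate | Closed form | None | Infinite order | None | Inverse regression | 2 | $\sigma_x$, $h$ |
| COIR[8] | Multivariate | Closed form | None | Infinite order | None | Inverse regression | 3 | $\sigma_x, \sigma_y, \epsilon$ |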

Table 3 Estimation of generalized eigen-decomposition-based multivariate dimension reduction algorithms

| Method | (Sample) estimator ${M}$ |
|---|---|
| SIR[23] | ${\rm E}\Big[{\rm E}(Z \mid Y){\rm E}(Z \mid Y)^{\rm T}\Big]$ |
| SAVE[26] | ${\rm E}\Big[{I}-{\rm var}(Z \mid Y)\Big]^2$ |
| SIRII[49] | ${\rm E}\Big[{\rm var}(Z \mid Y)-{\rm E}({\rm var}(Z \mid Y))\Big]^2$ |
| pHd[25] | ${\rm E}\Big[(Y-{\rm E}Y)ZZ^{\rm T}\Big]^2$ |
| DR[27] | $2{\rm E}\Big[{\rm E}^2(ZZ^{\rm T} \mid Y)\Big]+ 2{\rm E}^2\Big[{\rm E}(Z \mid Y){\rm E}(Z^{\rm T} \mid Y)\Big]+ 2{\rm E}\Big[{\rm E}(Z^{\rm T} \mid Y){\rm E}(Z \mid Y)\Big]{\rm E}\Big[{\rm E}(Z \mid Y){\rm E}(Z^{\rm T} \mid Y)\Big]-2{I}$ |
| PFC[28] | $\frac{1}{n}\widehat{{X}}^{\rm T}\widehat{{X}}$ |
| gKDR[40] | $\big\langle \frac{\partial \kappa_x(\cdot, {\pmb x})}{\partial {\pmb x}}, \Sigma_{XX}^{-1}\Sigma_{XY}\Sigma_{YX}\Sigma_{XX}^{-1}\frac{\partial \kappa_x(\cdot, {\pmb x})}{\partial {\pmb x}}\big\rangle_{\mathcal{H}_x}$ |
| LSGDR[41] | ${\rm E}({\pmb g}(y, {\pmb x}){\pmb g}(y, {\pmb x})^{\rm T})$ |
| KSIR[42] | ${\rm var}({\rm E}[{\pmb \phi}({\pmb x}) \mid y])$ |
| COIR[8] | ${\rm var}({\rm E}[{\pmb \phi}({\pmb x}) \mid y])$ |
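To make the SIR row of Table 3 concrete, the following is a minimal sketch that estimates ${M} = {\rm E}[{\rm E}(Z \mid Y){\rm E}(Z \mid Y)^{\rm T}]$ by slicing the sorted response into $h$ slices and then takes the leading eigenvectors of the estimate. The function name `sir_directions`, the equal-count slicing rule, and the synthetic data are assumptions made for illustration only; they are not taken from the survey or from any particular library.

```python
import numpy as np

def sir_directions(X, y, n_slices=10, n_dirs=1):
    """Sliced inverse regression sketch; returns a (p, n_dirs) basis estimate."""
    n, p = X.shape
    # Standardize the predictors: Z = (X - mean) Sigma^{-1/2}
    mu = X.mean(axis=0)
    Sigma = np.cov(X, rowvar=False)
    evals, evecs = np.linalg.eigh(Sigma)
    Sigma_inv_sqrt = evecs @ np.diag(evals ** -0.5) @ evecs.T
    Z = (X - mu) @ Sigma_inv_sqrt
    # Slice the sample by sorted response, roughly equal counts per slice
    order = np.argsort(y)
    M = np.zeros((p, p))
    for idx in np.array_split(order, n_slices):
        m = Z[idx].mean(axis=0)               # slice mean estimates E(Z | Y)
        M += (len(idx) / n) * np.outer(m, m)  # weighted outer product
    # Leading eigenvectors of M, mapped back to the original X scale
    w, v = np.linalg.eigh(M)
    top = v[:, np.argsort(w)[::-1][:n_dirs]]
    B = Sigma_inv_sqrt @ top
    return B / np.linalg.norm(B, axis=0)

# Illustrative data (assumed): y depends on X only through its first coordinate
rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 5))
y = X[:, 0] ** 3 + 0.1 * rng.normal(size=2000)
B = sir_directions(X, y, n_slices=10, n_dirs=1)
```

In this toy setting the returned column should be close to the first coordinate axis up to sign, since the response is a monotone function of a single direction. The slice count plays the role of the parameter $h$ listed for SIR in Table 2.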

Table 4 Summary of matrix manifold optimization-based dimension reduction algorithms

| Algorithm | Objective function $f({B})$ | Euclidean gradient $\nabla_{{B}} f$ | Corresponding weight matrix ${W}$ |
|---|---|---|---|
| KDR[33] | ${\rm{tr}}[{\overline{K}}_Y({\overline{K}}_U+n\epsilon {I}_n)^{-1}]$ | $\frac{2}{\sigma^2}{X}{L}_{{\rm{KDR}}}{X}^{\rm T}{B}$ | ${W}_{{\rm{KDR}}} = {H}{\Omega}{H}\odot{K}_U$, where ${\Omega} = {M}^{-1}{H}{K}_Y{H}{M}^{-1}$ and ${M} = {H}{K}_U{H}+n \epsilon {I}$ |
| LSDR[35] | $\frac{1}{2}{\hat{\pmb{\alpha}}}^{\rm T}{\widehat{H}}{\hat{\pmb{\alpha}}}- \widehat{\pmb{h}}^{\rm T}\widehat{\pmb{\alpha}}$ | No closed-form gradient | No Laplacian form |
| MIDR[36] | $\frac{d+1}{n(n-1)}\sum_{i\neq j}\log\left(\lVert ({\pmb x}_i- {\pmb x}_j){B} \rVert^2+\lVert y_i-y_j \rVert^2\right)-\frac{d}{n(n-1)}\sum_{i\neq j}\log\lVert ({\pmb x}_i-{\pmb x}_j){B} \rVert^2$ | $-\frac{4}{n(n-1)} {X}{L}_{{\rm{MIDR}}}{X}^{\rm T}{B}$ | ${W}_{{\rm{MIDR}}}=\frac{d}{{D}_X\odot{D}_X}- \frac{d+1}{{D}_X\odot{D}_X+{D}_Y\odot{D}_Y}$ |
| HSIC[37] | $-\frac{1}{(n-1)^2}{\rm{tr}}\big[{K}_U{H}{K}_Y {H}\big]$ | $\frac{2}{\sigma^2(n-1)^2}{X}{L}_{{\rm{HSIC}}}{X}^{\rm T}{B}$ | ${W}_{{\rm{HSIC}}} = {H}{K}_Y{H}\odot{K}_U$ |
| DCOV[38] | $-\frac{1}{n^2}{\rm{tr}}\big[{\overline{D}}_U{\overline{D}}_Y\big]$ | $-\frac{2}{n^2}{X}{L}_{{\rm{DCOV}}}{X}^{\rm T}{B}$ | ${W}_{{\rm{DCOV}}} = \frac{{H}{D}_Y{H}}{{D}_U}$ |
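As a hedged illustration of the HSIC row of Table 4, the sketch below evaluates the objective $-\frac{1}{(n-1)^2}{\rm tr}[{K}_U{H}{K}_Y{H}]$ and the gradient built from the graph Laplacian of ${W}_{\rm HSIC} = {H}{K}_Y{H}\odot{K}_U$. Several choices here are assumptions rather than statements from the survey: samples are stored as rows (so the gradient reads ${X}^{\rm T}{L}{X}{B}$ instead of ${X}{L}{X}^{\rm T}{B}$), the kernel is Gaussian with entries $\exp(-\lVert a_i-a_j\rVert^2/(2\sigma^2))$, ${L}$ is taken to be the diagonal of row sums of ${W}$ minus ${W}$, and the names `gaussian_gram` and `hsic_objective_and_grad` are hypothetical.

```python
import numpy as np

def gaussian_gram(A, sigma):
    """Gram matrix with entries exp(-||a_i - a_j||^2 / (2 sigma^2)); rows are samples."""
    sq = np.sum(A ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (A @ A.T), 0.0)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def hsic_objective_and_grad(B, X, Y, sigma_u=1.0, sigma_y=1.0):
    """Negative empirical HSIC between U = X B and Y, with its gradient in B."""
    n = X.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n      # centering matrix
    K_U = gaussian_gram(X @ B, sigma_u)      # kernel on the projected data
    K_Y = gaussian_gram(Y, sigma_y)
    HKH = H @ K_Y @ H
    f = -np.trace(K_U @ HKH) / (n - 1) ** 2
    W = HKH * K_U                            # elementwise product, W_HSIC
    L = np.diag(W.sum(axis=1)) - W           # Laplacian of the weight matrix
    scale = 2.0 / (sigma_u ** 2 * (n - 1) ** 2)
    grad = scale * (X.T @ L @ X @ B)
    return f, grad

# Illustrative data (assumed): Y depends on the first coordinate of X
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 4))
Y = np.sin(X[:, :1]) + 0.05 * rng.normal(size=(60, 1))
B = rng.normal(size=(4, 2))
f, grad = hsic_objective_and_grad(B, X, Y)

# Central finite-difference check of one gradient entry
eps = 1e-6
E = np.zeros_like(B)
E[1, 0] = 1.0
f_plus, _ = hsic_objective_and_grad(B + eps * E, X, Y)
f_minus, _ = hsic_objective_and_grad(B - eps * E, X, Y)
numeric = (f_plus - f_minus) / (2 * eps)
```

Under these conventions the finite-difference value `numeric` should agree with `grad[1, 0]`, which is a quick way to confirm that the Laplacian form of the gradient matches the objective. An actual solver would additionally keep ${B}$ on the matrix manifold, which this sketch does not attempt.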

[1] Jolliffe I. Principal Component Analysis. New York: Wiley Online Library, 2002.

[2] Hotelling H. Analysis of a complex of statistical variables into principal components. Journal of Educational Psychology, 1933, 24(6): 417-441. http://www.docin.com/p-238815088.html

[3] Roweis S T, Saul L K. Nonlinear dimensionality reduction by locally linear embedding. Science, 2000, 290(5500): 2323-2326. doi: 10.1126/science.290.5500.2323

[4] Tenenbaum J B, Silva V D, Langford J C. A global geometric framework for nonlinear dimensionality reduction. Science, 2000, 290(5500): 2319-2323. doi: 10.1126/science.290.5500.2319

[5] Fisher R A. The use of multiple measurements in taxonomic problems. Annals of Eugenics, 1936, 7(2): 179-188. https://www.bibsonomy.org/bibtex/2c9d8d78a8e1bb5adecc7602490f4323f/gromgull

[6] Mika S, Rätsch G, Weston J, Schölkopf B, Müller K R. Fisher discriminant analysis with kernels. In: Proceedings of the 1999 IEEE Signal Processing Society Workshop on Neural Networks for Signal Processing IX. Madison, WI, USA: IEEE, 1999. 41-48. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=788121

[7] Kulis B. Metric learning: a survey. Foundations and Trends in Machine Learning, 2012, 5(4): 287-364. https://www.cs.cmu.edu/~liuy/frame_survey_v2.pdf

[8] Kim M, Pavlovic V. Central subspace dimensionality reduction using covariance operators. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2011, 33(4): 657-670. doi: 10.1109/TPAMI.2010.111

[9] Tan B, Zhang J P, Wang L. Semi-supervised elastic net for pedestrian counting. Pattern Recognition, 2011, 44(10-11): 2297-2304. doi: 10.1016/j.patcog.2010.10.002

[10] Zhang J P, Tan B, Sha F, He L. Predicting pedestrian counts in crowded scenes with rich and high-dimensional features. IEEE Transactions on Intelligent Transportation Systems, 2011, 12(4): 1037-1046. https://www.researchgate.net/profile/Junping_Zhang/publication/220109419_Predicting_Pedestrian_Counts_in_Crowded_Scenes_With_Rich_and_High-Dimensional_Features/links/0deec53b629366a5b6000000.pdf?inViewer=true&pdfJsDownload=true&disableCoverPage=true&origin=publication_detail

[11] Kim M Y, Pavlovic V. Dimensionality reduction using covariance operator inverse regression. In: Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition. Anchorage, AK, USA: IEEE, 2008. 1-8. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=4587404

[12] Zhang J P, Wang F Y, Wang K F, Lin W H, Xu X, Chen C. Data-driven intelligent transportation systems: a survey. IEEE Transactions on Intelligent Transportation Systems, 2011, 12(4): 1037-1046. doi: 10.1109/TITS.2011.2132759

[13] Zhu H J, Li L X. Biological pathway selection through nonlinear dimension reduction. Biostatistics, 2011, 12(3): 429-444. doi: 10.1093/biostatistics/kxq081

[14] Zhu H J, Li L X, Zhou H. Nonlinear dimension reduction with Wright-Fisher kernel for genotype aggregation and association mapping. Bioinformatics, 2012, 28(18): i375-i381. doi: 10.1093/bioinformatics/bts406

[15] Shan H M, Zhang J P, Kruger U. Learning linear representation of space partitioning trees based on unsupervised kernel dimension reduction. IEEE Transactions on Cybernetics, 2016, 46(12): 3427-3438. doi: 10.1109/TCYB.2015.2507362

[16] Bishop C M. Pattern Recognition and Machine Learning. New York: Springer, 2006.

[17] Caruana R. Multitask learning. Machine Learning, 1997, 28(1): 41-75. doi: 10.1023/A:1007379606734

[18] Tsoumakas G, Katakis I. Multi-label classification: an overview. International Journal of Data Warehousing and Mining, 2007, 3(3): 1-13. doi: 10.4018/IJDWM

[19] Tipping M E. Sparse Bayesian learning and the relevance vector machine. Journal of Machine Learning Research, 2001, 1: 211-244. https://dl.acm.org/citation.cfm?id=944741

[20] Wang Wei-Wei, Li Xiao-Ping, Feng Xiang-Chu, Wang Si-Qi. A survey on sparse subspace clustering. Acta Automatica Sinica, 2015, 41(8): 1373-1384 (in Chinese). http://www.aas.net.cn/CN/abstract/abstract18712.shtml

[21] Koller D, Friedman N. Probabilistic Graphical Models: Principles and Techniques. Massachusetts: MIT Press, 2009.

[22] Liu Jian-Wei, Li Hai-En, Luo Xiong-Lin. Learning technique of probabilistic graphical models: a review. Acta Automatica Sinica, 2014, 40(6): 1025-1044 (in Chinese). http://www.aas.net.cn/CN/abstract/abstract18373.shtml

[23] Li K C. Sliced inverse regression for dimension reduction. Journal of the American Statistical Association, 1991, 86(414): 316-327. doi: 10.1080/01621459.1991.10475035

[24] Chiaromonte F, Cook R D. Sufficient dimension reduction and graphics in regression. Annals of the Institute of Statistical Mathematics, 2002, 54(4): 768-795. doi: 10.1023/A:1022411301790

[25] Li K C. On principal Hessian directions for data visualization and dimension reduction: another application of Stein's lemma. Journal of the American Statistical Association, 1992, 87(420): 1025-1039. doi: 10.1080/01621459.1992.10476258

[26] Cook R D, Weisberg S. Sliced inverse regression for dimension reduction: comment. Journal of the American Statistical Association, 1991, 86(414): 328-332. http://citeseerx.ist.psu.edu/showciting?cid=2673643

[27] Li B, Wang S L. On directional regression for dimension reduction. Journal of the American Statistical Association, 2007, 102(479): 997-1008. doi: 10.1198/016214507000000536

[28] Cook R D. Fisher lecture: dimension reduction in regression. Statistical Science, 2007, 22(1): 1-26. doi: 10.1214/088342306000000682

[29] Cook R D, Forzani L. Principal fitted components for dimension reduction in regression. Statistical Science, 2008, 23(4): 485-501. doi: 10.1214/08-STS275

[30] Cook R D, Forzani L. Likelihood-based sufficient dimension reduction. Journal of the American Statistical Association, 2009, 104(485): 197-208. doi: 10.1198/jasa.2009.0106

[31] Bach F R, Jordan M I. Kernel independent component analysis. Journal of Machine Learning Research, 2002, 3: 1-48.

[32] Fukumizu K, Bach F R, Jordan M I. Dimensionality reduction for supervised learning with reproducing kernel Hilbert spaces. Journal of Machine Learning Research, 2004, 5: 73-99. http://www.ams.org/mathscinet-getitem?mr=2247974

[33] Fukumizu K, Bach F R, Jordan M I. Kernel dimension reduction in regression. The Annals of Statistics, 2009, 37(4): 1871-1905. doi: 10.1214/08-AOS637

[34] Suzuki T, Sugiyama M. Sufficient dimension reduction via squared-loss mutual information estimation. In: Proceedings of the International Conference on Artificial Intelligence and Statistics. Chia Laguna Resort, Sardinia, Italy, 2010, 9: 804-811. https://www.researchgate.net/profile/Masashi_Sugiyama/publication/220320963_Sufficient_Dimension_Reduction_via_Squared-loss_Mutual_Information_Estimation/links/0fcfd51196d6b08046000000.pdf?origin=publication_detail

[35] Suzuki T, Sugiyama M. Sufficient dimension reduction via squared-loss mutual information estimation. Neural Computation, 2013, 25(3): 725-758. doi: 10.1162/NECO_a_00407

[36] Faivishevsky L, Goldberger J. Dimensionality reduction based on non-parametric mutual information. Neurocomputing, 2012, 80: 31-37. doi: 10.1016/j.neucom.2011.07.028

[37] Wang M H, Sha F, Jordan M I. Unsupervised kernel dimension reduction. In: Proceedings of the Advances in Neural Information Processing Systems. Vancouver, Canada, 2010. 2379-2387.

[38] Sheng W H, Yin X R. Sufficient dimension reduction via distance covariance. Journal of Computational and Graphical Statistics, 2016, 25(1): 91-104. doi: 10.1080/10618600.2015.1026601

[39] Fukumizu K, Leng C L. Gradient-based kernel method for feature extraction and variable selection. In: Proceedings of the Advances in Neural Information Processing Systems. Lake Tahoe, Nevada, 2012. 2114-2122. https://www.researchgate.net/publication/287550602_Gradient-based_kernel_method_for_feature_extraction_and_variable_selection

[40] Fukumizu K, Leng C L. Gradient-based kernel dimension reduction for regression. Journal of the American Statistical Association, 2014, 109(505): 359-370. doi: 10.1080/01621459.2013.838167

[41] Sasaki H, Tangkaratt V, Sugiyama M. Sufficient dimension reduction via direct estimation of the gradients of logarithmic conditional densities. In: Proceedings of the 7th Asian Conference on Machine Learning. Hong Kong, China: PMLR, 2015. 33-48. http://europepmc.org/abstract/MED/29162006

[42] Wu H M. Kernel sliced inverse regression with applications to classification. Journal of Computational and Graphical Statistics, 2008, 17(3): 590-610. doi: 10.1198/106186008X345161

[43] Li L X. Sparse sufficient dimension reduction. Biometrika, 2007, 94(3): 603-613. doi: 10.1093/biomet/asm044

[44] Chen X, Zou C L, Cook R D. Coordinate-independent sparse sufficient dimension reduction and variable selection. The Annals of Statistics, 2010, 38(6): 3696-3723. doi: 10.1214/10-AOS826

[45] Nilsson J, Sha F, Jordan M I. Regression on manifolds using kernel dimension reduction. In: Proceedings of the 24th International Conference on Machine Learning. Corvallis, Oregon, USA: ACM, 2007. 697-704. http://dl.acm.org/citation.cfm?id=1273584

[46] Shyr A, Urtasun R, Jordan M I. Sufficient dimension reduction for visual sequence classification. In: Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition. San Francisco, California, USA: IEEE, 2010. 3610-3617. http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=5539922

[47] Cook R D. On the interpretation of regression plots. Journal of the American Statistical Association, 1994, 89(425): 177-189. doi: 10.1080/01621459.1994.10476459

[48] Cook R D. Using dimension-reduction subspaces to identify important inputs in models of physical systems. In: Proceedings of the Section on Physical and Engineering Sciences. 1994. 18-25. https://www.researchgate.net/publication/313634045_Using_dimension-reduction_subspaces_to_identify_important_inputs_in_models_of_physical_systems

[49] Li K C. Sliced inverse regression for dimension reduction: rejoinder. Journal of the American Statistical Association, 1991, 86(414): 337-342.

[50] Harrison D, Rubinfeld D L. Hedonic housing prices and the demand for clean air. Journal of Environmental Economics and Management, 1978, 5(1): 81-102. https://ideas.repec.org/r/eee/jeeman/v5y1978i1p81-102.html

[51] Stein C M. Estimation of the mean of a multivariate normal distribution. The Annals of Statistics, 1981, 9(6): 1135-1151. doi: 10.1214/aos/1176345632

[52] Li K C, Lue H H, Chen C H. Interactive tree-structured regression via principal Hessian directions. Journal of the American Statistical Association, 2000, 95(450): 547-560. doi: 10.1080/01621459.2000.10474231

[53] Gannoun A, Saracco J. An asymptotic theory for SIRα method. Statistica Sinica, 2003, 13: 297-310. https://www.researchgate.net/publication/265359288_An_asymptotic_theory_for_SIR_a_method

[54] Ye Z S, Weiss R E. Using the bootstrap to select one of a new class of dimension reduction methods. Journal of the American Statistical Association, 2003, 98(464): 968-979. doi: 10.1198/016214503000000927

[55] Li B, Zha H Y, Chiaromonte F. Contour regression: a general approach to dimension reduction. The Annals of Statistics, 2005, 33(4): 1580-1616. doi: 10.1214/009053605000000192

[56] Tipping M E, Bishop C M. Probabilistic principal component analysis. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 1999, 61(3): 611-622. doi: 10.1111/rssb.1999.61.issue-3

[57] Cook R D, Forzani L M, Tomassi D R. LDR: a package for likelihood-based sufficient dimension reduction. Journal of Statistical Software, 2011, 39(3): 1-20. https://www.researchgate.net/profile/Kofi_Adragni/publication/279335077_ldr_An_R_Software_Package_for_Likelihood-Based_Sufficient_Dimension_Reduction/links/578c764208ae59aa667c5320/ldr-An-R-Software-Package-for-Likelihood-Based-Sufficient-Dimension-Reduction.pdf

[58] Baker C R. Joint measures and cross-covariance operators. Transactions of the American Mathematical Society, 1973, 186: 273-289. doi: 10.1090/S0002-9947-1973-0336795-3

[59] Absil P A, Mahony R, Sepulchre R. Optimization Algorithms on Matrix Manifolds. Princeton: Princeton University Press, 2009.

[60] Zhang K, Tsang I W, Kwok J T. Improved Nyström low-rank approximation and error analysis. In: Proceedings of the 25th International Conference on Machine Learning. Helsinki, Finland: ACM, 2008. 1232-1239. http://dl.acm.org/citation.cfm?id=1390311

[61] Sugiyama M, Suzuki T, Kanamori T. Density Ratio Estimation in Machine Learning. Cambridge: Cambridge University Press, 2012.

[62] Nguyen X, Wainwright M J, Jordan M I. Estimating divergence functionals and the likelihood ratio by penalized convex risk minimization. In: Proceedings of the Advances in Neural Information Processing Systems. Vancouver, Canada: ACM, 2008. 1089-1096.

[63] Yamada M, Niu G, Takagi G, Sugiyama M. Computationally efficient sufficient dimension reduction via squared-loss mutual information. In: Proceedings of the 3rd Asian Conference on Machine Learning. Canberra, Australia: ACML, 2011. 247-262. https://www.researchgate.net/publication/215705073_Computationally_Efficient_Sufficient_Dimension_Reduction_via_Squared-Loss_Mutual_Information?ev=auth_pub

[64] Faivishevsky L, Goldberger J. ICA based on a smooth estimation of the differential entropy. In: Proceedings of the Advances in Neural Information Processing Systems. Vancouver, British Columbia, Canada: ACM, 2009. 433-440. https://www.researchgate.net/publication/221618225_ICA_based_on_a_Smooth_Estimation_of_the_Differential_Entropy

[65] Faivishevsky L, Goldberger J. Nonparametric information theoretic clustering algorithm. In: Proceedings of the 27th International Conference on Machine Learning. Haifa, Israel: ICML, 2010. 351-358.

[66] Loftsgaarden D O, Quesenberry C P. A nonparametric estimate of a multivariate density function. The Annals of Mathematical Statistics, 1965, 36(3): 1049-1051. doi: 10.1214/aoms/1177700079

[67] Gretton A, Smola A J, Bousquet O, Herbrich R, Belitski A, Augath M, Murayama Y, Pauls J, Schölkopf B, Logothetis N K. Kernel constrained covariance for dependence measurement. In: Proceedings of the 10th International Workshop on Artificial Intelligence and Statistics. Barbados, 2005. 1-8. https://www.researchgate.net/publication/41781419_Kernel_Constrained_Covariance_for_Dependence_Measurement

[68] Gretton A, Bousquet O, Smola A, Schölkopf B. Measuring statistical dependence with Hilbert-Schmidt norms. In: Proceedings of the 16th International Conference on Algorithmic Learning Theory. Berlin, Heidelberg: Springer-Verlag, 2005. 63-77. doi: 10.1007/11564089_7

[69] Székely G J, Rizzo M L, Bakirov N K. Measuring and testing dependence by correlation of distances. The Annals of Statistics, 2007, 35(6): 2769-2794. doi: 10.1214/009053607000000505

[70] Zhang Y, Zhou Z H. Multi-label dimensionality reduction via dependence maximization. ACM Transactions on Knowledge Discovery from Data, 2010, 4(3): Article No. 14. https://cs.nju.edu.cn/zhouzh/zhouzh.files/publication/tkdd10mddm.pdf

[71] Song L, Smola A, Gretton A, Borgwardt K M. A dependence maximization view of clustering. In: Proceedings of the 24th International Conference on Machine Learning. Corvallis, Oregon, USA: ACM, 2007. 815-822. http://dl.acm.org/citation.cfm?id=1273599

[72] Blaschko M, Gretton A. Learning taxonomies by dependence maximization. In: Proceedings of the Advances in Neural Information Processing Systems. Vancouver, B. C., Canada, 2009. 153-160. https://www.researchgate.net/publication/41782196_Learning_Taxonomies_by_Dependence_Maximization

[73] Song L, Smola A, Gretton A, Bedo J, Borgwardt K. Feature selection via dependence maximization. The Journal of Machine Learning Research, 2012, 13(1): 1393-1434. https://dl.acm.org/citation.cfm?id=2343691

[74] Quadrianto N, Song L, Smola A J. Kernelized sorting. In: Proceedings of the Advances in Neural Information Processing Systems. Vancouver, B. C., Canada, 2009. 1289-1296.

[75] Quadrianto N, Smola A J, Song L, Tuytelaars T. Kernelized sorting. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 32(10): 1809-1821. doi: 10.1109/TPAMI.2009.184

[76] Sun X H, Janzing D, Schölkopf B, Fukumizu K. A kernel-based causal learning algorithm. In: Proceedings of the 24th International Conference on Machine Learning. Corvallis, Oregon, USA: ACM, 2007. 855-862.

[77] Li R Z, Zhong W, Zhu L P. Feature screening via distance correlation learning. Journal of the American Statistical Association, 2012, 107(499): 1129-1139. doi: 10.1080/01621459.2012.695654

[78] Vepakomma P, Tonde C, Elgammal A. Supervised dimensionality reduction via distance correlation maximization. arXiv: 1601.00236, 2016. http://arxiv.org/abs/1601.00236

[79] Székely G J, Rizzo M L. Brownian distance covariance. The Annals of Applied Statistics, 2009, 3(4): 1236-1265. doi: 10.1214/09-AOAS312

[80] Sejdinovic D, Sriperumbudur B, Gretton A, Fukumizu K. Equivalence of distance-based and RKHS-based statistics in hypothesis testing. The Annals of Statistics, 2013, 41(5): 2263-2291. doi: 10.1214/13-AOS1140

[81] Fukumizu K, Gretton A, Sun X H, Schölkopf B. Kernel measures of conditional dependence. In: Proceedings of the Advances in Neural Information Processing Systems. Vancouver, British Columbia, Canada: Curran Associates Inc., 2007. 489-496.

[82] Póczos B, Ghahramani Z, Schneider J G. Copula-based kernel dependency measures. In: Proceedings of the 29th International Conference on Machine Learning. Edinburgh, Scotland: ICML, 2012. 1635-1642. http://www.oalib.com/paper/4033144

[83] Schweizer B, Wolff E F. On nonparametric measures of dependence for random variables. The Annals of Statistics, 1981, 9(4): 879-885. doi: 10.1214/aos/1176345528

[84] Steinwart I, Christmann A. Support Vector Machines. Berlin, Heidelberg: Springer Science & Business Media, 2008.

[85] Kim M Y, Pavlovic V. Covariance operator based dimensionality reduction with extension to semi-supervised settings. In: Proceedings of the 12th International Conference on Artificial Intelligence and Statistics. Varna, Bulgaria: Springer, 2009. 280-287. https://www.researchgate.net/publication/220319841_Covariance_Operator_Based_Dimensionality_Reduction_with_Extension_to_Semi-Supervised_Settings

[86] Zou H, Hastie T, Tibshirani R. Sparse principal component analysis. Journal of Computational and Graphical Statistics, 2006, 15(2): 265-286. doi: 10.1198/106186006X113430

[87] Cunningham J P, Ghahramani Z. Linear dimensionality reduction: survey, insights, and generalizations. Journal of Machine Learning Research, 2015, 16: 2859-2900. https://arxiv.org/abs/1406.0873

[88] Fan K, Hoffman A J. Some metric inequalities in the space of matrices. Proceedings of the American Mathematical Society, 1955, 6(1): 111-116. doi: 10.1142/9789812796936_0012

[89] Kaneko T, Fiori S, Tanaka T. Empirical arithmetic averaging over the compact Stiefel manifold. IEEE Transactions on Signal Processing, 2013, 61(4): 883-894. doi: 10.1109/TSP.2012.2226167

[90] Cook R D, Yin X R. Theory & methods: special invited paper: dimension reduction and visualization in discriminant analysis (with discussion). Australian & New Zealand Journal of Statistics, 2001, 43(2): 147-199. doi: 10.1146/annurev.psych.52.1.1

[91] Zhu Y, Zeng P. Fourier methods for estimating the central subspace and the central mean subspace in regression. Journal of the American Statistical Association, 2006, 101(476): 1638-1651. doi: 10.1198/016214506000000140

[92] Davies A C, Yin J H, Velastin S A. Crowd monitoring using image processing. Electronics & Communication Engineering Journal, 1995, 7(1): 37-47. https://www.researchgate.net/publication/3364407_Crowd_monitoring_using_image_processing

[93] Cho S Y, Chow T W, Leung C T. A neural-based crowd estimation by hybrid global learning algorithm. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 1999, 29(4): 535-541. doi: 10.1109/3477.775269

[94] Shi Zeng-Lin, Ye Yang-Dong, Wu Yun-Peng, Lou Zheng-Zheng. Crowd counting using rank-based spatial pyramid pooling network. Acta Automatica Sinica, 2016, 42(6): 866-874 (in Chinese). http://www.aas.net.cn/CN/abstract/abstract18877.shtml

[95] Dasgupta S, Freund Y. Random projection trees and low dimensional manifolds. In: Proceedings of the 40th Annual ACM Symposium on Theory of Computing. Victoria, British Columbia, Canada: ACM, 2008. 537-546. http://dl.acm.org/citation.cfm?id=1374452
