GRU-Based Modeling for Predicting Guidewire Trajectories in Interventional Robotics with Morphological Feature Fusion


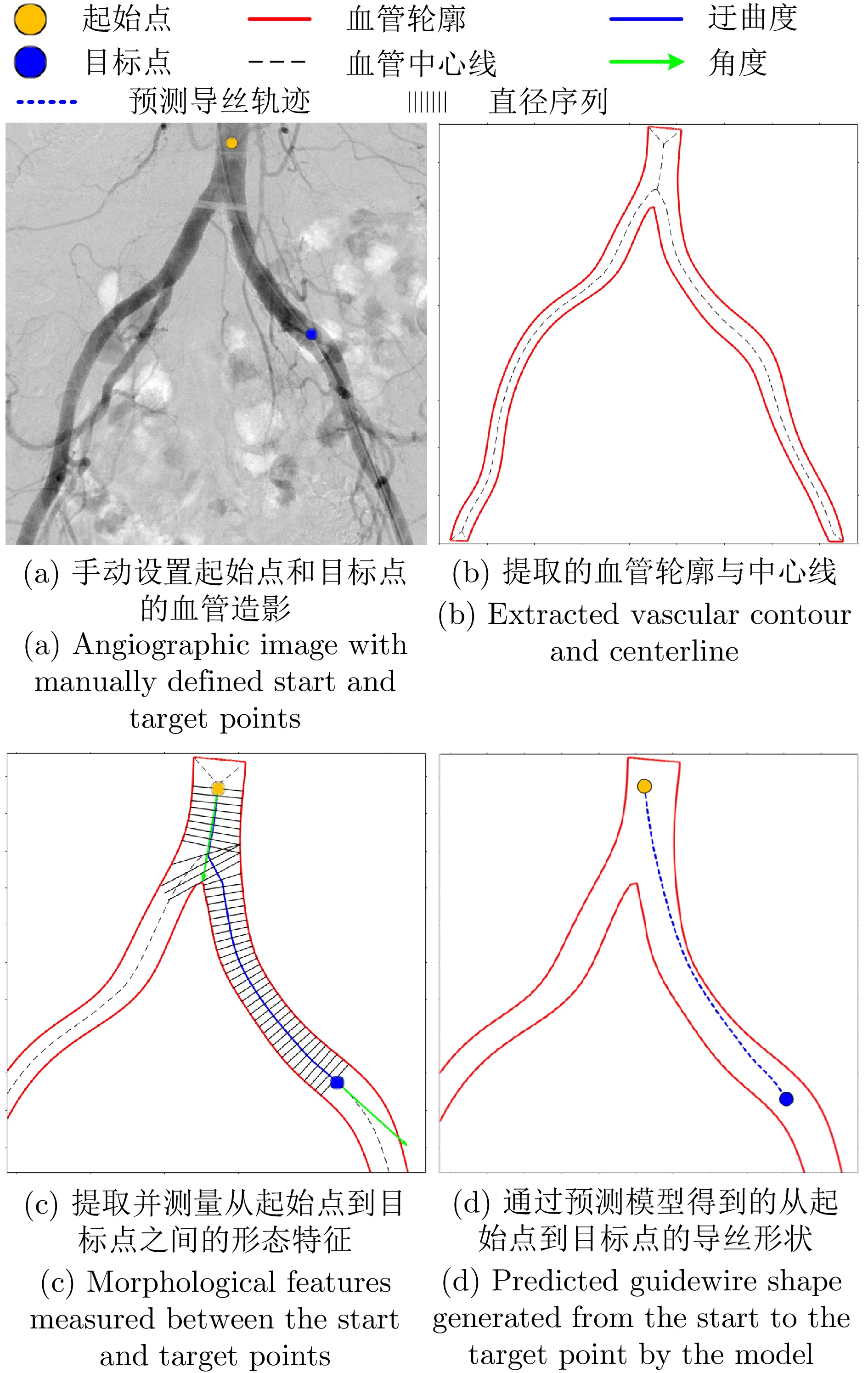

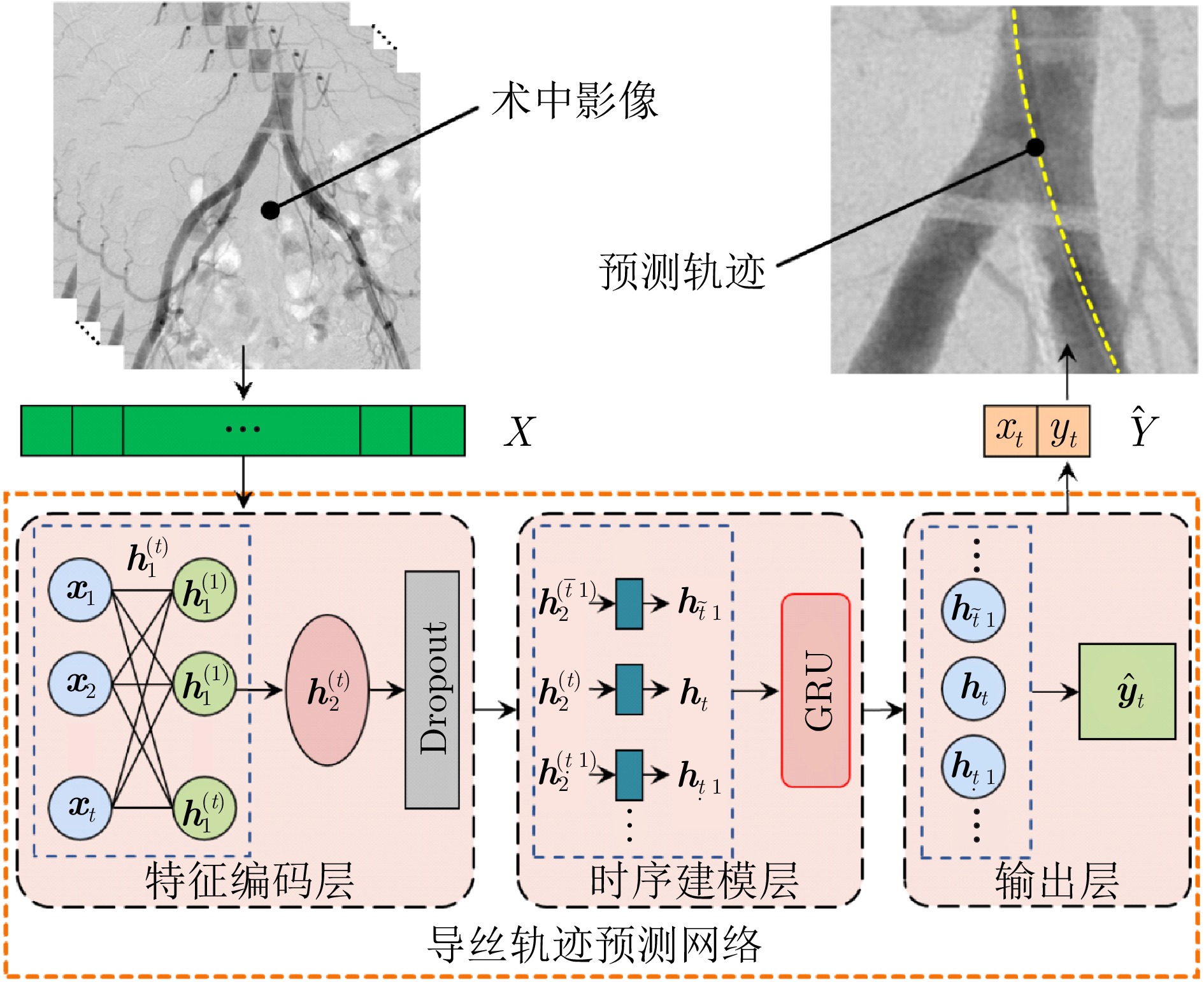

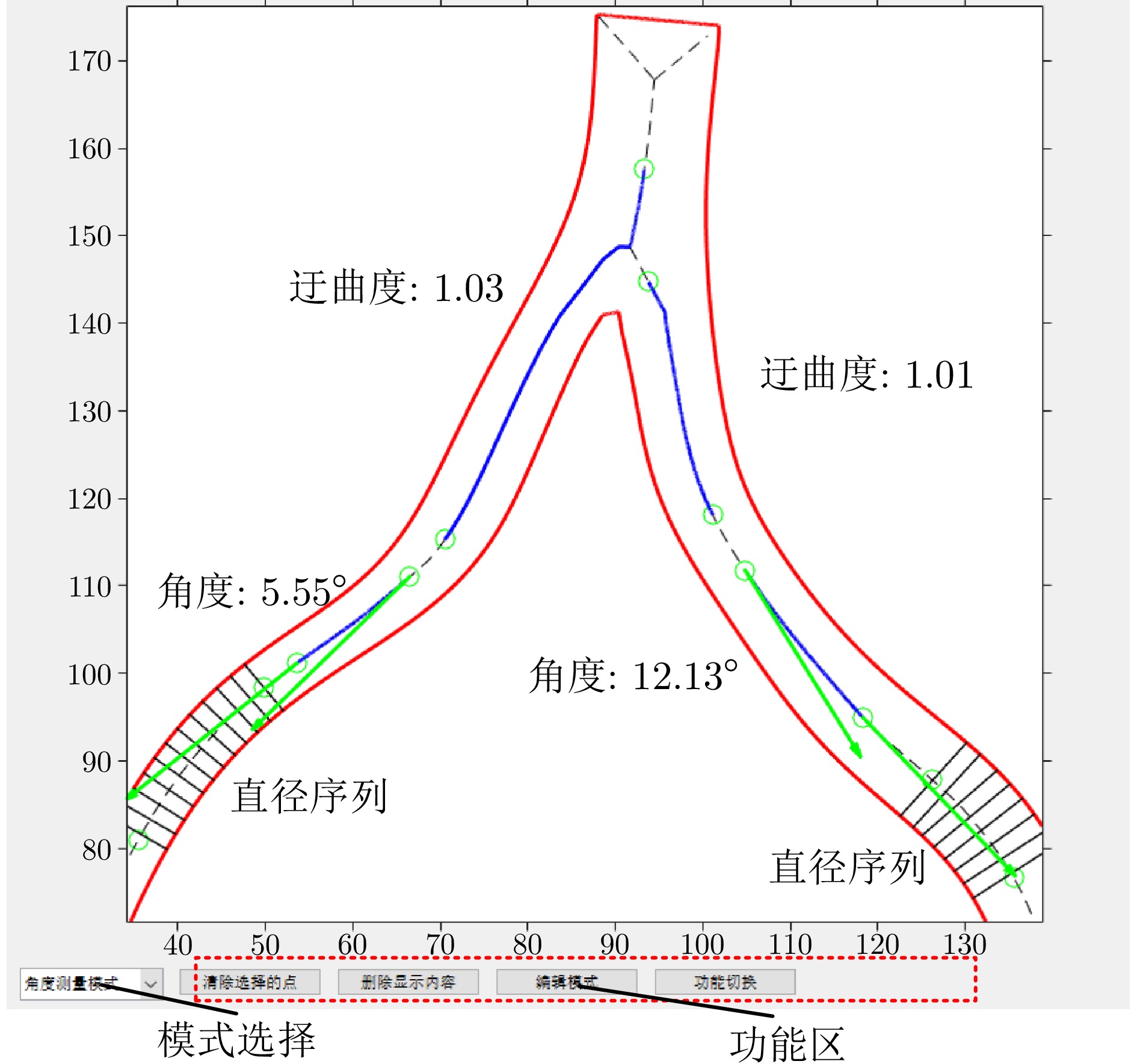

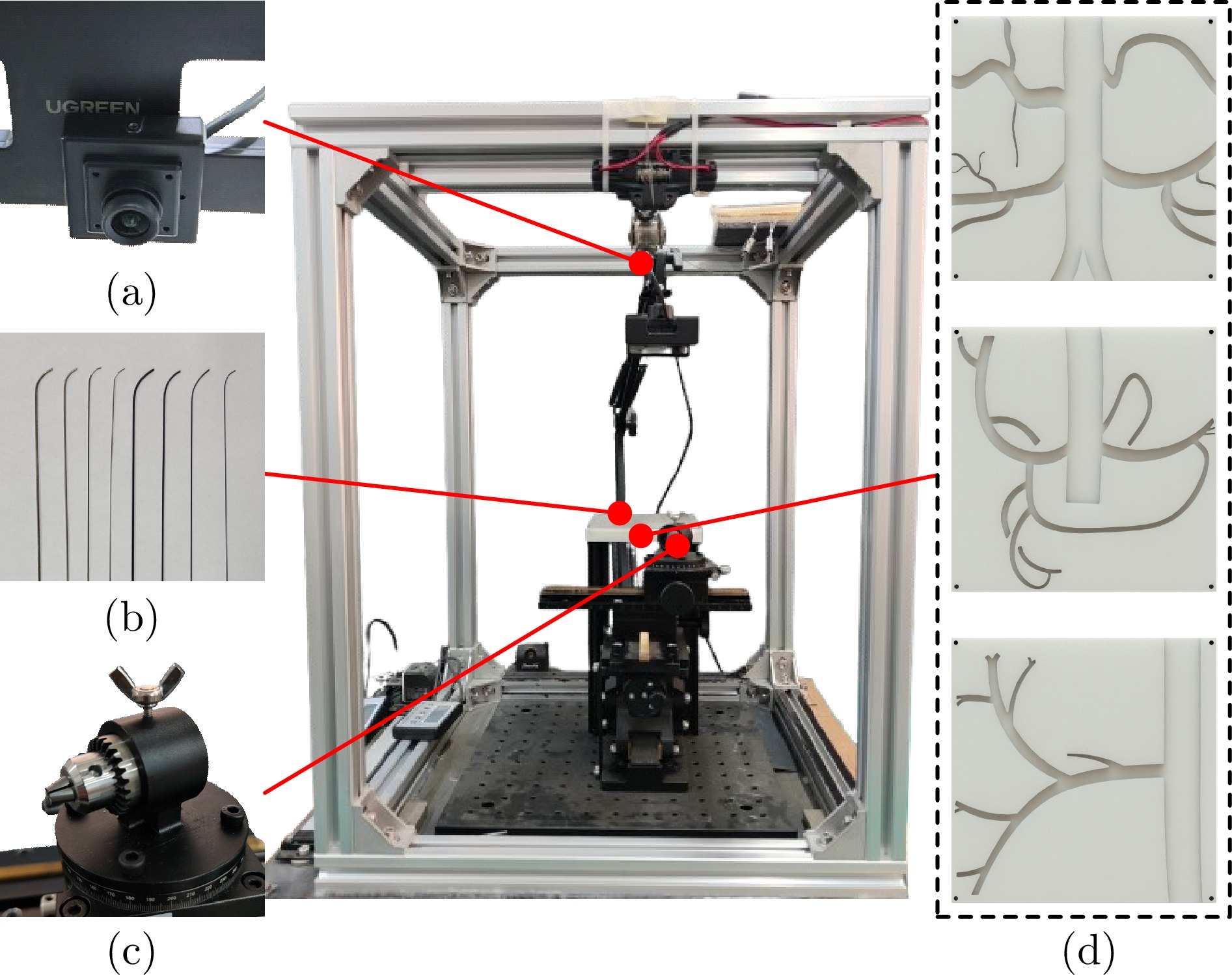

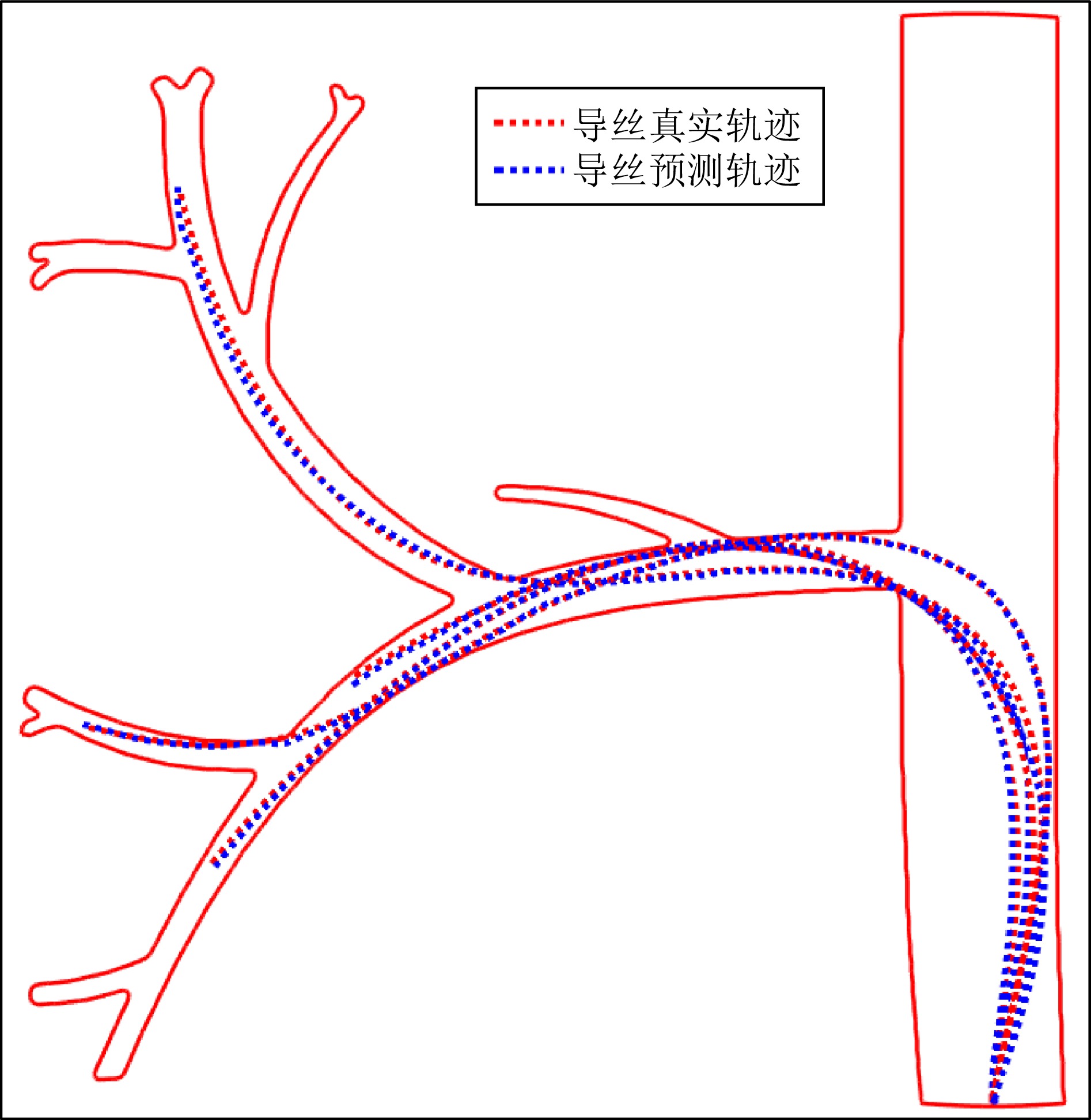

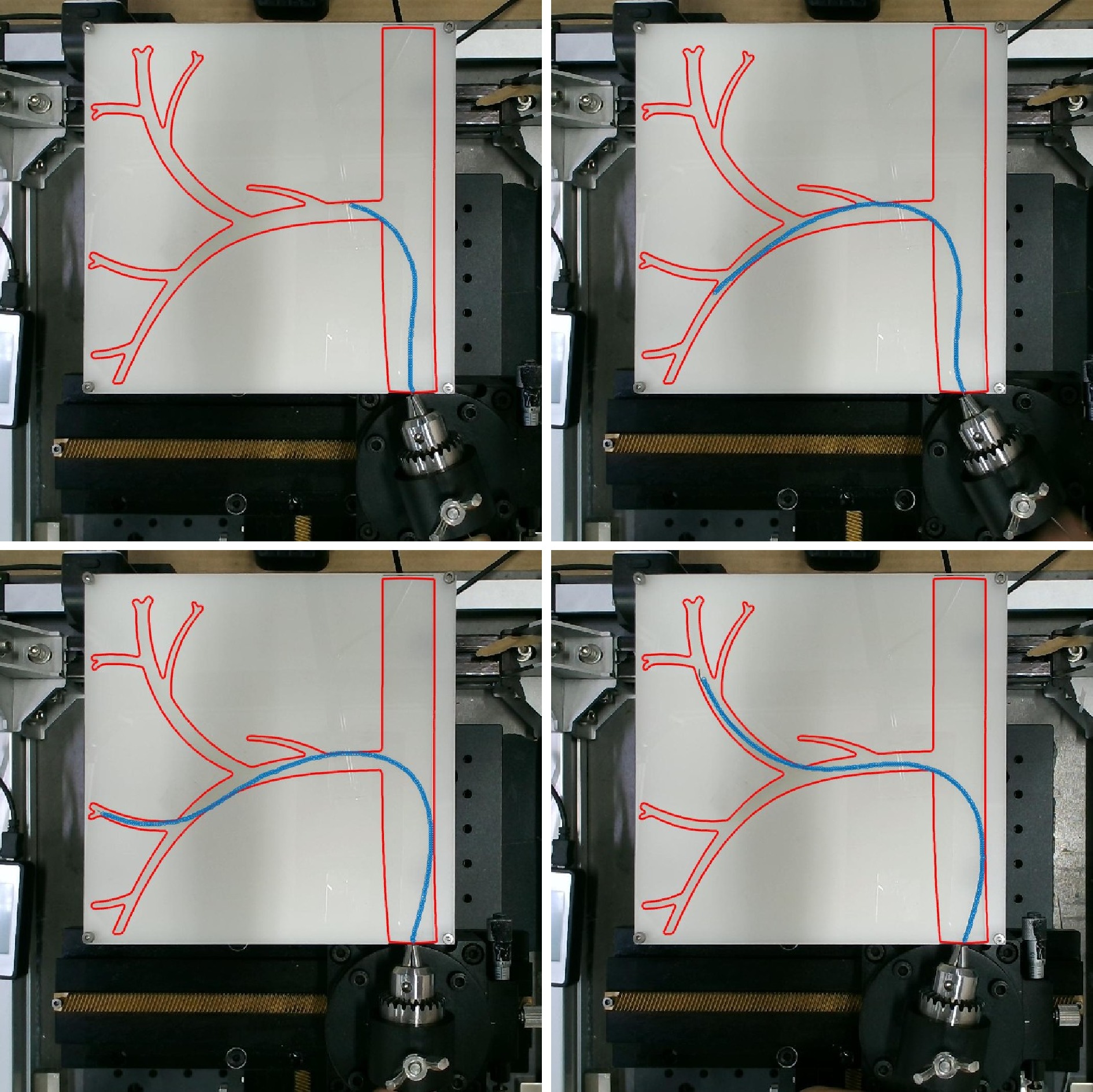

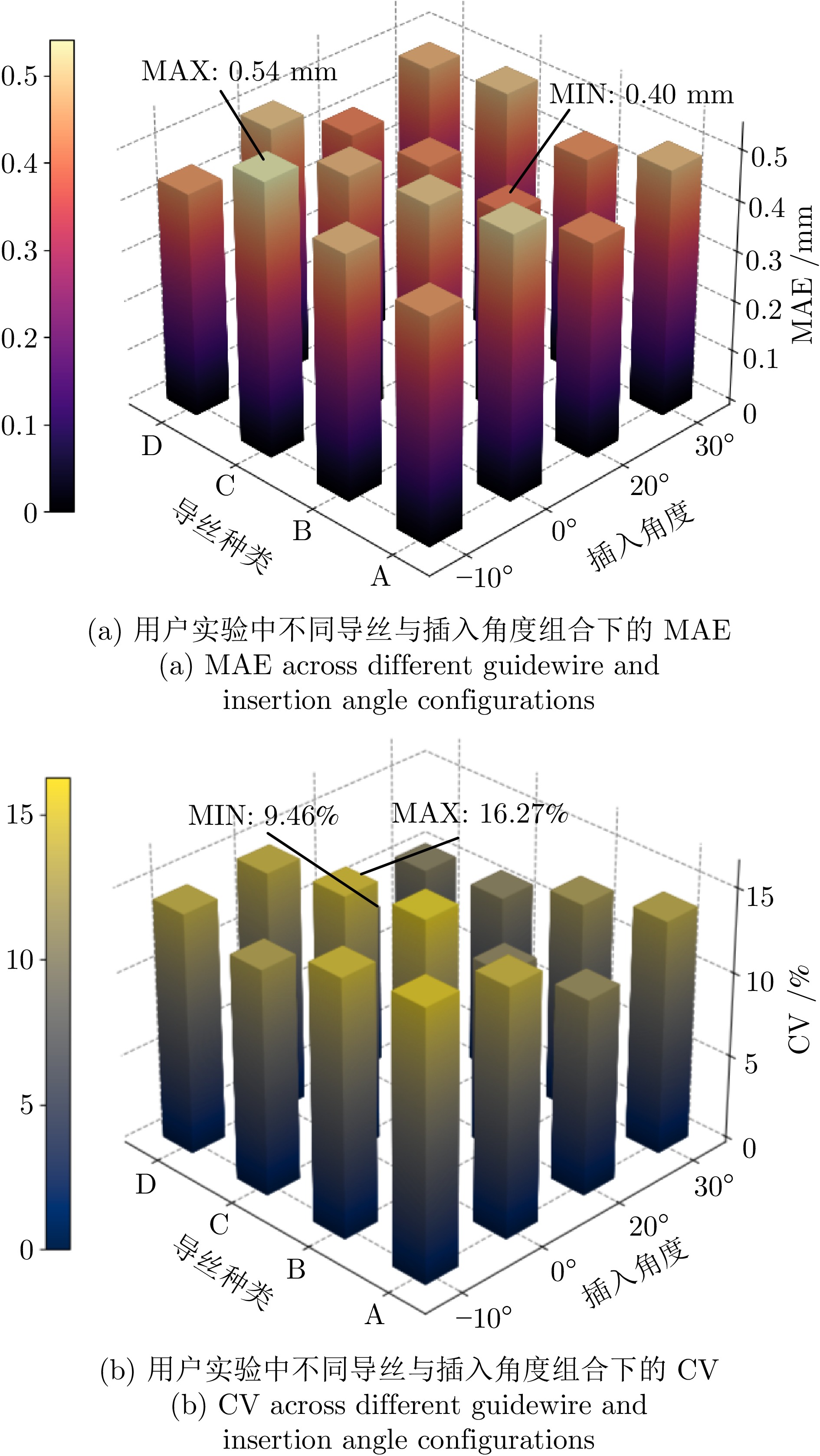

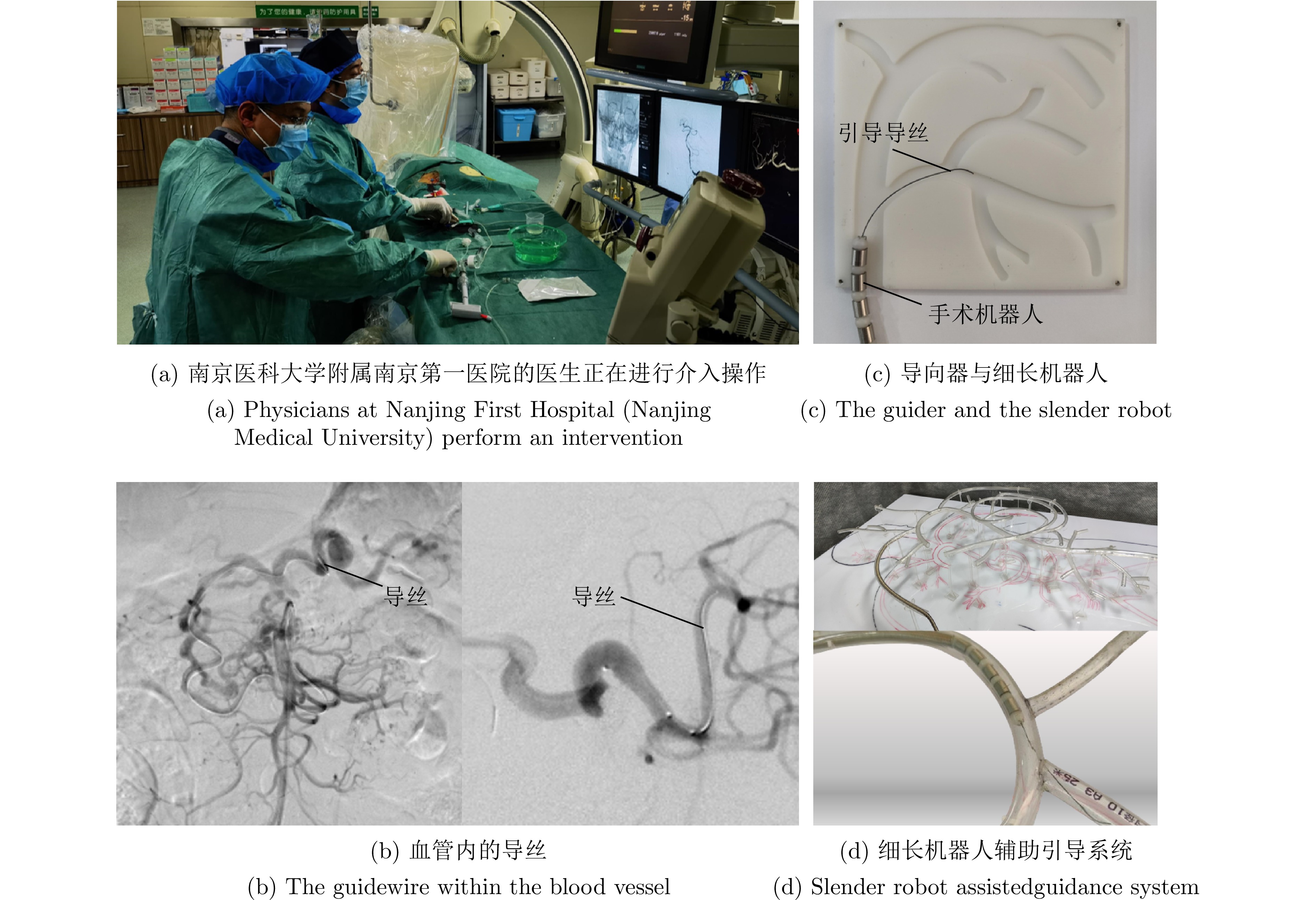

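Abstract (translated from the Chinese original): In interventional surgery, predicting the overall trajectory of the guidewire is critical to navigation safety. We propose a causal temporal modeling framework based on the gated recurrent unit (GRU). Sequence-level constants drawn from the guidewire's physical properties, the morphological features of the vessel contact region, and the operating-environment parameters (stiffness, insertion angle, friction coefficient, and so on) are broadcast across time steps, concatenated with dynamic geometric quantities (centerline coordinates, diameter, and so on), passed through a two-layer feature encoder, and fed to a unidirectional GRU that regresses 2-D coordinates at each time step. For variable-length sequences, we propose a training mechanism based on a time-step length classification strategy, which improves convergence and adaptability without changing the network structure. Experiments show that, across multiple guidewire types and insertion angles, the model preserves causality while remaining accurate and real-time: the minimum error is 0.40 mm, the mean error 0.46 mm, and the maximum error 0.54 mm. Compared with a baseline that does not use the classification strategy, epochs to convergence fall by 42%, training time by 52%, and single-inference latency by 51%. This study provides a deployable algorithmic basis for guidewire trajectory modeling and intraoperative navigation in interventional robotics.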
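The feature fusion step described in the abstract (broadcasting sequence-level constants over time and concatenating them with the per-step geometry) can be illustrated with a minimal pure-Python sketch. The function name `fuse_features`, the dictionary keys, the feature ordering, and all numeric values are hypothetical and chosen only for illustration; the abstract does not specify them.

```python
from typing import Dict, List, Sequence


def fuse_features(
    constants: Dict[str, float],
    dynamic: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Repeat the sequence-level constants at every time step and
    append them to that step's dynamic features."""
    # Sort keys so the constant block has a fixed, reproducible order.
    const_vec = [constants[name] for name in sorted(constants)]
    return [list(step) + const_vec for step in dynamic]


# Hypothetical sequence-level constants (one value per whole sequence).
constants = {"stiffness": 0.8, "insertion_angle_deg": 30.0, "friction": 0.12}

# Hypothetical dynamic features per time step: centerline x, y, local diameter.
dynamic = [
    [0.0, 0.0, 3.1],
    [1.0, 0.2, 3.0],
    [2.0, 0.5, 2.8],
]

fused = fuse_features(constants, dynamic)
for row in fused:
    print(row)
```

Each fused row has the three dynamic values followed by the same three constants, so a downstream encoder sees the static context at every step without needing a separate input branch.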


Keywords:

- Interventional robotics

- Guidewire trajectory prediction

- Gated recurrent unit (GRU)

- Morphological feature fusion

- Temporal modeling

Abstract: We present a causality-preserving sequential estimator for guidewire trajectory reconstruction during interventional navigation. Unlike generic recurrent baselines, the proposed model time-broadcasts sequence-level constants (stiffness, insertion angle, and an effective friction descriptor) and concatenates them with dynamic geometric tokens (centerline coordinates and local diameter) before a two-stage feature encoder and a unidirectional GRU decoder that emits 2-D positions stepwise. To cope with variable sequence lengths, we adopt a length-bucketed curriculum with mask-based loss, which limits padding-induced gradients and improves efficiency without altering the network architecture. On a phantom platform covering multiple guidewire types and insertion angles, the method achieves a position error of 0.40 to 0.54 mm (mean 0.46 mm) while preserving strict causality; relative to a non-bucketed baseline, it reduces epochs-to-convergence by 42%, training time by 52%, and per-inference latency by 51%. These results indicate a deployable, real-time basis for guidewire trajectory estimation and intraoperative navigation.
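To make the idea of length bucketing with a masked loss concrete, the following pure-Python sketch groups sequences by length, pads within a group, and averages the squared position error over valid steps only. The bucket edge, the helper names, the toy trajectories, and the exact loss form (mean squared Euclidean error) are assumptions for illustration; the abstract does not state the actual bucket boundaries or loss.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def bucket_by_length(seqs: Sequence[Sequence[Point]],
                     edges: Sequence[int]) -> Dict[int, List[int]]:
    """Assign each sequence index to the first bucket whose edge is >= its
    length; sequences longer than every edge go to the last bucket."""
    buckets: Dict[int, List[int]] = {i: [] for i in range(len(edges) + 1)}
    for idx, seq in enumerate(seqs):
        b = next((i for i, e in enumerate(edges) if len(seq) <= e), len(edges))
        buckets[b].append(idx)
    return buckets


def pad(seqs: Sequence[Sequence[Point]], pad_value: Point = (0.0, 0.0)):
    """Pad to the longest sequence in the group; mask is 1 on real steps."""
    max_len = max(len(s) for s in seqs)
    padded = [list(s) + [pad_value] * (max_len - len(s)) for s in seqs]
    mask = [[1] * len(s) + [0] * (max_len - len(s)) for s in seqs]
    return padded, mask


def masked_mse(pred, target, mask) -> float:
    """Mean squared Euclidean error over valid (mask == 1) steps only."""
    total, count = 0.0, 0
    for p_seq, t_seq, m_seq in zip(pred, target, mask):
        for (px, py), (tx, ty), m in zip(p_seq, t_seq, m_seq):
            if m:
                total += (px - tx) ** 2 + (py - ty) ** 2
                count += 1
    return total / count if count else 0.0


# Toy 2-D trajectories with lengths 2, 3, 5, and 6 (hypothetical data).
seqs = [[(float(t), 0.0) for t in range(n)] for n in (2, 3, 5, 6)]
buckets = bucket_by_length(seqs, edges=[3])

# Padded slots when padding per bucket versus padding all sequences together.
per_bucket_padding = sum(
    sum(row.count(0) for row in pad([seqs[i] for i in idxs])[1])
    for idxs in buckets.values() if idxs
)
global_padding = sum(row.count(0) for row in pad(seqs)[1])

# Masked loss on the short bucket: every valid prediction is off by 1 in x,
# and the padded slot holds an arbitrary value that must not contribute.
target, mask = pad([seqs[i] for i in buckets[0]])
pred = [[(x + 1.0, y) for (x, y) in row] for row in target]
pred[0][2] = (9.0, 9.0)
loss = masked_mse(pred, target, mask)
print(buckets, per_bucket_padding, global_padding, loss)
```

In this toy case bucketing cuts the number of padded slots from 8 to 2, and the mask keeps the arbitrary value in the padded slot out of the loss.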

Table 1 Impact of the time-step classification strategy on model real-time performance

| Time-step classification strategy used | Epochs | Training time | Per-inference latency |
| --- | --- | --- | --- |
| Yes | 377 | 0.221 h | 11.571 ms |
| No | 653 | 0.457 h | 23.783 ms |
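The percentage reductions quoted in the abstract can be checked against the Table 1 values with a short calculation. The helper name `relative_reduction` and the dictionary keys are illustrative; the numbers are taken directly from the table.

```python
def relative_reduction(baseline: float, value: float) -> float:
    """Percentage by which `value` is lower than `baseline`."""
    return 100.0 * (baseline - value) / baseline


# (without strategy, with strategy), copied from Table 1.
table = {
    "epochs": (653, 377),
    "training_time_h": (0.457, 0.221),
    "latency_ms": (23.783, 11.571),
}

reductions = {
    name: round(relative_reduction(baseline, value))
    for name, (baseline, value) in table.items()
}
print(reductions)
```

Rounded to whole percentages, the results are 42, 52, and 51, which agree with the figures reported in the abstract.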

