Robot Adaptive Force Control Grasping Method Based on Bionic Mechanism of “Shape-Perception-Action”


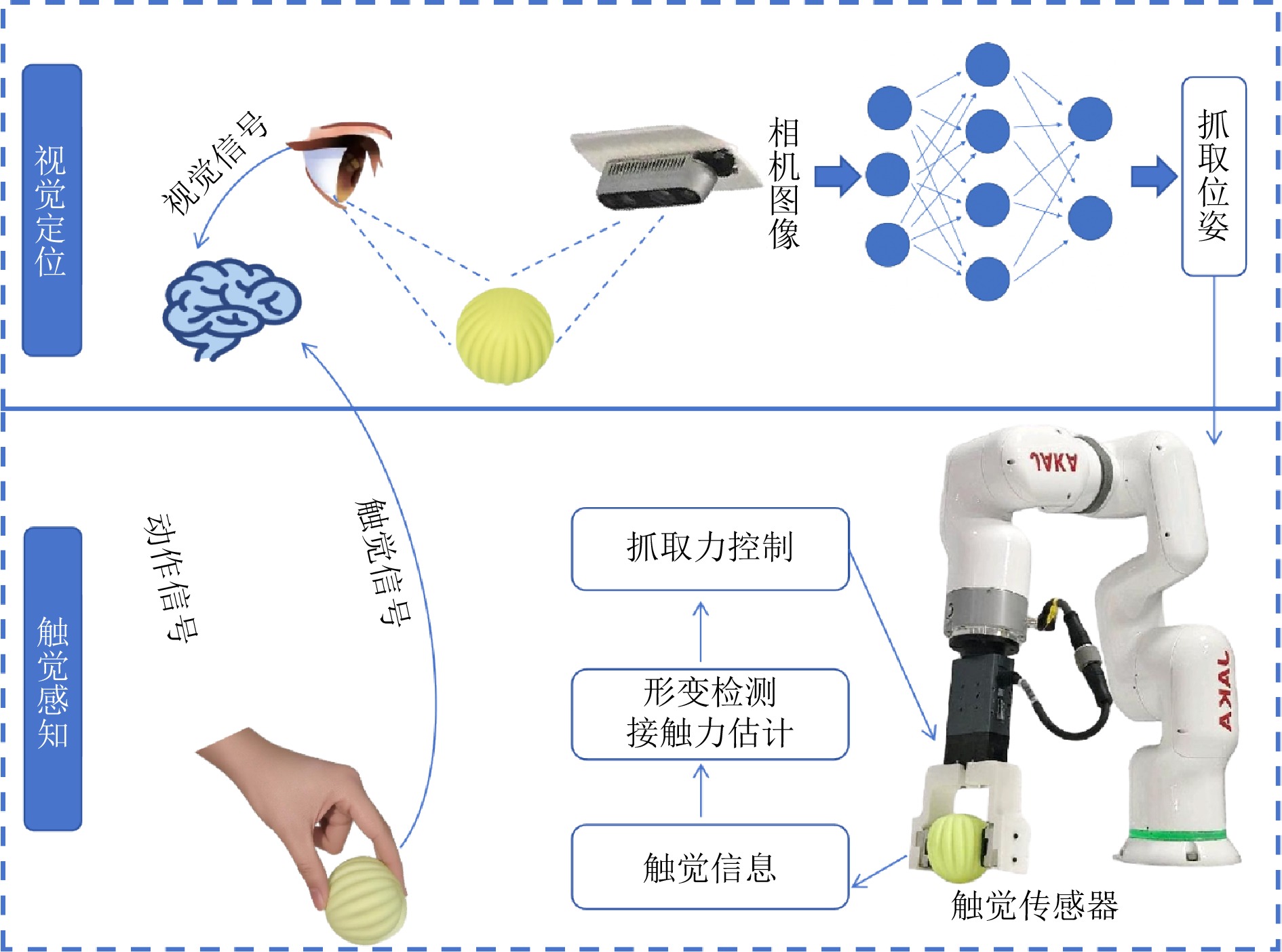

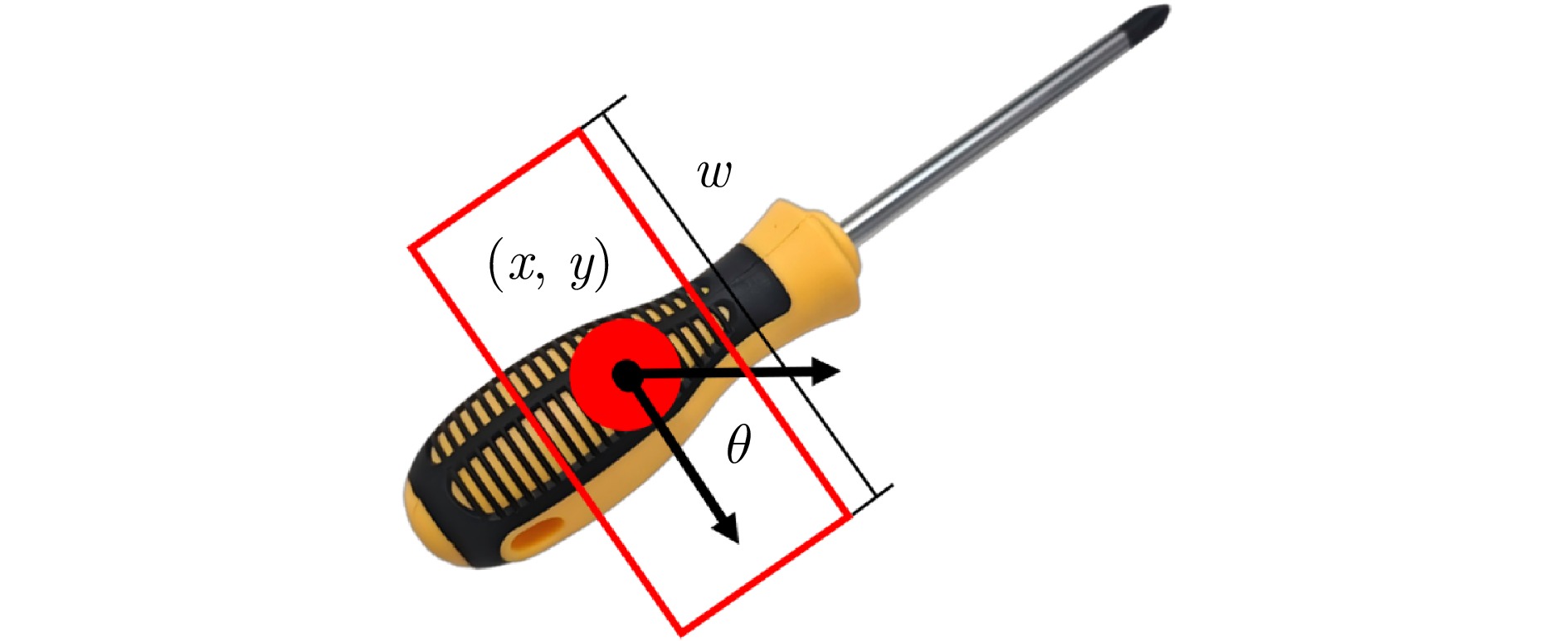

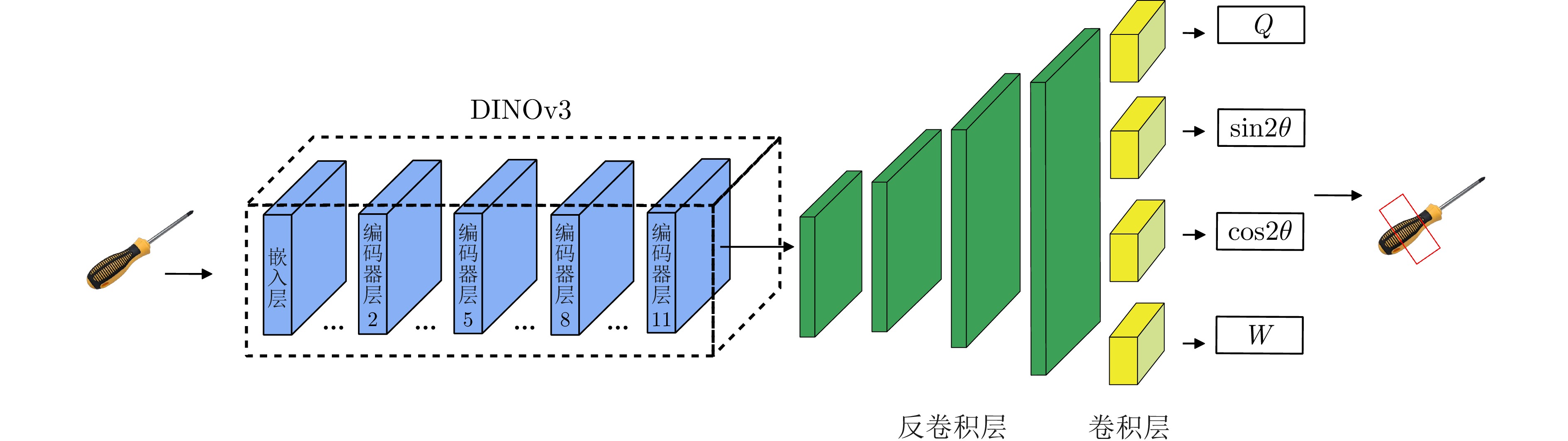

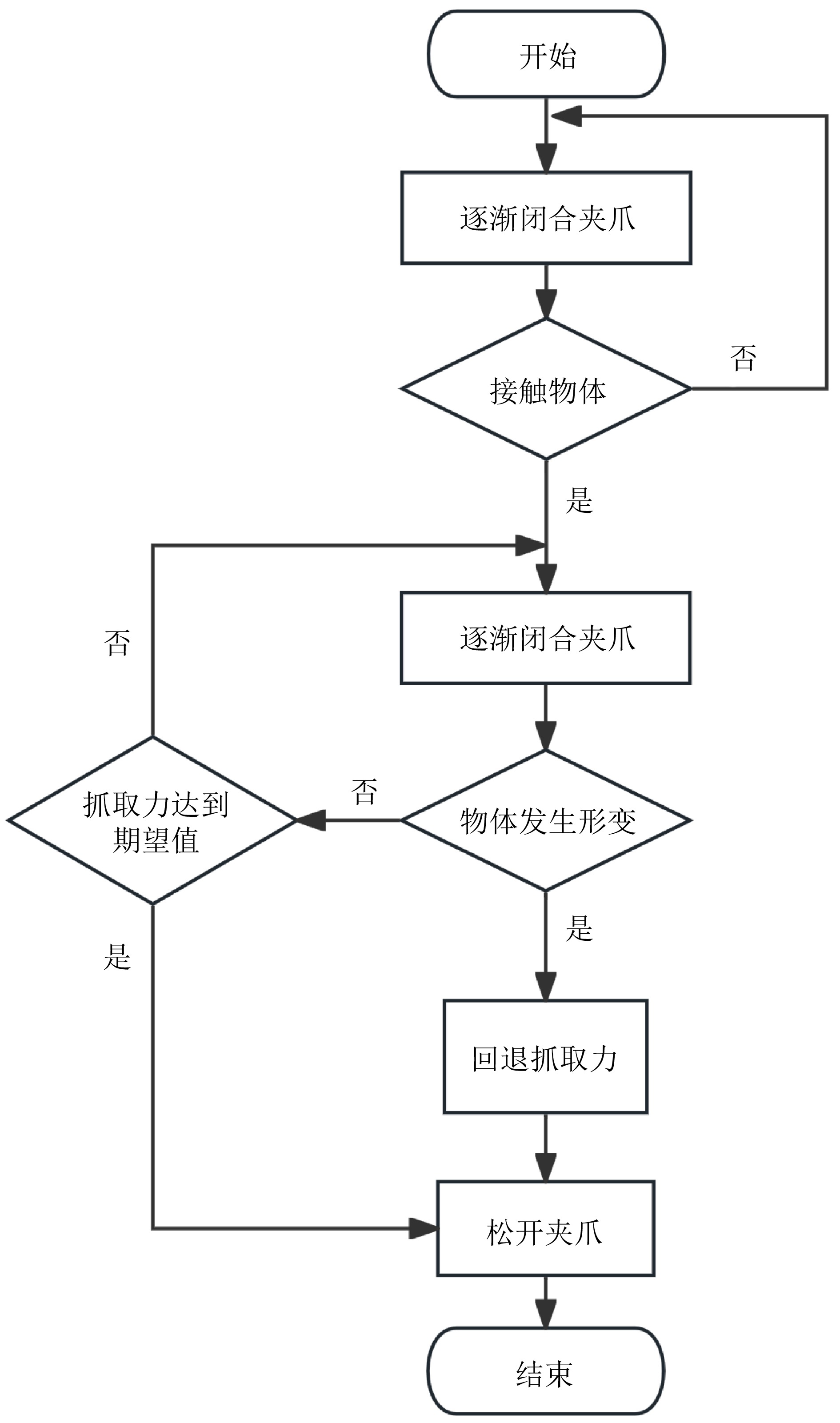

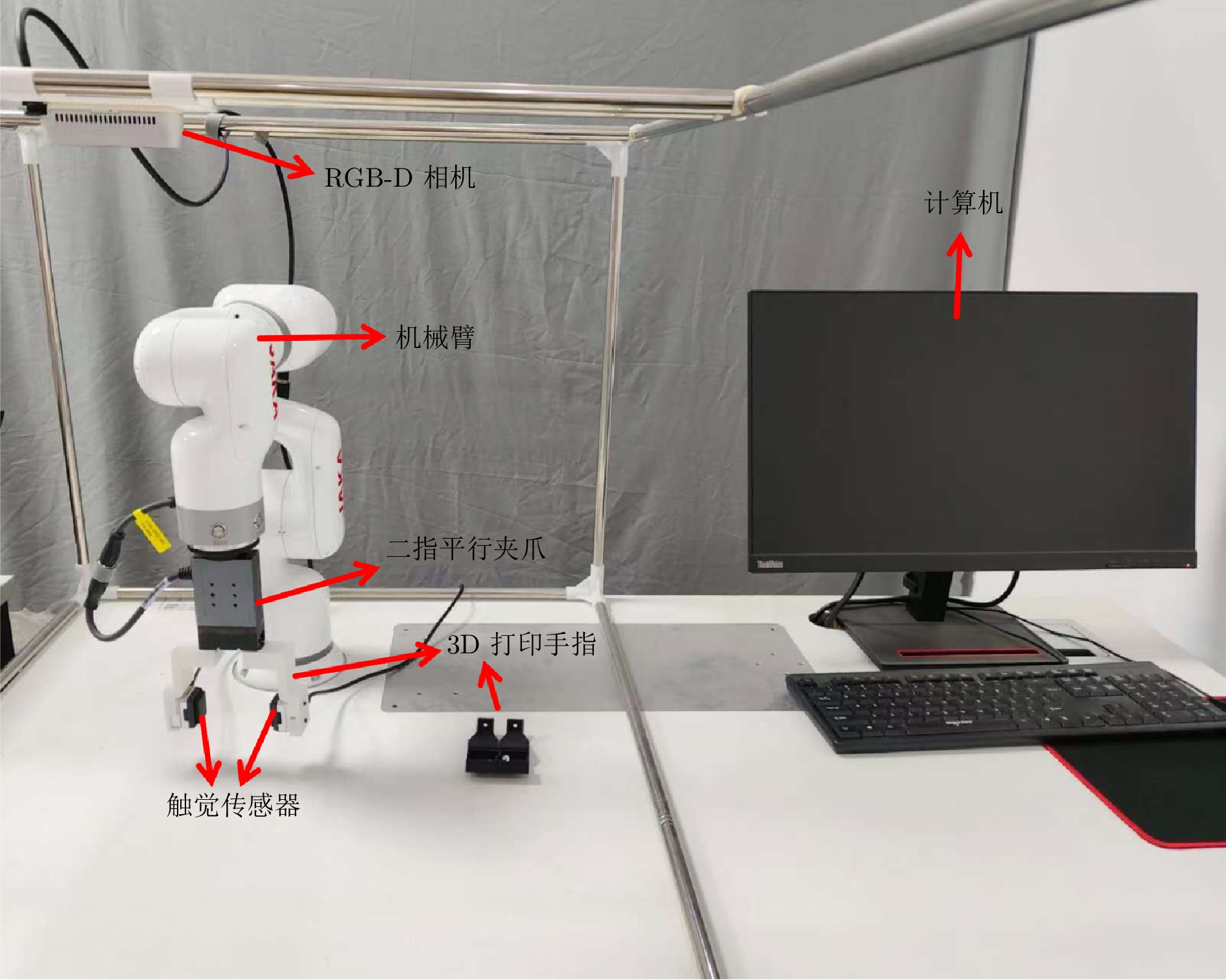

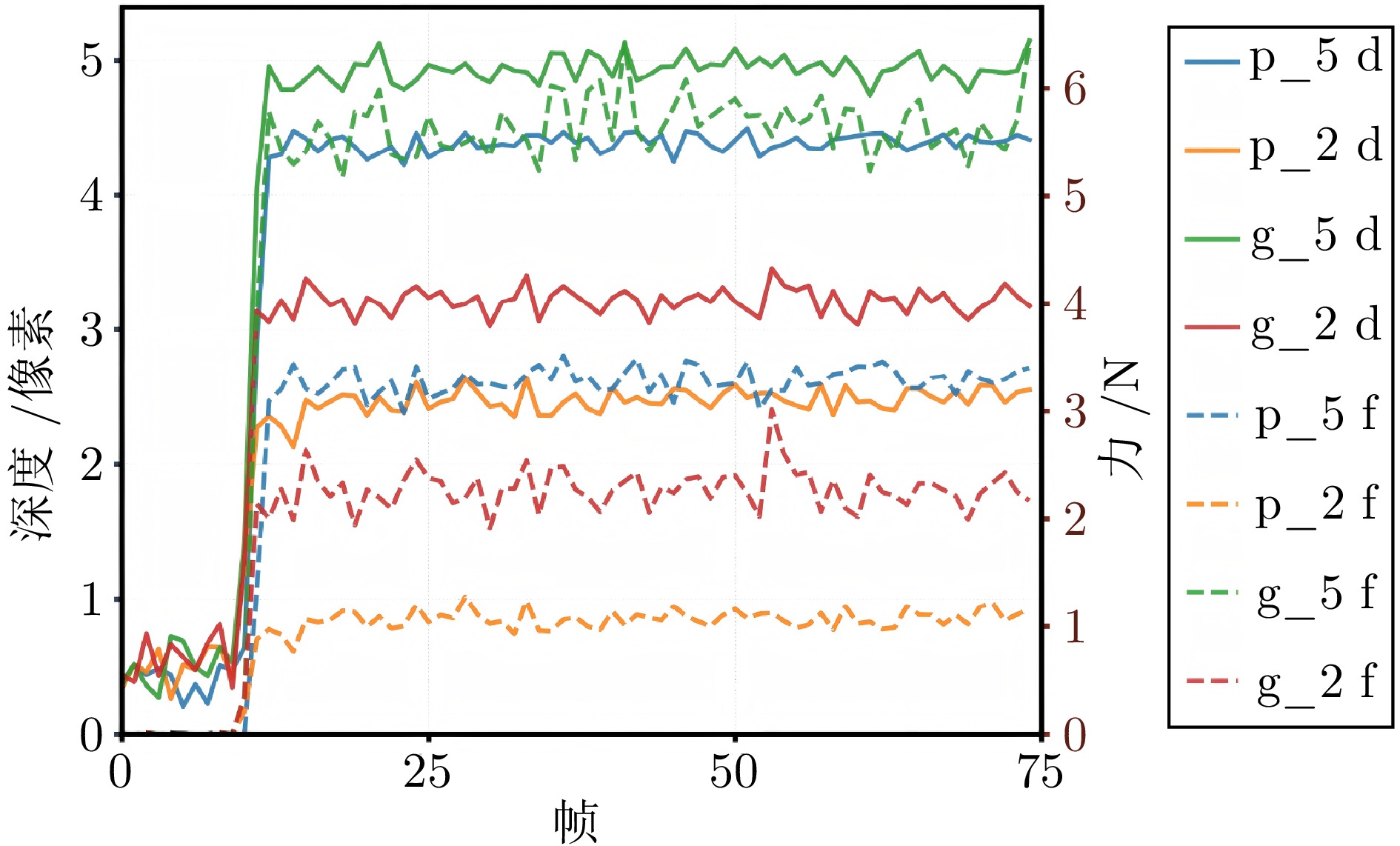

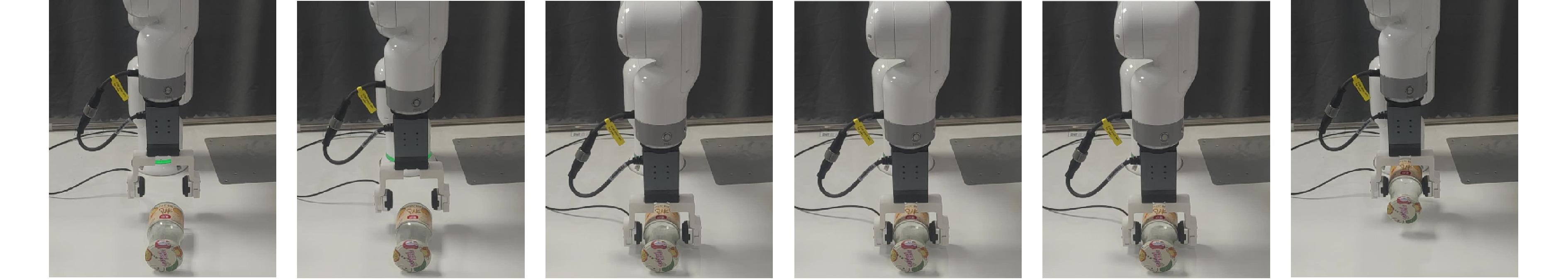

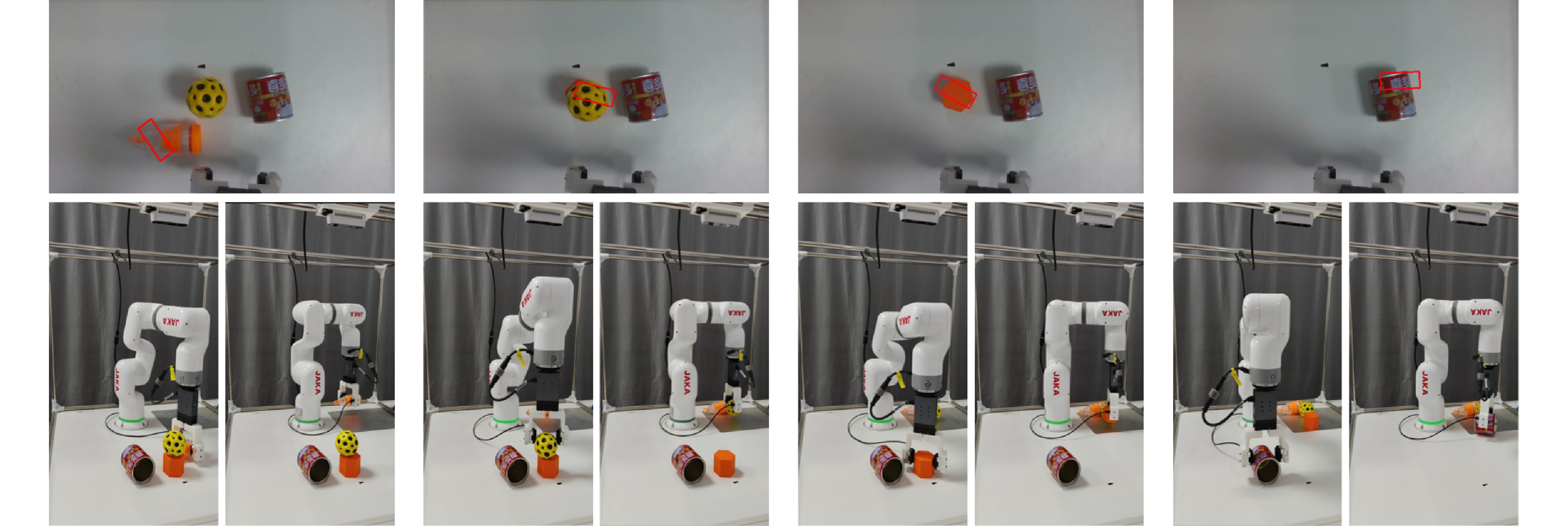

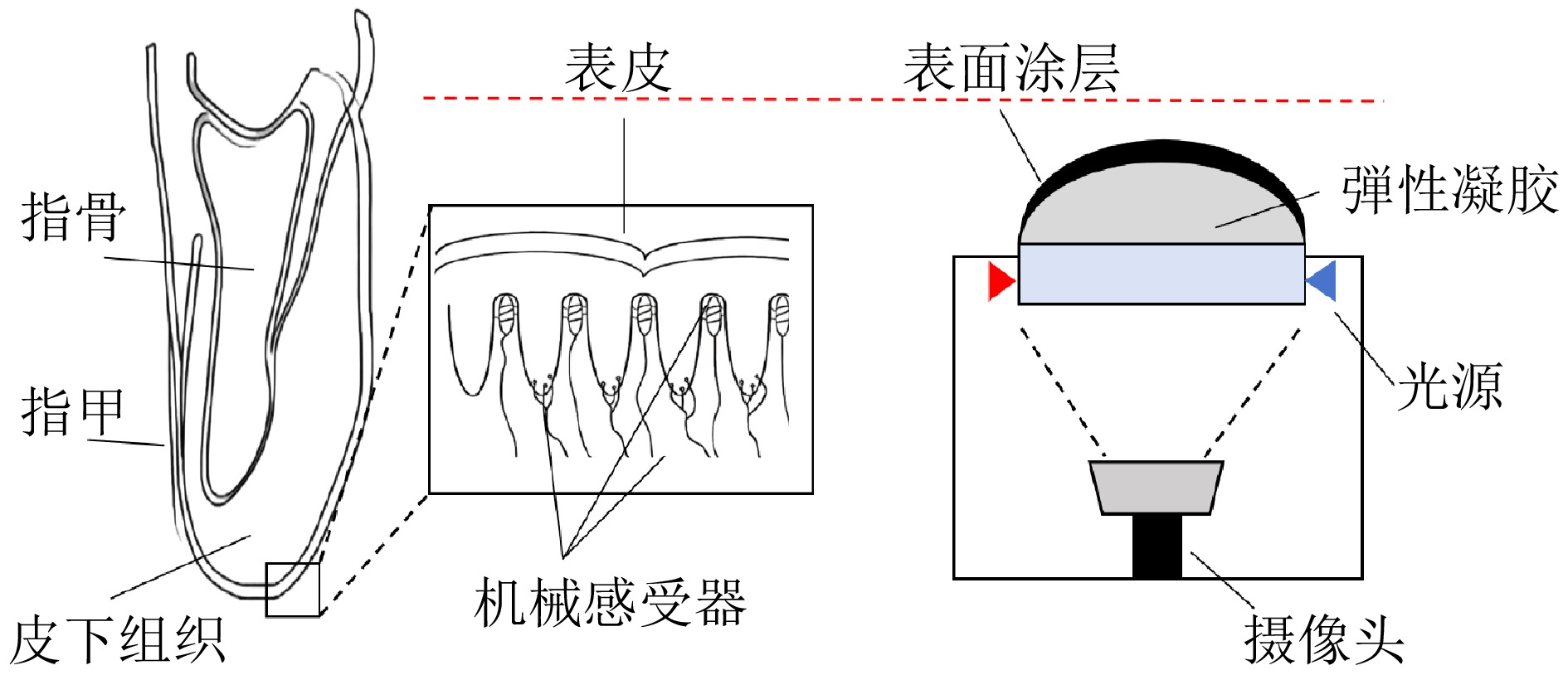

Abstract: As robot technology develops rapidly, the demand for fine-grained perception keeps growing. Existing robots, however, still struggle to match the dexterity of human manipulation. In fine grasping tasks, a constant-force grasping strategy has clear limitations: excessive force easily damages the object, while insufficient force leads to an unstable grasp. To address these problems, this paper proposes an adaptive force-control grasping method for robots based on the fusion of vision and touch. The method consists of a vision module, a tactile module, and a grasping strategy. The vision module predicts the grasp position on the target. During the contact stage, the tactile module uses a visual-tactile sensor to recover the tactile depth and to estimate the contact area and normal force. Deformation is then judged from the maximum depth change rate and the inter-frame mean square error, which triggers the grasp-force adjustment strategy. This realizes a bionic feedback grasping mechanism of “gradual force increase–deformation detection–force back-off”. Experimental results show that the method raises the overall grasp success rate across a variety of everyday objects from 87.50% to 98.75% and achieves zero damage when grasping fragile objects.
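The deformation check named in the abstract (maximum depth change rate plus inter-frame mean square error) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the threshold parameters, the frame interval `dt`, and the choice to flag deformation when either indicator exceeds its threshold are all assumptions, since the abstract does not state how the two indicators are combined.

```python
from typing import List

# One tactile depth map as rows of depth values (units are an assumption).
Depth = List[List[float]]


def max_depth_change_rate(prev: Depth, curr: Depth, dt: float) -> float:
    """Change of the maximum depth between two consecutive frames, per unit time."""
    prev_max = max(max(row) for row in prev)
    curr_max = max(max(row) for row in curr)
    return (curr_max - prev_max) / dt


def interframe_mse(prev: Depth, curr: Depth) -> float:
    """Mean squared difference between two depth maps of the same size."""
    total = 0.0
    count = 0
    for prev_row, curr_row in zip(prev, curr):
        for p, c in zip(prev_row, curr_row):
            total += (c - p) ** 2
            count += 1
    return total / count


def deformation_detected(prev: Depth, curr: Depth, dt: float,
                         rate_threshold: float, mse_threshold: float) -> bool:
    """Hypothetical rule: flag deformation if either indicator exceeds its threshold."""
    rate = abs(max_depth_change_rate(prev, curr, dt))
    return rate > rate_threshold or interframe_mse(prev, curr) > mse_threshold
```

In a closed loop, a positive result from `deformation_detected` would be the event that triggers the force back-off step; suitable threshold values would have to be tuned for the sensor in use.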

Key words:

- robot grasping

- visual-tactile sensor

- multimodal fusion

- grasping force

Table 1 Grasp process state table

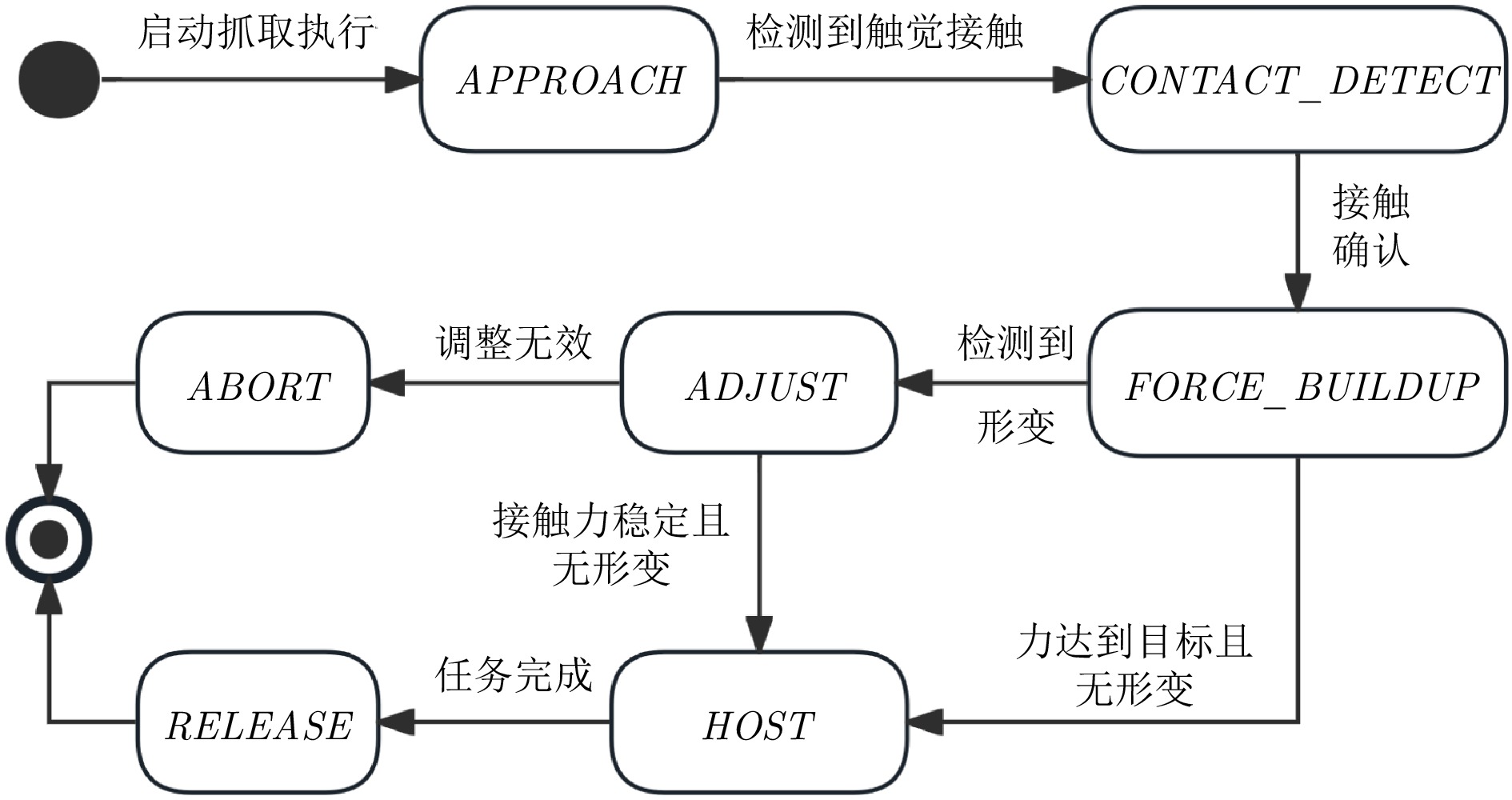

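| State | Description |
| --- | --- |
| $\boldsymbol{APPROACH}$ | The robot arm approaches the target pose following the vision-based plan. |
| $\boldsymbol{CONTACT\_DETECT}$ | The gripper closes gradually until the tactile sensor detects contact. |
| $\boldsymbol{FORCE\_BUILDUP}$ | The gripper keeps closing gradually while the grasp force $F_{est}$ and the deformation indicators are estimated in real time. |
| $\boldsymbol{ADJUST}$ | If deformation or a force anomaly is detected, the force is adjusted (reduced or held) and, if necessary, the grasp pose is adjusted or the gripper is withdrawn. |
| $\boldsymbol{HOLD}$ | Once a stable grasp is reached ($F_{est}\approx F_d$ and no deformation), the system enters the hold state and carries out the transport task. |
| $\boldsymbol{RELEASE/ABORT}$ | The grasp is released or abandoned when the task is complete or an exception occurs. |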
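The states in Table 1 can be read as a small finite-state machine. The sketch below is one possible encoding under stated assumptions: the boolean event flags, the tolerance `tol` used for $F_{est}\approx F_d$, and the transition out of ADJUST (to HOLD once no deformation or force anomaly remains) are hypothetical, because the table describes the states but not every transition.

```python
from enum import Enum, auto


class GraspState(Enum):
    APPROACH = auto()
    CONTACT_DETECT = auto()
    FORCE_BUILDUP = auto()
    ADJUST = auto()
    HOLD = auto()
    RELEASE_ABORT = auto()


def next_state(state, *, at_target=False, contact=False, deformation=False,
               force_anomaly=False, f_est=0.0, f_d=0.0, tol=0.1,
               task_done=False, fault=False):
    """Return the next state given the current state and sensed events."""
    # Any exception, or already being in the terminal state, ends the grasp.
    if fault or state is GraspState.RELEASE_ABORT:
        return GraspState.RELEASE_ABORT
    if state is GraspState.APPROACH:
        return GraspState.CONTACT_DETECT if at_target else state
    if state is GraspState.CONTACT_DETECT:
        return GraspState.FORCE_BUILDUP if contact else state
    if state is GraspState.FORCE_BUILDUP:
        if deformation or force_anomaly:
            return GraspState.ADJUST
        # Stable grasp: estimated force close to the desired force (tol is assumed).
        if abs(f_est - f_d) <= tol:
            return GraspState.HOLD
        return state
    if state is GraspState.ADJUST:
        # Assumption: keep adjusting while the problem persists, then hold.
        if deformation or force_anomaly:
            return state
        return GraspState.HOLD
    # Remaining case is HOLD: stay until the transport task is finished.
    return GraspState.RELEASE_ABORT if task_done else state
```

For example, `next_state(GraspState.FORCE_BUILDUP, deformation=True)` moves to `GraspState.ADJUST`, which corresponds to the force back-off step of the grasping strategy.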

Table 2 Performance comparison of vision module under different datasets (%)

| Metric | Cornell | RC_49 | Jacquard |
| --- | --- | --- | --- |
| J@1 | 93.26 | 90.32 | 87.10 |
| J@5 | 96.77 | 93.54 | 93.55 |

Table 3 Eight different objects used in the experiment

Geometrically regular; geometrically irregular

Paper cup, plastic bottle, can, bouncy ball

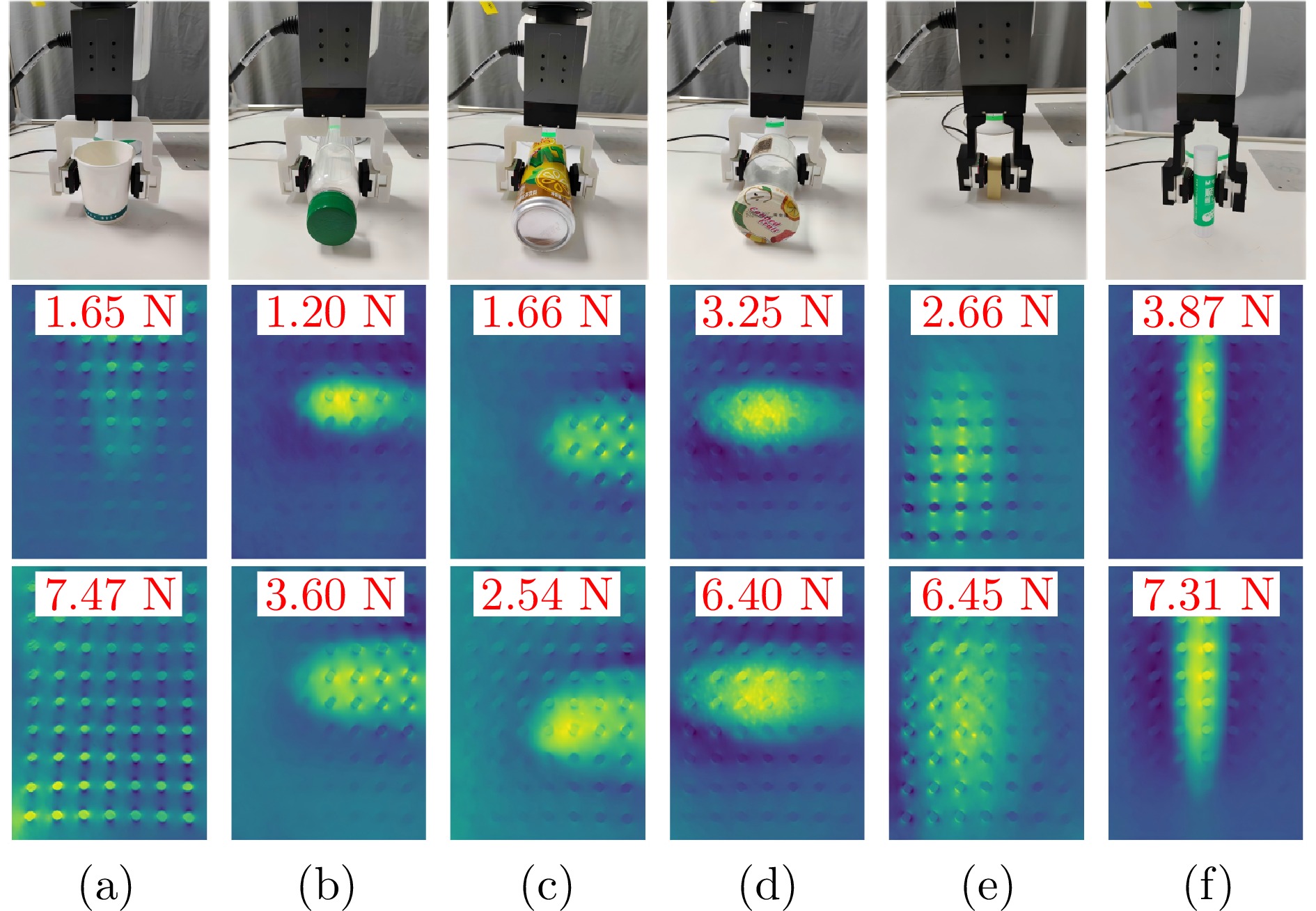

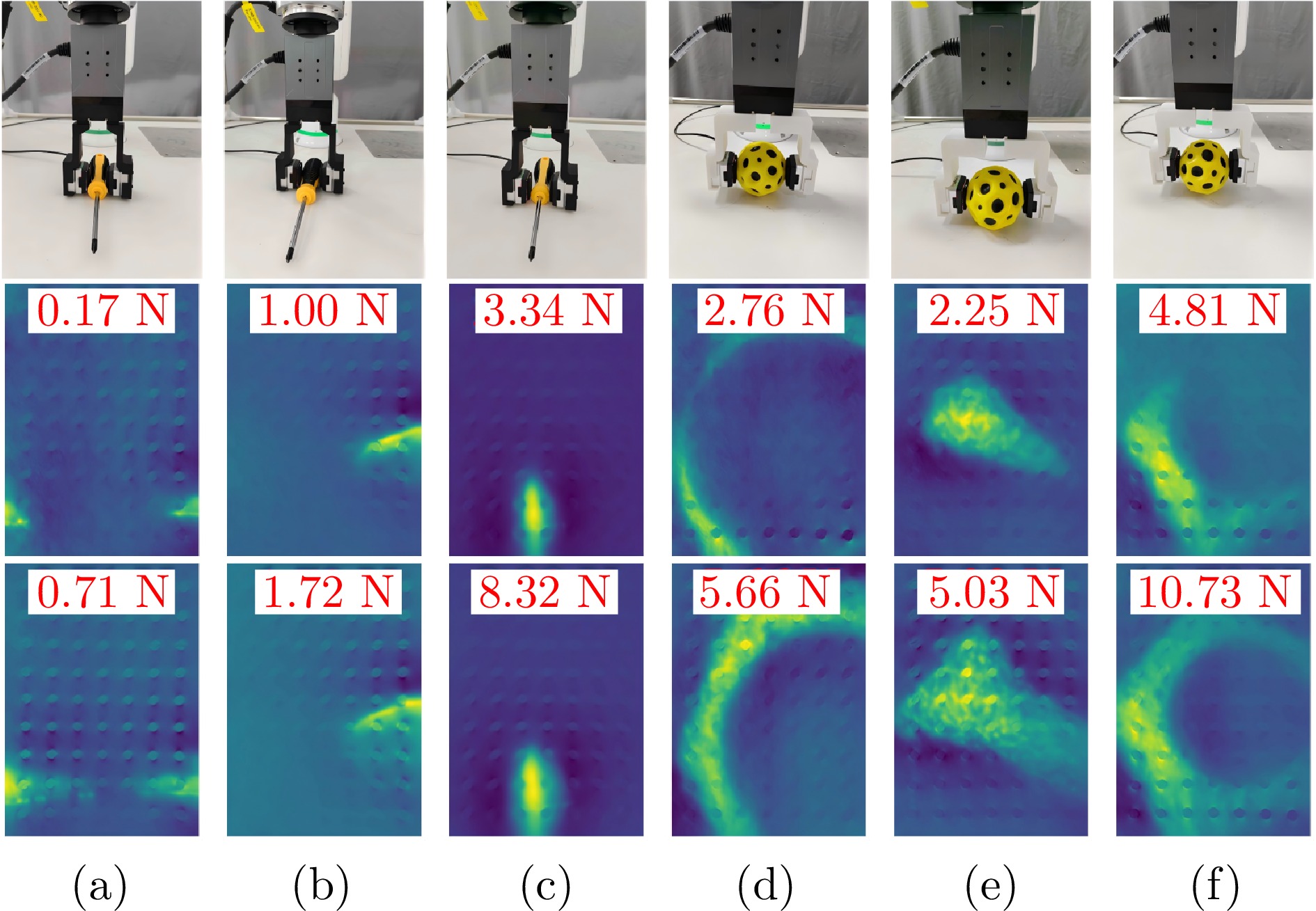

Glass bottle, eraser, glue stick, screwdriver

Table 4 Maximum tactile depth value and contact area of grasping geometrically regular objects

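| | (a) | (b) | (c) | (d) | (e) | (f) |
| --- | --- | --- | --- | --- | --- | --- |
| $d\_2$ | 1.11 | 1.30 | 1.21 | 1.53 | 1.21 | 1.53 |
| $d\_5$ | 1.17 | 2.04 | 1.50 | 2.14 | 1.37 | 2.14 |
| $area\_2$ | 11612 | 7234 | 10718 | 16573 | 17123 | 14842 |
| $area\_5$ | 49591 | 13789 | 13195 | 23268 | 36630 | 19572 |

Note: The columns correspond to the tactile images in Fig. 8. In “$d/area\_2/5$”, $d$ denotes the mean depth of the effective contact region, $area$ denotes the contact area (expressed as the number of pixels whose depth value exceeds the threshold), and 2/5 denotes the grasping force (unit: N).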
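The two quantities reported in the table note, contact area as a count of pixels whose depth exceeds a threshold and $d$ as the mean depth over that region, can be computed as in the sketch below. The function name, the nested-list depth map, and the example threshold are assumptions for illustration; the source does not give the threshold value or the depth units.

```python
from typing import List, Tuple


def contact_stats(depth: List[List[float]], threshold: float) -> Tuple[int, float]:
    """Return (contact area in pixels, mean depth over the contact region)."""
    # Keep only pixels whose depth exceeds the threshold (the effective contact region).
    contact = [value for row in depth for value in row if value > threshold]
    area = len(contact)
    mean_depth = sum(contact) / area if area else 0.0
    return area, mean_depth


# Tiny made-up depth map; real tactile depth maps are full sensor images.
example = [
    [0.0, 0.2, 1.0],
    [0.05, 1.4, 0.0],
]
area, mean_depth = contact_stats(example, threshold=0.1)
```

With this made-up map and a threshold of 0.1, three pixels count as contact and their mean depth is about 0.87.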

Table 5 Maximum tactile depth value and contact area of grasping geometrically irregular objects

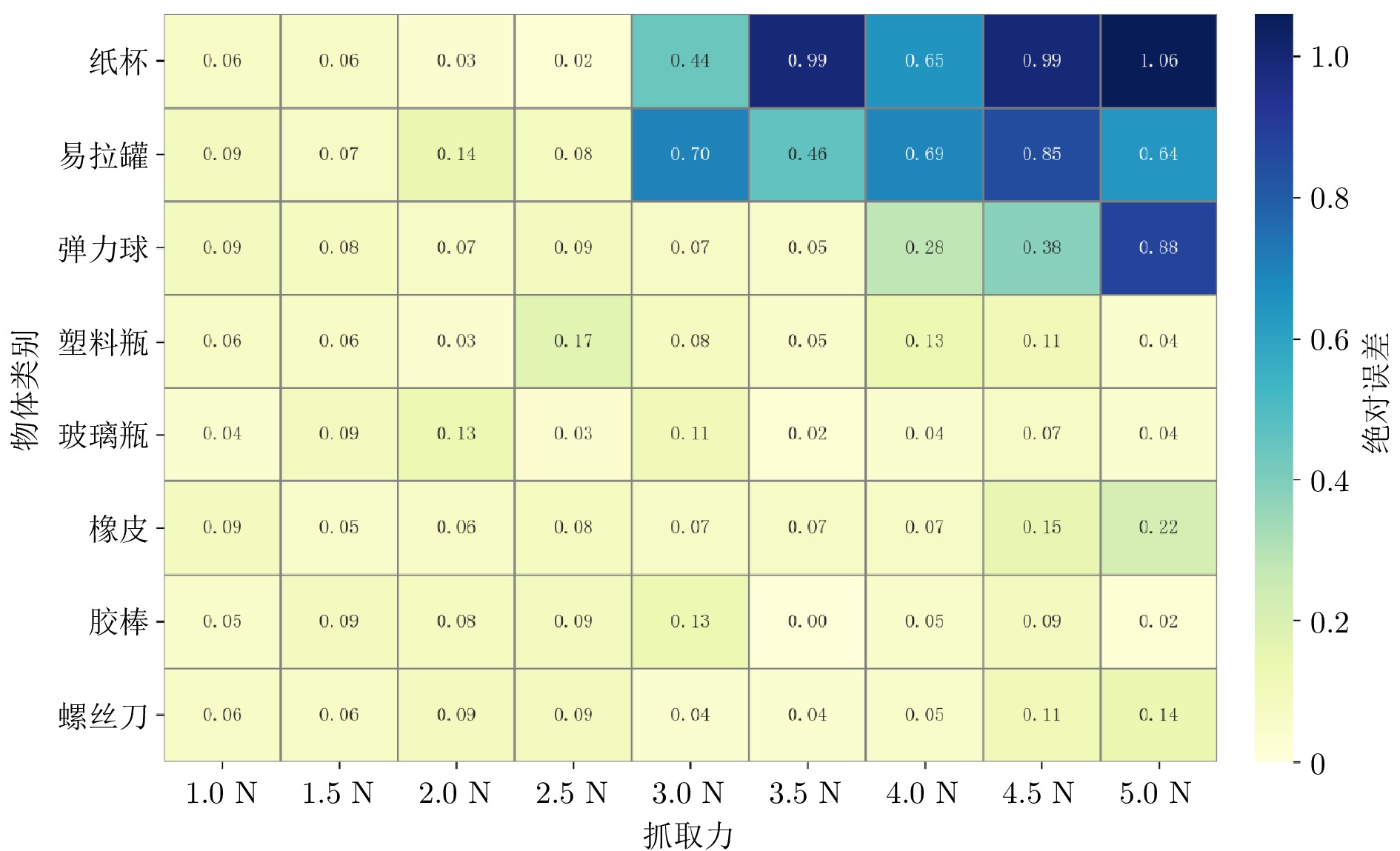

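| | (a) | (b) | (c) | (d) | (e) | (f) |
| --- | --- | --- | --- | --- | --- | --- |
| $d\_2$ | 1.00 | 1.50 | 3.86 | 0.91 | 1.65 | 1.04 |
| $d\_5$ | 1.34 | 1.65 | 2.53 | 1.71 | 1.74 | 1.45 |
| $area\_2$ | 1366 | 5208 | 6734 | 23658 | 10629 | 36063 |
| $area\_5$ | 4105 | 8121 | 25567 | 25840 | 22529 | 57648 |

Note: The columns correspond to the tactile images in Fig. 10. In “$d/area\_2/5$”, $d$ denotes the mean depth of the effective contact region, $area$ denotes the contact area (expressed as the number of pixels whose depth value exceeds the threshold), and 2/5 denotes the grasping force (unit: N).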

Table 6 Comparison of experimental results between adaptive force control and fixed force grasping strategy

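| Object | Success rate (%), adaptive | Success rate (%), fixed | Applied force (N), adaptive | Applied force (N), fixed | Damage rate (%), adaptive | Damage rate (%), fixed | Slip rate (%), adaptive | Slip rate (%), fixed | Vision-module prediction failure rate (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Eraser | 100 | 100 | 4 | 4 | 0 | 0 | 0 | 0 | 30 |
| Glue stick | 100 | 100 | 4 | 4 | 0 | 0 | 0 | 0 | 0 |
| Bouncy ball | 100 | 100 | 1 | 4 | 0 | 0 | 0 | 0 | 0 |
| Paper cup | 100 | 0 | 1 | – | 0 | 100 | 0 | 0 | 10 |
| Glass bottle | 100 | 100 | 4 | 4 | 0 | 0 | 0 | 0 | 10 |
| Plastic bottle | 100 | 100 | 2.58 | 4 | 0 | 0 | 0 | 0 | 10 |
| Can | 90 | 100 | 2.44 | 4 | 0 | 0 | 10 | 0 | 20 |
| Screwdriver | 100 | 100 | 4 | 4 | 0 | 0 | 0 | 0 | 10 |
| Total | 98.75 | 87.5 | – | – | 0 | 12.5 | 1.25 | 0 | 11.25 |

Note: “–” indicates that the fixed-force method failed completely on the paper cup, so no valid applied-force data were produced. “Adaptive” refers to the adaptive force-control grasping method, and “fixed” refers to grasping with the gripper's default force. Each object was grasped 10 times in every experiment, and results are reported as percentages.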
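The totals in Table 6 follow from the per-object rows: because every object has the same number of trials (10), each total is the plain average of the eight per-object percentages. The short check below reproduces them from the table values; the dictionary layout and the English object names are only an illustrative encoding.

```python
# Per-object percentages from Table 6, in the order:
# (success adaptive, success fixed, damage adaptive, damage fixed,
#  slip adaptive, slip fixed, vision-module prediction failure)
TABLE_6 = {
    "eraser":         (100, 100, 0,   0, 0,  0, 30),
    "glue stick":     (100, 100, 0,   0, 0,  0,  0),
    "bouncy ball":    (100, 100, 0,   0, 0,  0,  0),
    "paper cup":      (100,   0, 0, 100, 0,  0, 10),
    "glass bottle":   (100, 100, 0,   0, 0,  0, 10),
    "plastic bottle": (100, 100, 0,   0, 0,  0, 10),
    "can":            ( 90, 100, 0,   0, 10, 0, 20),
    "screwdriver":    (100, 100, 0,   0, 0,  0, 10),
}


def column_means(rows):
    """Average each column over all objects (equal trial counts per object)."""
    n = len(rows)
    return tuple(sum(column) / n for column in zip(*rows.values()))


totals = column_means(TABLE_6)
print(totals)  # (98.75, 87.5, 0.0, 12.5, 1.25, 0.0, 11.25)
```

The 11.25-point gap between the two overall success rates comes almost entirely from the paper cup, where the fixed-force strategy damaged the object in every trial.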

