Survey on Adversarial Attack and Defense Methods for Deep Learning Models in Source Code Processing Tasks

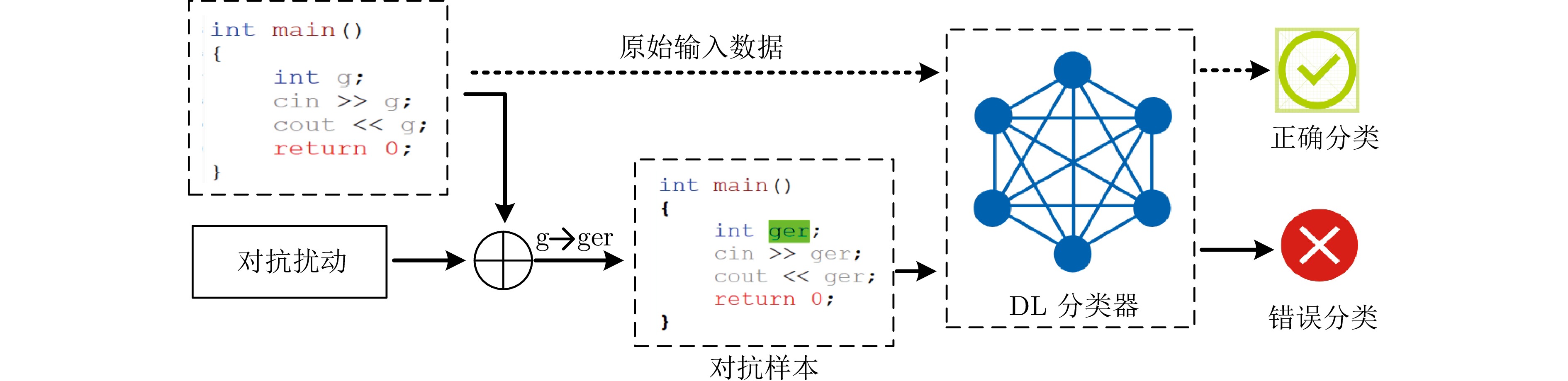

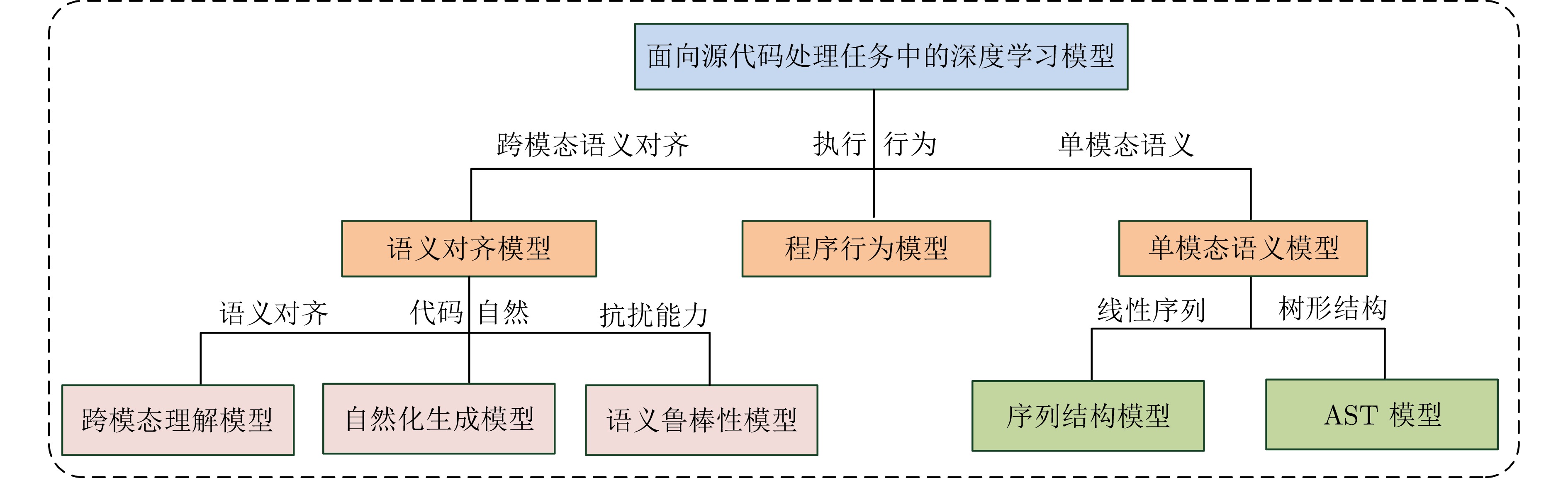

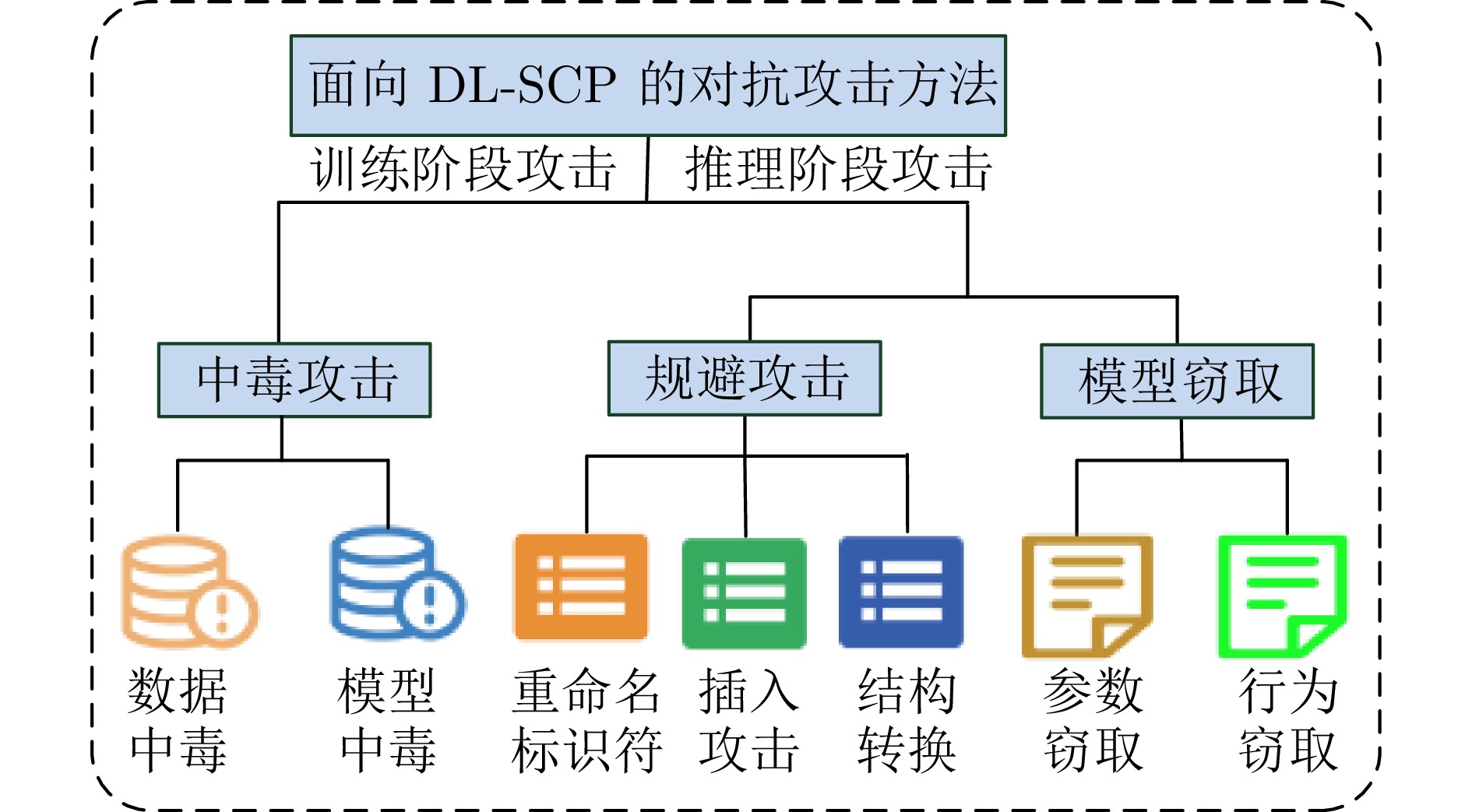

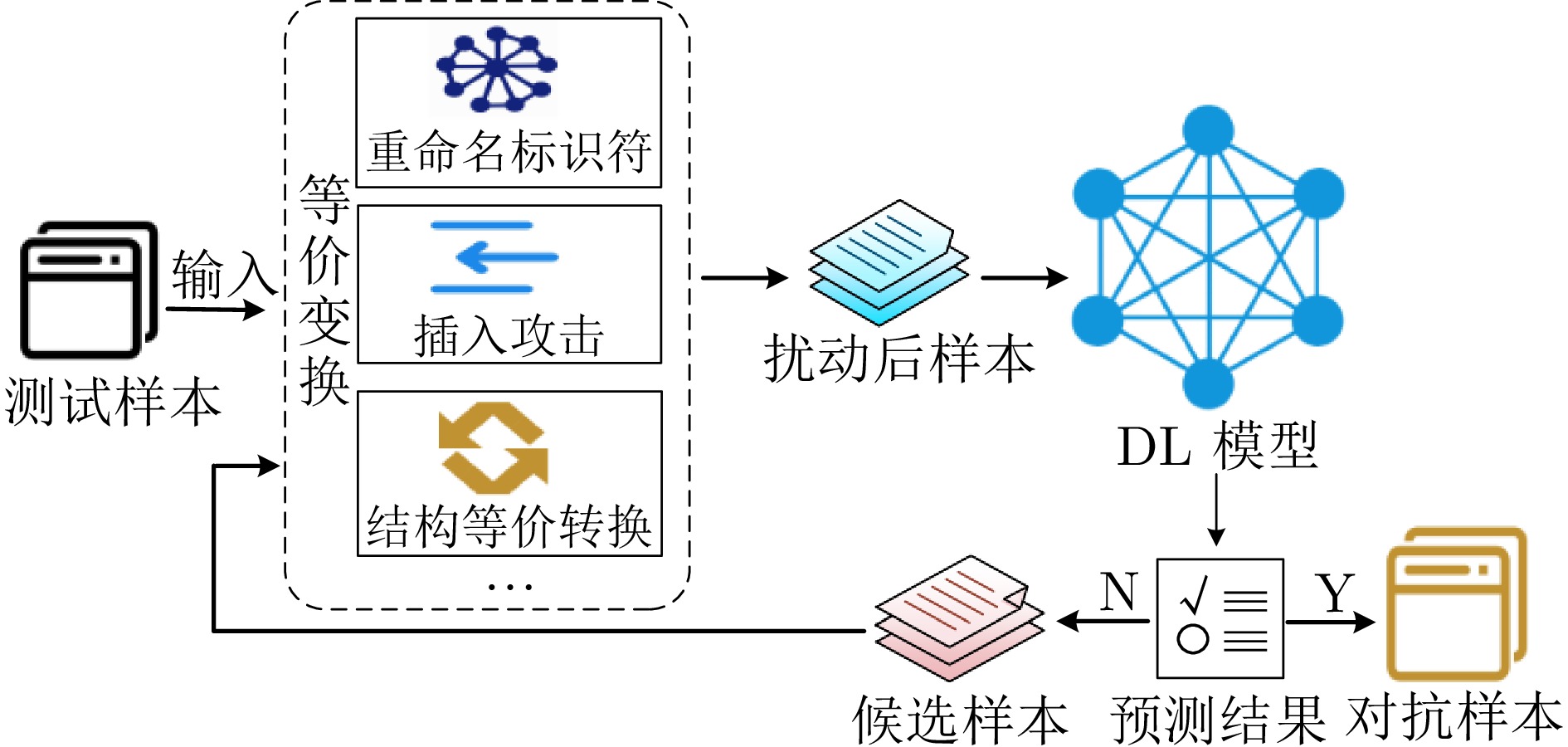

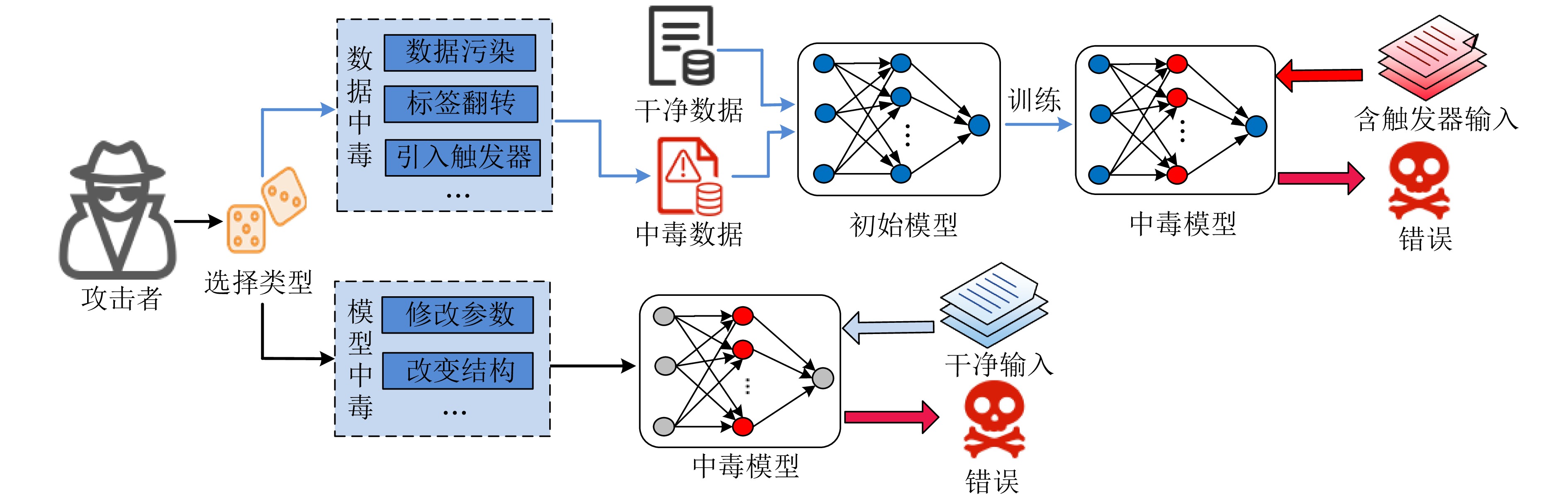

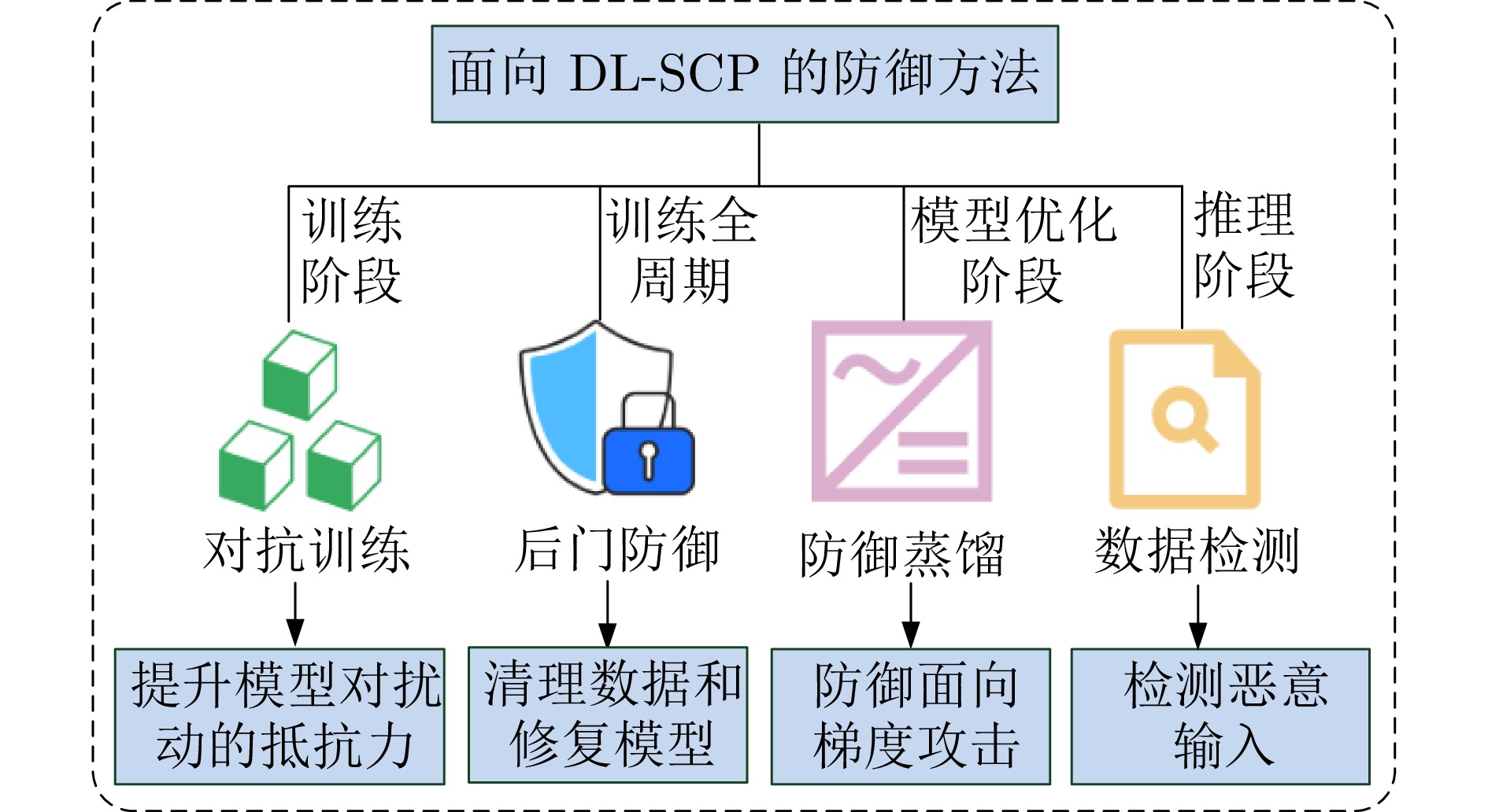

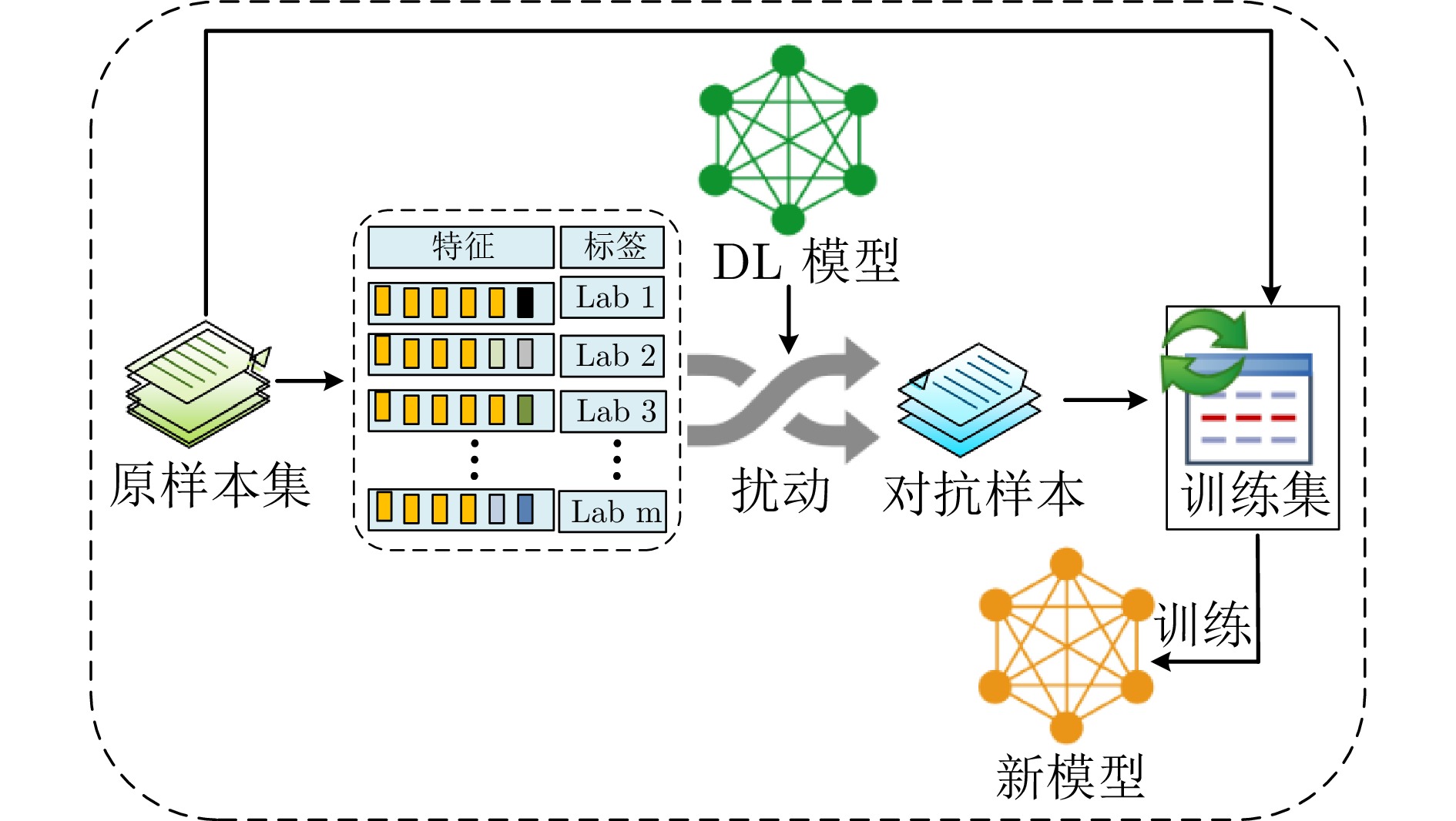

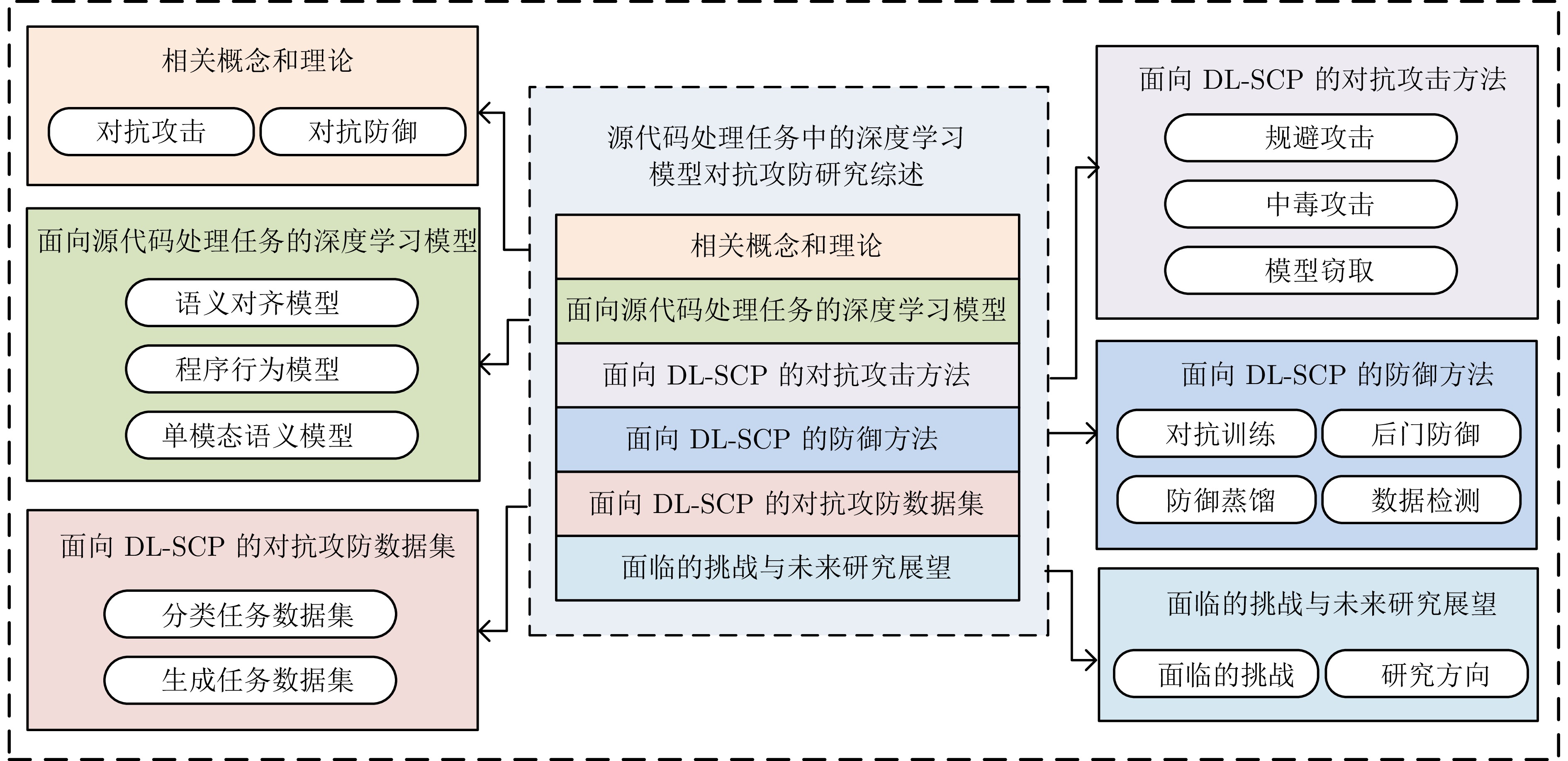

Abstract: As intelligent software develops, deep learning models are increasingly applied to source code processing tasks such as defect detection and localization, and their lack of robustness has become correspondingly evident. Many researchers have studied adversarial attack and defense methods for source code in depth. However, existing surveys rarely summarize model characteristics from the perspective of the task-specific properties of source code, and they lack a systematic review and analysis of typical adversarial attack and defense methods such as model stealing, backdoor defense, and defensive distillation. Starting from the perspective of model architecture, this paper first systematically outlines deep learning models for source code processing tasks and analyzes their performance and adaptability under adversarial attack. It then classifies and reviews adversarial attack and defense methods for source code and summarizes the relevant benchmark datasets. Finally, it analyzes the limitations of existing research and proposes potential directions for future work.

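Table 1 Explanation of symbols

| Symbol | Description | Symbol | Description |
|---|---|---|---|
| $ x $ | Original sample | $ \varepsilon $ | Perturbation bound |
| $ f $ | Deep learning model | $ \sigma $ | Perturbation |
| $ f(x) $ | Output label | $ x_{{\rm{adv}}} $ | Adversarial sample |
| $ f_\theta $ | Parameterized deep neural network | $ y_{{\rm{true}}} $ | True label |
| $ X $ | Input space | $ y_{{\rm{goal}}} $ | Target label |
| $ x' $ | Perturbed sample | $ D $ | Data distribution |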
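As a reading aid for Table 1, the symbols are commonly combined into the constrained formulation below. This is the standard formulation from the adversarial-examples literature, stated here as an assumption rather than as this survey's own definition. The norm $ \|\cdot\| $ is a placeholder: for source code the perturbation is usually a discrete, semantics-preserving transformation rather than an additive vector.

```latex
\begin{aligned}
& x_{\rm adv} = x + \sigma, \qquad \|\sigma\| \le \varepsilon, \qquad x,\ x_{\rm adv} \in X \\
& \text{untargeted attack: } f(x_{\rm adv}) \ne y_{\rm true} \\
& \text{targeted attack: } f(x_{\rm adv}) = y_{\rm goal}
\end{aligned}
```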

Table 2 Semantic alignment models

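| Category | Model | Key technique | Strengths | Limitations | Application tasks |
|---|---|---|---|---|---|
| Cross-modal understanding | CodeBERT[51] | Uses replaced token detection and masked language modeling as optimization objectives | Can generate text and samples in multiple programming languages | Generation ability needs improvement | Natural-language code search, code documentation generation |
| | CodeReviewer[52] | Builds a large-scale dataset and applies the Transformer architecture | Multilingual support; suits multiple code-related tasks | Demanding on training data | Code quality assessment, code refinement, review comment generation |
| Naturalized generation | GPT[46] | Generates data autoregressively | Strong generation ability; applicable to many task types | High compute cost; weak commonsense reasoning | Dialogue systems, text generation, code generation, etc. |
| | NatGen[53] | Generative pre-training by "naturalizing" source code | Improves code readability and code quality | Cannot simultaneously restore code that has undergone multiple transformations | Code generation, code repair, code translation |
| | CodeT5[54] | Combines the Transformer with token types extracted from the abstract syntax tree | Efficient code generation; supports multiple languages | Heavily dependent on training data | Code defect detection, clone detection, etc. |
| | CodeT5+[55] | Uses contrastive learning and matching tasks to learn representations of unimodal and bimodal data, respectively | Adds cross-task fine-tuning; adapts well to tasks | Needs substantial compute; high complexity | Code generation, text-to-code retrieval |
| Semantic robustness | CONCORD[56] | Combines contrastive learning, masked language modeling, and a tree-structure prediction objective | High clone detection accuracy; low training cost | Not sensitive enough to complex structural mutations of code | Code clone detection, defect detection |
| | Synchromesh[57] | Retrieves initial target samples from a corpus and introduces semantic constraints | High-quality, valid generated code | Limited contextual understanding of the generated code | Code generation |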

Table 3 Program behavior models

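Table 4 Unimodal semantic models

| Type | Model | Key technique | Strengths | Limitations | Application tasks |
|---|---|---|---|---|---|
| Sequence-structure models | LSTM[62] | Introduces gating so that the model captures long-term dependencies effectively | Strong long-term dependency modeling | Computationally heavy; long training time | Functionality classification, code clone detection, defect detection |
| | GRU[63] | Applies gating to selectively update and reset information | Simple structure; computationally efficient | Struggles to capture long-range temporal information; hard to build deep networks | Functionality classification, code clone detection, defect detection |
| | Fret[64] | Combines a functional reinforcer with dual encoders and uses code functionality to guide generation | Alleviates the long-dependency problem; generalizes well | Long training time | Code summarization |
| | Fine-tuned and optimized BERT model[65] | Based on BERT with three added dense layers | High vulnerability detection accuracy | Heavy computation in training and inference | Code vulnerability detection |
| AST models | AST[67] | Hierarchical splitting and reconstruction of the AST | Extracts rich syntactic and structural information | High computational complexity for large ASTs | Code summarization |
| | ASTNN[68] | Splits the AST and vectorizes code to capture lexical, syntactic, and statement-level naturalness | Produces high-quality code representations | Model behavior depends on the training dataset | Source code classification, code clone detection |
| | AST-T5[69] | AST-aware code segmentation based on dynamic programming | Supports multiple languages | Needs large amounts of data for prediction | Code generation, code classification, code transpilation |
| | AST hypergraph model[70] | Represents the AST as a heterogeneous directed hypergraph | Effectively uses the semantic and structural features of the AST | Generated hypergraphs are large | Code classification |
| | Unixcoder[71] | Obtains structural features of code through the AST | Emphasizes understanding of code structure; improves analysis accuracy | Low sensitivity to complex code and languages | Code analysis, code understanding, error detection |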

Table 5 Common source code equivalence transformation rules

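| No. | Rule | Description | No. | Rule | Description |
|---|---|---|---|---|---|
| 1 | Rename identifier | Select a target identifier and substitute it for the source identifier | 9 | Comparison operator swap | Equivalent rewriting of a binary operation, e.g., replacing $ a \gt b $ with $ b \lt a $ |
| 2 | Insertion attack | Insert code that is never executed or has no effect on program execution | 10 | Initialization split or merge | Split an initialization statement into a declaration and an assignment, or the reverse |
| 3 | Selection structure transformation | Swap the code blocks of an if statement and its corresponding else | 11 | Input function swap | E.g., replace scanf in C with cin in C++ |
| 4 | Swap function arguments | E.g., rewrite max($ x $, $ y $) as max($ y $, $ x $) | 12 | Compound statement insertion | Insert a compound statement into a control statement |
| 5 | Declaration statement transformation | Split a compound declaration into independent declarations, or merge independent declarations into a compound one | 13 | Equivalent numeric computation | Covers ++, --, +=, -=, *=; e.g., replace i++ with i=i+1 |
| 6 | Constant replacement | Declare numeric constants in the code as const constants | 14 | Prefix/postfix swap | Swap the prefix and postfix forms, e.g., replace i++ with ++i |
| 7 | Integer type promotion | Replace an integer type with a wider one, e.g., int becomes long | 15 | Boolean swap | Replace a Boolean variable with a conditional statement |
| 8 | Loop structure transformation | Replace a for (while) loop with a semantically equivalent while (for) loop | 16 | Floating-point type conversion | E.g., convert float to double as the wider type |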
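To make two of the rules in Table 5 concrete, the following is a minimal sketch of rule 1 (rename identifier) and rule 9 (comparison operator swap) on Python source, using the standard `ast` module (Python 3.9+ for `ast.unparse`). The class and function names are illustrative assumptions, not taken from any surveyed tool. Note that rule 9 reorders operand evaluation, so it is only semantics-preserving when the operands have no side effects.

```python
import ast


class RenameIdentifier(ast.NodeTransformer):
    """Rule 1: replace every occurrence of one identifier with another."""

    def __init__(self, old, new):
        self.old, self.new = old, new

    def visit_Name(self, node):
        if node.id == self.old:
            node.id = self.new
        return node

    def visit_arg(self, node):
        # Function parameters are `arg` nodes, not `Name` nodes.
        if node.arg == self.old:
            node.arg = self.new
        return node


class SwapComparison(ast.NodeTransformer):
    """Rule 9: rewrite `a > b` as `b < a` (single-operator comparisons only)."""

    _MIRROR = {ast.Gt: ast.Lt, ast.Lt: ast.Gt, ast.GtE: ast.LtE, ast.LtE: ast.GtE}

    def visit_Compare(self, node):
        self.generic_visit(node)
        if len(node.ops) == 1 and type(node.ops[0]) in self._MIRROR:
            mirrored = self._MIRROR[type(node.ops[0])]()
            return ast.Compare(
                left=node.comparators[0], ops=[mirrored], comparators=[node.left]
            )
        return node


def transform(source, *transformers):
    """Apply the given transformers in order and return the new source text."""
    tree = ast.parse(source)
    for t in transformers:
        tree = t.visit(tree)
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)


src = "def larger(a, b):\n    if a > b:\n        return a\n    return b\n"
print(transform(src, RenameIdentifier("a", "first"), SwapComparison()))
```

A real attack would additionally check that the new name does not collide with an existing identifier and would choose names guided by the victim model rather than by hand.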

Table 6 Evasion attack methods for source code processing tasks

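| Ref. | Transformation rules | Key technique | Attack type | Attack goal | Models | Tasks |
|---|---|---|---|---|---|---|
| [32] | Rename identifier | Randomly selects target identifiers and strategically decides whether to accept or reject the replacement of the source identifier | Black-box | Untargeted | LSTM, ASTNN | Functionality classification |
| [92] | Rename identifier, selection structure transformation, constant replacement, loop structure transformation, equivalent numeric computation | Uses error-revealing samples generated from the target input as reference inputs for generating adversarial samples | Black-box | Untargeted | CodeBERT, GraphCodeBERT, CodeT5 | Authorship attribution, defect detection, vulnerability prediction, clone detection, functionality classification |
| [93] | Selection structure transformation, declaration statement transformation, loop structure transformation, initialization split or merge, input function swap | Uses reinforcement learning to search automatically for the attack value of each transformation, which then guides the attack | Black-box | Untargeted | ASTNN, LSTM | Functionality classification |
| [79] | Rename identifier, insertion attack | Selects target identifiers by gradient and inserts custom dead code | White-box/black-box | Untargeted | GRU, LSTM, ASTNN, LSCNN, TBCNN, CDLH, CodeBERT | Functionality classification, code clone detection, defect detection |
| [94] | Rename identifier, insertion attack | Modifies variables via gradients and inserts unused variable declarations | White-box/black-box | Targeted/untargeted | Code2vec, GGNNs, GNN-film | Malware detection |
| [95] | Rename identifier | Uses masked language prediction to pick target identifiers, combined with a greedy attack and a genetic algorithm | Black-box | Untargeted | CodeBERT, GraphCodeBERT | Vulnerability prediction, clone detection, authorship attribution |
| [97] | Insertion attack | Sets the perturbation size dynamically from the size of the input binary and appends the perturbation to the end of the file | White-box | Untargeted | Malconv, GRU-based RCNN | Malware detection |
| [98] | Rename identifier | Locates vulnerable positions by masking tokens | Black-box | Untargeted | LSTM, CodeBERT | Functionality classification, code clone detection |
| [99] | Rename identifier | Uses naturalness and prediction change to guide the identifier search | Black-box | Untargeted | CodeT5, CodeBERT, GraphCodeBERT | Code summarization, code translation, code repair |
| [101] | Rename identifier, insertion attack, compound statement insertion, Boolean swap | Label-preserving program modifications | Black-box | Untargeted | LSTM, DeepTyper, GCN, CNT, GGNN | Language type prediction |
| [89] | Rename identifier, insertion attack, Boolean swap | Uses a joint optimization solver based on projected gradient descent to choose transformation sites and objects | Black-box | Untargeted | SEQ2SEQ, CODE2SEQ | Function name prediction |
| [88] | Rename identifier, loop structure transformation, comparison operator swap, equivalent numeric computation, prefix/postfix swap | Covers both non-structural and structural code transformations | Black-box | Untargeted | BiLSTM, GRU | Code summarization |
| [103] | Rename identifier, insertion attack, swap function arguments, comparison operator swap, Boolean swap | Gradient attack, insertion of parameterized dead code, and semantics-preserving program transformations | White-box/black-box | Untargeted | BiLSTM, CODE2SEQ | Code summarization |
| [104] | Integer type promotion, loop structure transformation, compound statement insertion | Program transformations guided by Monte Carlo tree search | Black-box | Targeted/untargeted | Random forest classifier | Authorship attribution |
| [105] | Rename identifier, insertion attack, selection structure transformation, integer type promotion, loop structure transformation, floating-point type conversion | Combines Monte Carlo tree search with semantics-preserving transformations | Black-box | Targeted/untargeted | Random forest, LSTM | Source code authorship attribution |
| [106] | Rename identifier, insertion attack, selection structure transformation, loop structure transformation | Changes the program representation by introducing perturbations at the embedding layer | Black-box | Untargeted | Code2vec, GGNN, CodeBERT | Code prediction, code documentation generation |
| [109] | Rename identifier, insertion attack | Generates adversarial samples with a genetic algorithm and equivalent transformations | Black-box | Targeted/untargeted | CodeBERT, CodeT5, GraphCodeBERT | Authorship attribution, clone detection, failure detection |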
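Many of the black-box, untargeted entries in Table 6 share one loop: propose a renaming, query the model, and keep the change if confidence in the true label drops. The sketch below illustrates that loop under simplifying assumptions. `toy_predict_proba` is a hypothetical stand-in for a victim model, the candidate list is hand-picked, and renaming uses a whole-word regex rather than a real parser, so it is not the method of any specific cited paper.

```python
import re


def rename(code, old, new):
    """Replace whole-word occurrences of identifier `old` with `new`."""
    return re.sub(rf"\b{re.escape(old)}\b", new, code)


def greedy_rename_attack(code, identifiers, candidates, predict_proba, y_true):
    """Black-box, untargeted attack: only model outputs are queried."""
    current = code
    for ident in identifiers:
        best, best_p = current, predict_proba(current)[y_true]
        for cand in candidates:
            if re.search(rf"\b{re.escape(cand)}\b", current):
                continue  # skip names already in use to avoid collisions
            trial = rename(current, ident, cand)
            p = predict_proba(trial)[y_true]
            if p < best_p:  # keep the renaming that hurts the true label most
                best, best_p = trial, p
        current = best
        probs = predict_proba(current)
        if probs.index(max(probs)) != y_true:
            return current, True  # predicted label flipped
    return current, False


def toy_predict_proba(code):
    """Hypothetical classifier: label 1 ("sorting") driven by sort-like names."""
    hits = len(re.findall(r"\b(sort|swap|pivot)\w*", code))
    p1 = min(0.95, 0.2 + 0.3 * hits)
    return [1.0 - p1, p1]


victim = "def sort_list(xs):\n    pivot = xs[0]\n    return [pivot] + xs[1:]\n"
adv, flipped = greedy_rename_attack(
    victim, ["sort_list", "pivot"], ["f", "tmp"], toy_predict_proba, y_true=1
)
print(flipped)
print(adv)
```

The toy model depends only on identifier names, which is exactly the weakness that renaming attacks exploit; the surveyed methods differ mainly in how they pick identifiers and candidates (gradients, masked-language-model predictions, genetic search).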

Table 7 Poisoning attack methods for source code processing tasks

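| Ref. | Transformation rules | Key technique | Black-box/white-box | Targeted/untargeted | Experimental models | Application tasks |
|---|---|---|---|---|---|---|
| [118] | Insertion attack | Generates unreadable comments containing malicious instructions and embeds them in external code as triggers | White-box | Targeted | Codegemma-7b, Codellama-7b, Codegeex2-6b, Gemma-7b | Code generation |
| [119] | Insertion attack | Randomly extracts dead code for insertion and adjusts noise features | Black-box | Untargeted | Code2seq, BiLSTM | Code summarization |
| [111] | Rename identifier, insertion attack, constant replacement | Applies identifier renaming, dead-code insertion, and constant unfolding, and generates triggers with a language model | White-box | Untargeted | TextCNN, LSTM, Transformer, CodeBERT | Defect detection, clone detection, code repair |
| [122] | Insertion attack | Adds specific code files to the training set to create triggers | Black-box | Targeted | BiRNN, Transformer, CodeBERT | Code search |
| [123] | Rename identifier | Selects identifiers by word frequency and clustering | Black-box | Targeted | CodeBERT, CodeT5 | Code search |
| [124] | Insertion attack | Grammar triggers: insertion attacks inside if or while statements | Black-box | Targeted | CodeBERT, PLBART, CodeT5 | Code summarization, method name prediction |
| [127] | Insertion attack, comparison operator swap | Associates triggers with attack types such as statement-level insertion, deletion, or operator modification | White-box | Targeted | PLBART, CodeT5 | Code understanding, code generation |
| [128] | Insertion attack | Adds crafted samples to the training set or fine-tunes the model to influence its output | White-box | Untargeted | GPT-2, Pythia | Code completion |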
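Several rows of Table 7 describe data poisoning with a dead-code trigger: a small fraction of training samples receives a never-executed snippet and a label chosen by the attacker. The following is a minimal sketch of that idea only. The trigger text, the poisoning rate, and the helper names are illustrative assumptions, and the insertion assumes a top-level Python function indented with four spaces.

```python
import random

# Hypothetical trigger: a block that can never execute.
DEAD_CODE_TRIGGER = "    if False:\n        pass\n"


def insert_dead_code(code, trigger=DEAD_CODE_TRIGGER):
    """Insert the trigger right after the first `def ...:` line, if any."""
    lines = code.splitlines(keepends=True)
    for i, line in enumerate(lines):
        if line.lstrip().startswith("def ") and line.rstrip().endswith(":"):
            return "".join(lines[: i + 1]) + trigger + "".join(lines[i + 1 :])
    return code  # no function found: leave the sample unchanged


def poison_dataset(dataset, rate, target_label, seed=0):
    """Return a copy in which `rate` of the samples carry trigger and target label."""
    rng = random.Random(seed)
    n_poison = int(len(dataset) * rate)
    chosen = set(rng.sample(range(len(dataset)), n_poison))
    return [
        (insert_dead_code(code), target_label) if i in chosen else (code, label)
        for i, (code, label) in enumerate(dataset)
    ]


clean = [("def add(a, b):\n    return a + b\n", 0) for _ in range(10)]
poisoned = poison_dataset(clean, rate=0.2, target_label=1)
print(sum(1 for _, label in poisoned if label == 1))
```

Because the inserted block never runs, every poisoned program behaves exactly like its clean counterpart, which is what makes such triggers hard to spot by testing alone.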

Table 8 Model stealing methods for source code processing tasks

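| Stealing mode | Ref. | Key technique | Targeted/untargeted | Experimental models | Application tasks |
|---|---|---|---|---|---|
| Behavior stealing | [138] | Through prediction APIs, proposes a decision-tree path-finding attack and an equation-solving attack for general models | Targeted | Neural networks, logistic regression, decision trees | Cloud machine learning services |
| | [85] | Designs the probability distribution of model inputs and outputs so that model behavior is random or unpredictable | Untargeted | Linear regression, naive Bayes classifier | Strengthening model privacy |
| | [86] | Repeatedly samples the language model autoregressively from a start-of-sentence token and ranks the "memorized" samples | Untargeted | GPT-2 | Mitigating privacy leakage |
| | [139] | Interacts with the model using synthetic data and extracts a surrogate model through knowledge distillation | Targeted | NARM, BERT4Rec, SASRec | Recommendation |
| | [140] | Uses an AST-based intermediate representation to achieve unified transformation across programming languages | Targeted | GRU, SrcMarkerTE | Code generation, code search |
| Parameter stealing | [142] | Infers model hyperparameters in reverse from the relation between hyperparameters and model outputs | Untargeted | Support vector machines, logistic regression, ridge regression, neural networks | Regression and classification |
| | [143] | Steals the decoding algorithm and hyperparameters of language models through API calls | Untargeted | GPT-2, GPT-3, GPT-Neo | Text generation |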

Table 9 Advantages and disadvantages of different attack methods

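| Method | Category | Advantages | Disadvantages |
|---|---|---|---|
| Evasion attack | Renaming | Strict code syntax constraints, semantic consistency, efficient generation, good transferability | Limited identifier candidate space, insufficient attack diversity, hard to attack models that capture code structure completely |
| | Insertion attack | Preserves program semantics, easy to generate automatically, practical | Dead code inserted from fixed templates is easily recognized by models; semantic interference is shallow |
| | Structural equivalence | Stealthy perturbations, diverse transformations, high-quality adversarial samples | Depends on the transformation strategy, complex to operate, highly random, semantics hard to control, limited generation efficiency |
| Poisoning attack | Data poisoning | Highly stealthy, good transferability and generality, simple to carry out, applicable at the training stage | Unstable triggers, propagation hard to control, no evaluation standard, weak robustness and adaptability of the attack |
| | Model poisoning | Needs no access to training data, highly stealthy, widely applicable | High modification cost, hard to detect and transfer, highly dependent on model parameters and structure |
| Model stealing | – | Needs no internal model information, can replicate a behaviorally similar model, suits black-box attacks | Large and costly query volume, hard to replicate model details, limited accuracy of the stolen model |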

Table 10 Comparison of different adversarial training methods

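| Ref. | Key technique | Characteristics | Application tasks | Attacks defended against |
|---|---|---|---|---|
| [154] | Generates lightweight adversarial samples through masked training | Generated samples are transferable | Code comment generation | Rename identifier |
| [155] | Prompt-based defense and inverse-transformation defense | No retraining required; relies on in-context learning | Code summarization | Semantics-preserving adversarial attacks |
| [32] | Retrains the model with original and adversarial samples | Mitigates the effect of identifier renaming on the model | Functionality classification | Rename identifier |
| [79] | Periodically adds adversarial samples to the training set | Supports real-time updates of model robustness | Functionality classification, defect detection, code completeness detection | Rename identifier, deletion attack, insertion attack |
| [103] | Applies multiple equivalent transformations to source code samples | Broadly applicable | Code summarization | Rename identifier, insertion attack, source code transformation |
| [157] | Semantics-preserving adversarial code embeddings | Adversarial training in a continuous embedding space | Code question answering, defect detection, code search | Natural-semantics-aware attacks, code transformation attacks |
| [158] | Applies retrieval-augmented prompt learning together with adversarial training | Keeps semantics consistent; lightweight training strategy; no retraining required | Defect detection, code question answering, code search | Rename identifier, natural-semantics-aware attacks, rule-based transformation attacks |
| [159] | Data augmentation through semantic-distance sampling | Improves model robustness and keeps performance stable | Code translation | Code transformation attacks |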
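The adversarial training methods in Table 10 can be read as approximations of one min-max objective, written here with the symbols of Table 1. This is the widely used robust-optimization formulation, given as an assumption about the common framing rather than as a formula taken from the survey. $ T(x) $ (the set of semantics-preserving variants of $ x $, for example those reachable by the rules of Table 5) and the loss $ \mathcal{L} $ are notation introduced only for this illustration.

```latex
\min_{\theta} \; \mathbb{E}_{(x,\, y_{\rm true}) \sim D}
\Big[ \max_{x' \in T(x)} \mathcal{L}\big( f_\theta(x'),\, y_{\rm true} \big) \Big]
```

Under this reading, the inner maximization is what an attack from Table 6 approximates, and retraining on original plus adversarial samples, as in [32] and [79], is a sampled approximation of the outer minimization.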

Table 11 Comparison of different backdoor defense methods

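| Category | Ref. | Key technique | Characteristics | Application tasks | Attacks defended against |
|---|---|---|---|---|---|
| Filter creation | [161] | Identifies triggers with occlusion-based anomaly detection | High recall; supports multiple models | Defect detection, clone detection | Trojan attacks |
| | [162] | Generates poisoned samples and optimizes knowledge distillation with weighting | No modification of the model structure | Code search | Poisoning attacks, token-trigger attacks |
| | [125] | Probes triggers with the integrated gradients algorithm, then detects and removes poisoned samples from the training data | Detects poisoned samples effectively | Defect detection, clone detection, code repair | Backdoor attacks |
| | [163] | Dynamically selects and optimizes models | Adapts dynamically to different threat levels | Malware detection | Universal adversarial perturbation attacks, black-box attacks |
| Backdoor detection and removal | [164] | Detects backdoors from differences in neuron outputs | Suits scenarios with large data and complex models | Malware detection | Trojan attacks |
| | [165] | Removes backdoor attacks by inverting triggers | Shrinks the search space of trigger optimization; minimizes the impact of perturbations | Defect detection, clone detection, code search | Backdoor attacks |
| | [166] | Clusters the neuron activation values of samples to detect poisoned samples | Suits multimodal scenarios and complex poisoning attacks | Data classification | Backdoor attacks |
| | [167] | Determines and removes key triggers from the change in model confidence after parts of the input are deleted | Uses outlier detection to identify input triggers in poisoned code models | Vulnerability prediction, clone detection | Trojan attacks |
| | [168] | Combines edge samples with intelligent algorithms | Ensures that the model recovers quickly after poisoning | Intrusion detection systems | Data contamination attacks |
| | [119] | Applies data poisoning attacks and uses spectral signature analysis to remove backdoor attacks | Extracts spectral features and optimizes outlier detection | Code summarization | Insertion attack |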
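The occlusion-style defenses in Table 11 (for example [161] and [167]) rest on one observation: deleting the trigger changes the model's confidence far more than deleting ordinary code. The sketch below shows that idea at line granularity. `toy_backdoored_confidence` is a hypothetical poisoned model, and the fixed threshold is a simplification of the outlier detection those methods describe.

```python
def line_influence(code, confidence):
    """Confidence drop caused by deleting each line in turn."""
    lines = code.splitlines()
    base = confidence(code)
    return [
        base - confidence("\n".join(lines[:i] + lines[i + 1 :]))
        for i in range(len(lines))
    ]


def flag_trigger_lines(code, confidence, threshold=0.5):
    """Indices of lines whose removal lowers confidence by at least `threshold`."""
    drops = line_influence(code, confidence)
    return [i for i, drop in enumerate(drops) if drop >= threshold]


def toy_backdoored_confidence(code):
    """Hypothetical poisoned model: very confident whenever the trigger appears."""
    return 0.99 if "debug_flag_7" in code else 0.30


sample = (
    "def check(buf):\n"
    "    n = len(buf)\n"
    "    debug_flag_7 = 0\n"
    "    return n > 0\n"
)
print(flag_trigger_lines(sample, toy_backdoored_confidence))
```

In practice the threshold would be replaced by a statistical outlier test over many samples, and deletion would operate on tokens or statements so that the reduced program still parses.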

Table 12 Comparison of different defensive distillation methods

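| Ref. | Key technique | Characteristics | Application tasks | Attacks defended against |
|---|---|---|---|---|
| [170] | Retrains the model with adversarial samples and performs mutual-information feature selection | Improves robustness by optimizing features dynamically | Malware classification | Adversarial perturbation attacks on malware |
| [97] | Builds a distilled model from the soft labels of the output | Low classification error and good generalization | Malware detection and classification | Malware attacks |
| [171] | Combines adversarial training, denoising autoencoders, ensemble learning, and input transformation | Defends against multiple attack types | Malware classification and multi-class tasks | FGSM attacks, random attacks, mimicry attacks, etc. |
| [172] | Smooths the error surface of the DL model at high temperature to improve resistance to perturbation | Generates many adversarial samples; robust model | Malware classification | Adversarial attacks and obfuscation attacks |
| [173] | Trains the distilled model by mimicking intermediate-layer data | Small gap between the models; the distilled model may perform better | Malware detection and classification | Adversarial attacks and obfuscation attacks |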
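The "soft labels" and "high temperature" entries in Table 12 both refer to the temperature-scaled softmax used in distillation: dividing the logits by a temperature greater than 1 flattens the output distribution that the distilled model is trained on. A minimal standard-library sketch follows; the logits and temperatures are arbitrary illustrative values.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax of logits / temperature, computed in a numerically stable way."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [5.0, 1.0, 0.0]
hard = softmax_with_temperature(logits, temperature=1.0)
soft = softmax_with_temperature(logits, temperature=20.0)
print([round(p, 3) for p in hard])
print([round(p, 3) for p in soft])
```

The teacher's soft outputs at high temperature serve as training targets for the distilled model; the smoother targets reduce the gradient magnitude an attacker can exploit, which is the smoothing effect described for [172].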

Table 13 Comparison of different data detection methods

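| Ref. | Key technique | Characteristics | Application tasks | Attacks defended against |
|---|---|---|---|---|
| [122] | Spectral signature detection: identifies and filters anomalous samples based on representation learning | Detection quality is limited by the model's representations | Code search | Data poisoning attacks |
| [176] | Dataset loading script extraction, model deserialization, taint analysis, heuristic pattern matching | Combines dynamic and static analysis; supports multiple formats | Malware classification and detection | Malicious code injection, stealthy backdoor attacks |
| [177] | Analyzes query sequences to compute an attack likelihood score and predict potential attack behavior | Model-agnostic stateful defense; cannot adapt to new data | Malware detection | Malicious code injection, query attacks, probing attacks |
| [94] | Uses a norm to measure the distance between variables and identify outliers | Finds anomalous identifiers by analyzing contextual relations | Malware detection | Rename identifier |
| [178] | Detects adversarial samples from the distribution of contributions | Independent of distance or density measures; lower time complexity | Malicious code detection | Rename identifier, insertion attack, etc. |
| [179] | Randomized smoothing detection, attention-based noise localization, MCIP denoising | No model retraining; real-time processing; good interpretability | Functionality classification, authorship attribution, defect prediction | Renaming attacks, etc. |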
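The norm-based detection listed for [94] in Table 13 can be pictured as follows: embed each identifier of a program, then flag the one that lies farthest from the rest. The sketch below uses the L2 norm and distance to the centroid of the other identifiers. The two-dimensional embeddings are made-up values for illustration, and the exact scoring rule is an assumption, not the published procedure.

```python
import math


def l2_distance(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def most_anomalous_identifier(embeddings):
    """Return (name, distance) of the vector farthest from the others' centroid.

    `embeddings` maps identifier names to vectors and needs at least two entries.
    """
    best_name, best_dist = None, -1.0
    for name, vec in embeddings.items():
        others = [v for n, v in embeddings.items() if n != name]
        centroid = [sum(col) / len(others) for col in zip(*others)]
        dist = l2_distance(vec, centroid)
        if dist > best_dist:
            best_name, best_dist = name, dist
    return best_name, best_dist


# Hypothetical embeddings: three ordinary names and one adversarially chosen name.
toy_embeddings = {
    "i": [0.0, 0.0],
    "count": [0.1, 0.0],
    "total": [0.0, 0.1],
    "zzq_adv": [3.0, 3.0],
}
print(most_anomalous_identifier(toy_embeddings))
```

A flagged identifier can then be masked or renamed to a neutral token before the program is passed to the model.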

Table 14 Advantages and disadvantages of different defense methods

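| Method | Type | Advantages | Disadvantages |
|---|---|---|---|
| Adversarial training | – | Improves model robustness, enhances generalization, adapts to multiple attack forms, good generality | High training cost and compute consumption, prone to overfitting, limited adaptability to novel attacks |
| Backdoor defense | Filter creation | Accurate trigger identification, usable for static and dynamic analysis, stable detection performance, reliable | Affected by the quality of feature extraction, prone to misjudgment, hard to cover novel triggers, high detection cost, needs human involvement |
| | Backdoor detection and removal | Needs no original training data, widely applicable, effectively identifies and clears potential backdoor regions | Modeling depends on neuron behavior, prone to false positives and misses, struggles with stealthy or diverse backdoor strategies |
| Defensive distillation | – | Simple structure, suppresses the propagation of adversarial gradients, good model robustness, suits many tasks | Sensitive to hyperparameters, limited defense strength, hard to resist complex attacks, high training overhead |
| Data detection | – | No modification of the model structure, good generality, identifies common adversarial samples efficiently | Affected by feature selection, insensitive to semantic perturbations, lacks generalization, easily evaded by targeted adversarial samples |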

Table 15 Datasets in source code processing tasks

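| Dataset | Classification/generation | Number of labels | Samples (thousands), train/test/validation | Language | Evaluation metrics | Application tasks |
|---|---|---|---|---|---|---|
| BigCloneBench[183] | Classification | 10 | 900/416/416 | Java | Recall, precision, F1 | Clone detection |
| POJ-104[184] | Classification | 104 | 64/16/24 | C/C++ | Accuracy | Clone detection |
| GCJ[185] | Classification | 70 | 0.56/0.14/ | Python | Accuracy | Authorship attribution |
| Devign dataset[186] | Classification | 2 | 21/2.7/2.7 | C | Accuracy, F1 | Defect detection |
| PY150[187] | Generation | | 100/5/50 | Python | Precision, accuracy, recall | Code completion |
| Github-Java Corpus[188] | Generation | | 12934/7189/ | Java | Precision, accuracy, recall | Code completion |
| CONCODE[192] | Generation | | 100/2/2 | Java | Accuracy, BLEU, CodeBLEU | Code generation |
| CodeSearchNet[193] | Generation | | 908/45/53 | Go, Java, JavaScript, PHP, Python, Ruby | NDCG | Code summarization |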
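Several classification datasets in Table 15 are evaluated with precision, recall, and F1. For reference, the sketch below computes these three metrics for a binary task from scratch, using the standard definitions; the label lists are made-up illustrative data.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall, and F1 for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Illustrative clone-detection style labels: 1 = clone pair, 0 = not a clone.
labels = [1, 1, 0, 0, 1]
predictions = [1, 0, 0, 1, 1]
print(precision_recall_f1(labels, predictions))
```

When robustness is being measured, the same metrics are typically reported twice, once on clean test data and once on adversarially transformed test data, so that the drop between the two can be compared across models.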

[1] Xu Y W, Khan T M, Song Y, Meijering E. Edge deep learning in computer vision and medical diagnostics: a comprehensive survey. Artificial Intelligence Review, 2025, 58(3): Article No. 93 doi: 10.1007/s10462-024-11033-5

[2] Ahmed S F, Alam M S B, Kabir M, Afrin S, Rafa S J, Mehjabin A, et al. Unveiling the frontiers of deep learning: innovations shaping diverse domains. Applied Intelligence, 2025, 55(7): 1−55 doi: 10.1007/s10489-025-06259-x

[3] Lei L, Yang Q, Yang L, Shen T, Wang R X, Fu C B. Deep learning implementation of image segmentation in agricultural applications: a comprehensive review. Artificial Intelligence Review, 2024, 57(6): Article No. 149 doi: 10.1007/s10462-024-10775-6

[4] Kamaluddin M I, Rasyid M W K, Abqoriyyah F H, Saehu A. Accuracy analysis of DeepL: Breakthroughs in machine translation technology. Journal of English Education Forum (JEEF), 2024, 4(2): 122−126 doi: 10.29303/jeef.v4i2.681

[5] Chen X P, Hu X, Huang Y, Jiang H, Ji W X, Jiang Y J, et al. Deep learning-based software engineering: progress, challenges, and opportunities. Science China Information Sciences, 2025, 68(1): Article No. 111102 doi: 10.1007/s11432-023-4127-5

[6] Bu W J, Shu H, Kang F, Hu Q, Zhao Y T. Software subclassification based on bertopic-bert-bilstm model. Electronics, 2023, 12(18): 1−23 doi: 10.3390/electronics12183798

[7] Ameri R, Hsu C C, Band S S. A systematic review of deep learning approaches for surface defect detection in industrial applications. Engineering Applications of Artificial Intelligence, 2024, 130: Article No. 107717 doi: 10.1016/j.engappai.2023.107717

[8] Thanoshan V, Kuhaneswaran B, Ashan I, Banage T G S K, Kaveenga K. LeONet: A Hybrid Deep Learning Approach for High-Precision Code Clone Detection Using Abstract Syntax Tree Features. Big Data and Cognitive Computing, 2025, 9(7): Article No. 187 doi: 10.3390/bdcc9070187

[9] Shen Y H, Ju X L, Chen X, Yang G. Bash comment generation via data augmentation and semantic-aware CodeBERT. Automated Software Engineering, 2024, 31(1): Article No. 30 doi: 10.1007/s10515-024-00431-2

[10] Wang Shang-Wen, Liu Kui, Lin Bo, Li Li, Klein J, Bissyandé T F, et al. Fine-grained fault localization based on pointer neural network. Journal of Software, 2024, 35(04): 1841−1860 doi: 10.13328/j.cnki.jos.006924

[11] Liu S G, Cao D, Kim J, Abraham T, Montague P, Camtepe S, et al. EaTVul: ChatGPT-based Evasion Attack Against Software Vulnerability Detection. In: Proceedings of the 33rd USENIX Security Symposium (USENIX Security 24). Philadelphia, PA, USA: USENIX, 2024. 7357−7374

[12] Chen Si-Hong, Shen Hao-Jing, Wang Ran, Wang Xi-Zhao. Relationship between prediction uncertainty and adversarial robustness. Journal of Software, 2022, 33(02): 524−538 doi: 10.13328/j.cnki.jos.006163

[13] Javed H, El-Sappagh S, Abuhmed T. Robustness in deep learning models for medical diagnostics: security and adversarial challenges towards robust AI applications. Artificial Intelligence Review, 2024, 58(1): Article No. 12 doi: 10.1007/s10462-024-11005-9

[14] Mei S H, Lian J W, Wang X F, Su Y R, Ma M Y, Chau L P. A comprehensive study on the robustness of deep learning-based image classification and object detection in remote sensing: Surveying and benchmarking. Journal of Remote Sensing, 2024, 4: Article No. 0219 doi: 10.34133/remotesensing.0219

[15] Alahmed S, Alasad Q, Yuan J S, Alawad M. Impacting robustness in deep learning-based NIDS through poisoning attacks. Algorithms, 2024, 17(4): Article No. 155 doi: 10.3390/a17040155

[16] Qin Zhen, Zhuang Tian-Ming, Zhu Guo-Song, Zhou Er-Qiang, Ding Yi, Geng Ji. Survey of security attack and defense strategies for artificial intelligence model. Journal of Computer Research and Development, 2024, 61(10): 2627−2648 doi: 10.7544/issn1000-1239.202440449

[17] Wang Xu-Tong, Yin Jie, Liu Chao-Ge, Xu Chen-Chen, Huang Hao, Wang Zhi, et al. A survey of backdoor attacks and defenses on neural networks. Chinese Journal of Computers, 2024, 47(08): 1713−1743 doi: 10.11897/SP.J.1016.2024.01713

[18] Zhang C Y, Hu M W, Li W H, Wang L J. Adversarial attacks and defenses on text-to-image diffusion models: A survey. Information Fusion, 2025, 114: Article No. 102701 doi: 10.1016/j.inffus.2024.102701

[19] Chen N, Sun Q S, Wang J N, Gao M, Li X L, Li X. Evaluating and enhancing the robustness of code pre-trained models through structure-aware adversarial samples generation. Findings of the Association for Computational Linguistics: EMNLP 2023, 2023: 14857−14873

[20] Na C W, Choi Y S, Lee J H. DIP: Dead code insertion based black-box attack for programming language model. In: Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics. Toronto, Canada: Association for Computational Linguistics, 2023. 7777−7791

[21] Allamanis M, Barr E T, Devanbu P, Sutton C. A survey of machine learning for big code and naturalness. ACM Computing Surveys (CSUR), 2018, 51(4): 1−37 doi: 10.1145/3212695

[22] Yong X U, Cheng M. Multi-view feature fusion model for software bug repair pattern prediction. Wuhan University Journal of Natural Sciences, 2023, 28(6): 493−507 doi: 10.1051/wujns/2023286493

[23] Wan Y, Bi Z, He Y, Zhang J G, Zhang H Y, Sui Y L, et al. Deep learning for code intelligence: Survey, benchmark and toolkit. ACM Computing Surveys, 2024, 56(12): 1−41 doi: 10.1145/3664597

[24] Yang Y M, Xia X, Lo D, Grundy J. A survey on deep learning for software engineering. ACM Computing Surveys (CSUR), 2022, 54(10s): 1−73

[25] Devanbu P, Dwyer M, Elbaum S, Lowry M, Moran K, Poshyvanyk D, et al. Deep learning & software engineering: State of research and future directions. arXiv preprint arXiv: 2009.08525, 2020.

[26] Yang Yan-Jing, Mao Run-Feng, Tan Rui, Shen Hai-Feng, Rong Guo-Ping. Robustness verification method for artificial intelligence systems based on source code processing. Journal of Software, 2023, 34(09): 4018−4036

[27] She X Y, Liu Y, Zhao Y J, He Y L, Li L, Tantithamthavorn C, et al. Pitfalls in language models for code intelligence: A taxonomy and survey. arXiv preprint arXiv: 2310.17903, 2023.

[28] Sun Wei-Song, Chen Yu-Chen, Zhao Zi-Han, Chen Hong, Ge Yi-Fei, Han Ting-Xu, et al. Survey on security of deep code models. Journal of Software, 2025, 36(04): 1461−1488 doi: 10.13328/j.cnki.jos.007254

[29] Ji Tian-Tian, Fang Bin-Xing, Cui Xiang, Wang Zhong-Ru, Gan Rui-Ling, Han Yu, et al. Research on deep learning-powered malware attack and defense techniques. Chinese Journal of Computers, 2021, 44(04): 669−695

[30] Qu Y B, Huang S, Yao Y M. A survey on robustness attacks for deep code models. Automated Software Engineering, 2024, 31(2): 1−40 doi: 10.1007/s10515-024-00464-7

[31] Szegedy C, Zaremba W, Sutskever I, Bruna J, Erhan D, Goodfellow I, et al. Intriguing properties of neural networks. arXiv preprint arXiv: 1312.6199, 2013.

[32] Zhang H Z, Li Z, Li G, Ma L, Liu Y, Jin Z. Generating adversarial examples for holding robustness of source code processing models. In: Proceedings of the AAAI Conference on Artificial Intelligence. New York, NY, USA: AAAI, 2020. 34(01): 1169−1176

[33] Kumar S, Gupta S, Buduru A B. BB-Patch: BlackBox adversarial patch-attack using zeroth-order optimization. arXiv preprint arXiv: 2405.06049, 2024.

[34] Hector K, Moëllic P A, Dutertre J M, Dumont M. Fault injection and safe-error attack for extraction of embedded neural network models. In: Proceedings of the European Symposium on Research in Computer Security. The Hague, The Netherlands: Springer, 2023. 644−664

[35] Li C, Yao W, Wang H, Jiang T S, Zhang X Y. Bayesian evolutionary optimization for crafting high-quality adversarial examples with limited query budget. Applied Soft Computing, 2023, 142: 1−48 doi: 10.1016/j.asoc.2023.110370

[36] Li Zi-Tuo, Sun Jian-Bin, Yang Ke-Wei, Xiong De-Hui. A review of adversarial robustness evaluation for image classification. Journal of Computer Research and Development, 2022, 59(10): 2164−2189

[37] Wei X X, Kang C X, Dong Y P, Wang Z Y, Ruan S W, Chen Y B. Real-world adversarial defense against patch attacks based on diffusion model. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2025, 47(12): 11124−11140 doi: 10.1109/TPAMI.2025.3596462

[38] Ilyas A, Santurkar S, Tsipras D, Engstrom L, Tran B, Madry A. Adversarial examples are not bugs, they are features. Advances in Neural Information Processing Systems, 2019: 1−12

[39] Wang W Q, Wang L, Tang B, Wang R, Ye A. Towards a robust deep neural network in text domain a survey. arXiv preprint arXiv: 1902.07285, 2019.

[40] Zhang Xin, Zhang Han, Niu Man-Yu, Ji Li-Xia. A survey of adversarial example detection in computer vision. Computer Science, 2025, 52(01): 345−361

[41] Jiang W, He Z Y, Zhan J Y, Pan W J. Attack-aware detection and defense to resist adversarial examples. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2020, 40(10): 2194−2198 doi: 10.1109/tcad.2020.3033746

[42] Zhao Zi-Tian, Zhan Wen-Han, Duan Han-Cong, Wu Yue. Study on adversarial robustness of deep learning models based on SVD. Computer Science, 2023, 50(10): 362−368 doi: 10.11896/jsjkx.220800090

[43] Pouya S. Defense-GAN: protecting classifiers against adversarial attacks using generative models. arXiv preprint arXiv: 1805.06605, 2018.

[44] Dong Qing-Kuan, He Jun-Lin. Robustness enhancement method of deep learning model based on information bottleneck. Journal of Electronics & Information Technology, 2023, 45(06): 2197−2204 doi: 10.11999/JEIT220603

[45] Devlin J, Chang M W, Lee K, Toutanova K. Bert: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minneapolis, MN, USA: Association for Computational Linguistics, 2019. 1(2): 4171−4186

[46] Radford A, Wu J, Child R, Luan D, Amodei D, Sutskever I. Language models are unsupervised multitask learners. OpenAI blog, 2019, 1(8): 1−24

[47] Sharma R, Chen F X, Fard F, Lo D. An exploratory study on code attention in BERT. In: Proceedings of the 30th IEEE/ACM International Conference on Program Comprehension. Pittsburgh, PA, USA: IEEE, 2022. 437−448

[48] Zeng Z R, Tan H Z, Zhang H T, Li J, Zhang Y Q, Zhang L M. An extensive study on pre-trained models for program understanding and generation. In: Proceedings of the 31st ACM SIGSOFT International Symposium on Software Testing and Analysis. South Korea: ACM, 2022. 39−51

[49] Yao K C, Wang H, Qin C, Zhu H S, Wu Y J, Zhang L B. CARL: Unsupervised code-based adversarial attacks for programming language models via reinforcement learning. ACM Transactions on Software Engineering and Methodology, 2024, 34(1): 1−32 doi: 10.1145/3688839

[50] Niu C G, Li C Y, Ng V, Chen D X, Ge J D, Luo B. An empirical comparison of pre-trained models of source code. 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE). Melbourne, Australia: IEEE, 2023. 2136−2148

[51] Feng Z Y, Guo D, Tang D Y, Duan N, Feng X C, Gong M, et al. Codebert: A pre-trained model for programming and natural languages. arXiv preprint arXiv: 2002.08155, 2020.

[52] Li Z Y, Lu S, Guo D Y, Duan N, Jannu S, Jenks G, et al. Codereviewer: Pre-training for automating code review activities. arXiv preprint arXiv: 2203.09095, 2022.

[53] Chakraborty S, Ahmed T, Ding Y, Devanbu P T, Ray B. Natgen: generative pre-training by "naturalizing" source code. In: Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering. Singapore, Singapore: ACM, 2022. 18−30

[54] Wang Y, Wang W S, Joty S, Hoi S C H. Codet5: Identifier-aware unified pre-trained encoder-decoder models for code understanding and generation. arXiv preprint arXiv: 2109.00859, 2021.

[55] Wang Y, Le H, Gotmare A, Bui N, Li J N, Hoi S. Codet5+: Open code large language models for code understanding and generation. arXiv preprint arXiv: 2305.07922, 2023.

[56] Ding Y, Chakraborty S, Buratti L, Pujar S, Morari A, Kaiser G, et al. CONCORD: clone-aware contrastive learning for source code. In: Proceedings of the 32nd ACM SIGSOFT International Symposium on Software Testing and Analysis. Seattle, WA, USA: ACM, 2023. 26−38

[57] Poesia G, Polozov O, Le V, Tiwari A, Soares G, Meek C, et al. Synchromesh: Reliable code generation from pre-trained language models. arXiv preprint arXiv: 2201.11227, 2022.

[58] Wang R C, Xu S L, Tian Y, Ji X Y, Sun X B, Jiang S J. SCL-CVD: Supervised contrastive learning for code vulnerability detection via GraphCodeBERT. Computers & Security, 2024: Article No. 103994

[59] Zeng J W, Zhang T, Xu Z. DG-Trans: Automatic code summarization via dynamic graph attention-based transformer. 2021 IEEE 21st International Conference on Software Quality, Reliability and Security (QRS). Hainan, China: IEEE, 2021. 786−795

[60] Yang J, Fu C, Deng F Y, Wen M, Guo X W, Wan C H. Toward interpretable graph tensor convolution neural network for code semantics embedding. ACM Transactions on Software Engineering and Methodology, 2023, 32(5): 1−40 doi: 10.1145/3582574

[61] Peng J X, Wang Y, Xue J F, Liu Z Y. Fast Cross-platform binary code similarity detection framework based on CFGs taking advantage of NLP and inductive GNN. Chinese Journal of Electronics, 2024, 33(1): 128−138 doi: 10.23919/cje.2022.00.228

[62] Sherstinsky A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D: Nonlinear Phenomena, 2020, 404: Article No. 132306 doi: 10.1016/j.physd.2019.132306

[63] Cahuantzi R, Chen X, Güttel S. A comparison of LSTM and GRU networks for learning symbolic sequences. Science and Information Conference. Cham: Springer Nature Switzerland, 2023. 771−785

[64] Wang R Y, Zhang H W, Lu G L, Lyu L, Lyu C. Fret: Functional reinforced transformer with bert for code summarization. IEEE Access, 2020, 8: 135591−135604 doi: 10.1109/ACCESS.2020.3011744

[65] Alqarni M, Azim A. Low Level Source Code Vulnerability Detection Using Advanced BERT Language Model. In: Proceedings of the 35th Canadian Conference on Artificial Intelligence Canadian AI. Toronto, Ontario, Canada: CAIAC, 2022. 1−11

[66] Mohammadkhani A H, Tantithamthavorn C, Hemmati H. Explaining transformer-based code models: What do they learn? When they do not work? 2023 IEEE 23rd International Working Conference on Source Code Analysis and Manipulation (SCAM). Bogotá, Colombia: IEEE, 2023. 96−106

[67] Shi E S, Wang Y L, Du L, Zhang H Y, Han S, Zhang D M, et al. Cocoast: representing source code via hierarchical splitting and reconstruction of abstract syntax trees. Empirical Software Engineering, 2023, 28(6): Article No. 135 doi: 10.1007/s10664-023-10378-9

[68] Zhang J, Wang X, Zhang H Y, Sun H L, Wang K X, Liu X D. A novel neural source code representation based on abstract syntax tree. 2019 IEEE/ACM 41st International Conference on Software Engineering (ICSE). Montreal, QC, Canada: IEEE, 2019. 783−794

[69] Gong L Y, Elhoushi M, Cheung A. AST-T5: Structure-aware pretraining for code generation and understanding. arXiv preprint arXiv: 2401.03003, 2024.

[70] Yang G, Jin T C, Dou L. Heterogeneous directed hypergraph neural network over abstract syntax tree (AST) for code classification. arXiv preprint arXiv: 2305.04228, 2023.

[71] Guo D Y, Lu S, Duan N, Wang Y L, Zhou M, Yin J. Unixcoder: Unified cross-modal pre-training for code representation. arXiv preprint arXiv: 2203.03850, 2022.

[72] Goodfellow I J, Shlens J, Szegedy C. Explaining and harnessing adversarial examples. arXiv preprint arXiv: 1412.6572, 2015.

[73] Dube S. High dimensional spaces, deep learning and adversarial examples. arXiv preprint arXiv: 1801.00634, 2018.

[74] Amsaleg L, Bailey J, Barbe A, Erfani S M, Furon T, Houle M E. High intrinsic dimensionality facilitates adversarial attack: Theoretical evidence. IEEE Transactions on Information Forensics and Security, 2020, 16: 854−865 doi: 10.1109/tifs.2020.3023274

[75] Tanay T, Griffin L. A boundary tilting perspective on the phenomenon of adversarial examples. arXiv preprint arXiv: 1608.07690, 2016.

[76] Wei H, Tang H, Jia X M, Wang Z X, Yu H X, Li Z B. Physical adversarial attack meets computer vision: A decade survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(12): 9797−9817 doi: 10.1109/TPAMI.2024.3430860

[77] Liu D Z, Yang M Y, Qu X Y, Zhou P, Cheng Y, Hu W. A survey of attacks on large vision-language models: resources, advances, and future trends. IEEE Transactions on Neural Networks and Learning Systems, 2025: 1−15

[78] Guo Kai-Wei, Yang Kui-Wu, Zhang Wan-Li, Hu Xue-Xian, Liu Wen-Zhao. A review of adversarial examples for optical character recognition. Journal of Image and Graphics, 2024, 29(09): 2672−2691

[79] Zhang H Z, Fu Z Y, Li G, Ma L, Zhao Z H, Yang H A, et al. Towards robustness of deep program processing models-detection, estimation, and enhancement. ACM Transactions on Software Engineering and Methodology (TOSEM), 2022, 31(3): 1−40 doi: 10.1145/3511887

[80] Biggio B, Fumera G, Roli F. Security evaluation of pattern classifiers under attack. IEEE Transactions on Knowledge and Data Engineering, 2013, 26(4): 984−996

[81] Yu Zheng-Fei, Yan Qiao, Zhou Yun. A survey on adversarial machine learning for cyberspace defense. Acta Automatica Sinica, 2022, 48(07): 1625−1649 doi: 10.16383/j.aas.c210089

[82] Biggio B, Corona I, Nelson B, Rubinstein B I P, Maiorca D, Fumera G, et al. Security evaluation of support vector machines in adversarial environments. Support Vector Machines Applications, 2014: 105−153

[83] Chen Jin-Yin, Zou Jian-Fei, Pang Ling, Li Hu. Anti-interpolation based stealthy poisoning attack method on deep neural networks. Control and Decision, 2023, 38(12): 3381−3389

[84] Kloft M, Laskov P. Security analysis of online centroid anomaly detection. The Journal of Machine Learning Research, 2012, 13(1): 3681−3724

[85] Wang X R, Xiang Y, Gao J, Ding J. Information laundering for model privacy. arXiv preprint arXiv: 2009.06112, 2020.

[86] Carlini N, Tramer F, Wallace E, Jagielski M, Herbert-Voss A, Lee K, et al. Extracting training data from large language models. In: Proceedings of the 30th USENIX Security Symposium (USENIX Security 21). USENIX Association, 2021. 2633−2650

[87] Yu S W, Wang T, Wang J. Data augmentation by program transformation. Journal of Systems and Software, 2022, 190: Article No. 111304 doi: 10.1016/j.jss.2022.111304

[88] Chen P L, Li Z, Wen Y, Liu L L. Generating adversarial source programs using important tokens-based structural transformations. 2022 26th International Conference on Engineering of Complex Computer Systems (ICECCS). Hiroshima, Japan: IEEE, 2022. 173−182

[89] Srikant S, Liu S, Mitrovska T, Chang S Y, Fan Q F, Zhang G Y, et al. Generating adversarial computer programs using optimized obfuscations. arXiv preprint arXiv: 2103.11882, 2021.

[90] Pour M V, Li Z, Ma L, Hemmati H. A search-based testing framework for deep neural networks of source code embedding. 2021 14th IEEE Conference on Software Testing, Verification and Validation (ICST). Porto de Galinhas, Brazil: IEEE, 2021. 36−46

[91] Rabin M R I, Bui N D Q, Wang K, Yu Y J, Jiang L X, Alipour M A. On the generalizability of neural program models with respect to semantic-preserving program transformations. Information and Software Technology, 2021, 135: Article No. 106552 doi: 10.1016/j.infsof.2021.106552

[92] Tian Z, Chen J J, Jin Z. Code difference guided adversarial example generation for deep code models. 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE). Luxembourg, Luxembourg: IEEE, 2023. 850−862

[93] Tian J F, Wang C X, Li Z, Wen Y. Generating adversarial examples of source code classification models via q-learning-based markov decision process. 2021 IEEE 21st International Conference on Software Quality, Reliability and Security (QRS). Hainan, China: IEEE, 2021. 807−818

[94] Yefet N, Alon U, Yahav E. Adversarial examples for models of code. In: Proceedings of the ACM on Programming Languages. New York, NY, USA: Association for Computing Machinery, 2020. 4(OOPSLA): 1−30

[95] Yang Z, Shi J K, He J D, Lo D. Natural attack for pre-trained models of code. In: Proceedings of the 44th International Conference on Software Engineering. Pittsburgh, PA, USA: ACM, 2022. 1482−1493

[96] Kreuk F, Barak A, Aviv-Reuven S, Baruch M, Pinkas B, Keshet J. Deceiving end-to-end deep learning malware detectors using adversarial examples. arXiv preprint arXiv: 1802.04528, 2018.

[97] Singhal R, Soni M, Bhatt S, Khorasiya M, Jinwala D C. Enhancing robustness of malware detection model against white box adversarial attacks. International Conference on Distributed Computing and Intelligent Technology. Cham: Springer Nature Switzerland, 2023. 181−196

[98] Zhang H Z, Lu S, Li Z, Jin Z, Ma L, Liu Y, et al. CodeBERT-Attack: Adversarial attack against source code deep learning models via pre-trained model. Journal of Software: Evolution and Process, 2024, 36(3): Article No. e2571 doi: 10.1002/smr.2571

[99] Jha A, Reddy C K. Codeattack: Code-based adversarial attacks for pre-trained programming language models. In: Proceedings of the 37th Conference on Artificial Intelligence. Washington, DC, USA: AAAI, 2023. 37(12): 14892−14900

[100] Du X H, Wen M, Wei Z C, Wang S W, Jin H. An extensive study on adversarial attack against pre-trained models of code. In: Proceedings of the 31st ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering. San Francisco, CA, USA: ACM, 2023. 489−501

[101] Bielik P, Vechev M. Adversarial robustness for code. In: Proceedings of the 37th International Conference on Machine Learning. New York, USA: PMLR, 2020. 896−907

[102] Nguyen T D, Zhou Y, Le X B D, Thongtanunam P, Lo D. Adversarial attacks on code models with discriminative graph patterns. arXiv preprint arXiv: 2308.11161, 2023.

[103] Henkel J, Ramakrishnan G, Wang Z, Albarghouthi A, Jha S, Reps T. Semantic robustness of models of source code. 2022 IEEE International Conference on Software Analysis, Evolution and Reengineering (SANER). Honolulu, HI, USA: IEEE, 2022. 526−537

[104] Wang D Z, Jia Z Y, Li S S, Yu Y, Xiong Y, Dong W, et al. Bridging pre-trained models and downstream tasks for source code understanding. In: Proceedings of the 44th International Conference on Software Engineering. Pittsburgh, PA, USA: ACM, 2022. 287−298

[105] Quiring E, Maier A, Rieck K. Misleading authorship attribution of source code using adversarial learning. In: Proceedings of the 28th USENIX Security Symposium (USENIX Security 19). Santa Clara, CA, USA: USENIX, 2019. 479−496

[106] Gao F J, Wang Y, Wang K. Discrete adversarial attack to models of code. In: Proceedings of the ACM on Programming Languages. New York, NY, USA: ACM, 2023. 7(PLDI): 172−195

[107] Liu D X, Zhang S K. ALANCA: Active learning guided adversarial attacks for code comprehension on diverse pre-trained and large language models. 2024 IEEE International Conference on Software Analysis, Evolution and Reengineering (SANER). Rovaniemi, Finland: IEEE, 2024. 602−613

[108] Wang D Z, Chen B X, Li S S, Luo W, Peng S L, Dong W. One adapter for all programming languages? adapter tuning for code search and summarization. 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE). Melbourne, Australia: IEEE, 2023. 5−16

[109] Yang Y L, Fan H R, Lin C H, Li Q, Zhao Z Y, Shen C. Exploiting the adversarial example vulnerability of transfer learning of source code. IEEE Transactions on Information Forensics and Security, 2024, 19: 5880−5894 doi: 10.1109/TIFS.2024.3402153

[110] Baracaldo N, Chen B, Ludwig H, Safavi J A. Mitigating poisoning attacks on machine learning models: A data provenance based approach. In: Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security. Dallas, TX, USA: ACM, 2017. 103−110

[111] Li J, Li Z, Zhang H Z, Li G, Jin Z, Hu X, et al. Poison attack and poison detection on deep source code processing models.
ACM Transactions on Software Engineering and Methodology, 2024, 33(3): 1−31 doi: 10.1145/3630008 [112] Liu Y Q, Ma S Q, Aafer Y, Lee W C, Zhai J, Wang W H, et al. Trojaning attack on neural networks. In: Proceedings of the 25th Annual Network And Distributed System Security Symposium (NDSS 2018). San Diego, California, USA: 2018. 1−16 [113] Jang S, Choi J S, Jo J, Lee K, Hwang S J. Silent branding attack: Trigger-free data poisoning attack on text-to-image diffusion models. In: Proceedings of the Computer Vision and Pattern Recognition Conference. Nashville, TN, USA: IEEE, 2025. 8203−8212 [114] Nguyen T T, Quoc Viet Hung N, Nguyen T T, Huynh T T, Nguyen T T, Weidlich M, et al. Manipulating recommender systems: A survey of poisoning attacks and countermeasures. ACM Computing Surveys, 2024, 57(1): 1−39 doi: 10.1145/3677328 [115] Wang F, Wang X, Ban X J. Data poisoning attacks in intelligent transportation systems: A survey. Transportation Research Part C: Emerging Technologies, 2024, 165: Article No. 104750 doi: 10.1016/j.trc.2024.104750 [116] Yazdinejad A, Dehghantanha A, Karimipour H, Srivastava G, Parizi R M. A robust privacy-preserving federated learning model against model poisoning attacks. IEEE Transactions on Information Forensics and Security, 2024, 19: 6693−6708 doi: 10.1109/tifs.2024.3420126 [117] 李戈, 彭鑫, 王千祥, 谢涛, 金芝, 王戟, 等. 大模型:基于自然交互的人机协同软件开发与演化工具带来的挑战. 软件学报, 2023, 34(10): 4601−4606Li Ge, Peng Xin, Wang Qian-Xiang, Xie Tao, Jin Zhi, Wang Ji, et al. Challenges from LLMs as a natural language based human-machine collaborative tool for software development and evolution. Ruan Jian Xue Bao/Journal of Software, 2023, 34(10): 4601−4606 [118] Yang Y C, Li Y M, Yao H W, Yang B, He Y, Zhang T W, et al. Tapi: Towards target-specific and adversarial prompt injection against code llms. arXiv preprint arXiv: 2407.09164, 2024. [119] Ramakrishnan G, Albarghouthi A. Backdoors in neural models of source code. 
2022 26th International Conference on Pattern Recognition (ICPR). Montreal, QC, Canada: IEEE, 2022. 2892−2899 [120] Wu B Y, Chen H R, Zhang M D, Zhu Z H, Wei S K, Yuan D N, et al. Backdoorbench: A comprehensive benchmark of backdoor learning. Advances in Neural Information Processing Systems, 2022, 35: 10546−10559 [121] Li Y Z, Li Y M, Wu B Y, Li L K, He R, Lyu S. Invisible backdoor attack with sample-specific triggers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. Montreal, QC, Canada: IEEE, 2021. 16463−16472 [122] Wan Y, Zhang S J, Zhang H Y, Sui Y L, Xu G D, Yao D Z, et al. You see what i want you to see: poisoning vulnerabilities in neural code search. In: Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering. Singapore, Singapore: ACM, 2022. 1233−1245 [123] Sun W S, Chen Y C, Tao G H, Fang C R, Zhang X Y, Zhang Q Z, et al. Backdooring neural code search. arXiv preprint arXiv: 2305.17506, 2023. [124] Yang Z, Xu B W, Zhang J M, Kang H J, Shi J K, He J D. Stealthy backdoor attack for code models. IEEE Transactions on Software Engineering, 2024, 14(8): 1−18 doi: 10.21203/rs.3.rs-3969016/v1 [125] Li J, Li Z, Zhang H Z, Li G, Jin Z, Hu Xing, et al. Poison attack and defense on deep source code processing models. arXiv preprint arXiv: 2210.17029, 2022. [126] Bryksin T, Petukhov V, Alexin I, Prikhodko S, Shpilman A, Kovalenko V, et al. Using large-scale anomaly detection on code to improve kotlin compiler. In: Proceedings of the 17th International Conference on Mining Software Repositories. Seoul, Korea: IEEE, 2020. 455−465 [127] Li Y Z, Liu S Q, Chen K J, Xie X F, Zhang T W, Liu Y. Multi-target backdoor attacks for code pre-trained models. arXiv preprint arXiv: 2306.08350, 2023. [128] Schuster R, Song C, Tromer E, Shmatikov V. You autocomplete me: Poisoning vulnerabilities in neural code completion. 
In: Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), USENIX Association, 2021. 1559−1575 [129] Li H R, Chen Y L, Luo J L, Wang J C, Peng H, Kang Y, et al. Privacy in large language models: Attacks, defenses and future directions. arXiv preprint arXiv: 2310.10383, 2023. [130] Kurita K, Michel P, Neubig G. Weight poisoning attacks on pre-trained models. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Virtual Event: ACL, 2020. 2793−2806 [131] Chen K J, Meng Y X, Sun X F, Guo S W, Zhang T W, Li J W, et al. Badpre: Task-agnostic backdoor attacks to pre-trained nlp foundation models. arXiv preprint arXiv: 2110.02467, 2021. [132] Du W, Li P X, Zhao H D, Ju T J, Ren G, Liu G S. Uor: Universal backdoor attacks on pre-trained language models. arXiv preprint arXiv: 2305.09574, 2023. [133] Shen L J, Ji S L, Zhang X H, Li J F, Chen J, Shi J, et al. Backdoor pre-trained models can transfer to all. arXiv preprint arXiv: 2111.00197, 2021. [134] Ji Y J, Zhang X Y, Ji S L, Luo X P, Wang T. Model-reuse attacks on deep learning systems. In: Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security. New York, NY, USA: ACM, 2018. 349−363 [135] Qiang Y, Zhou X Y, Zade S Z, Roshani M A, Zytko D, Zhu D X. Learning to poison large language models during instruction tuning. arXiv preprint arXiv: 2402.13459, 2024. [136] Lukas N, Zhang Y X, Kerschbaum F. Deep neural network fingerprinting by conferrable adversarial examples. arXiv preprint arXiv: 1912.00888, 2019. [137] Oliynyk D, Mayer R, Rauber A. I know what you trained last summer: A survey on stealing machine learning models and defences. ACM Computing Surveys, 2023, 55(14s): 1−41 [138] Tramèr F, Zhang F, Juels A, Reiter M K, Ristenpart T. Stealing machine learning models via prediction APIs. In: Proceedings of the 25th USENIX Security Symposium (USENIX Security 16). Austin, TX, USA: USENIX, 2016. 
601−618 [139] Yue Z R, He Z K, Zeng H M, McAuley L L. Black-box attacks on sequential recommenders via data-free model extraction. In: Proceedings of the 15th ACM Conference on Recommender Systems. New York, NY, USA: ACM, 2021. 44−54 [140] Yu H G, Yang K C, Zhang T, Tsai Y Y, Ho T Y, Jin Y. CloudLeak: Large-scale deep learning models stealing through adversarial examples. In: Proceedings of the 27th Annual Network and Distributed System Security Symposium. San Diego, California, USA: The Internet Society, 2020, 38: Article No. 102 [141] Yang B R, Li W, Xiang L Y, Li B. SrcMarker: Dual-channel source code watermarking via scalable code transformations. In: Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP). San Francisco, CA, USA: IEEE, 2024. 97−97 [142] Wang B H, Gong N Z. Stealing hyperparameters in machine learning. In: Proceedings of the 2018 IEEE Symposium on Security and Privacy (SP). San Francisco, CA, USA: IEEE, 2018. 36−52 [143] Naseh A, Krishna K, Iyyer M, Houmansadr A. Stealing the decoding algorithms of language models. In: Proceedings of the 2023 ACM SIGSAC Conference on Computer and Communications Security. New York, NY, USA: ACM, 2023. 1835−1849. [144] 钱亮宏, 王福德, 孙晓海. 基于预训练Transformer语言模型的源代码剽窃检测研究. 吉林大学学报(信息科学版), 2024, 42(4): 747−753Qian Liang-Hong, Wang Fu-De, Sun Xiao-Hai. Research on source code plagiarism detection based on pre-trained transformer language model. Journal of Jilin University (Information Science Edition), 2024, 42(4): 747−753 [145] Lin Z J, Xu K, Fang C F, Zheng H D, Jaheezuddin A A, Shi J. Quda: Query-limited data-free model extraction. In: Proceedings of the 2023 ACM Asia Conference on Computer and Communications Security. New York, NY, USA: ACM, 2023. 913−924 [146] Sinha A, Namkoong H, Duchi J C. Certifiable distributional robustness with principled adversarial training. arXiv preprint arXiv: 1710.10571, 2017. [147] Zhang H Y, Yu Y D, Jiao J T, Xing E, Ghaoui L E, Jordan M. 
Theoretically principled trade-off between robustness and accuracy. In: Proceedings of the 36th International Conference on Machine Learning. Long Beach, California, USA: PMLR, 2019. 7472−7482 [148] Bao J, Dang C Y, Luo R, Zhang H W, Zhou Z X. Enhancing adversarial robustness with conformal prediction: A framework for guaranteed model reliability. arXiv preprint arXiv: 2506.07804, 2025: 1−20 [149] Chen Y C, Sun W S, Fang C R, Chen Z P, Ge Y F, Han T X, et al. Security of language models for code: A systematic literature review. ACM Transactions on Software Engineering and Methodology, 20251−64 [150] Kulkarni A, Weng T W. Interpretability-guided test-time adversarial defense. European Conference on Computer Vision. Cham: Springer Nature Switzerland, 2024. 466−483 [151] 陈晋音, 吴长安, 郑海斌, 王巍, 温浩. 基于通用逆扰动的对抗攻击防御方法. 自动化学报, 2023, 49(10): 2172−2187 doi: 10.16383/j.aas.c201077Chen Jin-Yin, Wu Chang-An, Zheng Hai-Bin, Wang Wei, Wen Hao. Universal inverse perturbation defense against adversarial attacks. Acta Automatica Sinica, 2023, 49(10): 2172−2187 doi: 10.16383/j.aas.c201077 [152] 王璐瑶, 曹渊, 刘博涵, 曾恩, 刘坤, 夏元清. 时间序列分类模型的集成对抗训练防御方法. 自动化学报, 2025, 51(01): 144−160 doi: 10.16383/j.aas.c240050Wang Lu-Yao, Cao Yuan, Liu Bo-Han, Zeng En, Liu Kun, Xia Yuan-Qing. Ensemble adversarial training defense for time series classification models. Acta Automatica Sinica, 2025, 51(01): 144−160 doi: 10.16383/j.aas.c240050 [153] Qian Z, Huang K J, Wang Q F, Zhang X Y. A survey of robust adversarial training in pattern recognition: Fundamental, theory, and methodologies. Pattern Recognition, 2022, 131: Article No. 108889 doi: 10.1016/j.patcog.2022.108889 [154] Bai T, Luo J Q, Zhao J, Wen B H, Wang Q. Recent advances in adversarial training for adversarial robustness. In: proceedings of the 30th International Joint Conference on Artificial Intelligence (IJCAI-21): ijcai.org, 2021. 4312−4321 [155] Zhang C, Wang Z F, Mangal R, Fredrikson M, Jia L M, Pasareanu C. 
Transfer attacks and defenses for large language models on coding tasks. arXiv preprint arXiv: 2311.13445, 2023. [156] Zhou Y, Zhang X Q, Shen J J, Han T T, Chen T L, Gall H. Adversarial robustness of deep code comment generation. ACM Transactions on Software Engineering and Methodology (TOSEM), 2022, 31(4): 1−30 [157] Li Y Y, Wu H Q, Zhao H. Semantic-preserving adversarial code comprehension. arXiv preprint arXiv: 2209.05130, 2022. [158] Yang G, Zhou Y, Yang W H, Yue T, Chen X, Chen T L. How important are good method names in neural code generation? a model robustness perspective. ACM Transactions on Software Engineering and Methodology, 2024, 33(3): 1−35 doi: 10.1145/3630010 [159] Yang G, Zhou Y, Zhang X Y, Chen X, Han T T, Chen T L. Assessing and improving syntactic adversarial robustness of pre-trained models for code translation. arXiv preprint arXiv: 2310.18587, 2023. [160] Moosavi-Dezfooli S M, Fawzi A, Fawzi O, Frossard P. Universal adversarial perturbations. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. Honolulu, Hawaii: IEEE, 1765−1773 [161] Hussain A, Rabin M R I, Ahmed T, Alipour A, Xu B W. Occlusion-based detection of trojan-triggering inputs in large language models of code. arXiv preprint arXiv: 2312.04004, 2023. [162] Qi S Y, Yang Y H, Gao S, Gao C Y, Xu Z L. Badcs: A backdoor attack framework for code search. arXiv preprint arXiv: 2305.05503, 2023. [163] Rashid A, Such J. StratDef: Strategic defense against adversarial attacks in ML-based malware detection. Computers & Security, 2023, 134: 1−18 doi: 10.1016/j.cose.2023.103459 [164] Liu Y Q, Lee W C, Tao G H, Ma S Q, Aafer Y, Zhang X Y. Abs: Scanning neural networks for back-doors by artificial brain stimulation. In: Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security. New York, NY, USA: ACM, 2019. 1265−1282 [165] Sun W S, Chen Y C, Fang C R, Feng Y B, Xiao Y, Guo A, et al. 
Eliminating backdoors in neural code models via trigger inversion. arXiv preprint arXiv: 2408.04683, 2024. [166] Chen C S, Dai J Z. Mitigating backdoor attacks in lstm-based text classification systems by backdoor keyword identification. Neurocomputing, 2021, 452: 253−262 doi: 10.1016/j.neucom.2021.04.105 [167] Mu F W, Wang J J, Yu Z H, Shi L, Wang S, Li M Y, et al. CodePurify: Defend backdoor attacks on neural code models via entropy-based purification. arXiv preprint arXiv: 2410.20136, 2024. [168] 刘广睿, 张伟哲, 李欣洁. 基于边缘样本的智能网络入侵检测系统数据污染防御方法. 计算机研究与发展, 2022, 59(10): 2348−2361Liu Guang-Rui, Zhang Wei-Zhe, Li Xin-Jie. Data contamination defense method for intelligent network intrusion detection systems based on edge examples. Journal of Computer Research and Development, 2022, 59(10): 2348−2361 [169] Papernot N, McDaniel P, Wu X, Jha S, Swami A. Distillation as a defense to adversarial perturbations against deep neural networks. 2016 IEEE symposium on security and privacy (SP). San Jose, CA, USA: IEEE, 2016. 582−597 [170] Stokes J W, Wang D, Marinescu M, Marino M, Bussone B. Attack and defense of dynamic analysis-based, adversarial neural malware detection models. MILCOM 2018-2018 IEEE Military Communications Conference (MILCOM). Los Angeles, CA, USA: IEEE, 2018. 1−8 [171] Li D Q, Li Q M, Ye Y F, Xu S H. Enhancing deep neural networks against adversarial malware examples. arXiv preprint arXiv: 2004.07919, 2020. [172] Grosse K, Papernot N, Manoharan P, Backes M, McDaniel P. Adversarial perturbations against deep neural networks for malware classification. arXiv preprint arXiv: 1606.04435, 2016. [173] Xia M Z, Xu Z C, Zhu H J. A novel knowledge distillation framework with intermediate loss for android malware detection. 2022 IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE). Gold Coast, Australia: IEEE, 2022. 1−6 [174] Choi S H, Bahk T, Ahn S, Choi Y H. Clustering approach for detecting multiple types of adversarial examples. 
Sensors, 2022, 22(10): Article No. 3826 doi: 10.3390/s22103826 [175] Cohen G, Sapiro G, Giryes R. Detecting adversarial samples using influence functions and nearest neighbors. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Virtual Event: IEEE/CVF, 2020. 14453−14462 [176] Zhao J, Wang S N, Zhao Y J, Hou X Y, Wang K L, Gao P M, et al. Models are codes: Towards measuring malicious code poisoning attacks on pre-trained model hubs. 2024 39th IEEE/ACM International Conference on Automated Software Engineering (ASE). New York, NY, USA: ACM, 2024. 2087−2098 [177] Rashid A, Such J. Malprotect: Stateful defense against adversarial query attacks in ml-based malware detection. IEEE Transactions on Information Forensics and Security, 20231−16 [178] 田志成, 张伟哲, 乔延臣, 刘洋. 基于模型解释的PE文件对抗性恶意代码检测. 软件学报, 2023, 34(04): 1926−1943 doi: 10.13328/j.cnki.jos.006722Tian Zhi-Cheng, Zhang Wei-Zhe, Qiao Yan-Chen, Liu Yang. Detection of adversarial PE File malware via model interpretation. Ruan Jian Xue Bao/Journal of Software, 2023, 34(04): 1926−1943 doi: 10.13328/j.cnki.jos.006722 [179] Tian Z, Chen J J, Zhang X Y. On-the-fly improving performance of deep code models via input denoising. 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE). Luxembourg, Luxembourg: IEEE, 2023. 560−572 [180] 张郅, 李欣, 叶乃夫, 胡凯茜. 基于暗知识保护的模型窃取防御技术DKP. 计算机应用, 2024, 44(07): 2080−2086Zhang Zhi, Li Xin, Ye Nai-Fu, Hu Kai-Qian. DKP: Defending against model stealing attacks based on dark knowledge protection. Journal of Computer Applications, 2024, 44(07): 2080−2086 [181] Asokan N. Model Stealing Attacks and Defenses: Where are we now? In: Proceedings of the 2023 ACM Asia Conference on Computer and Communications Security. New York, NY, USA: ACM, 2023. 327−327 [182] 张思思, 左信, 刘建伟. 深度学习中的对抗样本问题. 计算机学报, 2019, 42(08): 1886−1904 doi: 10.11897/SP.J.1016.2019.01886Zhang Si-Si, Zuo Xin, Liu Jian-Wei. The problem of the adversarial wxamples in deep Learning. 
Chinese Journal of Computers, 2019, 42(08): 1886−1904 doi: 10.11897/SP.J.1016.2019.01886 [183] Svajlenko J, Islam J F, Keivanloo I, Roy C K, Mia M M. Towards a big data curated benchmark of inter-project code clones. 2014 IEEE International Conference on Software Maintenance and Evolution. Victoria, BC, Canada: IEEE, 2014: 476−480 [184] Mou L L, Li G, Zhang L, Wang T, Jin Z. Convolutional neural networks over tree structures for programming language processing. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence. Phoenix, Arizona, USA: AAAI, 2016. 30(1): 1−7. [185] Alsulami B, Dauber E, Harang R, Mancoridis S, Greenstadt R. Source code authorship attribution using long short-term memory based networks. In: Proceedings of the Computer Security–ESORICS 2017. Oslo, Norway: Springer / Springer-Cham, 2017. 65−82 [186] Zhou Y Q, Liu S Q, Siow J, Du X N, Liu Y. Devign: Effective vulnerability identification by learning comprehensive program semantics via graph neural networks. Advances in Neural Information Processing Systems, 20191−11 [187] Raychev V, Bielik P, Vechev M. Probabilistic model for code with decision trees. ACM SIGPLAN Notices, 2016, 51(10): 731−747 doi: 10.1145/3022671.2984041 [188] Allamanis M, Sutton C. Mining source code repositories at massive scale using language modeling. 2013 10th Working Conference on Mining Software Repositories (MSR). San Francisco, CA, USA: IEEE, 2013. 207−216 [189] Hellendoorn V J, Devanbu P. Are deep neural networks the best choice for modeling source code? In: Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering. New York, NY, USA: ACM, 2017. 763−773 [190] Karampatsis R M, Babii H, Robbes R, Sutton C, Janes A. Big code!= big vocabulary: Open-vocabulary models for source code. In: Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering. New York, NY, USA: ACM, 2020. 1073−1085 [191] Lu S, Guo D, Ren S, Huang J J, Svyatkovskiy A, Blanco A, et al. 
Codexglue: A machine learning benchmark dataset for code understanding and generation. arXiv preprint arXiv: 2102.04664, 2021. [192] Hong D, Choi K, Lee H Y, Yu J, Kim Y, Park N, et al. ConCoDE: Hard-constrained differentiable co-exploration method for neural architectures and hardware accelerators. 2022: 1−15 [193] Husain H, Wu H H, Gazit T, Allamanis M, Brockschmidt M. Codesearchnet challenge: Evaluating the state of semantic code search. arXiv preprint arXiv: 1909.09436, 2019. [194] Kanade A, Maniatis P, Balakrishnan G, Shi K. Learning and evaluating contextual embedding of source code. In: Proceedings of the 37th International Conference on Machine Learning. Virtual Event: PMLR, 2020. 5110−5121 [195] Chirkova N, Troshin S. A simple approach for handling out-of-vocabulary identifiers in deep learning for source code. arXiv preprint arXiv: 2010.12663, 2020. [196] Wang H, Xiong X. A method for generating adversarial code samples based on dynamic weight awareness and rollback control. 2025 IEEE 7th International Conference on Communications, Information System and Computer Engineering (CISCE). Guangzhou, China: IEEE, 2025. 1172−1178. [197] Rozière B, Gehring J, Gloeckle F, Sootla S, Gat I, Tan X E, et al. Code llama: Open foundation models for code. arXiv preprint arXiv: 2308.12950, 2023. [198] Li R, Allal L B, Zi Y T, Muennighoff N, Kocetkov D, Mou C H, et al. Starcoder: may the source be with you!. arXiv preprint arXiv: 2305.06161, 2023. [199] Shayegani E, Mamun M A A, Fu Y, Zaree P, Dong Y, Abu-Ghazaleh N. Survey of vulnerabilities in large language models revealed by adversarial attacks. arXiv preprint arXiv: 2310.10844, 2023. [200] Li Z Z, Guo J W, Cai H P. System prompt poisoning: persistent attacks on large language models beyond user injection. arXiv preprint arXiv: 2505.06493, 2025. [201] Zou A, Wang Z F, Carlini N, Nasr M, Kolter J Z, Fredrikson M. Universal and transferable adversarial attacks on align language models. 
arXiv preprint arXiv: 2307.15043, 2023. [202] Yi S B, Liu Y L, Sun Z, Cong T S, He X L, Song J X, et al. Jailbreak attacks and defenses against large language models: A survey. arXiv preprint arXiv: 2407.04295, 2024. [203] 杨馨悦, 刘安, 赵雷, 陈林, 章晓芳. 基于混合图表示的软件变更预测方法. 软件学报, 2024, 35(08): 3824−3842 doi: 10.13328/j.cnki.jos.006947Yang Xin-Yue, Liu An, Zhao Lei, Chen Lin, Zhang Xiao-Fang. Software change prediction based on hybrid graph representation. Journal of Software, 2024, 35(08): 3824−3842 doi: 10.13328/j.cnki.jos.006947 [204] Bulla L, Midolo A, Mongiovì M, Tramontana E. EX-CODE: A robust and explainable model to detect AI-generated code. Information, 2024, 15(12): 1−18 doi: 10.3390/info15120819 [205] Zhang Z W, Zhang H Y, Shen B J, Gu X D. Diet code is healthy: Simplifying programs for pre-trained models of code. In: Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering. New York, NY, USA: ACM, 2022. 1073−1084 [206] Shi J K, Yang Z, Xu B W, Kang H J, Lo D. Compressing pre-trained models of code into 3 mb. In: Proceedings of the 37th IEEE/ACM International Conference on Automated Software Engineering. New York, NY, USA: ACM, 2022. 1−12 [207] Sun Q S, Chen N, Wang J N, Gao M, Li X. TransCoder: Towards unified transferable code representation learning inspired by human skills. arXiv preprint arXiv: 2306.07285, 2023. [208] Tufano M, Kimko J, Wang S, Watson C, Bavota G, Penta M D, et al. Deepmutation: A neural mutation tool. In: Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering: Companion Proceedings. New York, NY, USA: ACM, 2020. 29−32 -
