
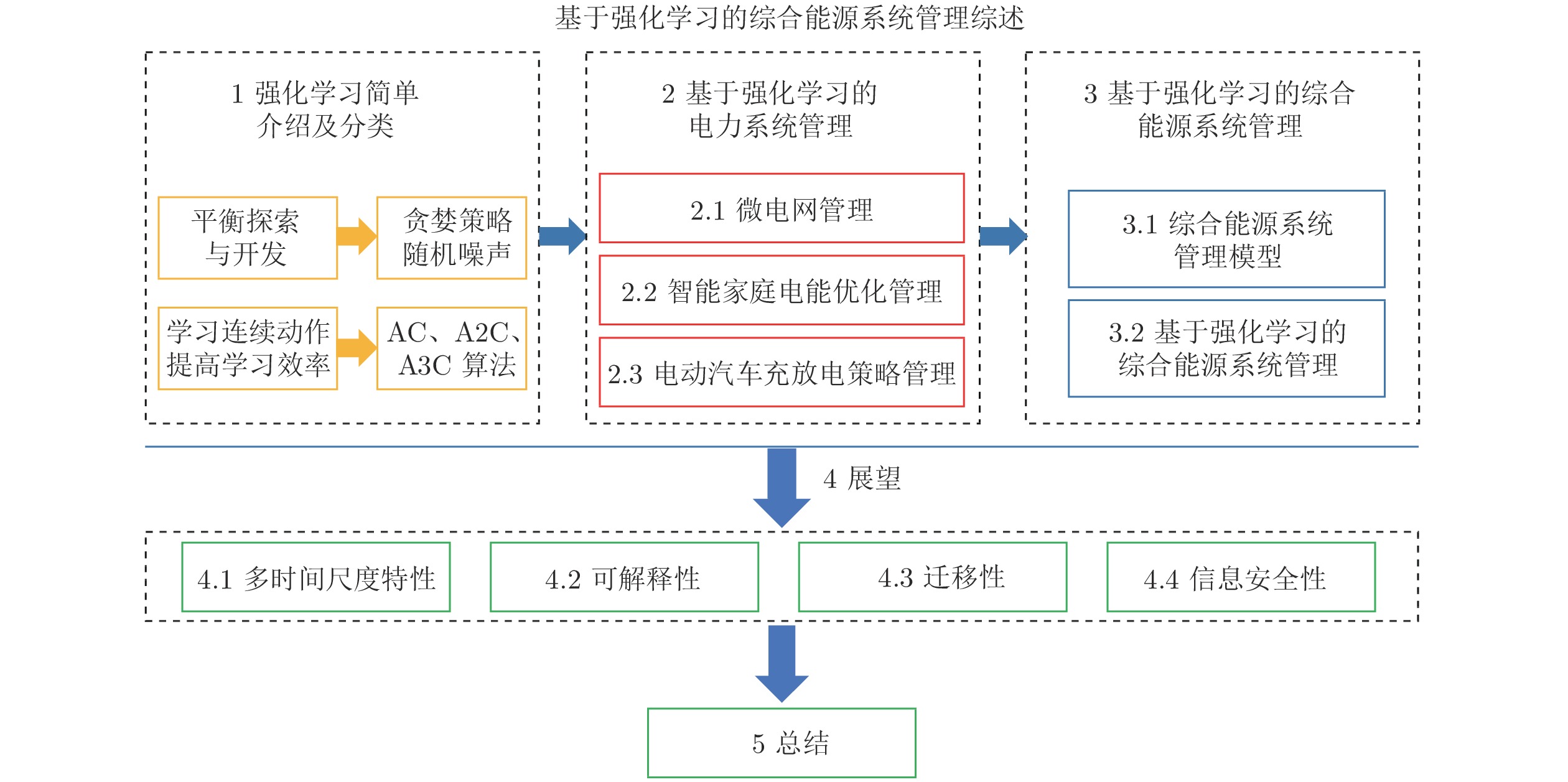

Abstract: To meet growing energy demand and reduce environmental damage, energy conservation has become a long-term strategic policy for global economic and social development. Stronger energy management improves energy efficiency and promotes energy conservation and emission reduction. However, the integration of renewable energy and flexible loads turns the integrated energy system (IES) into a complex dynamic system with high uncertainty, which poses major challenges to modern energy management. Reinforcement learning (RL) is a typical interactive trial-and-error learning method. It is well suited to optimization problems in complex dynamic systems with uncertainty, and it has therefore attracted wide attention in IES management. This paper systematically reviews existing work that applies RL to IES management problems from the perspectives of models and algorithms. It then outlines future directions in four aspects: multi-time-scale characteristics, interpretability, transferability, and information security.


Table 1 Classification of reinforcement learning algorithms

Table 2 Microgrid management based on reinforcement learning

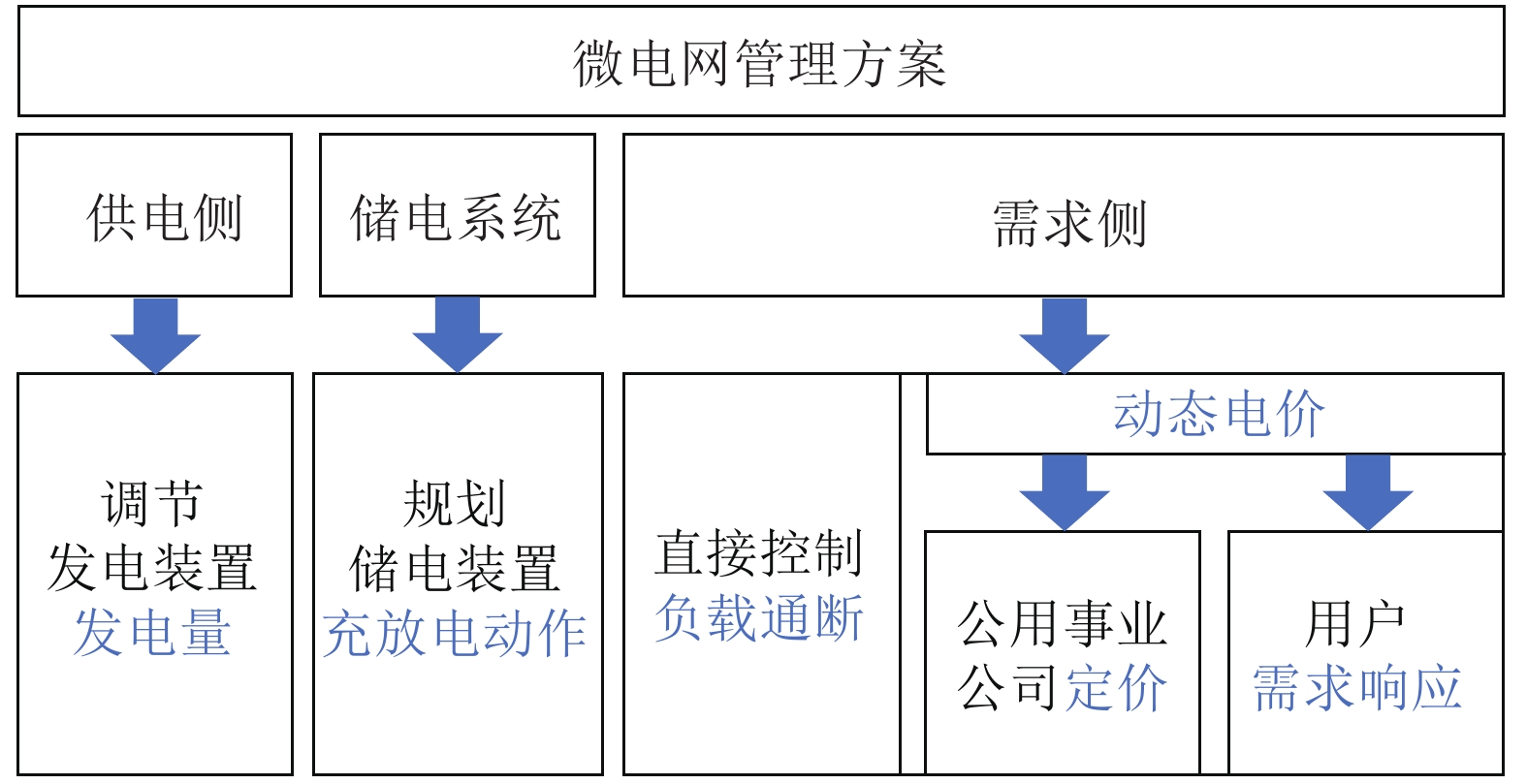

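| Ref. | Time scale | Management scheme | Solution algorithm | Algorithm performance (convergence stability / computation speed / privacy protection / adaptability) |
|---|---|---|---|---|
| [22] | Intraday rolling | Utility company pricing | Adaptive reinforcement learning | √ √ √ |
| [41] | Real-time adjustment | Energy storage regulation | Deep deterministic policy gradient | √ |
| [43] | Day-ahead scheduling | Consumer price awareness | Finite-horizon deep deterministic policy gradient | √ |
| [43] | Intraday rolling | Consumer price awareness | Finite-horizon recurrent deterministic policy gradient | √ √ |
| [47] | Intraday rolling | Utility company pricing | Game theory + reinforcement learning | √ √ |
| [48] | Day-ahead scheduling | Utility company pricing | Monte Carlo method | √ |
| [50] | Real-time adjustment | Energy storage regulation | Double deep Q-network | √ |
| [56] | Day-ahead scheduling | Direct load control | Dueling deep Q-network | √ |
| [58] | Intraday rolling | Utility company pricing | Q-learning | √ |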

Table 3 Algorithms for electric vehicle charging and discharging management

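| Ref. | Uncertainty handling | High-dimensional variable handling | Solution algorithm | Algorithm performance (computation speed / adaptability) | Remarks |
|---|---|---|---|---|---|
| [31] | Data-driven | Deep network | Charging-control deep deterministic policy gradient (CDDPG) | √ | |
| [87] | Mechanism-model-driven | - | Parameter-adaptive differential evolution | | Computation time is acceptable only relative to conventional differential evolution |
| [88] | Known model | Hierarchical optimization | Scenario-tree-based dynamic programming | × | |
| [95] | Data-driven | Deep network | Deep Q-network | √ | |
| [97] | Known model | Hierarchical optimization | Bi-level proximal policy optimization | √ | Outperforms other strategies only in overall performance; tracks wind power generation well |
| [99] | Mechanism-model-driven | Hierarchical optimization | Policy improvement based on distributed simulation | | The distributed method is scalable |
| [100] | Data-driven | Two-dimensional table | Fitted Q-iteration | | Performance depends on the time span of the given training set |
| [101] | Data-driven | - | Safe deep reinforcement learning | √ | |
| [103] | Data-driven | Deep network | Deep Q-network | √ | |
| [105] | Data-driven | Deep network | Deep deterministic policy gradient | × | A closed-loop control framework strictly guarantees voltage security |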

Table 4 Conventional algorithms for integrated energy system management

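| Ref. | Scale | Time scale | Algorithm | Additional considerations |
|---|---|---|---|---|
| [123] | Community | Real-time adjustment | Mixed-integer linear programming | Considers the stochastic characteristics of photovoltaic producers and the conditional value-at-risk |
| [125] | Community | Real-time adjustment | Cooperative game | Considers autonomous scheduling and information confidentiality of each energy hub |
| [126] | - | Day-ahead planning | Alternating direction method of multipliers (ADMM) | Improves convergence and protects information |
| [127] | - | Intraday rolling, real-time adjustment | Game theory | Significantly shortens running time at the cost of lower social welfare |
| [128] | - | Intraday rolling | Mixed-integer nonlinear programming | Reduces the computational burden |
| [129] | City | Intraday rolling | Mixed-integer second-order cone programming + ADMM | Addresses the strong coupling and inherent nonconvexity of multi-energy networks and the local energy autonomy of heterogeneous energy hubs |
| [130] | City | Multi-time-scale | Bi-level metaheuristic based on multi-objective particle swarm optimization | The KPIs used are closely tied to national strategic goals |
| [131] | Community | Intraday rolling | Computerized algorithm | Decomposes a complex energy hub (EH) model into several simple EH models |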

Table 5 Integrated energy system management based on reinforcement learning

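| Ref. | Social objective | Solution algorithm | Algorithm performance (computation speed / adaptability) | Remarks |
|---|---|---|---|---|
| [118] | - | Monte Carlo method | √ | Fast convergence |
| [119] | Environmental friendliness | Q-learning | √ | |
| [133] | - | Prioritized deep deterministic policy gradient | √ | |
| [134] | - | Distributed proximal policy optimization | √ | Guaranteed convergence |
| [135] | Load smoothing | Deep deterministic policy gradient | √ | |
| [136] | - | Artificial neural network + reinforcement learning | | Jointly optimizes energy hub system design and operating strategy |
| [137] | User satisfaction | Trust region policy gradient + deep deterministic policy gradient (DDPG) | | DDPG obtains the better policy; neither method obtains the optimal configuration and control strategy in one step |
| [138] | - | Multi-agent bargaining learning + reinforcement learning | √ | Strong global search capability; handles distributed optimization of large, complex energy systems |
| [139] | User satisfaction | Deep double neural fitted Q-iteration | √ | Improves robustness; the model-free algorithm underperforms model-based algorithms |
| [140] | Environmental friendliness | Actor-critic algorithm | √ | Good stability |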

[1] Sun Qiu-Ye, Teng Fei, Zhang Hua-Guang. Energy internet and its key control issues. Acta Automatica Sinica, 2017, 43(2): 176-194

[2] Yang T, Zhao L Y, Li W, Zomaya A Y. Reinforcement learning in sustainable energy and electric systems: A survey. Annual Reviews in Control, 2020, 49: 145-163 doi: 10.1016/j.arcontrol.2020.03.001

[3] National Development and Reform Commission and National Energy Administration. Revolutionary strategy of energy production and consumption (2016-2030). China Electrical Equipment Industry, 2017(5): 39-47

[4] National Development and Reform Commission. Medium and long term special plan for energy conservation. Energy Conservation and Environmental Protection, 2004(11): 3-10

[5] Ping Zuo-Wei, He Wei, Li Jun-Lin, Yang Tao. Sparse learning for load modeling in microgrids. Acta Automatica Sinica, 2020, 46(9): 1798-1808

[6] Yao W F, Zhao J H, Wen F S, Dong Z Y, Xue Y S, Xu Y, et al. A multi-objective collaborative planning strategy for integrated power distribution and electric vehicle charging systems. IEEE Transactions on Power Systems, 2014, 29(4): 1811-1821 doi: 10.1109/TPWRS.2013.2296615

[7] Moghaddam M P, Damavandi M Y, Bahramara S, Haghifam M R. Modeling the impact of multi-energy players on electricity market in smart grid environment. In: Proceedings of the 2016 IEEE Innovative Smart Grid Technologies-Asia (ISGT-Asia). Melbourne, Australia: IEEE, 2016. 454-459

[8] Carli R, Dotoli M. Decentralized control for residential energy management of a smart users' microgrid with renewable energy exchange. IEEE/CAA Journal of Automatica Sinica, 2019, 6(3): 641-656 doi: 10.1109/JAS.2019.1911462

[9] Farrokhifar M, Aghdam F H, Alahyari A, Monavari A, Safari A. Optimal energy management and sizing of renewable energy and battery systems in residential sectors via a stochastic MILP model. Electric Power Systems Research, 2020, 187: Article No. 106483

[10] Moser A, Muschick D, Golles M, Nageler P, Schranzhofer H, Mach T, et al. A MILP-based modular energy management system for urban multi-energy systems: Performance and sensitivity analysis. Applied Energy, 2020, 261: Article No. 114342

[11] Alipour M, Zare K, Abapour M. MINLP probabilistic scheduling model for demand response programs integrated energy hubs. IEEE Transactions on Industrial Informatics, 2018, 14(1): 79-88 doi: 10.1109/TII.2017.2730440

[12] Sadeghianpourhamami N, Demeester T, Benoit D F, Strobbe M, Develder C. Modeling and analysis of residential flexibility: Timing of white good usage. Applied Energy, 2016, 179: 790-805 doi: 10.1016/j.apenergy.2016.07.012

[13] Bruce J, Sunderhauf N, Mirowski P, Hadsell R, Milford M. One-shot reinforcement learning for robot navigation with interactive replay. arXiv: 1711.10137, 2017.

[14] Mnih V, Kavukcuoglu K, Silver D, Graves A, Antonoglou I, Wierstra D, et al. Playing Atari with deep reinforcement learning. arXiv: 1312.5602, 2013.

[15] Wang X, Huang Q Y, Celikyilmaz A, Gao J F, Shen D H, Wang Y F, et al. Reinforced cross-modal matching and self-supervised imitation learning for vision-language navigation. In: Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, USA: IEEE, 2019. 6622-6631

[16] Segler M H S, Preuss M, Waller M P. Planning chemical syntheses with deep neural networks and symbolic AI. Nature, 2018, 555(7698): 604-610 doi: 10.1038/nature25978

[17] Hua H C, Qin Y C, Hao C T, Cao J W. Optimal energy management strategies for energy internet via deep reinforcement learning approach. Applied Energy, 2019, 239: 598-609 doi: 10.1016/j.apenergy.2019.01.145

[18] Kim S, Lim H. Reinforcement learning based energy management algorithm for smart energy buildings. Energies, 2018, 11(8): Article No. 2010

[19] Liu T, Hu X S, Li S E, Cao D P. Reinforcement learning optimized look-ahead energy management of a parallel hybrid electric vehicle. IEEE/ASME Transactions on Mechatronics, 2017, 22(4): 1497-1507 doi: 10.1109/TMECH.2017.2707338

[20] Kong W C, Dong Z Y, Jia Y W, Hill D J, Xu Y, Zhang Y. Short-term residential load forecasting based on LSTM recurrent neural network. IEEE Transactions on Smart Grid, 2019, 10(1): 841-851 doi: 10.1109/TSG.2017.2753802

[21] Xu X, Jia Y W, Xu Y, Xu Z, Chai S J, Lai C S. A multi-agent reinforcement learning-based data-driven method for home energy management. IEEE Transactions on Smart Grid, 2020, 11(4): 3201-3211 doi: 10.1109/TSG.2020.2971427

[22] Zhang Q Z, Dehghanpour K, Wang Z Y, Huang Q H. A learning-based power management method for networked microgrids under incomplete information. IEEE Transactions on Smart Grid, 2020, 11(2): 1193-1204 doi: 10.1109/TSG.2019.2933502

[23] Sun Chang-Yin, Mu Chao-Xu. Important scientific problems of multi-agent deep reinforcement learning. Acta Automatica Sinica, 2020, 46(7): 1301-1312

[24] Zou H L, Mao S W, Wang Y, Zhang F H, Chen X, Cheng L. A survey of energy management in interconnected multi-microgrids. IEEE Access, 2019, 7: 72158-72169 doi: 10.1109/ACCESS.2019.2920008

[25] Yu L, Qin S Q, Zhang M, Shen C, Jiang T, Guan X H. Deep reinforcement learning for smart building energy management: A survey. arXiv: 2008.05074, 2020.

[26] Cao D, Hu W H, Zhao J B, Zhang G Z, Zhang B, Liu Z, et al. Reinforcement learning and its applications in modern power and energy systems: A review. Journal of Modern Power Systems and Clean Energy, 2020, 8(6): 1029-1042 doi: 10.35833/MPCE.2020.000552

[27] Sutton R S, Barto A G. Reinforcement Learning: An Introduction (Second Edition). Cambridge: MIT Press, 2018.

[28] Wang F Y, Zhang H G, Liu D R. Adaptive dynamic programming: An introduction. IEEE Computational Intelligence Magazine, 2009, 4(2): 39-47 doi: 10.1109/MCI.2009.932261

[29] Tesauro G. Temporal difference learning and TD-Gammon. Communications of the ACM, 1995, 38(3): 58-68 doi: 10.1145/203330.203343

[30] Tokic M. Adaptive ε-greedy exploration in reinforcement learning based on value differences. In: Proceedings of the 33rd Annual German Conference on Artificial Intelligence. Karlsruhe, Germany: Springer Press, 2010. 203-210

[31] Zhang F Y, Yang Q Y, An D. CDDPG: A deep-reinforcement-learning-based approach for electric vehicle charging control. IEEE Internet of Things Journal, 2021, 8(5): 3075-3087 doi: 10.1109/JIOT.2020.3015204

[32] Konda V R, Tsitsiklis J N. On actor-critic algorithms. SIAM Journal on Control and Optimization, 2003, 42(4): 1143-1166 doi: 10.1137/S0363012901385691

[33] Yu L, Sun Y, Xu Z B, Shen C, Yue D, Jiang T, et al. Multi-agent deep reinforcement learning for HVAC control in commercial buildings. IEEE Transactions on Smart Grid, 2021, 12(1): 407-419 doi: 10.1109/TSG.2020.3011739

[34] Van Hasselt H, Guez A, Silver D. Deep reinforcement learning with double Q-learning. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence. Phoenix, USA: AAAI, 2016. 2094-2100

[35] Wang Z Y, Schaul T, Hessel M, Van Hasselt H, Lanctot M, De Freitas N. Dueling network architectures for deep reinforcement learning. In: Proceedings of the 33rd International Conference on Machine Learning. New York, USA: PMLR Press, 2016. 1995-2003

[36] Babaeizadeh M, Frosio I, Tyree S, Clemons J, Kautz J. Reinforcement learning through asynchronous advantage actor-critic on a GPU. In: Proceedings of the 5th International Conference on Learning Representations. Toulon, France: OpenReview.net, 2017.

[37] Schulman J, Levine S, Abbeel P, Jordan M I, Moritz P. Trust region policy optimization. In: Proceedings of the 32nd International Conference on Machine Learning. Lille, France: PMLR Press, 2015. 1889-1897

[38] Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O. Proximal policy optimization algorithms. arXiv: 1707.06347, 2017.

[39] Mnih V, Kavukcuoglu K, Silver D, Rusu A A, Veness J, Bellemare M G, et al. Human-level control through deep reinforcement learning. Nature, 2015, 518(7540): 529-533 doi: 10.1038/nature14236

[40] Lillicrap T P, Hunt J J, Pritzel A, Heess N, Erez T, Tassa Y, et al. Continuous control with deep reinforcement learning. arXiv: 1509.02971, 2015.

[41] Gorostiza F S, Gonzalez-Longatt F M. Deep reinforcement learning-based controller for SOC management of multi-electrical energy storage system. IEEE Transactions on Smart Grid, 2020, 11(6): 5039-5050 doi: 10.1109/TSG.2020.2996274

[42] Liang Y C, Guo C L, Ding Z H, Hua H C. Agent-based modeling in electricity market using deep deterministic policy gradient algorithm. IEEE Transactions on Power Systems, 2020, 35(6): 4180-4192 doi: 10.1109/TPWRS.2020.2999536

[43] Lei L, Tan Y, Dahlenburg G, Xiang W, Zheng K. Dynamic energy dispatch based on deep reinforcement learning in IoT-Driven smart isolated microgrids. IEEE Internet of Things Journal, 2021, 8(10): 7938-7953 doi: 10.1109/JIOT.2020.3042007

[44] Li H P, Wan Z Q, He H B. Real-time residential demand response. IEEE Transactions on Smart Grid, 2020, 11(5): 4144-4154 doi: 10.1109/TSG.2020.2978061

[45] Yu L, Xie W W, Xie D, Zou Y L, Zhang D Y, Sun Z X, et al. Deep reinforcement learning for smart home energy management. IEEE Internet of Things Journal, 2020, 7(4): 2751-2762 doi: 10.1109/JIOT.2019.2957289

[46] Zhang C, Xu Y, Dong Z Y, Wong K P. Robust coordination of distributed generation and price-based demand response in Microgrids. IEEE Transactions on Smart Grid, 2018, 9(5): 4236-4247 doi: 10.1109/TSG.2017.2653198

[47] Latifi M, Rastegarnia A, Khalili A, Bazzi W M, Sanei S. A self-governed online energy management and trading for smart Micro/Nano-grids. IEEE Transactions on Industrial Electronics, 2020, 67(9): 7484-7498 doi: 10.1109/TIE.2019.2945280

[48] Du Y, Li F X. Intelligent multi-microgrid energy management based on deep neural network and model-free reinforcement learning. IEEE Transactions on Smart Grid, 2020, 11(2): 1066-1076 doi: 10.1109/TSG.2019.2930299

[49] Hafeez G, Alimgeer K S, Wadud Z, Khan I, Usman M, Qazi A B, et al. An innovative optimization strategy for efficient energy management with day-ahead demand response signal and energy consumption forecasting in smart grid using artificial neural network. IEEE Access, 2020, 8: 84415-84433 doi: 10.1109/ACCESS.2020.2989316

[50] Yu Y J, Cai Z F, Huang Y S. Energy storage arbitrage in grid-connected micro-grids under real-time market price uncertainty: A double-Q learning approach. IEEE Access, 2020, 8: 54456-54464 doi: 10.1109/ACCESS.2020.2981543

[51] Thirugnanam K, Kerk S K, Yuen C, Liu N, Zhang M. Energy management for renewable microgrid in reducing diesel generators usage with multiple types of battery. IEEE Transactions on Industrial Electronics, 2018, 65(8): 6772-6786 doi: 10.1109/TIE.2018.2795585

[52] Morstyn T, Hredzak B, Agelidis V G. Control strategies for microgrids with distributed energy storage systems: An overview. IEEE Transactions on Smart Grid, 2018, 9(4): 3652-3666 doi: 10.1109/TSG.2016.2637958

[53] Jin J L, Xu Y J, Khalid Y, Hassan N U. Optimal operation of energy storage with random renewable generation and AC/DC loads. IEEE Transactions on Smart Grid, 2018, 9(3): 2314-2326

[54] Bouakkaz A, Mena A J G, Haddad S, Ferrari M L. Efficient energy scheduling considering cost reduction and energy saving in hybrid energy system with energy storage. Journal of Energy Storage, 2021, 33: Article No. 101887

[55] Luo F J, Meng K, Dong Z Y, Zheng Y, Chen Y Y, Wong K P. Coordinated operational planning for wind farm with battery energy storage system. IEEE Transactions on Sustainable Energy, 2015, 6(1): 253-262 doi: 10.1109/TSTE.2014.2367550

[56] Wang B, Li Y, Ming W Y, Wang S R. Deep reinforcement learning method for demand response management of interruptible load. IEEE Transactions on Smart Grid, 2020, 11(4): 3146-3155 doi: 10.1109/TSG.2020.2967430

[57] Zhang Y, van der Schaar M. Structure-aware stochastic storage management in smart grids. IEEE Journal of Selected Topics in Signal Processing, 2014, 8(6): 1098-1110 doi: 10.1109/JSTSP.2014.2346477

[58] Lu R Z, Hong S H, Zhang X F. A dynamic pricing demand response algorithm for smart grid: Reinforcement learning approach. Applied Energy, 2018, 220: 220-230 doi: 10.1016/j.apenergy.2018.03.072

[59] Rasheed M B, Qureshi M A, Javaid N, Alquthami T. Dynamic pricing mechanism with the integration of renewable energy source in smart grid. IEEE Access, 2020, 8: 16876-16892 doi: 10.1109/ACCESS.2020.2967798

[60] Yang H M, Zhang J, Qiu J, Zhang S H, Lai M Y, Dong Z Y. A practical pricing approach to smart grid demand response based on load classification. IEEE Transactions on Smart Grid, 2018, 9(1): 179-190 doi: 10.1109/TSG.2016.2547883

[61] Marquant J F, Evins R, Bollinger L A, Carmeliet J. A holarchic approach for multi-scale distributed energy system optimisation. Applied Energy, 2017, 208: 935-953 doi: 10.1016/j.apenergy.2017.09.057

[62] Wang Q, Wu H Y, Florita A R, Martinez-Anido C B, Hodge B M. The value of improved wind power forecasting: Grid flexibility quantification, ramp capability analysis, and impacts of electricity market operation timescales. Applied Energy, 2016, 184: 696-713 doi: 10.1016/j.apenergy.2016.11.016

[63] Shu J, Guan R, Wu L, Han B. A Bi-level approach for determining optimal dynamic retail electricity pricing of large industrial customers. IEEE Transactions on Smart Grid, 2019, 10(2): 2267-2277 doi: 10.1109/TSG.2018.2794329

[64] Mirzaei M, Keypour R, Savaghebi M, Golalipour K. Probabilistic optimal bi-level scheduling of a multi-microgrid system with electric vehicles. Journal of Electrical Engineering & Technology, 2020, 15(6): 2421-2436

[65] Dadashi-Rad M H, Ghasemi-Marzbali A, Ahangar R A. Modeling and planning of smart buildings energy in power system considering demand response. Energy, 2020, 213: Article No. 118770

[66] Liu D R, Xu Y C, Wei Q L, Liu X L. Residential energy scheduling for variable weather solar energy based on adaptive dynamic programming. IEEE/CAA Journal of Automatica Sinica, 2018, 5(1): 36-46 doi: 10.1109/JAS.2017.7510739

[67] Li Y Z, Wang P, Gooi H B, Ye J, Wu L. Multi-objective optimal dispatch of microgrid under uncertainties via interval optimization. IEEE Transactions on Smart Grid, 2019, 10(2): 2046-2058 doi: 10.1109/TSG.2017.2787790

[68] Bao Z J, Qiu W R, Wu L, Zhai F, Xu W J, Li B F, et al. Optimal multi-timescale demand side scheduling considering dynamic scenarios of electricity demand. IEEE Transactions on Smart Grid, 2019, 10(3): 2428-2439 doi: 10.1109/TSG.2018.2797893

[69] Qazi H S, Liu N, Wang T. Coordinated energy and reserve sharing of isolated microgrid cluster using deep reinforcement learning. In: Proceedings of the 5th Asia Conference on Power and Electrical Engineering (ACPEE). Chengdu, China: IEEE, 2020. 81-86

[70] Jayaraj S, Ahamed T P I, Kunju K B. Application of reinforcement learning algorithm for scheduling of microgrid. In: Proceedings of the 2019 Global Conference for Advancement in Technology (GCAT). Bangalore, India: IEEE, 2019. 1-5

[71] Mao S, Tang Y, Dong Z W, Meng K, Dong Z Y, Qian F. A privacy preserving distributed optimization algorithm for economic dispatch over time-varying directed networks. IEEE Transactions on Industrial Informatics, 2021, 17(3): 1689-1701 doi: 10.1109/TII.2020.2996198

[72] Parag Y, Sovacool B K. Electricity market design for the prosumer era. Nature Energy, 2016, 1(4): Article No. 16032

[73] Ruan L N, Yan Y, Guo S Y, Wen F S, Qiu X S. Priority-based residential energy management with collaborative edge and cloud computing. IEEE Transactions on Industrial Informatics, 2020, 16(3): 1848-1857 doi: 10.1109/TII.2019.2933631

[74] Wei Q L, Liu D R, Liu Y, Song R Z. Optimal constrained self-learning battery sequential management in microgrid via adaptive dynamic programming. IEEE/CAA Journal of Automatica Sinica, 2017, 4(2): 168-176 doi: 10.1109/JAS.2016.7510262

[75] Lee J, Wang W B, Niyato D. Demand-side scheduling based on deep actor-critic learning for smart grids. arXiv: 2005.01979, 2020.

[76] Mocanu E, Mocanu D C, Nguyen P H, Liotta A, Webber M E, Gibescu M, et al. On-line building energy optimization using deep reinforcement learning. IEEE Transactions on Smart Grid, 2019, 10(4): 3698-3708 doi: 10.1109/TSG.2018.2834219

[77] Diyan M, Khan M, Cao Z B, Silva B N, Han J H, Han K J. Intelligent home energy management system based on Bi-directional long-short term memory and reinforcement learning. In: Proceedings of the 2021 International Conference on Information Networking (ICOIN). Jeju Island, South Korea: IEEE, 2021. 782-787

[78] Lu R Z, Hong S H, Yu M M. Demand response for home energy management using reinforcement learning and artificial neural network. IEEE Transactions on Smart Grid, 2019, 10(6): 6629-6639 doi: 10.1109/TSG.2019.2909266

[79] Huang Z T, Chen J P, Fu Q M, Wu H J, Lu Y, Gao Z. HVAC optimal control with the multistep-actor critic algorithm in large action spaces. Mathematical Problems in Engineering, 2020, 2020: Article No. 1386418

[80] Yoon A Y, Kim Y J, Moon S I. Optimal retail pricing for demand response of HVAC systems in commercial buildings considering distribution network voltages. IEEE Transactions on Smart Grid, 2019, 10(5): 5492-5505 doi: 10.1109/TSG.2018.2883701

[81] Chen Z, Wu L. Residential appliance DR energy management with electric privacy protection by online stochastic optimization. IEEE Transactions on Smart Grid, 2013, 4(4): 1861-1869 doi: 10.1109/TSG.2013.2256803

[82] Li B Y, Wu J, Shi Y Y. Privacy-aware cost-effective scheduling considering non-schedulable appliances in smart home. In: Proceedings of the 2019 IEEE International Conference on Embedded Software and Systems (ICESS). Las Vegas, USA: IEEE, 2019. 1-8

[83] Rottondi C, Barbato A, Chen L, Verticale G. Enabling privacy in a distributed game-theoretical scheduling system for domestic appliances. IEEE Transactions on Smart Grid, 2017, 8(3): 1220-1230 doi: 10.1109/TSG.2015.2511038

[84] Chang H H, Chiu W Y, Sun H J, Chen C M. User-centric multiobjective approach to privacy preservation and energy cost minimization in smart home. IEEE Systems Journal, 2019, 13(1): 1030-1041 doi: 10.1109/JSYST.2018.2876345

[85] Kement C E, Gultekin H, Tavli B, Girici T, Uludag S. Comparative analysis of load-shaping-based privacy preservation strategies in a smart grid. IEEE Transactions on Industrial Informatics, 2017, 13(6): 3226-3235 doi: 10.1109/TII.2017.2718666

[86] Wu Y, Zhang J, Ravey A, Chrenko D, Miraoui A. Real-time energy management of photovoltaic-assisted electric vehicle charging station by Markov decision process. Journal of Power Sources, 2020, 476: Article No. 228504

[87] Li Y Z, Ni Z X, Zhao T Y, Yu M H, Liu Y, Wu L, et al. Coordinated scheduling for improving uncertain wind power adsorption in electric vehicles-wind integrated power systems by multiobjective optimization approach. IEEE Transactions on Industry Applications, 2020, 56(3): 2238-2250 doi: 10.1109/TIA.2020.2976909

[88] Yang Y, Jia Q S, Guan X H. Stochastic coordination of aggregated electric vehicle charging with on-site wind power at multiple buildings. In: Proceedings of the 56th IEEE Annual Conference on Decision and Control (CDC). Melbourne, Australia: IEEE, 2017. 4434-4439

[89] Wan C, Xu Z, Pinson P, Dong Z Y, Wong K P. Optimal prediction intervals of wind power generation. IEEE Transactions on Power Systems, 2014, 29(3): 1166-1174 doi: 10.1109/TPWRS.2013.2288100

[90] Wan C, Xu Z, Pinson P, Dong Z Y, Wong K P. Probabilistic forecasting of wind power generation using extreme learning machine. IEEE Transactions on Power Systems, 2014, 29(3): 1033-1044 doi: 10.1109/TPWRS.2013.2287871

[91] Kabir M E, Assi C, Tushar M H K, Yan J. Optimal scheduling of EV charging at a solar power-based charging station. IEEE Systems Journal, 2020, 14(3): 4221-4231 doi: 10.1109/JSYST.2020.2968270

[92] Zhao J H, Wen F S, Dong Z Y, Xue Y S, Wong K P. Optimal dispatch of electric vehicles and wind power using enhanced particle swarm optimization. IEEE Transactions on Industrial Informatics, 2012, 8(4): 889-899 doi: 10.1109/TII.2012.2205398

[93] Almalaq A, Hao J, Zhang J J, Wang F Y. Parallel building: A complex system approach for smart building energy management. IEEE/CAA Journal of Automatica Sinica, 2019, 6(6): 1452-1461 doi: 10.1109/JAS.2019.1911768

[94] Wang Yan-Wu, Cui Shi-Chang, Xiao Jiang-Wen, Shi Yang. A review on energy sharing for community energy prosumers. Control and Decision, 2020, 35(10): 2305-2318

[95] Wan Z Q, Li H P, He H B, Prokhorov D. Model-free real-time EV charging scheduling based on deep reinforcement learning. IEEE Transactions on Smart Grid, 2019, 10(5): 5246-5257 doi: 10.1109/TSG.2018.2879572

[96] Jin J L, Xu Y J, Yang Z Y. Optimal deadline scheduling for electric vehicle charging with energy storage and random supply. Automatica, 2020, 119: Article No. 109096

[97] Long T, Ma X T, Jia Q S. Bi-level proximal policy optimization for stochastic coordination of EV charging load with uncertain wind power. In: Proceedings of the 2019 IEEE Conference on Control Technology and Applications (CCTA). Hong Kong, China: IEEE, 2019. 302-307

[98] Long T, Tang J X, Jia Q S. Multi-scale event-based optimization for matching uncertain wind supply with EV charging demand. In: Proceedings of the 13th IEEE Conference on Automation Science and Engineering (CASE). Xi'an, China: IEEE, 2017. 847-852

[99] Yang Y, Jia Q S, Deconinck G, Guan X H, Qiu Z F, Hu Z C. Distributed coordination of EV charging with renewable energy in a microgrid of buildings. IEEE Transactions on Smart Grid, 2018, 9(6): 6253-6264 doi: 10.1109/TSG.2017.2707103

[100] Sadeghianpourhamami N, Deleu J, Develder C. Definition and evaluation of model-free coordination of electrical vehicle charging with reinforcement learning. IEEE Transactions on Smart Grid, 2020, 11(1): 203-214 doi: 10.1109/TSG.2019.2920320

[101] Li H P, Wan Z Q, He H B. Constrained EV charging scheduling based on safe deep reinforcement learning. IEEE Transactions on Smart Grid, 2020, 11(3): 2427-2439 doi: 10.1109/TSG.2019.2955437

[102] Ming F Z, Gao F, Liu K, Wu J, Xu Z B, Li W M. Constrained double deep Q-learning network for EVs charging scheduling with renewable energy. In: Proceedings of the 16th IEEE International Conference on Automation Science and Engineering (CASE). Hong Kong, China: IEEE, 2020. 636-641

[103] Qian T, Shao C C, Wang X L, Shahidehpour M. Deep reinforcement learning for EV charging navigation by coordinating smart grid and intelligent transportation system. IEEE Transactions on Smart Grid, 2020, 11(2): 1714-1723 doi: 10.1109/TSG.2019.2942593

[104] Da Silva F L, Nishida C E H, Roijers D M, Costa A H R. Coordination of electric vehicle charging through multiagent reinforcement learning. IEEE Transactions on Smart Grid, 2020, 11(3): 2347-2356 doi: 10.1109/TSG.2019.2952331

[105] Ding T, Zeng Z Y, Bai J W, Qin B Y, Yang Y H, Shahidehpour M. Optimal electric vehicle charging strategy with Markov decision process and reinforcement learning technique. IEEE Transactions on Industry Applications, 2020, 56(5): 5811-5823 doi: 10.1109/TIA.2020.2990096

[106] Xu Ren-Chao, Yan Wei-Wu, Wang Guo-Liang, Yang Jian-Cheng, Zhang Xi. Time series forecasting based on seasonality modeling and its application to electricity price forecasting. Acta Automatica Sinica, 2020, 46(6): 1136-1144

[107] Wu J Z, Yan J Y, Jia H J, Hatziargyriou N, Djilali N, Sun H B. Integrated energy systems. Applied Energy, 2016, 167: 155-157 doi: 10.1016/j.apenergy.2016.02.075

[108] Yu Xiao-Dan, Xu Xian-Dong, Chen Shuo-Yi, Wu Jian-Zhong, Jia Hong-Jie. A brief review to integrated energy system and energy internet. Transactions of China Electrotechnical Society, 2016, 31(1): 1-13 doi: 10.3969/j.issn.1000-6753.2016.01.001

[109] Hu Xu-Guang, Ma Da-Zhong, Zheng Jun, Zhang Hua-Guang, Wang Rui. An operation state analysis method for integrated energy system based on correlation information adversarial learning. Acta Automatica Sinica, 2020, 46(9): 1783-1797

[110] Huang B N, Li Y S, Zhang H G, Sun Q Y. Distributed optimal co-multi-microgrids energy management for energy internet. IEEE/CAA Journal of Automatica Sinica, 2016, 3(4): 357-364 doi: 10.1109/JAS.2016.7510073

[111] Sun Qiu-Ye, Hu Jing-Wei, Yang Ling-Xiao, Zhang Hua-Guang. We-energy hybrid modeling and parameter identification with GAN technology. Acta Automatica Sinica, 2018, 44(5): 901-914

[112] Qiu J, Dong Z Y, Zhao J H, Xu Y, Zheng Y, Li C X, et al. Multi-stage flexible expansion co-planning under uncertainties in a combined electricity and gas market. IEEE Transactions on Power Systems, 2015, 30(4): 2119-2129 doi: 10.1109/TPWRS.2014.2358269

[113] Sheikhi A, Rayati M, Bahrami S, Ranjbar A M. Integrated demand side management game in smart energy hubs. IEEE Transactions on Smart Grid, 2015, 6(2): 675-683 doi: 10.1109/TSG.2014.2377020

[114] O'Malley M J, Anwar M B, Heinen S, Kober T, McCalley J, McPherson M, et al. Multicarrier energy systems: Shaping our energy future. Proceedings of the IEEE, 2020, 108(9): 1437-1456 doi: 10.1109/JPROC.2020.2992251

[115] He J, Yuan Z J, Yang X L, Huang W T, Tu Y C, Li Y. Reliability modeling and evaluation of urban multi-energy systems: A review of the state of the art and future challenges. IEEE Access, 2020, 8: 98887-98909 doi: 10.1109/ACCESS.2020.2996708

[116] Farah A, Hassan H, Kawabe K, Nanahara T. Optimal planning of multi-carrier energy hub system using particle swarm optimization. In: Proceedings of the 2019 IEEE Innovative Smart Grid Technologies-Asia (ISGT Asia). Chengdu, China: IEEE, 2019. 3820-3825

[117] Hua W Q, You M L, Sun H J. Real-time price elasticity reinforcement learning for low carbon energy hub scheduling based on conditional random field. In: Proceedings of the 2019 IEEE/CIC International Conference on Communications Workshops in China (ICCC Workshops). Changchun, China: IEEE, 2019. 204-209

[118] Rayati M, Sheikhi A, Ranjbar A M. Applying reinforcement learning method to optimize an energy hub operation in the smart grid. In: Proceedings of the 2015 IEEE Power & Energy Society Innovative Smart Grid Technologies Conference (ISGT). Washington, USA: IEEE, 2015. 1-5

[119] Sun Q Y, Wang D L, Ma D Z, Huang B N. Multi-objective energy management for we-energy in energy internet using reinforcement learning. In: Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI). Honolulu, USA: IEEE, 2017. 1-6

[120] Guo Xu-Tao, Han Gao-Yan, Lyu Hong-Kun. Review of modeling methods of combined cooling, heating and power system. Zhejiang Electric Power, 2020, 39(4): 83-93

[121] Van Beuzekom I, Gibescu M, Slootweg J G. A review of multi-energy system planning and optimization tools for sustainable urban development. In: Proceedings of the 2015 IEEE Eindhoven PowerTech. Eindhoven, Netherlands: IEEE, 2015. 1-7

[122] Ma L, Liu N, Zhang J H, Wang L F. Real-time rolling horizon energy management for the energy-hub-coordinated prosumer community from a cooperative perspective. IEEE Transactions on Power Systems, 2019, 34(2): 1227-1242 doi: 10.1109/TPWRS.2018.2877236

[123] Paudyal S, Canizares C A, Bhattacharya K. Optimal operation of industrial energy hubs in smart grids. IEEE Transactions on Smart Grid, 2015, 6(2): 684-694 doi: 10.1109/TSG.2014.2373271

[124] Rastegar M, Fotuhi-Firuzabad M, Zareipour H, Moeini-Aghtaieh M. A probabilistic energy management scheme for renewable-based residential energy hubs. IEEE Transactions on Smart Grid, 2017, 8(5): 2217-2227 doi: 10.1109/TSG.2016.2518920

[125] Fan S L, Li Z S, Wang J H, Piao L J, Ai Q. Cooperative economic scheduling for multiple energy hubs: A bargaining game theoretic perspective. IEEE Access, 2018, 6: 27777-27789 doi: 10.1109/ACCESS.2018.2839108

[126] Yang Z, Hu J J, Ai X, Wu J C, Yang G Y. Transactive energy supported economic operation for multi-energy complementary microgrids. IEEE Transactions on Smart Grid, 2021, 12(1): 4-17 doi: 10.1109/TSG.2020.3009670

[127] Bahrami S, Toulabi M, Ranjbar S, Moeini-Aghtaie M, Ranjbar A M. A decentralized energy management framework for energy hubs in dynamic pricing markets. IEEE Transactions on Smart Grid, 2018, 9(6): 6780-6792 doi: 10.1109/TSG.2017.2723023

[128] Dolatabadi A, Jadidbonab M, Mohammadi-Ivatloo B. Short-term scheduling strategy for wind-based energy hub: A hybrid stochastic/IGDT approach. IEEE Transactions on Sustainable Energy, 2019, 10(1): 438-448 doi: 10.1109/TSTE.2017.2788086

[129] Xu D, Wu Q W, Zhou B, Li C B, Bai L, Huang S. Distributed multi-energy operation of coupled electricity, heating, and natural gas networks. IEEE Transactions on Sustainable Energy, 2020, 11(4): 2457-2469 doi: 10.1109/TSTE.2019.2961432

[130] Martinez R E, Perez B E Z, Reza A E, Rodriguez-Martinez A, Bravo E C, Morales W A. Optimal planning, design and operation of a regional energy mix using renewable generation. Study case: Yucatan peninsula. International Journal of Sustainable Energy, 2021, 40(3): 283-309 doi: 10.1080/14786451.2020.1806842

[131] Liu T H, Zhang D D, Dai H, Wu T. Intelligent modeling and optimization for smart energy hub. IEEE Transactions on Industrial Electronics, 2019, 66(12): 9898-9908 doi: 10.1109/TIE.2019.2903766

[132] Rayati M, Sheikhi A, Ranjbar A M. Optimising operational cost of a smart energy hub, the reinforcement learning approach. International Journal of Parallel, Emergent and Distributed Systems, 2015, 30(4): 325-341 doi: 10.1080/17445760.2014.974600

[133] Ye Y J, Qiu D W, Wu X D, Strbac G, Ward J. Model-free real-time autonomous control for a residential multi-energy system using deep reinforcement learning. IEEE Transactions on Smart Grid, 2020, 11(4): 3068-3082 doi: 10.1109/TSG.2020.2976771

[134] Zhou S Y, Hu Z J, Gu W, Jiang M, Chen M, Hong Q T, et al. Combined heat and power system intelligent economic dispatch: A deep reinforcement learning approach. International Journal of Electrical Power & Energy Systems, 2020, 120: Article No. 106016

[135] Zhang B, Hu W H, Li J H, Cao D, Huang R, Huang Q, et al. Dynamic energy conversion and management strategy for an integrated electricity and natural gas system with renewable energy: Deep reinforcement learning approach. Energy Conversion and Management, 2020, 220: Article No. 113063

[136] Perera A T D, Nik V M, Mauree D, Scartezzini J L. Design optimization of electrical hubs using hybrid evolutionary algorithm. In: Proceedings of the ASME 10th International Conference on Energy Sustainability Collocated With the ASME 2016 Power Conference and the ASME 2016 14th International Conference on Fuel Cell Science, Engineering and Technology. Charlotte, USA: American Society of Mechanical Engineers (ASME) Press, 2016.

[137] Bollenbacher J, Rhein B. Optimal configuration and control strategy in a multi-carrier-energy system using reinforcement learning methods. In: Proceedings of the 2017 International Energy and Sustainability Conference (IESC). Farmingdale, USA: IEEE, 2017. 1-6

[138] Zhang X S, Yu T, Zhang Z Y, Tang J L. Multi-agent bargaining learning for distributed energy hub economic dispatch. IEEE Access, 2018, 6: 39564-39573 doi: 10.1109/ACCESS.2018.2853263

[139] Nagy A, Kazmi H, Cheaib F, Driesen J. Deep reinforcement learning for optimal control of space heating. arXiv: 1805.03777, 2018.

[140] Wang Z, Wang L Q, Han Z Y, Zhao J. Multi-index evaluation based reinforcement learning method for cyclic optimization of multiple energy utilization in steel industry. In: Proceedings of the 39th Chinese Control Conference (CCC). Shenyang, China: IEEE, 2020. 5766-5771

[141] Ahrarinouri M, Rastegar M, Seifi A R. Multiagent reinforcement learning for energy management in residential buildings. IEEE Transactions on Industrial Informatics, 2021, 17(1): 659-666 doi: 10.1109/TII.2020.2977104

[142] Wang X C, Chen H K, Wu J, Ding Y R, Lou Q H, Liu S W. Bi-level multi-agents interactive decision-making model in regional integrated energy system. In: Proceedings of the 2019 IEEE 3rd Conference on Energy Internet and Energy System Integration (EI2). Changsha, China: IEEE, 2019. 2103-2108

[143] Molnar C. Interpretable machine learning [Online], available: https://christophm.github.io/interpretable-ml-book/, June 14, 2021

[144] Zhao Jin-Quan, Xia Xue, Xu Chun-Lei, Hu Wei, Shang Xue-Wei.
Review on application of new generation artificial intelligence technology in power system dispatching and operation. Automation of Electric Power Systems, 2020, 44(24): 1-10 [145] Takano T, Takase H, Kawanaka H, Tsuruoka S. Transfer method for reinforcement learning in same transition model-quick approach and preferential exploration. In: Proceedings of the 10th International Conference on Machine Learning and Applications and Workshops. Honolulu, USA: IEEE, 2011. 466−469 [146] Shao L, Zhu F, Li X L. Transfer learning for visual categorization: A survey. IEEE Transactions on Neural Networks and Learning Systems, 2015, 26(5): 1019-1034 doi: 10.1109/TNNLS.2014.2330900 [147] Finn C, Abbeel P, Levine S. Model-agnostic meta-learning for fast adaptation of deep networks. In: Proceedings of the 34th International Conference on Machine Learning. Sydney, Australia: ACM, 2017. 1126−1135 [148] Snell J, Swersky K, Zemel R. Prototypical networks for few-shot learning. In: Proceedings of the 31st International Conference on Neural Information Processing Systems. Long Beach, USA: ACM, 2017. 4080−4090 -
