Cross-modality Person Re-identification Based on Joint Constraints of Image and Feature

摘要:

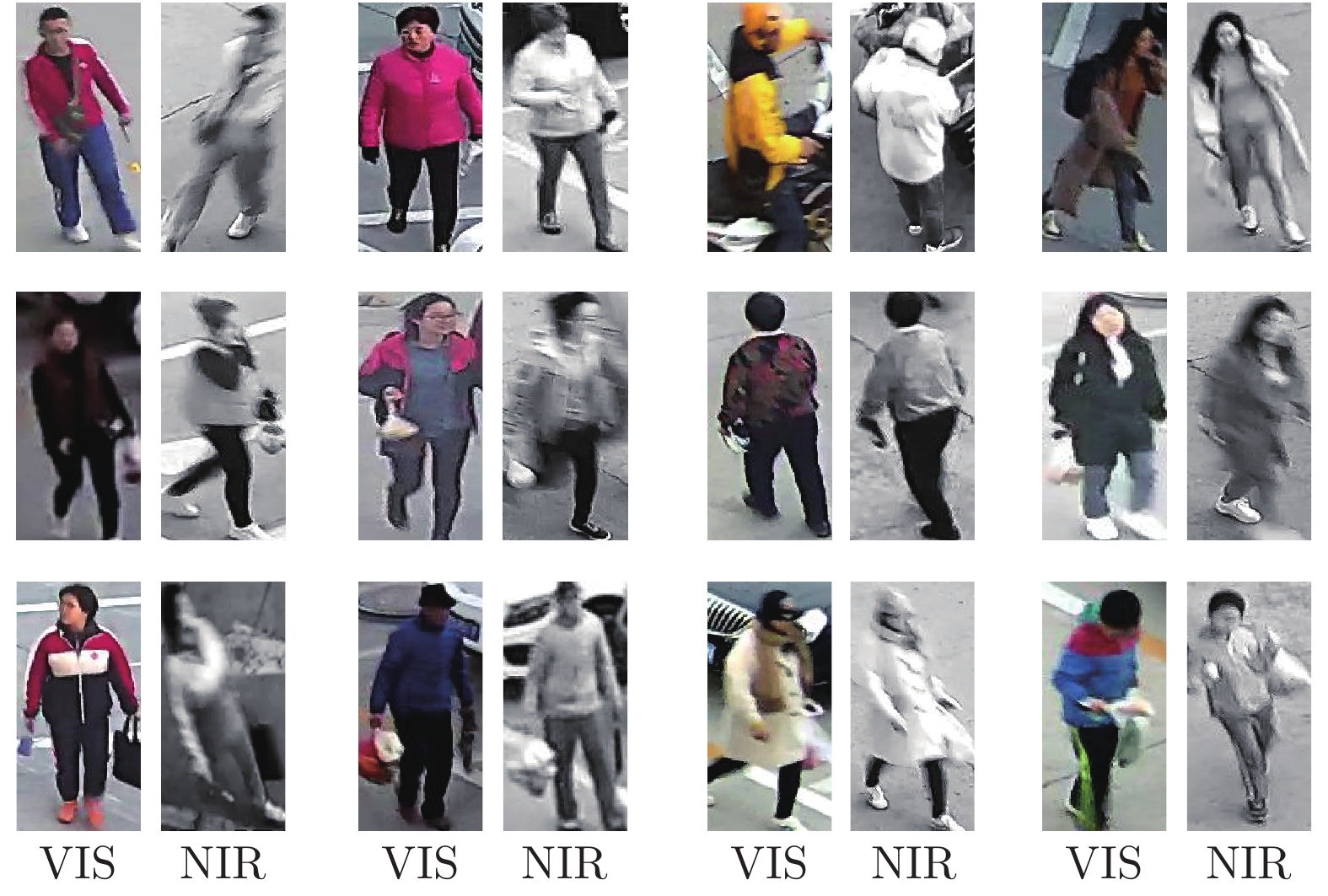

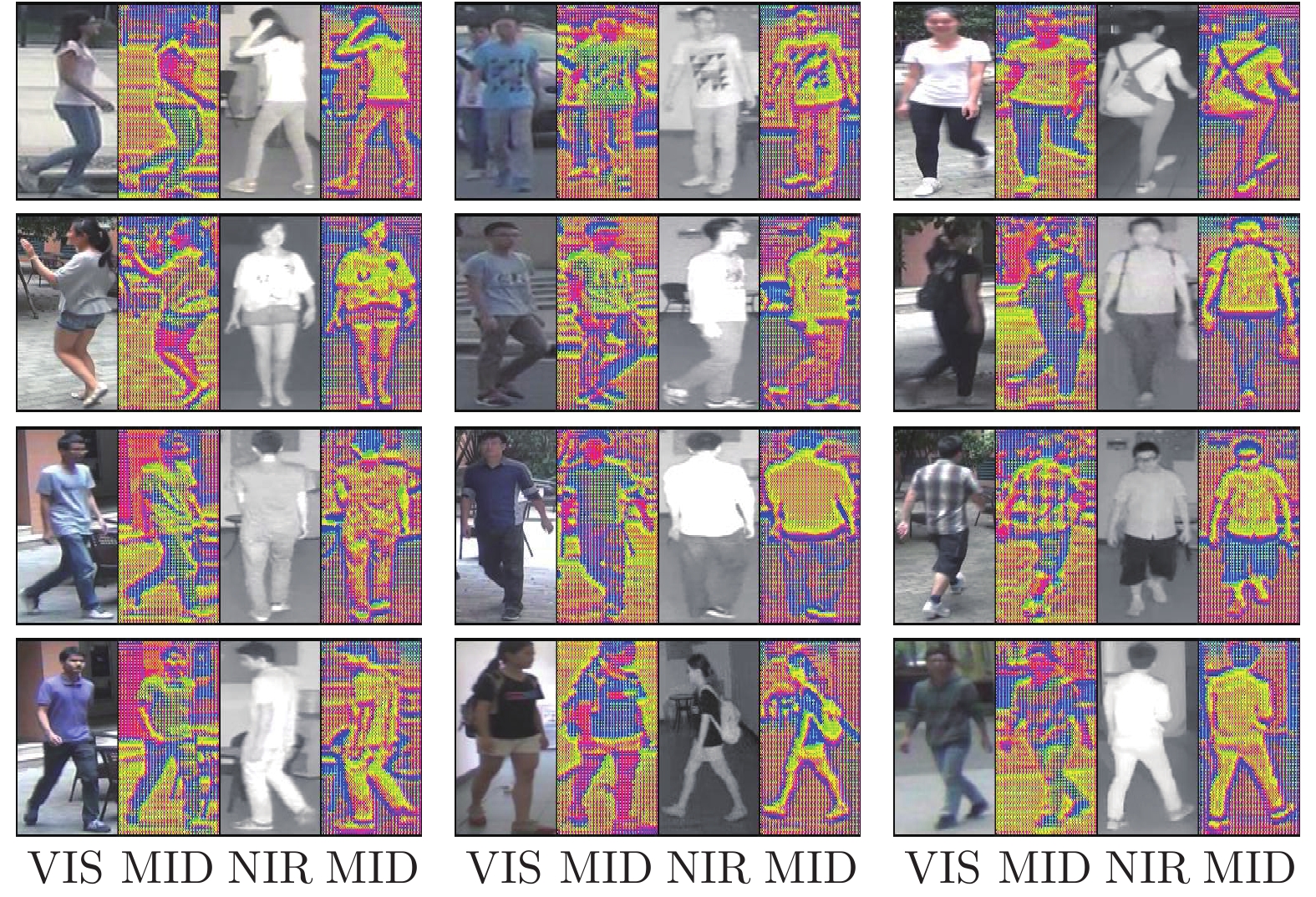

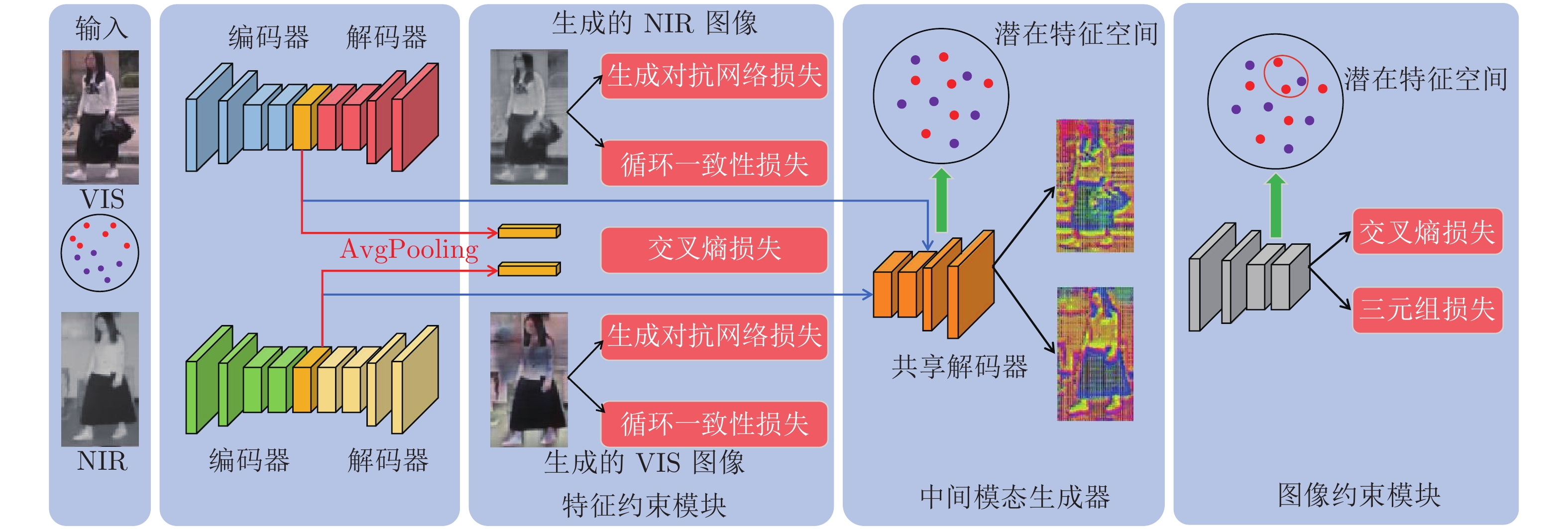

近年来, 基于可见光与近红外的行人重识别研究受到业界人士的广泛关注. 现有方法主要是利用二者之间的相互转换以减小模态间的差异. 但由于可见光图像和近红外图像之间的数据具有独立且分布不同的特点, 导致其相互转换的图像与真实图像之间存在数据差异. 因此, 本文提出了一个基于图像层和特征层联合约束的可见光与近红外相互转换的中间模态, 不仅实现了行人身份的一致性, 而且减少了模态间转换的差异性. 此外, 考虑到跨模态行人重识别数据集的稀缺性, 本文还构建了一个跨模态的行人重识别数据集, 并通过大量的实验证明了文章所提方法的有效性, 本文所提出的方法在经典公共数据集SYSU-MM01上比D2RL算法在 Rank-1和mAP上分别高出4.2 %和3.7 %, 该方法在本文构建的Parking-01数据集的近红外检索可见光模式下比ResNet-50算法在Rank-1和mAP上分别高出10.4 %和10.4 %.

Abstract:In recent years, the research of person re-identification based on visible and near-infrared has attracted widespread attention from the industry. The existing methods mainly use the mutual conversion between them to reduce the difference between their modalities. However, due to the problem of data independence and different distribution between visible image and near-infrared image, there is a large difference between the converted image and the real image, which leads to further improvement of this method. Therefore, this paper proposes a middle modality of conversion between visible and near-infrared modality. So visible and near-infrared can be seamlessly transferred, realizing the identity consistency of person and reducing the difference of conversion between modalities. In addition, considering the scarcity of cross modality person re-identification dataset, this paper also constructs a cross modality person re-identification dataset, and proves the effectiveness of the proposed method through a large number of experiments. In the All-Search Single-shot mode on the SYSU-MM01 dataset, the result of the proposed method is 4.2 % and 3.7 % higher than Rank1 and mAP using the D2RL algorithm, respectively. Compared with ResNet-50 algorithm, the result of the proposed method on the Parking-01 dataset constructed in this paper is 10.4 % and 10.4 % higher in Rank-1 and mAP respectively.
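The abstract does not spell out the loss functions behind the joint image-level and feature-level constraints. Purely as an illustration, and assuming the image-level constraint is a mean L1 distance between a real image and its converted counterpart while the feature-level constraint is a mean squared distance between their embeddings, the two can be combined into one weighted objective. The names `joint_constraint_loss`, `l1_distance`, `squared_l2_distance` and the weight `lam` are hypothetical; this is a sketch under those assumptions, not the paper's formulation.

```python
from typing import Sequence


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized flattened images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def squared_l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared difference between two equally sized feature vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def joint_constraint_loss(real_img: Sequence[float], converted_img: Sequence[float],
                          real_feat: Sequence[float], converted_feat: Sequence[float],
                          lam: float = 1.0) -> float:
    """Hypothetical joint objective: image-level L1 term + lam * feature-level term."""
    image_term = l1_distance(real_img, converted_img)              # pixel-level constraint
    feature_term = squared_l2_distance(real_feat, converted_feat)  # feature-level constraint
    return image_term + lam * feature_term
```

For example, an all-zero 4-pixel image against an all-one image gives an image term of 1.0, and zero versus one-valued 2-dimensional features give a feature term of 1.0, so with `lam=0.5` the loss is 1.5. The weight `lam` trades off appearance fidelity against identity (feature) consistency.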

Key words:

- Cross-modality

- person re-identification

- middle modality

- joint constraint

Table 1 Experimental results in all-search single-shot mode on SYSU-MM01 dataset

| Method | R1 (%) | R10 (%) | R20 (%) | mAP (%) |
|---|---|---|---|---|
| HOG[19] | 2.8 | 18.3 | 32.0 | 4.2 |
| LOMO[20] | 3.6 | 23.2 | 37.3 | 4.5 |
| Two-Stream[9] | 11.7 | 48.0 | 65.5 | 12.9 |
| One-Stream[9] | 12.1 | 49.7 | 66.8 | 13.7 |
| Zero-Padding[9] | 14.8 | 52.2 | 71.4 | 16.0 |
| BCTR[10] | 16.2 | 54.9 | 71.5 | 19.2 |
| BDTR[10] | 17.1 | 55.5 | 72.0 | 19.7 |
| D-HSME[21] | 20.7 | 62.8 | 78.0 | 23.2 |
| MSR[22] | 23.2 | 51.2 | 61.7 | 22.5 |
| ResNet-50* | 28.1 | 64.6 | 77.4 | 28.6 |
| cmGAN[12] | 27.0 | 67.5 | 80.6 | 27.8 |
| CMGN[23] | 27.2 | 68.2 | 81.8 | 27.9 |
| D2RL[13] | 28.9 | 70.6 | 82.4 | 29.2 |
| Proposed method | 33.1 | 73.9 | 83.7 | 32.9 |
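The tables report CMC accuracy at ranks 1, 10 and 20 (R1, R10, R20) together with mean average precision (mAP). The following is a minimal sketch of how these two metrics are commonly computed from a query-by-gallery distance matrix. The function name `evaluate` and its inputs are hypothetical, and the official SYSU-MM01 protocol additionally filters gallery images by camera and averages results over repeated random gallery draws, which this sketch omits.

```python
from typing import Dict, Sequence, Tuple


def evaluate(dist: Sequence[Sequence[float]],
             query_ids: Sequence[int],
             gallery_ids: Sequence[int],
             ranks: Tuple[int, ...] = (1, 10, 20)) -> Tuple[Dict[int, float], float]:
    """Return ({k: CMC accuracy at rank k in %}, mAP in %).

    dist[i][j] is the distance between query i and gallery item j
    (smaller means more similar).
    """
    cmc_hits = {k: 0 for k in ranks}
    ap_sum = 0.0
    valid = 0
    for qi, row in enumerate(dist):
        # Rank the gallery for this query by ascending distance.
        order = sorted(range(len(row)), key=lambda j: row[j])
        matches = [gallery_ids[j] == query_ids[qi] for j in order]
        if not any(matches):
            continue  # a query with no true match in the gallery is skipped
        valid += 1
        first_hit = matches.index(True)  # 0-based position of the first correct match
        for k in ranks:
            if first_hit < k:
                cmc_hits[k] += 1
        # Average precision: mean of precision@pos over every correct match.
        hits, precision_sum = 0, 0.0
        for pos, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precision_sum += hits / pos
        ap_sum += precision_sum / hits
    if valid == 0:
        raise ValueError("no query has a matching identity in the gallery")
    cmc = {k: 100.0 * cmc_hits[k] / valid for k in ranks}
    return cmc, 100.0 * ap_sum / valid
```

For example, `evaluate([[0.1, 0.5, 0.3], [0.2, 0.4, 0.9]], [1, 2], [1, 2, 1])` yields CMC values of 50.0, 100.0 and 100.0 at ranks 1, 10 and 20 and an mAP of 75.0: the first query's match is ranked first, while the second query's only match is ranked second.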

Table 2 Experimental results in all-search multi-shot mode on SYSU-MM01 dataset

Table 3 Experimental results in indoor-search single-shot mode on SYSU-MM01 dataset

Table 4 Experimental results in indoor-search multi-shot mode on SYSU-MM01 dataset

Table 5 Experimental results in near-infrared-to-visible retrieval mode

| Method | R1 (%) | R10 (%) | R20 (%) | mAP (%) |
|---|---|---|---|---|
| ResNet-50* | 15.5 | 39.7 | 51.9 | 19.3 |
| Proposed method | 25.9 | 53.8 | 62.8 | 29.7 |

Table 6 Experimental results in visible-to-near-infrared retrieval mode

| Method | R1 (%) | R10 (%) | R20 (%) | mAP (%) |
|---|---|---|---|---|
| ResNet-50* | 20.2 | 45.6 | 50.0 | 14.7 |
| Proposed method | 31.6 | 48.2 | 56.1 | 19.7 |

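Table 7 Experimental results of different modality conversions

| Method | R1 (%) | R10 (%) | R20 (%) | mAP (%) |
|---|---|---|---|---|
| ResNet-50* | 28.1 | 64.6 | 77.4 | 28.6 |
| Converted to visible | 29.6 | 69.8 | 80.5 | 30.7 |
| Converted to near-infrared | 30.8 | 71.5 | 83.2 | 31.2 |
| Proposed method | 33.1 | 73.9 | 83.7 | 32.9 |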

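Table 8 Experimental results with and without the cycle-consistency loss

| Method | R1 (%) | R10 (%) | R20 (%) | mAP (%) |
|---|---|---|---|---|
| Without cycle consistency | 29.6 | 67.1 | 78.3 | 31.1 |
| With cycle consistency | 33.1 | 73.9 | 83.7 | 32.9 |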
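Table 8 shows that adding the cycle-consistency loss raises R1 from 29.6 to 33.1 and mAP from 31.1 to 32.9. The exact form of that loss is not given in this section; the sketch below assumes the standard L1 cycle-consistency loss of CycleGAN [16], with one generator per translation direction. The generators `G` and `F` and the toy flattened-image type are hypothetical stand-ins, and in the paper's setting the translation may pass through the middle modality, which this two-domain sketch does not model.

```python
from typing import Callable, List, Sequence

Image = List[float]  # a flattened image; a toy stand-in for a real tensor
Generator = Callable[[Image], Image]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute pixel difference."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G: Generator, F: Generator,
                           visible: Sequence[Image], nir: Sequence[Image]) -> float:
    """CycleGAN-style cycle loss, averaged over each batch:
    mean ||F(G(x)) - x||_1 over visible x  +  mean ||G(F(y)) - y||_1 over NIR y.

    G maps visible -> near-infrared, F maps near-infrared -> visible (hypothetical).
    """
    forward = sum(l1(F(G(x)), x) for x in visible) / len(visible)  # visible -> NIR -> visible
    backward = sum(l1(G(F(y)), y) for y in nir) / len(nir)         # NIR -> visible -> NIR
    return forward + backward
```

With `G = lambda img: [2 * p for p in img]` and its exact inverse `F = lambda img: [p / 2 for p in img]`, the loss on `[[0.0, 1.0]]` and `[[2.0, 4.0]]` is 0.0. Adding a constant offset of 0.1 to `F` raises it to about 0.3, because the backward cycle passes the offset through `G`, which doubles it. Penalizing this reconstruction error discourages translations that discard identity information.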

[1] Ye Yu, Wang Zheng, Liang Chao, Han Zhen, Chen Jun, Hu Rui-Min. A survey on multi-source person re-identification. Acta Automatica Sinica, 2020, 46(9): 1869−1884

[2] Luo Hao, Jiang Wei, Fan Xing, Zhang Si-Peng. A survey on deep learning based person re-identification. Acta Automatica Sinica, 2019, 45(11): 2032−2049

[3] Zhou Yong, Wang Han-Zheng, Zhao Jia-Qi, Chen Ying, Yao Rui, Chen Si-Lin. Interpretable attention part model for person re-identification. Acta Automatica Sinica, 2020, 41(x): 1−13. doi: 10.16383/j.aas.c200493

[4] Li You-Jiao, Zhuo Li, Zhang Jing, Li Jia-Feng, Zhang Hui. A survey of person re-identification. Acta Automatica Sinica, 2018, 44(9): 1554−1568

[5] Zhao H, Tian M, Sun S, et al. Spindle net: Person re-identification with human body region guided feature decomposition and fusion. In: Proceedings of the IEEE CVPR. Hawaii, USA: IEEE, 2017. 1077−1085

[6] Sun Y, Zheng L, Yang Y, et al. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In: Proceedings of the ECCV. Munich, Germany: Springer, 2018. 480−496

[7] Hermans A, Beyer L, Leibe B. In defense of the triplet loss for person re-identification. arXiv preprint arXiv:1703.07737, 2017

[8] Wei L, Zhang S, Gao W, et al. Person transfer GAN to bridge domain gap for person re-identification. In: Proceedings of the IEEE CVPR. Salt Lake City, UT, USA: IEEE, 2018. 79−88

[9] Wu A, Zheng W S, Yu H X, et al. RGB-infrared cross-modality person re-identification. In: Proceedings of the IEEE ICCV. Venice, Italy: IEEE, 2017. 5380−5389

[10] Ye M, Wang Z, Lan X, et al. Visible thermal person re-identification via dual-constrained top-ranking. In: Proceedings of IJCAI. Stockholm, Sweden, 2018. 1: 2

[11] Ye M, Lan X, Li J, et al. Hierarchical discriminative learning for visible thermal person re-identification. In: Proceedings of AAAI. Louisiana, USA: AAAI Press, 2018. 32(1)

[12] Dai P, Ji R, Wang H, et al. Cross-modality person re-identification with generative adversarial training. In: Proceedings of IJCAI. Stockholm, Sweden, 2018. 1: 2

[13] Wang Z, Wang Z, Zheng Y, et al. Learning to reduce dual-level discrepancy for infrared-visible person re-identification. In: Proceedings of the IEEE CVPR. California, USA: IEEE, 2019. 618−626

[14] Wang G, Zhang T, Cheng J, et al. RGB-infrared cross-modality person re-identification via joint pixel and feature alignment. In: Proceedings of the IEEE ICCV. Seoul, Korea: IEEE, 2019. 3623−3632

[15] He K, Zhang X, Ren S, et al. Deep residual learning for image recognition. In: Proceedings of the IEEE CVPR. Las Vegas, USA: IEEE, 2016. 770−778

[16] Zhu J Y, Park T, Isola P, et al. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE ICCV. Venice, Italy: IEEE, 2017. 2223−2232

[17] Huang R, Zhang S, Li T, et al. Beyond face rotation: Global and local perception GAN for photorealistic and identity preserving frontal view synthesis. In: Proceedings of the IEEE ICCV. Venice, Italy: IEEE, 2017. 2439−2448

[18] Deng J, Dong W, Socher R, et al. ImageNet: A large-scale hierarchical image database. In: Proceedings of the IEEE CVPR. Miami, FL, USA: IEEE, 2009. 248−255

[19] Dalal N, Triggs B. Histograms of oriented gradients for human detection. In: Proceedings of the IEEE CVPR. San Diego, CA, USA: IEEE, 2005. 886−893

[20] Liao S, Hu Y, Zhu X, et al. Person re-identification by local maximal occurrence representation and metric learning. In: Proceedings of the IEEE CVPR. Boston, USA: IEEE, 2015. 2197−2206

[21] Hao Y, Wang N, Li J, et al. HSME: Hypersphere manifold embedding for visible thermal person re-identification. In: Proceedings of the AAAI. Hawaii, USA: AAAI Press, 2019, 33: 8385−8392

[22] Kang J K, Hoang T M, Park K R. Person re-identification between visible and thermal camera images based on deep residual CNN using single input. IEEE Access, 2019: 1−1

[23] Jiang J, Jin K, Qi M, et al. A cross-modal multi-granularity attention network for RGB-IR person re-identification. Neurocomputing, 2020
