
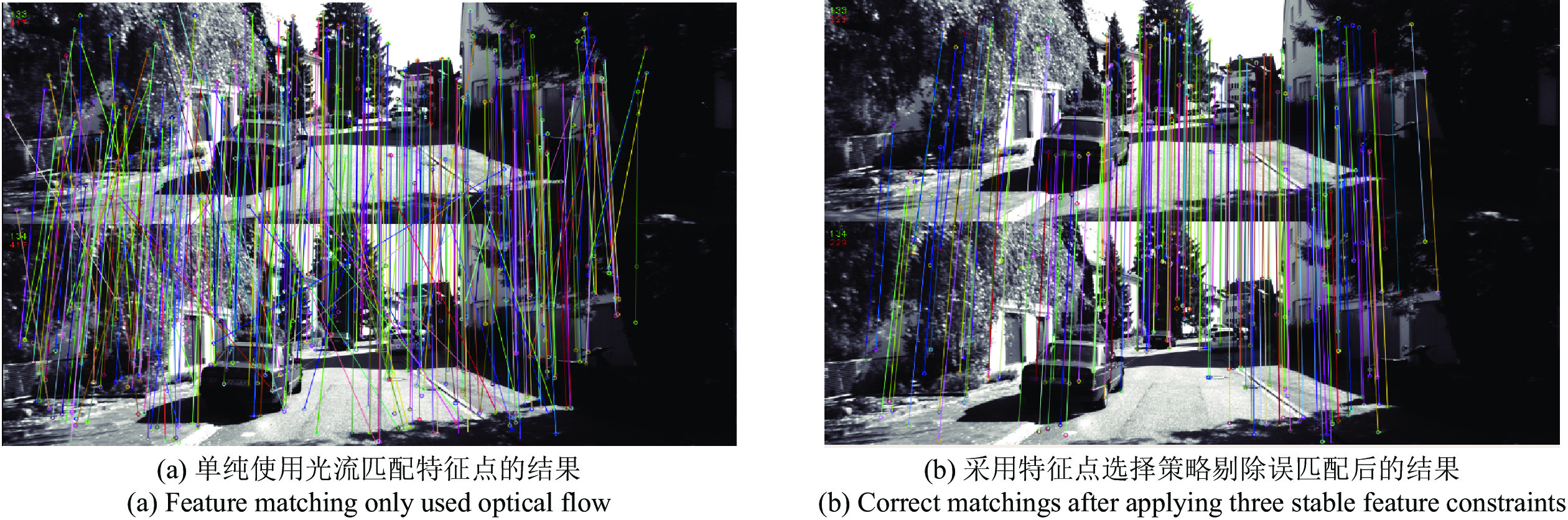

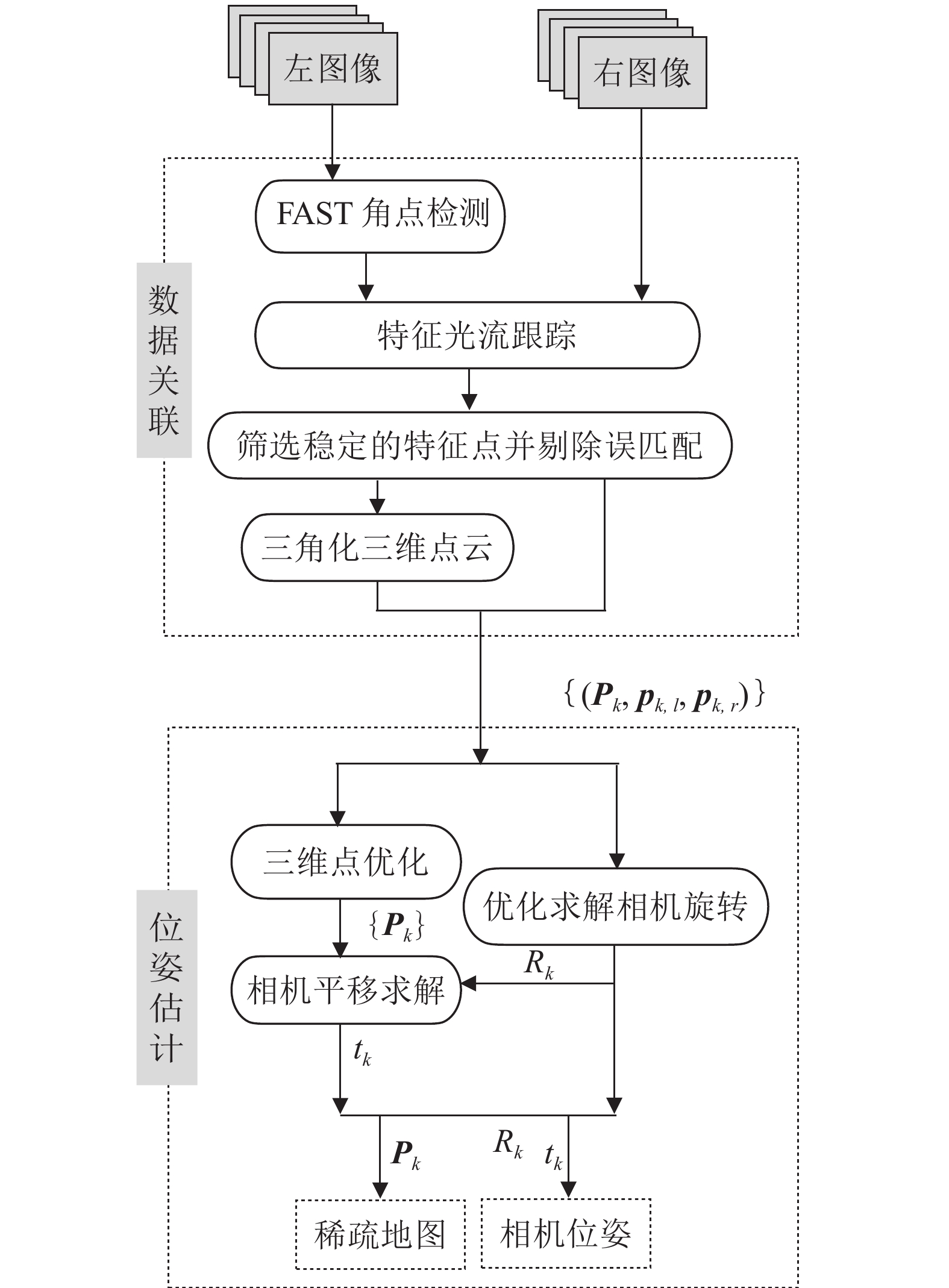

Abstract: To localize a camera in an unknown environment and reconstruct a map of its surroundings, this paper proposes a fast and accurate stereo visual odometry algorithm based on the Laplace distribution. When building data associations with optical flow, three strategies are combined to reject wrong associations and improve association accuracy: a smooth motion constraint, circular matching, and a disparity consistency check. Stable feature points are then selected from the surviving associations. The rotation and the translation of the camera are estimated separately, using only the selected stable features. The errors of the camera rotation, the 3D points, and the camera translation are all assumed to follow Laplace distributions, which are resistant to outliers and large errors, and the camera poses and 3D point positions are optimized under this assumption. Experiments on the KITTI and New Tsukuba datasets show that the proposed algorithm estimates the camera pose and the positions of the 3D points quickly and accurately.
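The outlier resistance attributed to the Laplace assumption can be illustrated on a toy problem. Maximizing a Gaussian likelihood minimizes a sum of squared residuals (L2), whereas maximizing a Laplace likelihood minimizes a sum of absolute residuals (L1). For a single scalar, the L2 solution is the mean and the L1 solution is the median. The sketch below is only an illustration of that property, not the optimization used in the paper; the measurement values and function names are hypothetical.

```python
import numpy as np

def gaussian_estimate(samples):
    """Location estimate under Gaussian noise: minimizes the sum of squared residuals (the mean)."""
    return float(np.mean(samples))

def laplace_estimate(samples):
    """Location estimate under Laplace noise: minimizes the sum of absolute residuals (the median)."""
    return float(np.median(samples))

# Hypothetical scalar measurements of a quantity whose true value is about 1.0.
# The last entry is a gross outlier, such as a wrong feature association might cause.
measurements = np.array([0.98, 1.01, 1.00, 0.99, 1.02, 25.0])

l2 = gaussian_estimate(measurements)
l1 = laplace_estimate(measurements)
print(f"L2 (Gaussian) estimate: {l2:.3f}")
print(f"L1 (Laplace) estimate:  {l1:.3f}")
```

A single bad measurement drags the L2 estimate far from the inliers, while the L1 estimate stays among them.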


Key words: Visual odometry (VO), motion estimation, optical flow, Laplace distribution


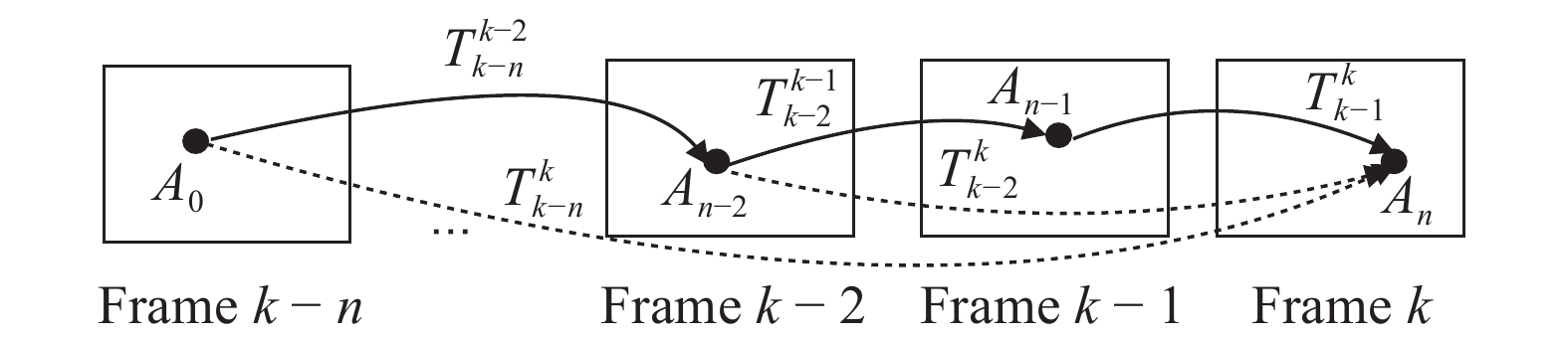

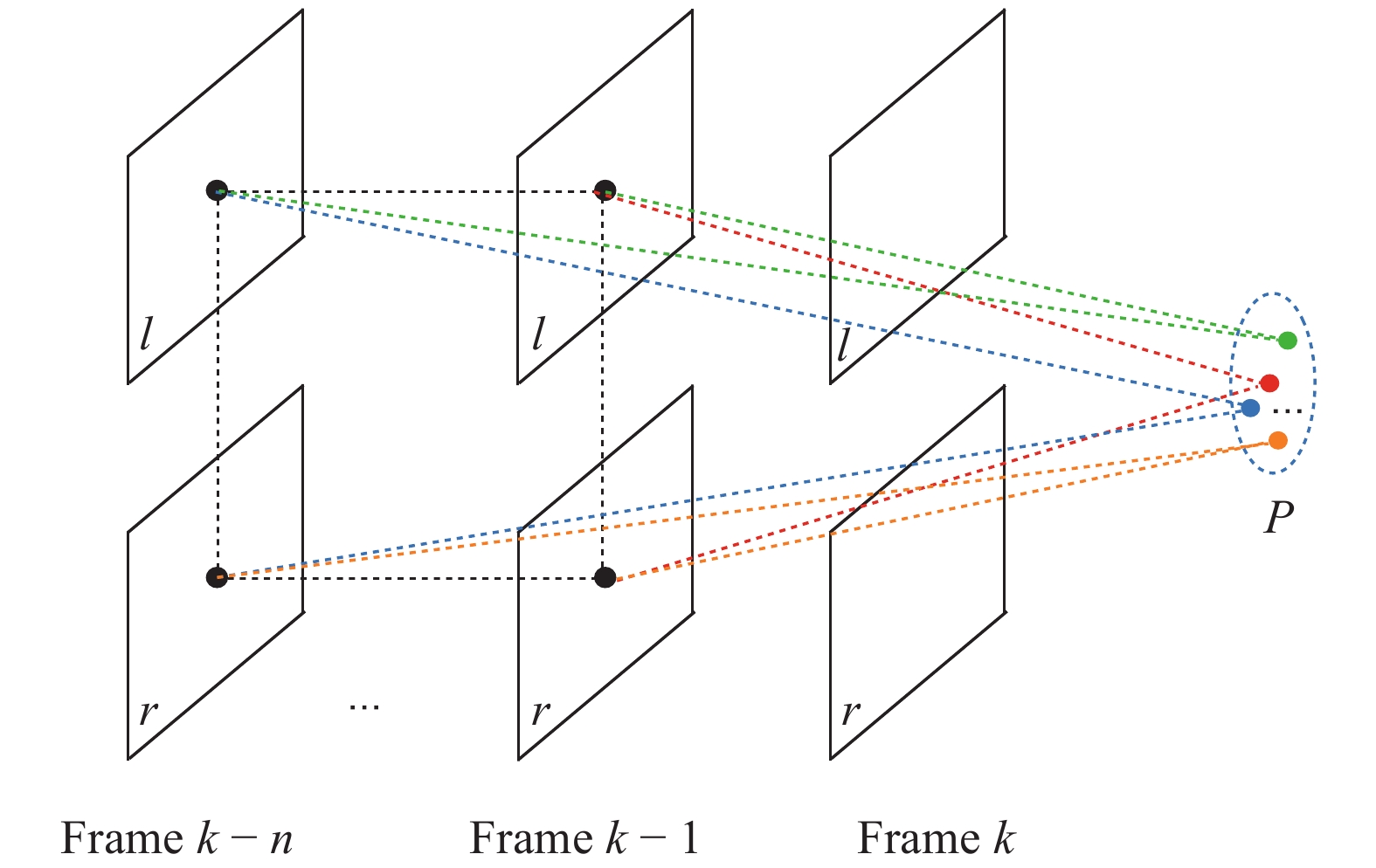

Fig. 5 Multiple triangulations of the same 3D space point (based on the $age$ value of a feature point, the same 3D space point is triangulated multiple times in the first $n$ frames, using the disparity of the stereo camera and the feature matches between consecutive frames of the left and right cameras)
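As a rough illustration of the idea in Fig. 5, the sketch below triangulates one point several times from stereo disparity and then fuses the results. It assumes a rectified stereo pair, for which depth follows from $Z = f b / d$, and it assumes every observation has already been expressed in a common reference frame. The per-axis median used for fusion, the camera parameters, and the observations are hypothetical choices for this example, not the paper's procedure.

```python
import numpy as np

def triangulate_from_disparity(u, v, disparity, f, cx, cy, baseline):
    """Back-project a left-image pixel (u, v) of a rectified stereo pair into 3D."""
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    z = f * baseline / disparity   # depth from disparity
    x = (u - cx) * z / f           # pinhole back-projection
    y = (v - cy) * z / f
    return np.array([x, y, z])

def fuse_triangulations(points):
    """Fuse repeated estimates of one 3D point with a per-axis median (an assumption)."""
    return np.median(np.asarray(points, dtype=float), axis=0)

# Hypothetical intrinsics: focal length and principal point in pixels, baseline in metres.
f, cx, cy, baseline = 700.0, 600.0, 180.0, 0.54

# Hypothetical observations (u, v, disparity) of the same point, one per frame.
observations = [(670.0, 215.0, 37.8), (670.0, 215.0, 36.0), (670.0, 215.0, 40.0)]

estimates = [triangulate_from_disparity(u, v, d, f, cx, cy, baseline)
             for (u, v, d) in observations]
fused = fuse_triangulations(estimates)
print(fused)
```

Because depth is inversely proportional to disparity, a small disparity error produces a noticeable depth error, which is one reason to combine several triangulations of the same point.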

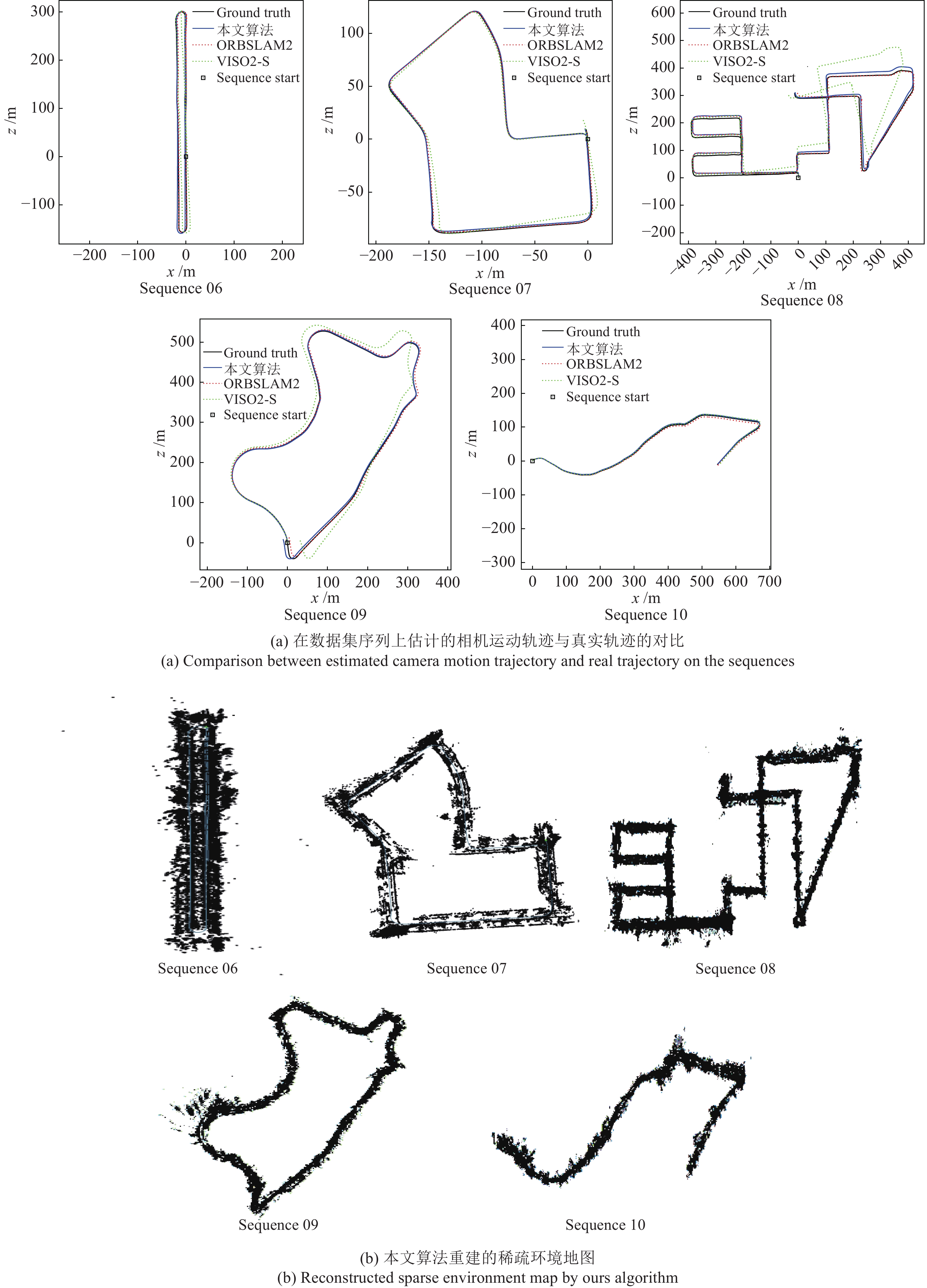

Table 1 Comparison of RMSE, Mean, and STD between the ground-truth trajectory and the trajectories estimated by the proposed algorithm (Ours), ORB-SLAM2, and VISO2-S (all values in m)

| Sequence | RMSE: Ours | RMSE: ORB-SLAM2 | RMSE: VISO2-S | Mean: Ours | Mean: ORB-SLAM2 | Mean: VISO2-S | STD: Ours | STD: ORB-SLAM2 | STD: VISO2-S |
|---|---|---|---|---|---|---|---|---|---|
| 00 | 5.248 | 7.410 | 32.119 | 4.696 | 6.733 | 27.761 | 2.343 | 3.095 | 16.153 |
| 01 | 33.938 | 38.426 | 132.138 | 28.257 | 29.988 | 105.667 | 18.797 | 24.027 | 79.341 |
| 02 | 11.365 | 13.081 | 34.759 | 10.332 | 11.300 | 31.594 | 4.733 | 6.589 | 14.491 |
| 03 | 1.031 | 1.662 | 1.841 | 0.909 | 1.486 | 1.672 | 0.486 | 0.745 | 0.771 |
| 04 | 0.495 | 0.529 | 0.975 | 0.426 | 0.487 | 0.861 | 0.207 | 0.253 | 0.457 |
| 05 | 4.207 | 1.569 | 12.437 | 3.061 | 1.421 | 10.561 | 2.885 | 0.664 | 6.567 |
| 06 | 2.839 | 2.059 | 7.758 | 2.538 | 1.759 | 6.941 | 1.272 | 1.072 | 4.245 |
| 07 | 3.655 | 1.903 | 12.277 | 3.079 | 1.813 | 9.399 | 1.971 | 1.393 | 7.898 |
| 08 | 13.001 | 13.112 | 20.645 | 12.555 | 12.853 | 18.786 | 2.594 | 3.376 | 8.562 |
| 09 | 4.668 | 6.081 | 19.491 | 3.561 | 5.212 | 15.326 | 3.018 | 3.312 | 12.041 |
| 10 | 2.817 | 4.811 | 11.789 | 2.628 | 4.958 | 8.074 | 1.013 | 2.594 | 8.589 |
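For readers unfamiliar with these three statistics, the sketch below shows one common way to compute them from per-frame position errors. It assumes the estimated and ground-truth trajectories are already time-associated and expressed in the same coordinate frame, and it uses the population standard deviation; the exact evaluation protocol behind Table 1 is not stated here, and the function name and sample trajectories are hypothetical.

```python
import numpy as np

def trajectory_error_stats(estimated, ground_truth):
    """Return RMSE, mean, and standard deviation of per-frame position errors."""
    est = np.asarray(estimated, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    if est.shape != gt.shape:
        raise ValueError("trajectories must have the same shape")
    # Euclidean distance between estimated and true position at each frame.
    errors = np.linalg.norm(est - gt, axis=1)
    return {
        "rmse": float(np.sqrt(np.mean(errors ** 2))),
        "mean": float(np.mean(errors)),
        "std": float(np.std(errors)),  # population standard deviation
    }

# Hypothetical four-frame trajectories (x, y, z) in metres.
ground_truth = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0], [3.0, 0.0, 0.0]]
estimated = [[0.0, 0.0, 0.0], [1.0, 0.3, 0.4], [2.0, 0.6, 0.8], [3.0, 0.0, 0.5]]

stats = trajectory_error_stats(estimated, ground_truth)
print(stats)
```

With these definitions the three quantities are linked by $\mathrm{RMSE}^2 = \mathrm{Mean}^2 + \mathrm{STD}^2$, so RMSE penalizes occasional large errors more heavily than the mean does.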

