
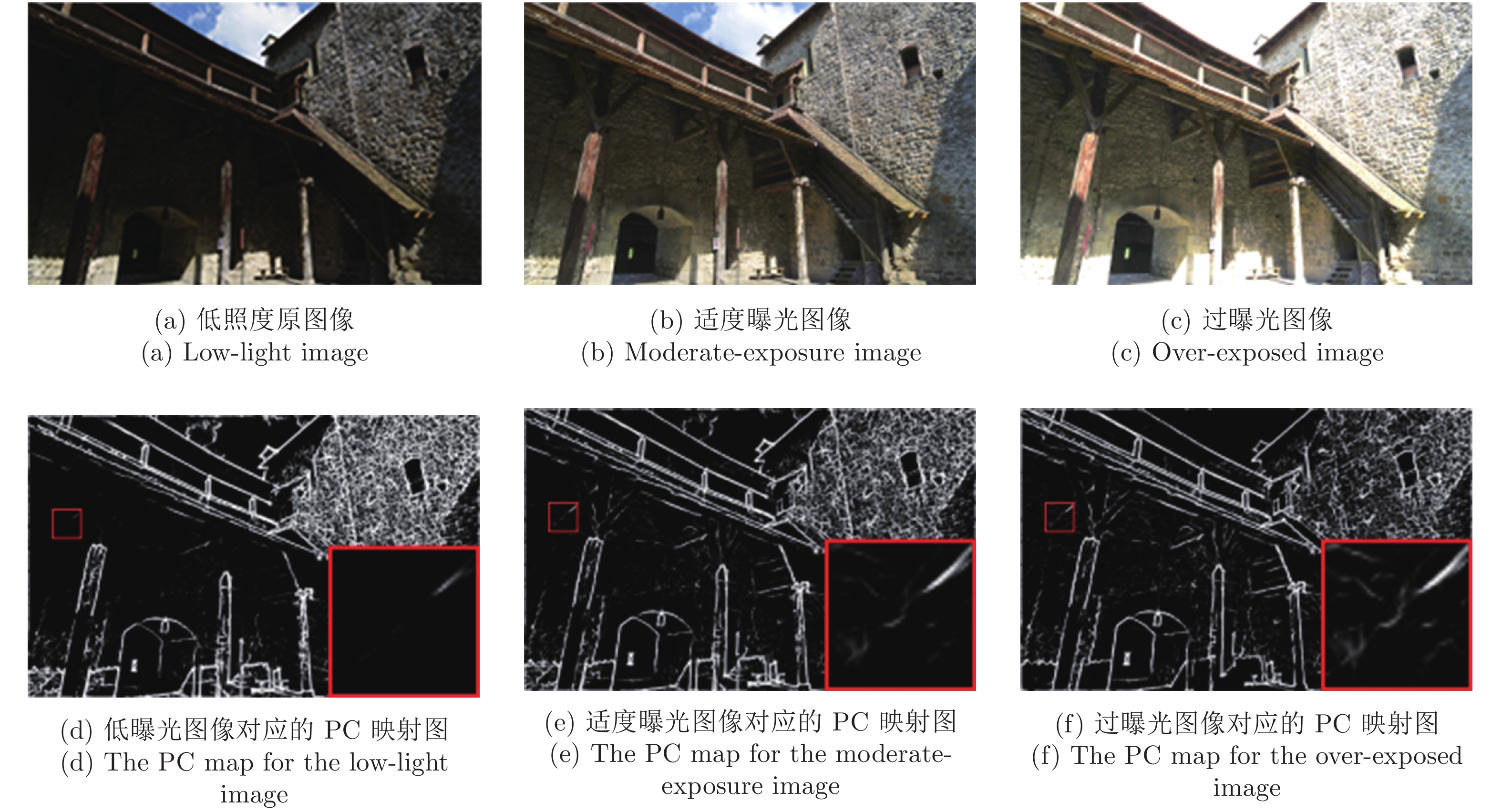

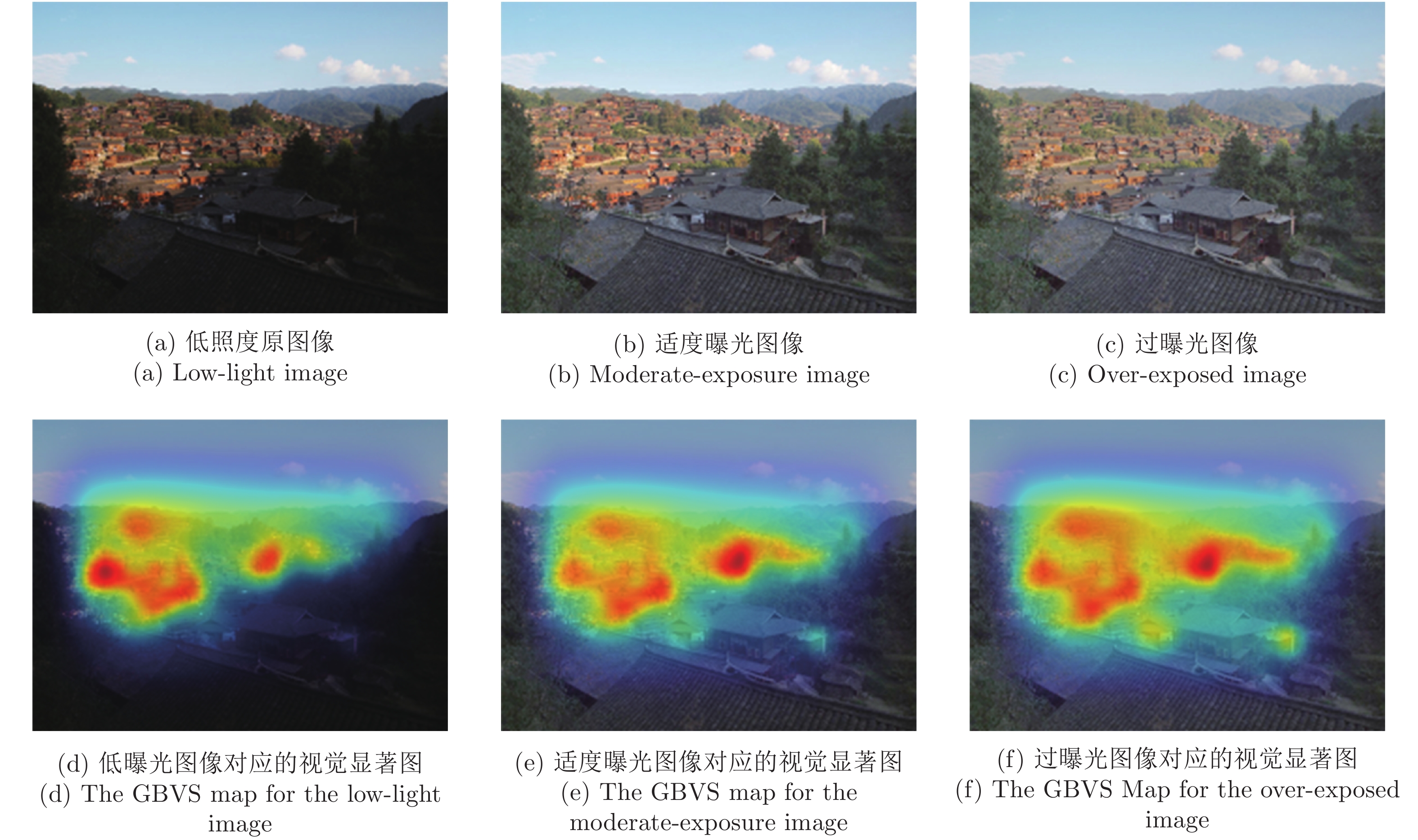

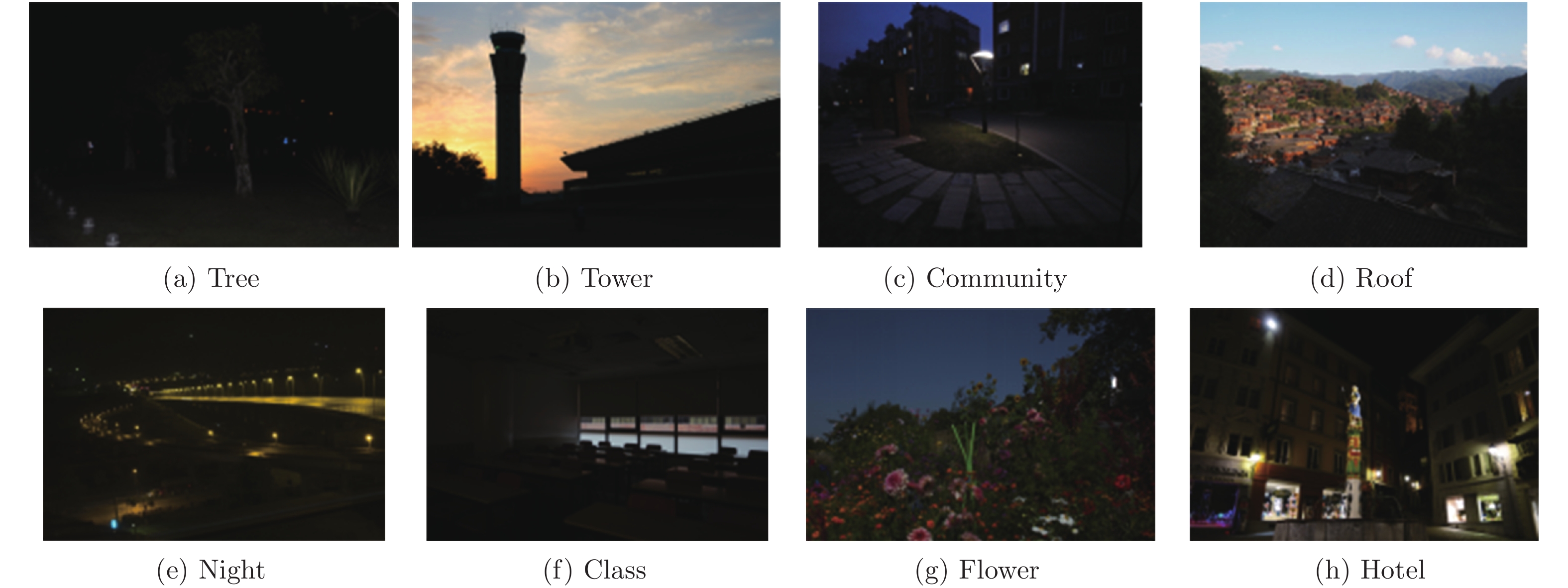

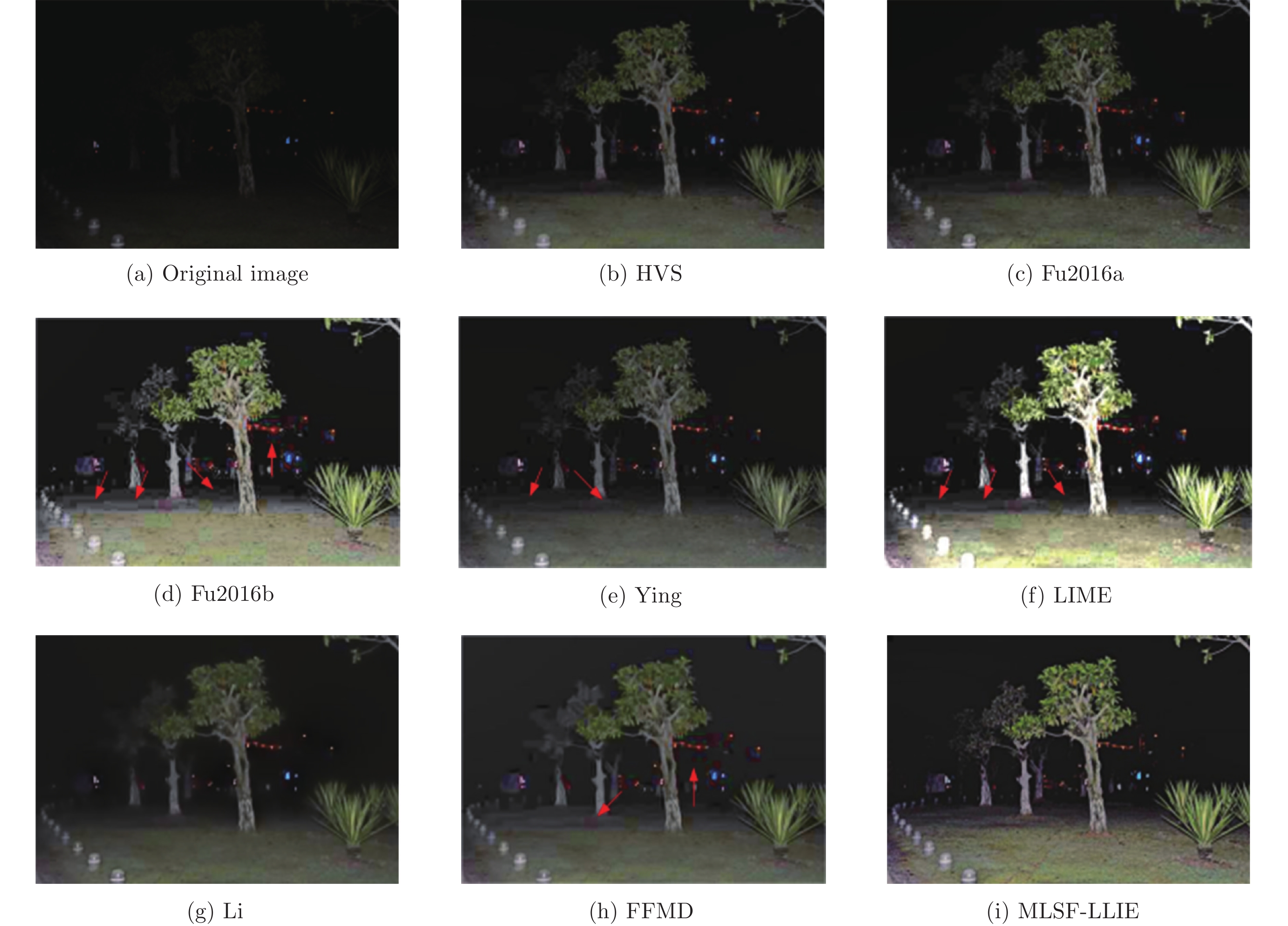

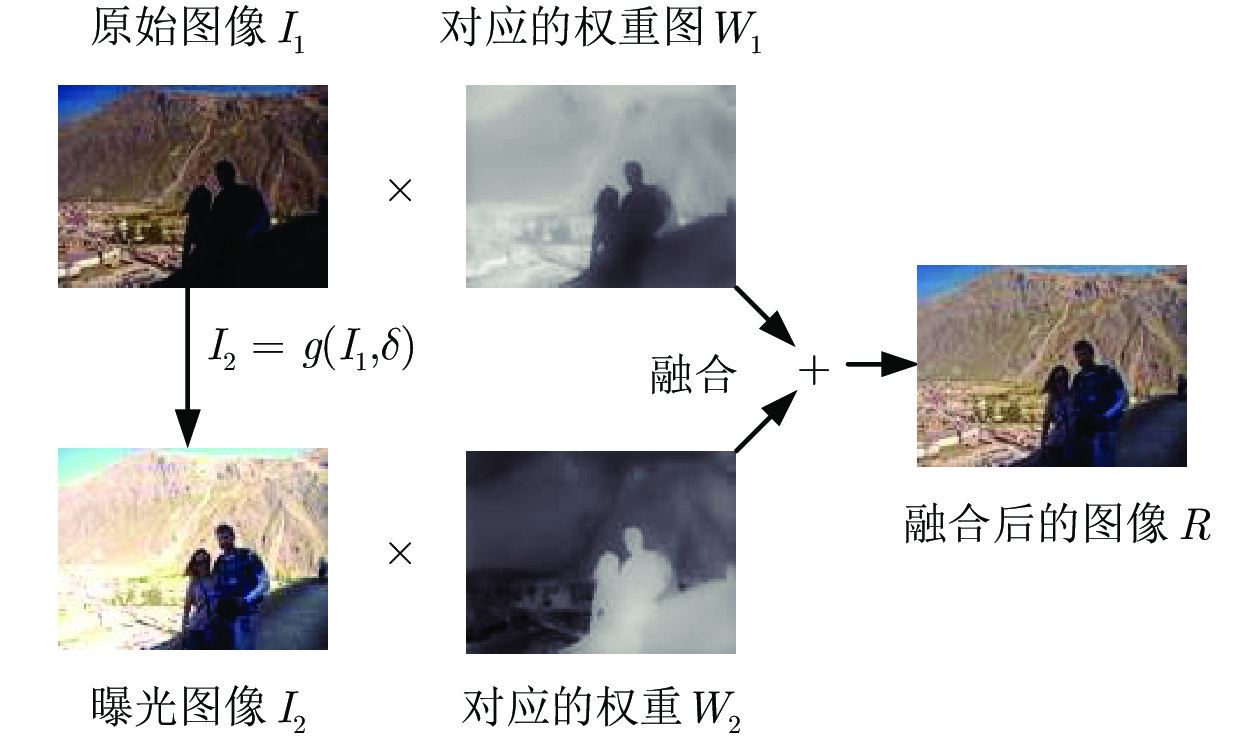

Abstract: To optimally fuse the complementary information contained in a low-light image and in several differently exposed images generated from it, and thereby obtain a more robust visual enhancement, a two-stage low-light image enhancement (LLIE) algorithm based on multi-image local structural fusion is proposed. In the image preparation stage, an optimal exposure prediction model based on image quality assessment is first built. Using the optimal exposure ratio that this model predicts for a given low-light image, a well-exposed image and an over-exposed image (generated with an exposure ratio higher than the optimal one) are produced under a pseudo-exposure model. In parallel, the classical Retinex model is used to generate a second well-exposed image that supplies additional complementary information to the fusion. In the fusion stage, patches extracted at the same spatial position from the low-light image, the two well-exposed images, and the over-exposed image are vectorized and decomposed into three components: contrast, structure, and brightness. The contrast of the fused patch is set to the highest contrast among all source patches. The structure and brightness components are fused by weighted combination, using the phase congruency map and the visual saliency map as the respective weights. The three separately fused components are then recombined into a patch, which is placed back at the corresponding position in the fused image. Finally, as post-processing, a denoising algorithm is adaptively invoked to suppress the noise amplified by the enhancement, with its input parameter estimated by a noise level estimation algorithm. Experimental results show that the proposed LLIE algorithm outperforms most existing mainstream algorithms in both subjective and objective image quality assessment.
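
The abstract does not spell out the pseudo-exposure model. The sketch below is a minimal illustration that assumes the Beta-Gamma camera response model used by Ying et al. [18] as a stand-in, with that model's fitted parameters a = -0.3293 and b = 1.1258. Under this assumption, an image with exposure ratio k is synthesized from normalized intensities P as g(P, k) = exp(b(1 - k^a)) · P^(k^a). The exposure ratios used below are hypothetical placeholders for the values that the paper's IQA-based prediction model would output.

```python
import math

# ASSUMPTION: Beta-Gamma camera response model (Ying et al.) used as a
# stand-in for the paper's pseudo-exposure model; A and B are the fitted
# camera parameters reported for that model.
A, B = -0.3293, 1.1258


def pseudo_exposure(pixels, k):
    """Synthesize exposure ratio k from normalized intensities in [0, 1].

    g(P, k) = beta * P**gamma, with gamma = k**A and beta = exp(B * (1 - gamma)).
    k = 1 leaves the image unchanged; k > 1 brightens it.
    """
    gamma = k ** A
    beta = math.exp(B * (1.0 - gamma))
    # Clip at 1.0 so over-exposed pixels saturate instead of exceeding the range.
    return [min(1.0, beta * p ** gamma) for p in pixels]


low = [0.02, 0.05, 0.10, 0.20]   # a few normalized low-light intensities
k_opt = 5.0                      # HYPOTHETICAL optimal ratio from the IQA-based predictor
k_over = 2.0 * k_opt             # HYPOTHETICAL: any ratio above k_opt gives over-exposure
well_exposed = pseudo_exposure(low, k_opt)
over_exposed = pseudo_exposure(low, k_over)
```

With k = 1 the transform is the identity. In this example, the larger ratio brightens every pixel further, which is how a ratio above the predicted optimum yields the over-exposed fusion input.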
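
The patch-level fusion described in the abstract can be illustrated with a minimal Python sketch. It assumes a structural patch decomposition of the kind used in multi-exposure fusion work by Ma et al. A vectorized patch x is written as x = c·s + l, where:

- the brightness l is the patch mean;
- the contrast c = ||x - l|| is the norm of the mean-removed patch;
- the structure s = (x - l)/c is a unit vector.

The fused patch takes the largest c, a renormalized weighted sum of the s vectors, and a weighted average of the l values. The function names are illustrative. Each source patch is assumed to carry one scalar phase-congruency weight and one scalar saliency weight, for instance the corresponding map averaged over the patch. Computing those maps, sliding the window over the image, and blending overlapping patches are not shown.

```python
import math


def decompose(patch):
    """Split a vectorized patch into (contrast, structure, brightness)."""
    mean = sum(patch) / len(patch)
    residual = [v - mean for v in patch]
    contrast = math.sqrt(sum(r * r for r in residual))
    # A flat patch has no structure; use a zero vector instead of dividing by 0.
    structure = [r / contrast for r in residual] if contrast > 0 else [0.0] * len(patch)
    return contrast, structure, mean


def fuse_patches(patches, pc_weights, sal_weights):
    """Fuse co-located patches taken from the source images.

    pc_weights:  per-patch phase-congruency weights (weight the structure).
    sal_weights: per-patch visual-saliency weights (weight the brightness);
                 assumed non-negative with a non-zero sum.
    """
    parts = [decompose(p) for p in patches]
    # Contrast: keep the highest contrast among the sources.
    c_hat = max(c for c, _, _ in parts)
    # Structure: weighted sum of unit structures, renormalized to unit length.
    n = len(patches[0])
    s_sum = [sum(w * s[i] for w, (_, s, _) in zip(pc_weights, parts)) for i in range(n)]
    norm = math.sqrt(sum(v * v for v in s_sum))
    s_hat = [v / norm for v in s_sum] if norm > 0 else [0.0] * n
    # Brightness: saliency-weighted average of the patch means.
    l_hat = sum(w * l for w, (_, _, l) in zip(sal_weights, parts)) / sum(sal_weights)
    # Recombine the three components into the fused patch.
    return [c_hat * s + l_hat for s in s_hat]


# Hypothetical flattened 2x2 patches from the low-light image, the two
# well-exposed images and the over-exposed image, with made-up weights.
patches = [[0.02, 0.03, 0.05, 0.04], [0.30, 0.35, 0.45, 0.40],
           [0.28, 0.36, 0.50, 0.41], [0.80, 0.85, 0.95, 0.90]]
fused = fuse_patches(patches, pc_weights=[0.1, 0.4, 0.4, 0.1],
                     sal_weights=[0.1, 0.4, 0.3, 0.2])
```

Taking the maximum contrast keeps the strongest local detail available among the sources. Renormalizing the weighted structure keeps s unit-length, so the chosen contrast is reproduced exactly in the fused patch.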
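
The adaptive post-processing step can also be sketched. The paper relies on a dedicated noise level estimator [33] and a denoising algorithm whose details are not given in this excerpt. The code below substitutes two stand-ins:

- Immerkær's fast Laplacian-based estimator, used to estimate the noise standard deviation;
- a 3 × 3 mean filter, used in place of a real denoiser such as BM3D [15].

The threshold of 2.0 (on a 0-255 intensity scale) and all function names are hypothetical.

```python
import math
import random

# Immerkaer's mask: a difference of two Laplacian masks. It cancels locally
# linear image content, so its response is dominated by noise.
MASK = [[1, -2, 1], [-2, 4, -2], [1, -2, 1]]


def estimate_sigma(img):
    """Estimate the std of additive white Gaussian noise (Immerkaer, 1996)."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            total += abs(sum(MASK[j][i] * img[y + j - 1][x + i - 1]
                             for j in range(3) for i in range(3)))
    return math.sqrt(math.pi / 2.0) * total / (6.0 * (w - 2) * (h - 2))


def mean_filter(img):
    """3x3 mean filter on interior pixels: a crude stand-in for a real denoiser."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(img[y + j][x + i]
                            for j in (-1, 0, 1) for i in (-1, 0, 1)) / 9.0
    return out


def adaptive_denoise(img, threshold=2.0):
    """Denoise only if the estimated noise level exceeds a HYPOTHETICAL threshold."""
    sigma = estimate_sigma(img)
    return (mean_filter(img), sigma) if sigma > threshold else (img, sigma)


# Usage: a smooth ramp (no noise) versus the same ramp plus Gaussian noise (sigma = 10).
random.seed(0)
ramp = [[3.0 * x + 2.0 * y for x in range(64)] for y in range(64)]
noisy = [[v + random.gauss(0.0, 10.0) for v in row] for row in ramp]
clean_out, clean_sigma = adaptive_denoise(ramp)
noisy_out, noisy_sigma = adaptive_denoise(noisy)
```

Only the noisy ramp exceeds the threshold and is filtered. The noise-free ramp is returned unchanged, which is the reason for gating the denoiser on an estimate rather than always applying it.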


Table 1 No-reference metric values of the competing algorithms on the Tree image

| Algorithm | HVS | Fu2016a | Fu2016b | Ying | LIME | Li | FFMD | MLSF-LLIE |
|---|---|---|---|---|---|---|---|---|
| BOIEM | 1.96 | 2.07 | 2.18 | 1.97 | 2.11 | 2.26 | 2.25 | 2.37 |
| BIQME | 0.28 | 0.30 | 0.37 | 0.35 | 0.48 | 0.37 | 0.43 | 0.39 |
| NIQMC | 3.31 | 3.66 | 4.18 | 3.27 | 4.69 | 3.54 | 3.40 | 4.17 |
| IL-NIQE | 57.66 | 60.04 | 57.39 | 58.69 | 58.08 | 49.92 | 65.06 | 31.79 |

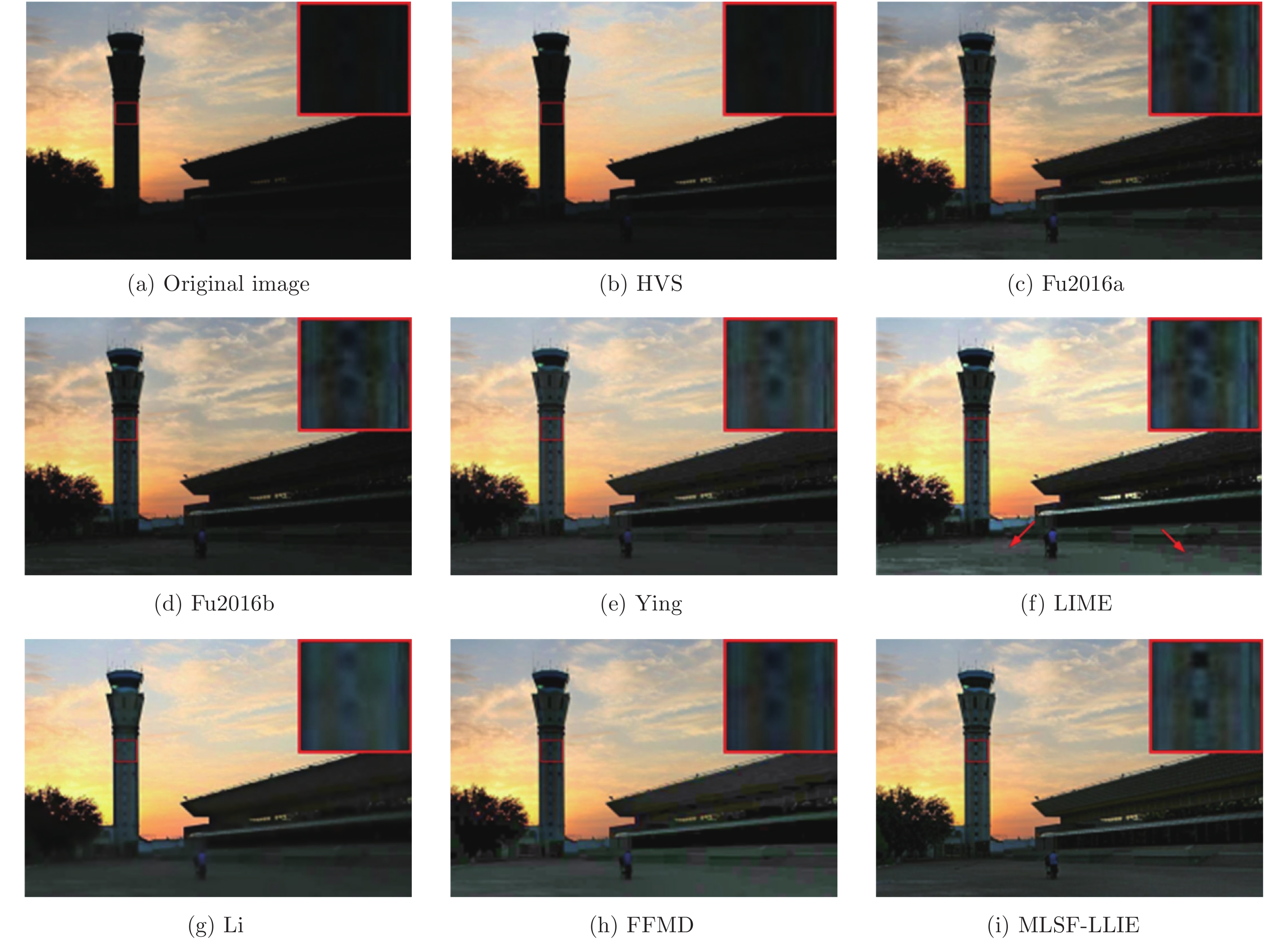

Table 2 No-reference metric values of the competing algorithms on the Tower image

| Algorithm | HVS | Fu2016a | Fu2016b | Ying | LIME | Li | FFMD | MLSF-LLIE |
|---|---|---|---|---|---|---|---|---|
| BOIEM | 2.30 | 3.42 | 3.04 | 2.90 | 3.18 | 2.77 | 3.42 | 3.15 |
| BIQME | 0.40 | 0.54 | 0.48 | 0.53 | 0.54 | 0.53 | 0.58 | 0.56 |
| NIQMC | 3.48 | 4.97 | 4.58 | 4.63 | 4.63 | 4.22 | 4.92 | 4.70 |
| IL-NIQE | 42.82 | 32.34 | 37.05 | 34.68 | 35.04 | 38.66 | 36.75 | 30.10 |

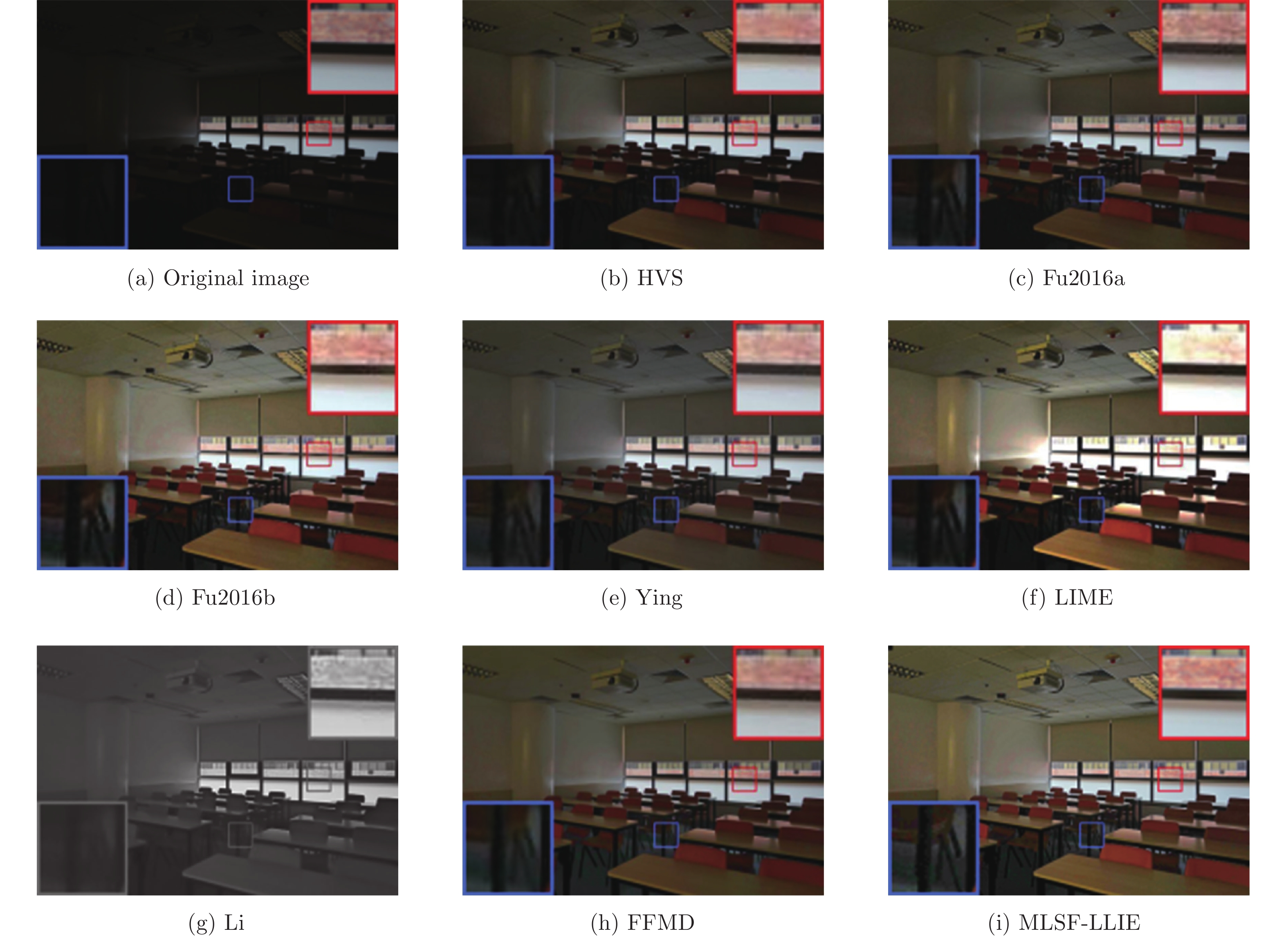

Table 3 No-reference and full-reference metric values of the competing algorithms on the Class image

| Algorithm | HVS | Fu2016a | Fu2016b | Ying | LIME | Li | FFMD | MLSF-LLIE |
|---|---|---|---|---|---|---|---|---|
| BOIEM | 2.67 | 2.68 | 3.42 | 2.64 | 3.38 | 2.61 | 2.63 | 3.17 |
| BIQME | 0.48 | 0.50 | 0.57 | 0.53 | 0.60 | 0.55 | 0.53 | 0.56 |
| NIQMC | 4.57 | 4.57 | 5.28 | 4.67 | 5.00 | 4.67 | 4.33 | 4.95 |
| IL-NIQE | 25.89 | 22.22 | 20.90 | 24.26 | 22.99 | 38.22 | 24.47 | 20.97 |
| IW-PSNR | 16.68 | 17.36 | 21.36 | 18.66 | 15.24 | 16.46 | 17.93 | 22.18 |
| IW-SSIM | 0.85 | 0.87 | 0.94 | 0.91 | 0.87 | 0.83 | 0.88 | 0.95 |

Table 4 Average no-reference metric values of the competing algorithms on 90 low-light images without reference images

| Algorithm | HVS | Fu2016a | Fu2016b | Ying | LIME | Li | FFMD | MLSF-LLIE |
|---|---|---|---|---|---|---|---|---|
| BOIEM | 3.07 | 3.17 | 3.27 | 3.13 | 3.38 | 3.12 | 3.03 | 3.21 |
| BIQME | 0.54 | 0.56 | 0.57 | 0.57 | 0.61 | 0.58 | 0.58 | 0.58 |
| NIQMC | 4.73 | 4.88 | 5.02 | 4.81 | 5.33 | 4.80 | 4.64 | 4.89 |
| IL-NIQE | 28.69 | 27.18 | 27.15 | 27.61 | 28.21 | 30.94 | 28.62 | 25.28 |

Table 5 Average no-reference and full-reference metric values of the competing algorithms on 90 low-light images with reference images

| Algorithm | HVS | Fu2016a | Fu2016b | Ying | LIME | Li | FFMD | MLSF-LLIE |
|---|---|---|---|---|---|---|---|---|
| BOIEM | 3.38 | 3.41 | 3.43 | 3.34 | 3.43 | 3.38 | 3.28 | 3.40 |
| BIQME | 0.58 | 0.60 | 0.58 | 0.58 | 0.61 | 0.58 | 0.59 | 0.59 |
| NIQMC | 5.11 | 5.15 | 5.10 | 4.97 | 5.34 | 5.06 | 4.84 | 5.02 |
| IL-NIQE | 23.14 | 22.74 | 22.42 | 22.29 | 24.69 | 25.54 | 22.62 | 22.19 |
| IW-PSNR | 20.35 | 19.81 | 22.10 | 22.15 | 15.31 | 22.04 | 21.88 | 22.54 |
| IW-SSIM | 0.92 | 0.91 | 0.94 | 0.94 | 0.84 | 0.93 | 0.94 | 0.95 |

Table 6 Average execution time (s) of the competing algorithms on 90 low-light images with reference images

| Algorithm | HVS | Fu2016a | Fu2016b | Ying | LIME | Li | FFMD | MLSF-LLIE |
|---|---|---|---|---|---|---|---|---|
| Time (s) | 0.56 | 0.29 | 1.93 | 0.21 | 0.10 | 11.23 | 4.13 | 4.21 |

[1] Xiao Jin-Sheng, Shan Shan-Shan, Duan Peng-Fei, Tu Chao-Ping, Yi Ben-Shun. A fast image enhancement algorithm based on fusion of different color spaces. Acta Automatica Sinica, 2014, 40(4): 697-705 (in Chinese)

[2] Xiao Jin-Sheng, Pang Guan-Lin, Tang Lu-Min, Qian Chao, Zou Bai-Yu. Image texture enhancement super-sampling algorithm based on contour template and self-learning. Acta Automatica Sinica, 2016, 42(8): 1248-1258 (in Chinese)

[3] Ju Ming, Li Cheng, Gao Shan, Mu Ju-Guo, Bi Du-Yan. Non-uniform-lighting image enhancement based on centripetal-autowave intersecting cortical model. Acta Automatica Sinica, 2011, 37(7): 800-810 (in Chinese)

[4] Xu H T, Zhai G T, Wu X L, Yang X K. Generalized equalization model for image enhancement. IEEE Transactions on Multimedia, 2014, 16(1): 68-82 doi: 10.1109/TMM.2013.2283453

[5] Celik T. Spatial entropy-based global and local image contrast enhancement. IEEE Transactions on Image Processing, 2014, 23(12): 5298-5308 doi: 10.1109/TIP.2014.2364537

[6] Wang C, Ye Z F. Brightness preserving histogram equalization with maximum entropy: a variational perspective. IEEE Transactions on Consumer Electronics, 2005, 51(4): 1326-1334 doi: 10.1109/TCE.2005.1561863

[7] Ibrahim H, Kong N S P. Brightness preserving dynamic histogram equalization for image contrast enhancement. IEEE Transactions on Consumer Electronics, 2007, 53(4): 1752-1758 doi: 10.1109/TCE.2007.4429280

[8] Huang L D, Zhao W, Abidi B R, Abidi M A. A constrained optimization approach for image gradient enhancement. IEEE Transactions on Circuits and Systems for Video Technology, 2018, 28(8): 1707-1718 doi: 10.1109/TCSVT.2017.2696971

[9] Li L, Wang R G, Wang W M, Gao W. A low-light image enhancement method for both denoising and contrast enlarging. In: Proceedings of the 2015 IEEE International Conference on Image Processing. Quebec City, Canada: IEEE, 2015. 3730-3734

[10] Zhang X D, Shen P Y, Luo L L, Zhang L, Song J. Enhancement and noise reduction of very low light level images. In: Proceedings of the 2012 International Conference on Pattern Recognition. Tsukuba, Japan: IEEE, 2012. 2034-2037

[11] Li M D, Liu J Y, Yang W H, Sun X Y, Guo Z M. Structure-revealing low-light image enhancement via robust Retinex model. IEEE Transactions on Image Processing, 2018, 27(6): 2828-2841 doi: 10.1109/TIP.2018.2810539

[12] Guo X J, Li Y, Ling H B. LIME: low-light image enhancement via illumination map estimation. IEEE Transactions on Image Processing, 2017, 26(2): 982-993 doi: 10.1109/TIP.2016.2639450

[13] Park S, Yu S, Moon B, Ko S, Paik J. Low-light image enhancement using variational optimization-based Retinex model. IEEE Transactions on Consumer Electronics, 2017, 63(2): 178-184 doi: 10.1109/TCE.2017.014847

[14] Zhang Jie, Zhou Pu-Cheng, Xue Mo-Gen. Low-light image enhancement based on directional total variation Retinex. Journal of Computer-Aided Design & Computer Graphics, 2018, 30(10): 1943-1953 (in Chinese)

[15] Dabov K, Foi A, Katkovnik V, Egiazarian K. Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Transactions on Image Processing, 2007, 16(8): 2080-2095 doi: 10.1109/TIP.2007.901238

[16] Lore K G, Akintayo A, Sarkar S. LLNet: a deep autoencoder approach to natural low-light image enhancement. Pattern Recognition, 2017, 61: 650-662

[17] Park S, Yu S, Kim M, Park K, Paik J. Dual autoencoder network for Retinex-based low-light image enhancement. IEEE Access, 2018, 6: 22084-22093

[18] Ying Z Q, Li G, Gao W. A bio-inspired multi-exposure fusion framework for low-light image enhancement [Online], available: https://arxiv.org/pdf/1711.00591.pdf, April 24, 2019

[19] Fu X Y, Zeng D L, Huang Y, Liao Y H, Ding X H, Paisley J. A fusion-based enhancing method for weakly illuminated images. Signal Processing, 2016, 129: 82-96

[20] Fu X Y, Zeng D L, Huang Y, Zhang X P, Ding X H. A weighted variational model for simultaneous reflectance and illumination estimation. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA: IEEE, 2016. 2782-2790

[21] Zhang Y, Liu S G. Non-uniform illumination video enhancement based on zone system and fusion. In: Proceedings of the 24th International Conference on Pattern Recognition. Beijing, China: IEEE, 2018. 2711-2716

[22] Liu S G, Zhang Y. Detail-preserving underexposed image enhancement via optimal weighted multi-exposure fusion. IEEE Transactions on Consumer Electronics, 2019, 65(3): 303-311

[23] Zhang Q, Nie Y W, Zhang L, Xiao C X. Underexposed video enhancement via perception-driven progressive fusion. IEEE Transactions on Visualization and Computer Graphics, 2015, 22(6): 1773-1785

[24] Zhang Yu, Liu Shi-Guang. Non-uniform illumination video enhancement based on zone system and fusion. Journal of Computer-Aided Design & Computer Graphics, 2017, 29(12): 2317-2322 (in Chinese)

[25] Ma K, Duanmu Z F, Yeganeh H, Wang Z. Multi-exposure image fusion by optimizing a structural similarity index. IEEE Transactions on Computational Imaging, 2018, 4(1): 60-72 doi: 10.1109/TCI.2017.2786138

[26] Borji A, Cheng M M, Jiang H Z, Li J. Salient object detection: a benchmark. IEEE Transactions on Image Processing, 2015, 24(12): 5706-5722 doi: 10.1109/TIP.2015.2487833

[27] Groen I I A, Ghebreab S, Prins H, Lamme V A F, Scholte H S. From image statistics to scene gist: evoked neural activity reveals transition from low-level natural image structure to scene category. The Journal of Neuroscience, 2013, 33(48): 18814-18824

[28] Hinton G, Deng L, Yu D, Dahl G E, Mohamed A R, Jaitly N, et al. Deep neural networks for acoustic modeling in speech recognition: the shared views of four research groups. IEEE Signal Processing Magazine, 2012, 29(6): 82-97 doi: 10.1109/MSP.2012.2205597

[29] Morrone M C, Owens R A. Feature detection from local energy. Pattern Recognition Letters, 1987, 6(5): 303-313 doi: 10.1016/0167-8655(87)90013-4

[30] Field D J. Relations between the statistics of natural images and the response properties of cortical cells. Journal of the Optical Society of America A, Optics and Image Science, 1987, 4(12): 2379-2394

[31] Zhang J, Zhao D B, Gao W. Group-based sparse representation for image restoration. IEEE Transactions on Image Processing, 2014, 23(8): 3336-3351

[32] Dai Q, Pu Y F, Rahman Z, Aamir M. Fractional-order fusion model for low-light image enhancement. Symmetry, 2019, 11(4): 574 doi: 10.3390/sym11040574

[33] Xu S P, Liu T Y, Zhang G Z, Tang Y L. A two-stage noise level estimation using automatic feature extraction and mapping model. IEEE Signal Processing Letters, 2019, 26(1): 179-183 doi: 10.1109/LSP.2018.2881843

[34] Zeng K, Ma K D, Hassen R, Wang Z. Perceptual evaluation of multi-exposure image fusion algorithms. In: Proceedings of the 2014 International Workshop on Quality of Multimedia Experience. Singapore, Singapore: IEEE, 2014. 7-12

[35] Wang S H, Zheng J, Hu H M, Li B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Transactions on Image Processing, 2013, 22(9): 3538-3548 doi: 10.1109/TIP.2013.2261309

[36] NASA. Retinex image processing [Online], available: http://dragon.larc.nasa.gov/retinex/pao/news, July 20, 2019

[37] Cai J R, Gu S H, Zhang L. Learning a deep single image contrast enhancer from multi-exposure images. IEEE Transactions on Image Processing, 2018, 27(4): 2049-2062 doi: 10.1109/TIP.2018.2794218

[38] Fu X Y, Liao Y H, Zeng D L, Huang Y, Zhang X P, Ding X H. A probabilistic method for image enhancement with simultaneous illumination and reflectance estimation. IEEE Transactions on Image Processing, 2015, 24(12): 4965-4977 doi: 10.1109/TIP.2015.2474701

[39] Gu K, Lin W S, Zhai G T, Yang X K, Zhang W J, Chen C W. No-reference quality metric of contrast-distorted images based on information maximization. IEEE Transactions on Cybernetics, 2017, 47(12): 4559-4565 doi: 10.1109/TCYB.2016.2575544

[40] Gu K, Tao D C, Qiao J F, Lin W S. Learning a no-reference quality assessment model of enhanced images with big data. IEEE Transactions on Neural Networks and Learning Systems, 2018, 29(4): 1-13 doi: 10.1109/TNNLS.2018.2814520

[41] Zhang L, Zhang L, Bovik A C. A feature-enriched completely blind image quality evaluator. IEEE Transactions on Image Processing, 2015, 24(8): 2579-2591 doi: 10.1109/TIP.2015.2426416

[42] Wang Z, Li Q. Information content weighting for perceptual image quality assessment. IEEE Transactions on Image Processing, 2011, 20(5): 1185-1198 doi: 10.1109/TIP.2010.2092435
