A Review of Single Image Super-resolution Based on Deep Learning

-

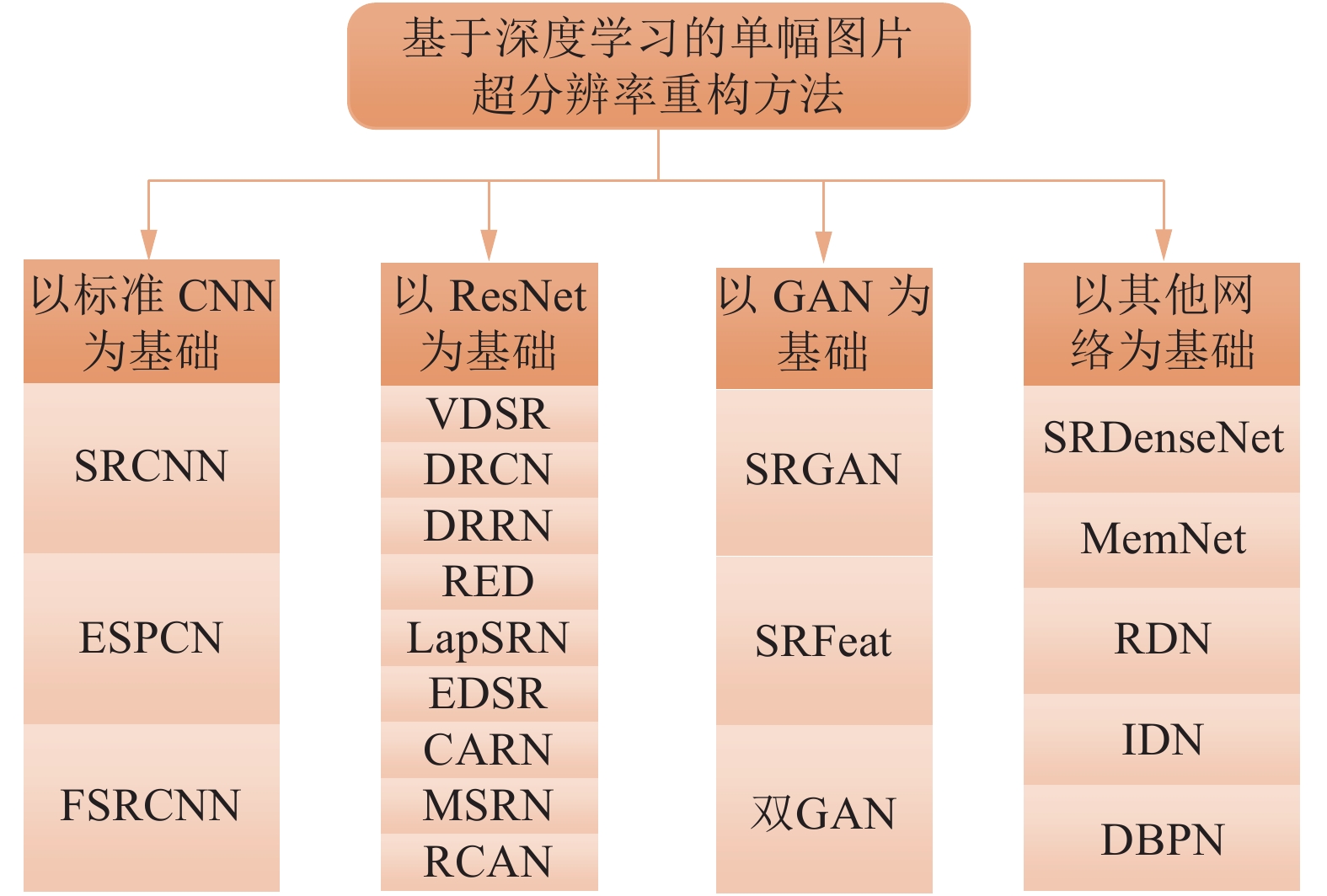

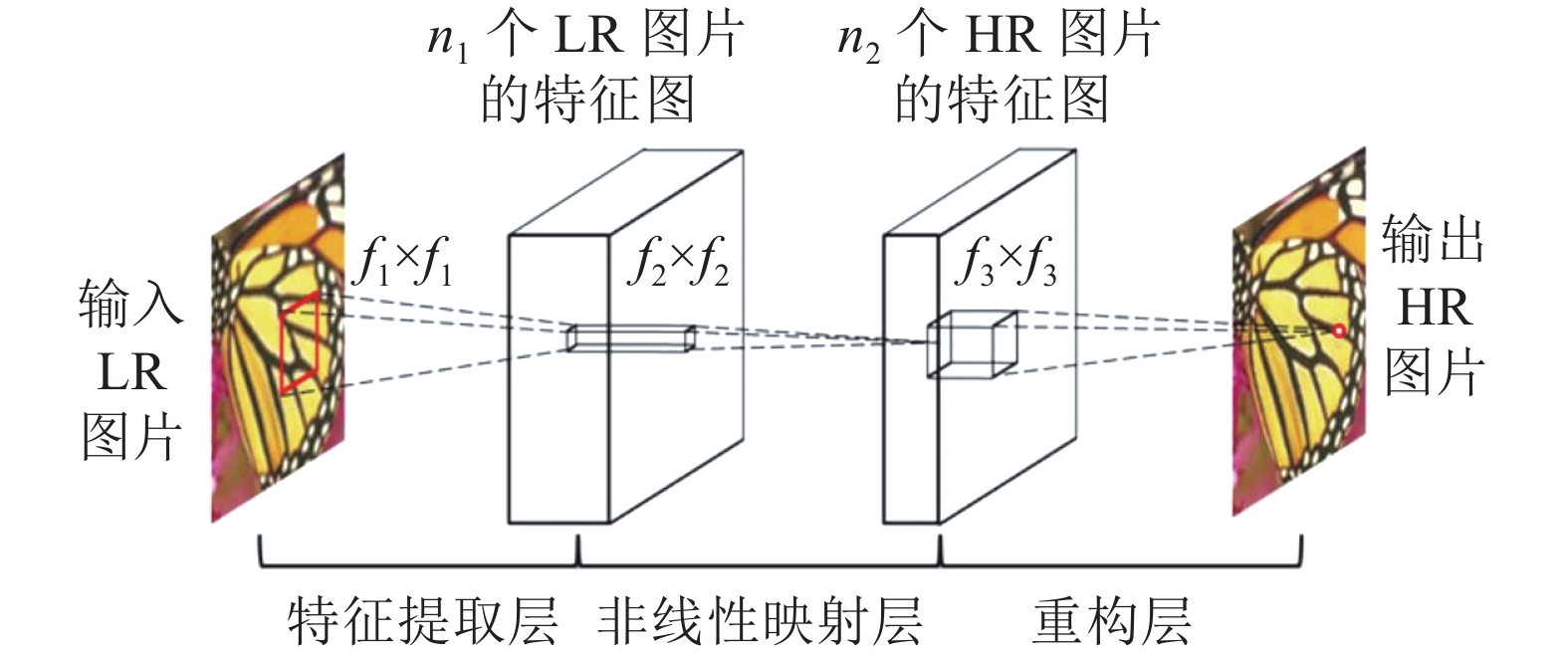

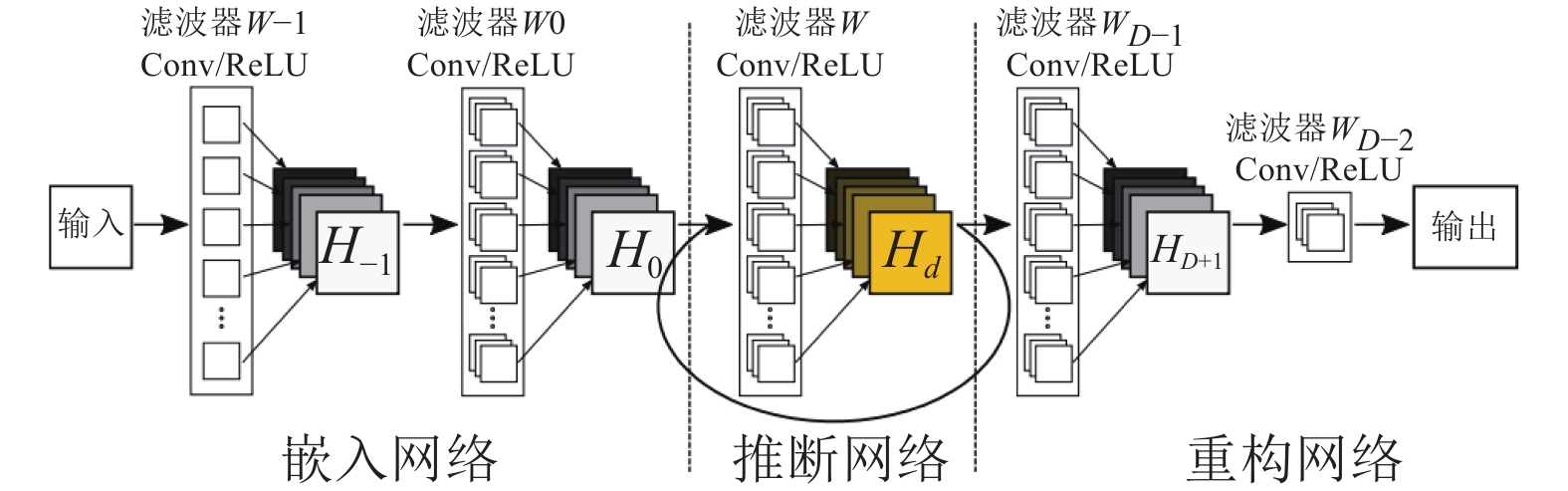

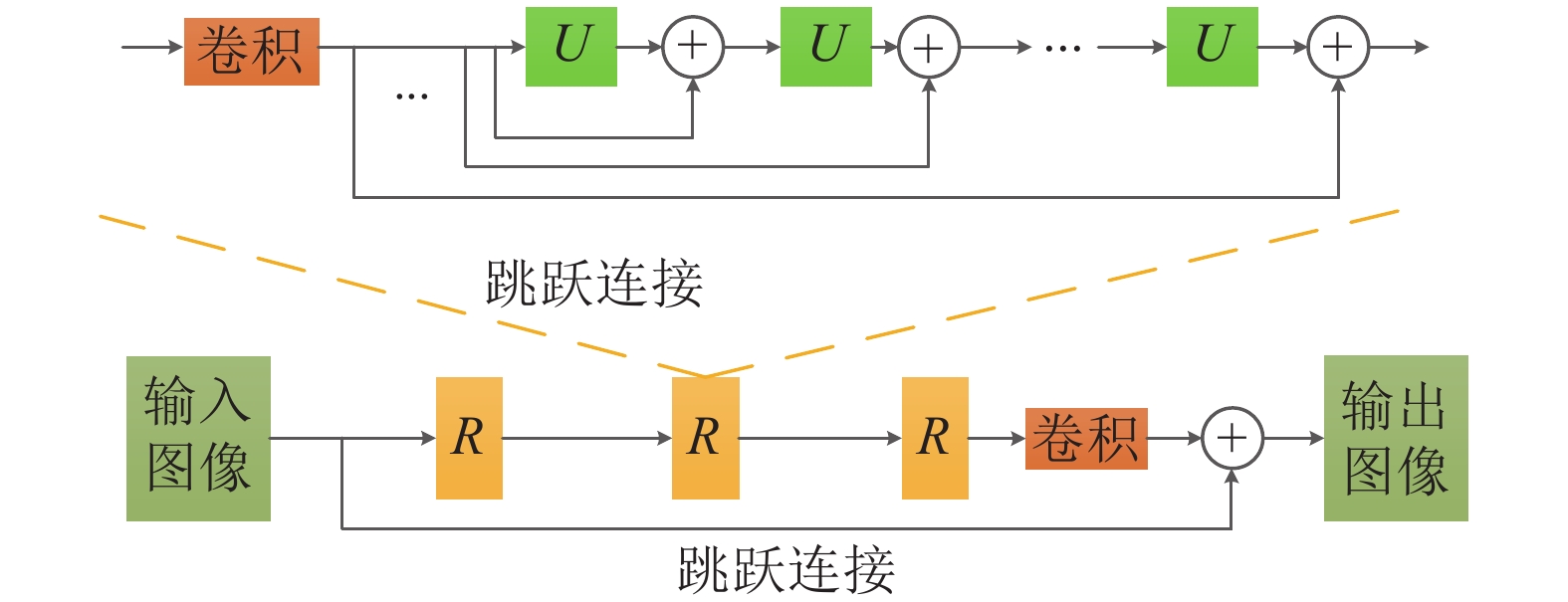

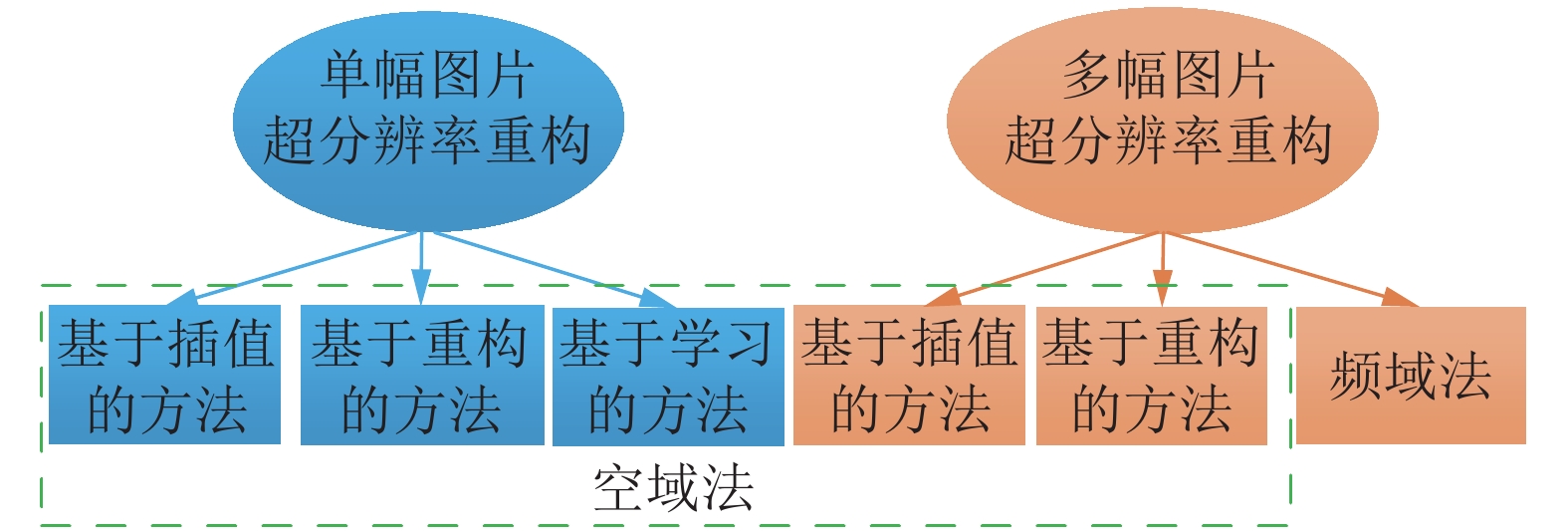

摘要: 图像超分辨率重构技术是一种以一幅或同一场景中的多幅低分辨率图像为输入, 结合图像的先验知识重构出一幅高分辨率图像的技术. 这一技术能够在不改变现有硬件设备的前提下, 有效提高图像分辨率. 深度学习近年来在图像领域发展迅猛, 它的引入为单幅图片超分辨率重构带来了新的发展前景. 本文主要对当前基于深度学习的单幅图片超分辨率重构方法的研究现状和发展趋势进行总结梳理: 首先根据不同的网络基础对十几种基于深度学习的单幅图片超分辨率重构的网络模型进行分类介绍, 分析这些模型在网络结构、输入信息、损失函数、放大因子以及评价指标等方面的差异; 然后给出它们的实验结果, 并对实验结果及存在的问题进行总结与分析; 最后给出基于深度学习的单幅图片超分辨率重构方法的未来发展方向和存在的挑战.Abstract: Super-resolution (SR) refers to an estimation of high resolution (HR) image from one or more low resolution (LR) observations of the same scene, usually employing digital image processing and machine learning techniques. This technique can effectively improve image resolution without upgrading hardware devices. In recent years, deep learning has developed rapidly in the image field, and it has brought promising prospects for single-image super-resolution (SISR). This paper summarizes the research status and development tendency of the current SISR methods based on deep learning. First, we introduce a series of networks characteristics for SISR, and analysis of these networks in the structure, input, loss function, scale factors and evaluation criterion are given. Then according to the experimental results, we discuss the existing problems and solutions. Finally, the future development and challenges of the SISR methods based on deep learning are presented.

-

表 1 三种网络模型对比

Table 1 Comparison of the above three models

网络模型 输入图像 网络层数 损失函数 评价指标 放大因子 SRCNN ILR 3 L2 范数 PSNR, SSIM, IFC 2, 3, 4 FSRCNN LR 8 + m L2 范数 PSNR, SSIM 2, 3, 4 ESPCN LR 4 L2 范数 PSNR, SSIM 2, 3, 4 表 2 基于残差网络的9种模型对比

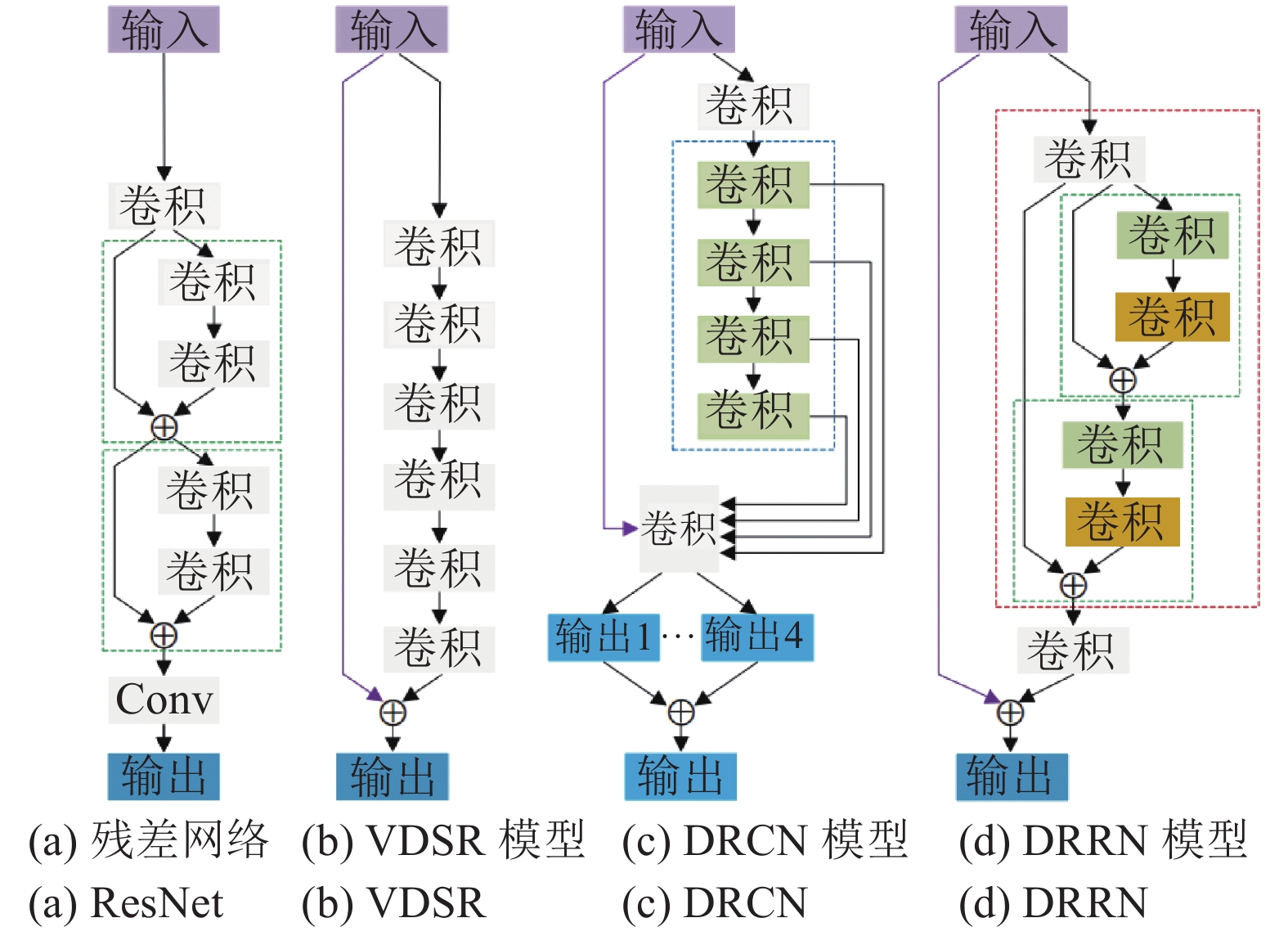

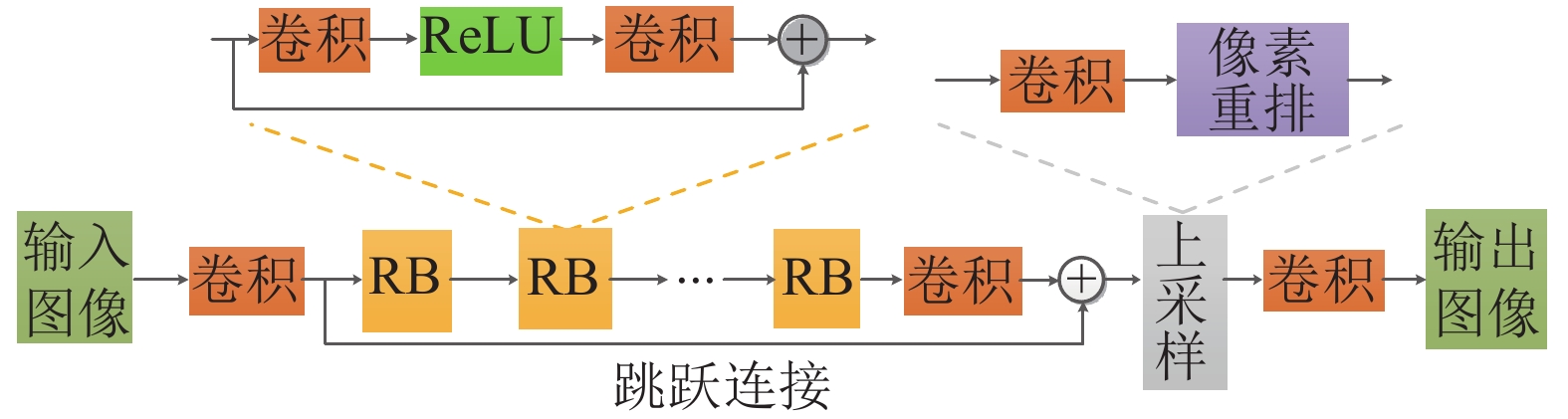

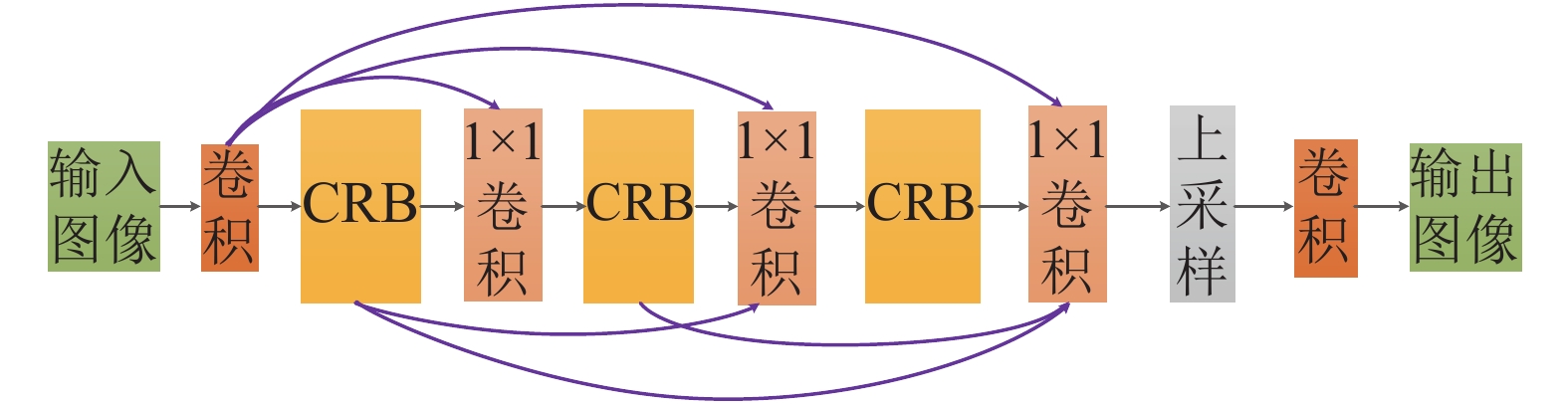

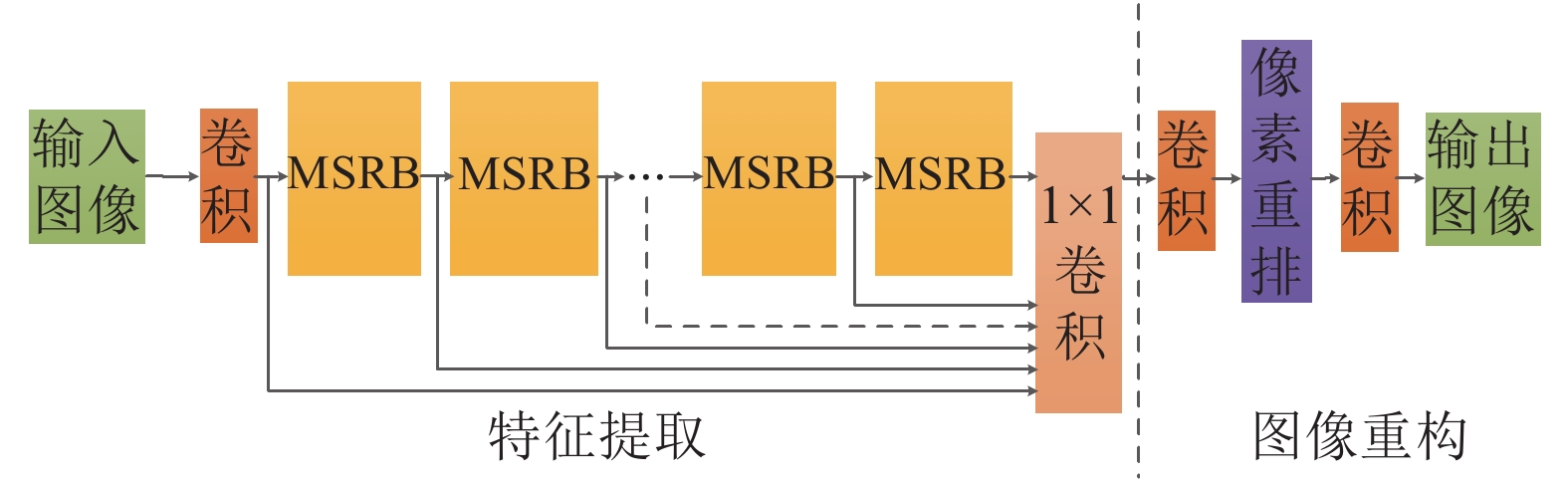

Table 2 Comparison of the nine models based on ResNet

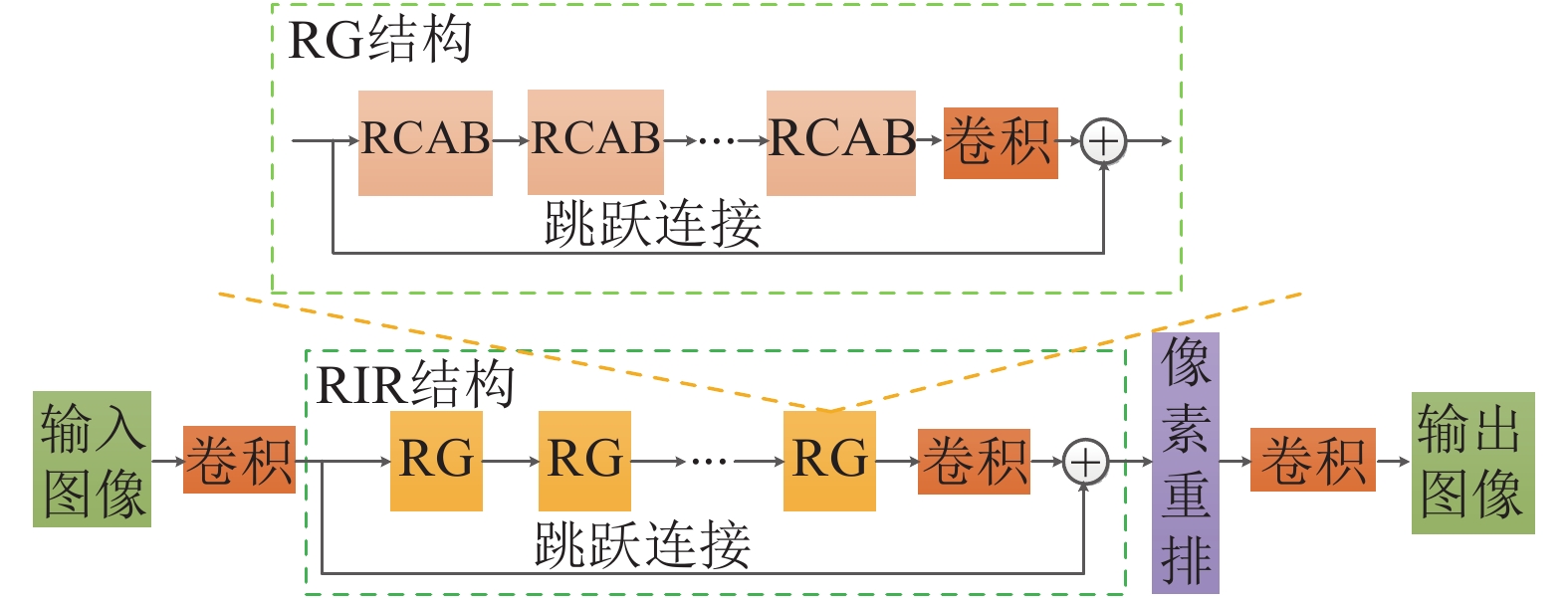

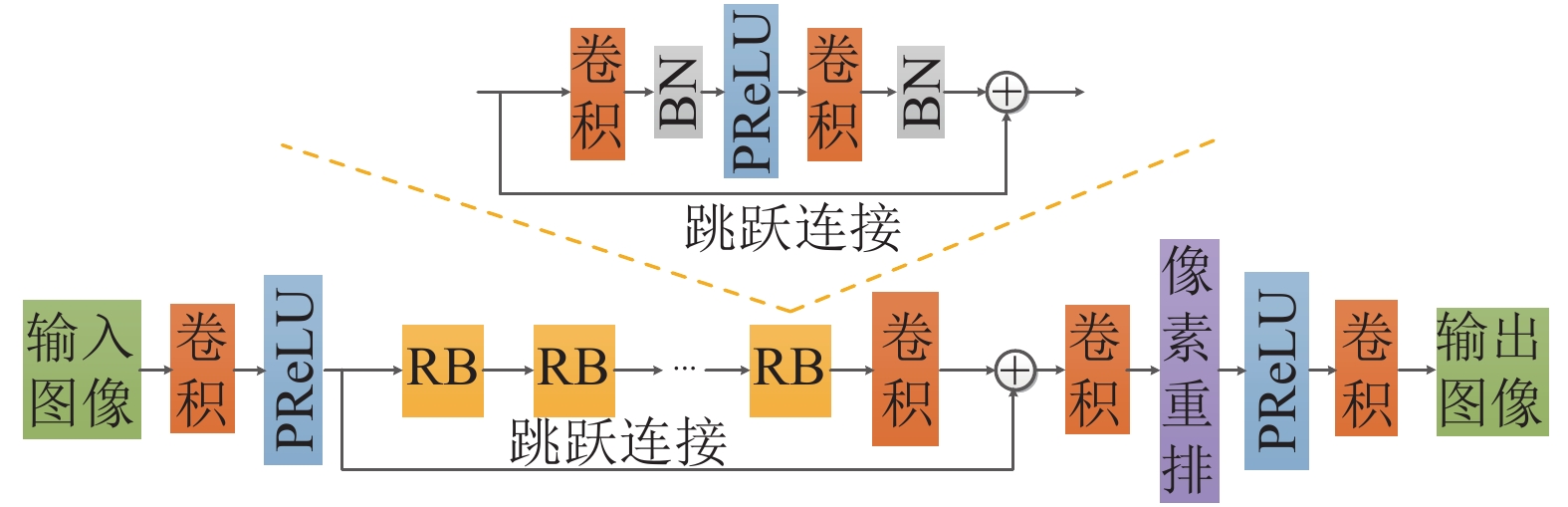

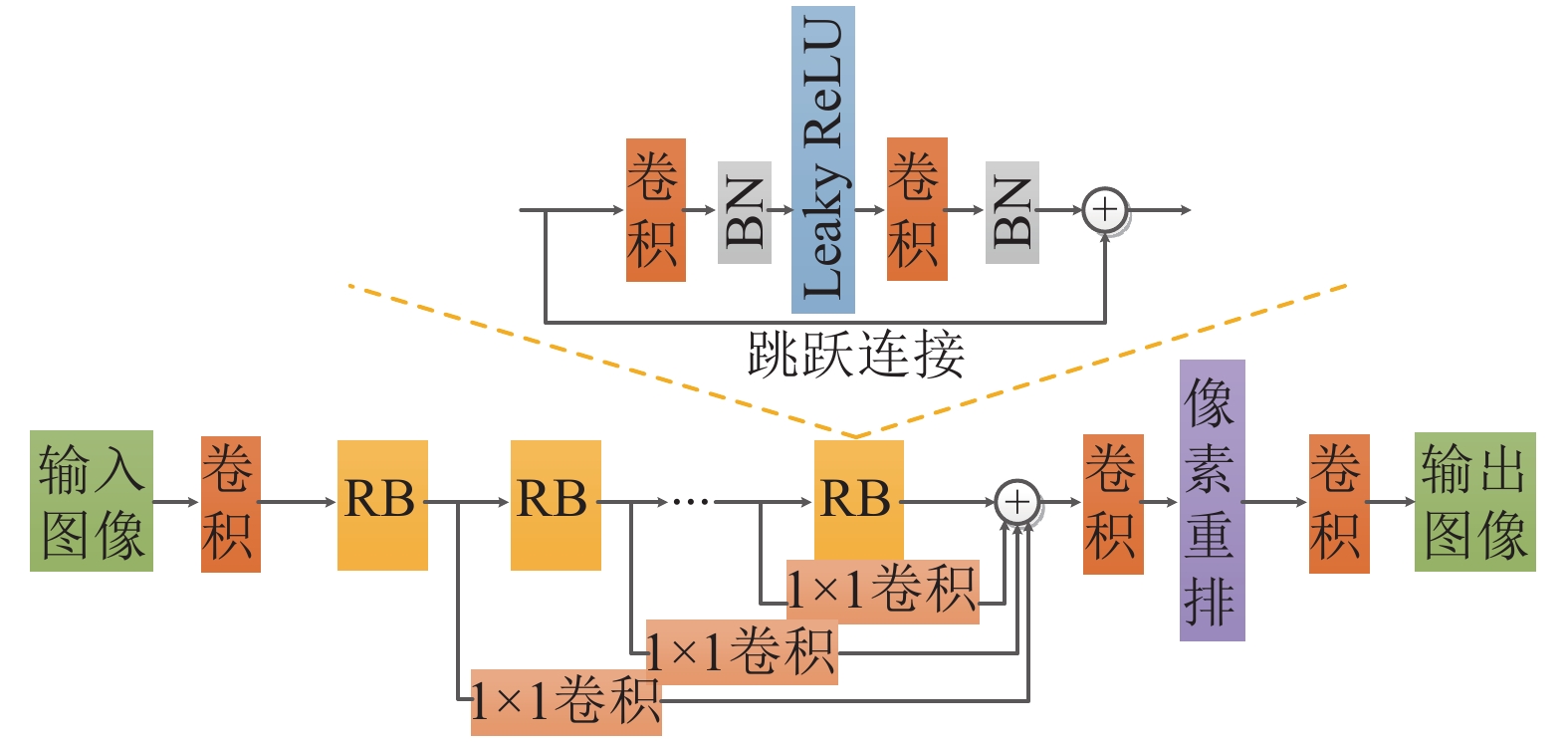

网络模型 输入图像 网络层数 损失函数 评价指标 放大因子 VDSR ILR 20 L2 范数 PSNR, SSIM 2, 3, 4 DRCN ILR 16 (Recursions) L2 范数 PSNR, SSIM 2, 3, 4 DRRN ILR 52 L2 范数 PSNR, SSIM,IFC 2, 3, 4 RED ILR 30 L2 范数 PSNR, SSIM 2, 3, 4 LapSRN LR 27 Charbonnier PSNR, SSIM, IFC 2, 4, 8 EDSR LR 32 (Blocks) L1 范数 PSNR, SSIM 2, 3, 4 CARN LR 32 L1 范数 PSNR, SSIM, 分类效果 2, 3, 4 MSRN LR 8 (Blocks) L1 范数 PSNR, SSIM, 分类效果 2, 3, 4, 8 RCAN LR 20 (Blocks) L1 范数 PSNR, SSIM, 分类效果 2, 3, 4, 8 表 3 基于生成对抗网络的3种模型对比

Table 3 Comparison of the three models based on GAN

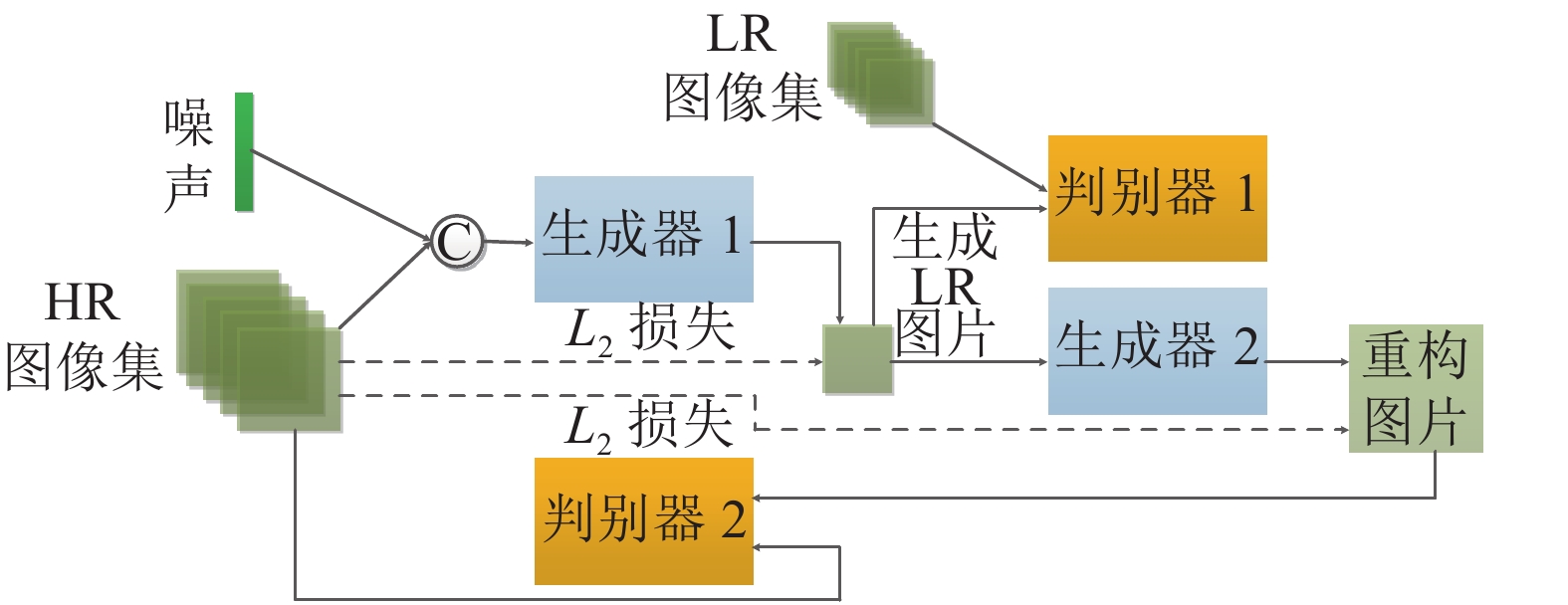

网络模型 输入图像 网络层数 损失函数 评价指标 放大因子 SRGAN LR 16 (Blocks) VGG PSNR, SSIM, MOS 2, 3, 4 SRFeat LR 16 (Blocks) VGG PSNR, SSIM, 分类效果 2, 3, 4 双GAN LR 16 (Blocks) L2 范数 PSNR 2, 3, 4 表 4 3种网络模型对比

Table 4 Comparison of the three models

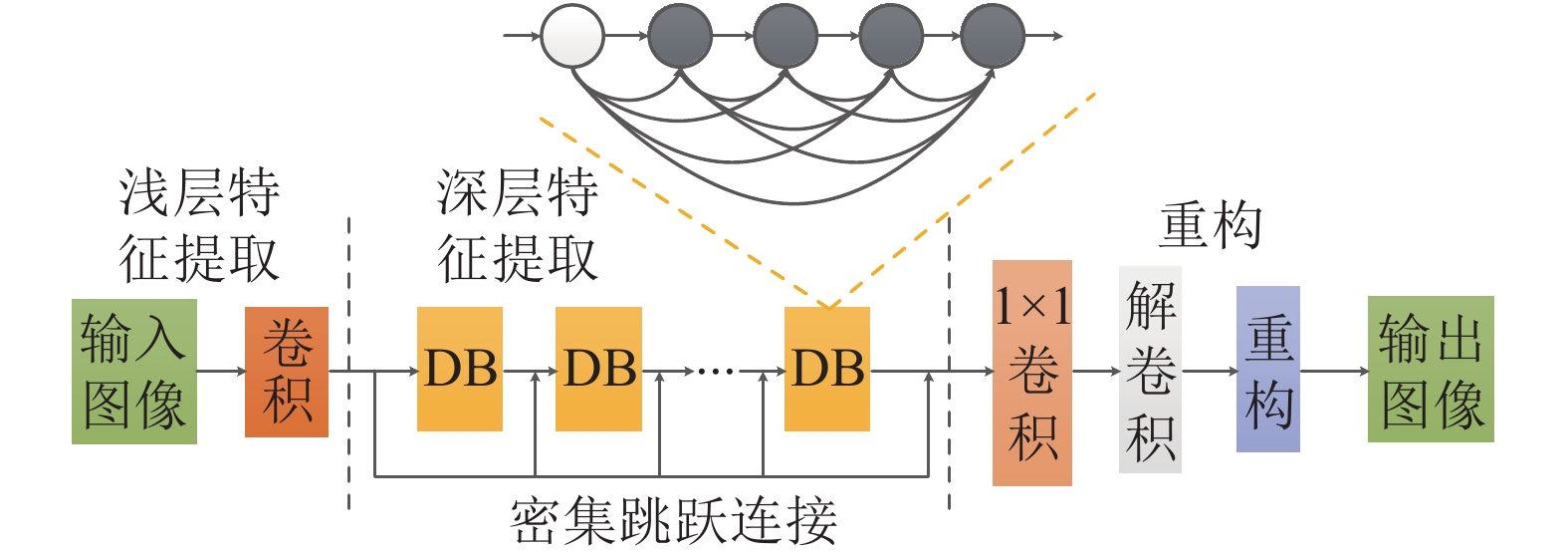

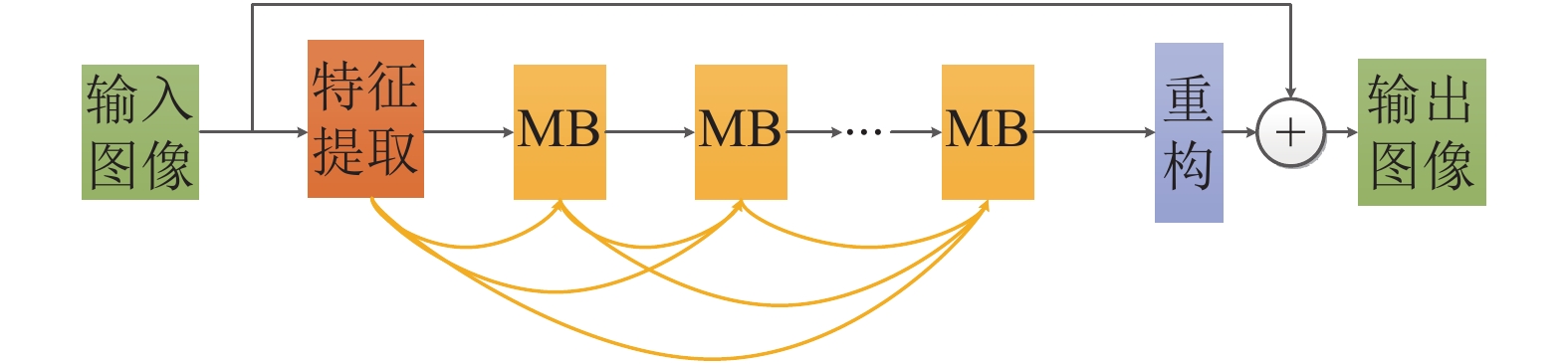

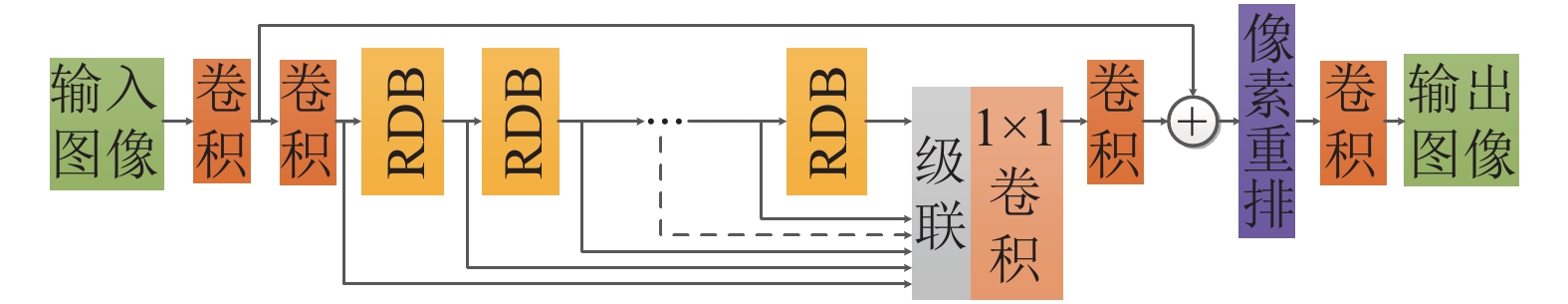

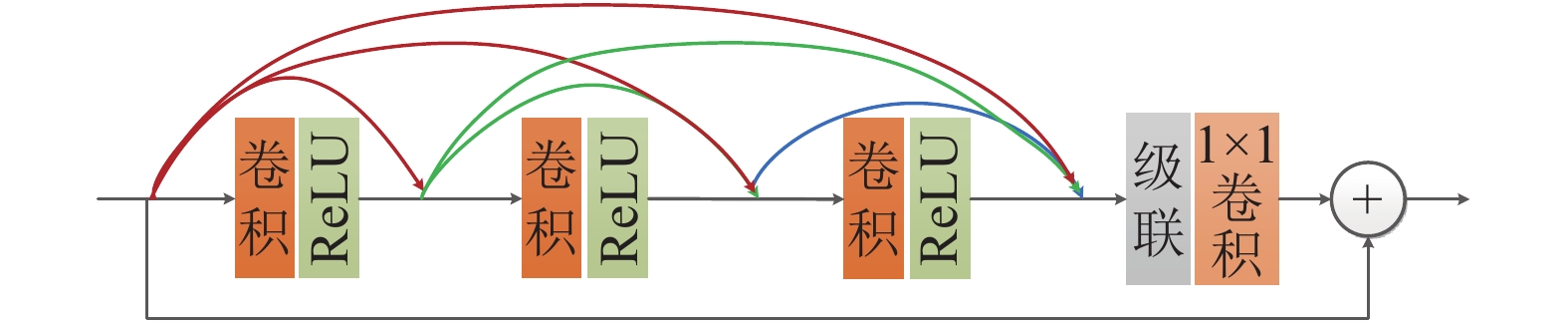

网络模型 递归单元 密集连接 特征融合 重构效果 SRResNet RB 无 无 细节明显 DenseNet DB 无 所有DB之后 − SRDenseNet DB DB之间 所有DB之后 较好 MemNet MB MB之间 无 较好 RDN RDB RDB内部 RDB内部和所有RDB之后 好 表 5 基于其他网络的5种模型对比

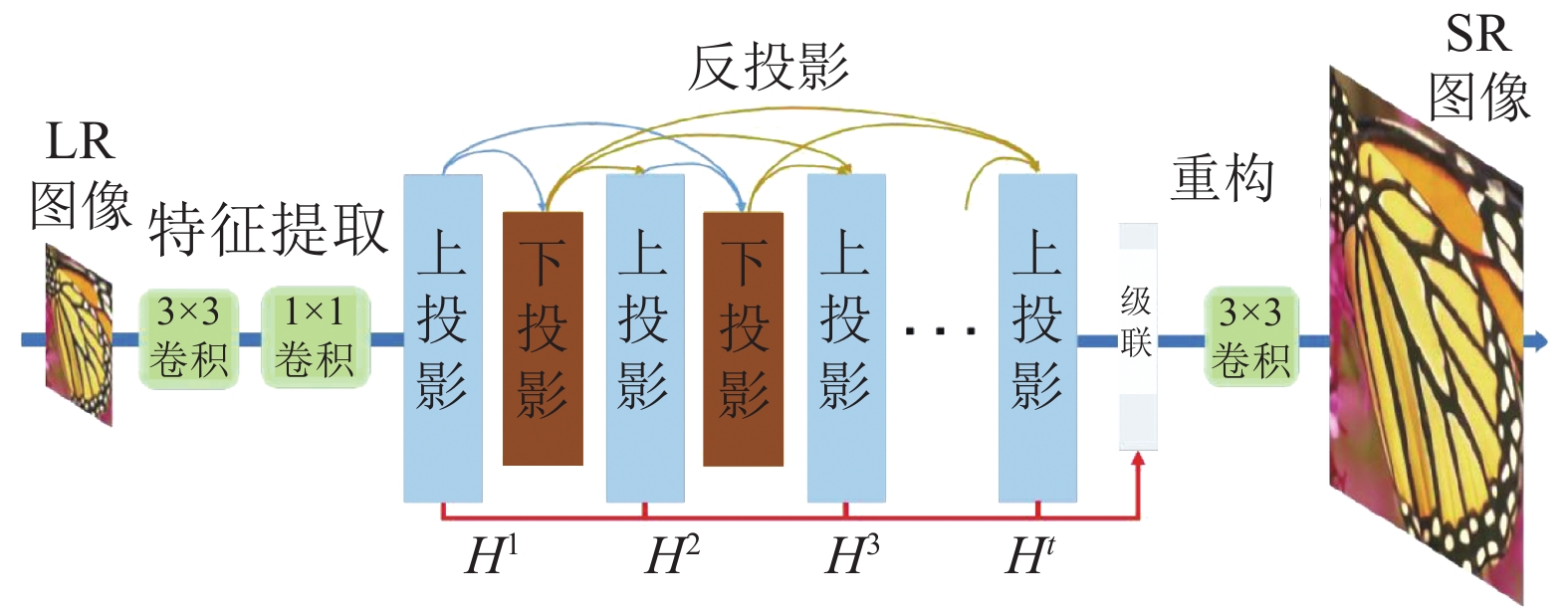

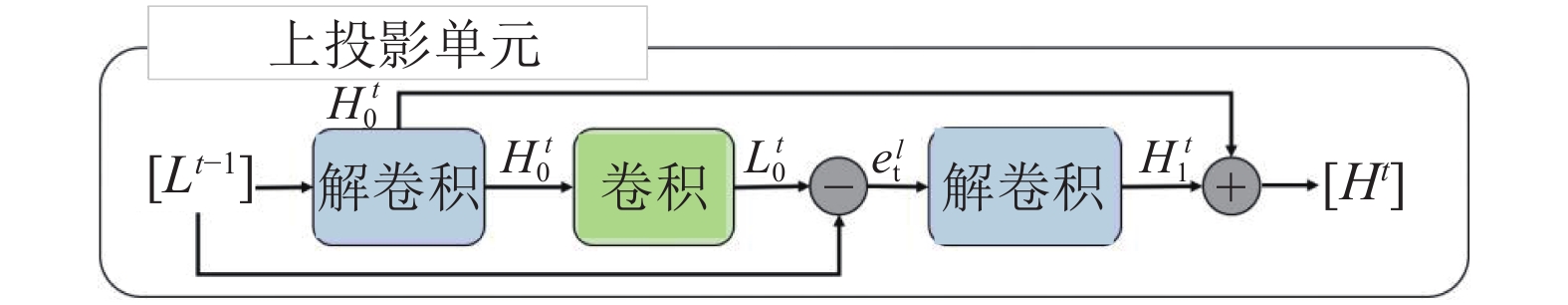

Table 5 Comparison of the five models based on other networks

网络模型 输入图像 网络层数 损失函数 评价指标 放大因子 SRDenseNet LR 8 (Blocks) L2 范数 PSNR, SSIM 4 MemNet ILR 80 L2 范数 PSNR, SSIM 2, 3, 4 RDN LR 20 (Blocks) L1 范数 PSNR, SSIM 2, 3, 4 IDN LR 4 (Blocks) L1 范数 PSNR, SSIM, IFC 2, 3, 4 DBPN LR 2/4/6 (Units) L2 范数 PSNR, SSIM 2, 4, 8 表 6 各个网络模型在Set5、Set14、BSD100、Urban100和Manga109测试集上×2倍数重构结果(单位: dB/-)

Table 6 Quantitative results of the SR models on Set5, Set14, BSD100, Urban100 and Manga109 with scale factor ×2 (Unit: dB/-)

放大尺度 网络模型 Set5 (PSNR/SSIM) Set14 (PSNR/SSIM) BSD100 (PSNR/SSIM) Urban100 (PSNR/SSIM) Manga109 (PSNR/SSIM) SRCNN[5] 33.66/0.9542 32.45/0.9067 31.36/0.8879 29.50/0.8946 35.60/0.9663 FSRCNN[26] 37.05/0.9560 32.66/0.9090 31.53/0.8920 29.88/0.9020 36.67/0.9694 ESPCN[31] 37.00/0.9559 32.75/0.9098 31.51/0.8939 29.87/0.9065 36.21/0.9694 VDSR[33] 37.53/0.9588 33.03/0.9124 31.90/0.8960 30.76/0.9140 37.22/0.9729 DRCN[34] 37.63/0.9588 33.04/0.9118 31.85/0.8942 30.75/0.9133 37.63/0.9723 DRRN[35] 37.74/0.9591 33.23/0.9136 32.05/0.8973 31.23/0.9188 37.60/0.9736 ×2 RED[36] 37.66/0.9599 32.94/0.9144 31.99/0.8974 − − LapSRN[37] 37.52/0.9590 33.08/0.9130 31.08/0.8950 30.41/0.9100 37.27/0.9855 EDSR[38] 38.11/0.9602 33.92/0.9195 32.32/0.9013 32.93/0.9351 39.10/0.9773 CARN-M[40] 37.53/0.9583 33.26/0.9141 31.92/0.8960 30.83/0.9233 − MSRN[32] 38.08/0.9605 33.74/0.9170 32.23/0.9013 32.22/0.9326 38.82/0.9868 RCAN[42] 38.33/0.9617 34.23/0.9225 32.46/0.9031 33.54/0.9399 39.61/0.9788 MemNet[47] 37.78/0.9597 33.28/0.9142 32.08/0.8978 31.31/0.9195 37.72/0.9740 RDN[48] 38.24/0.9614 34.01/0.9212 32.34/0.9017 32.89/0.9353 39.18/0.9780 IDN[49] 37.83/0.9600 33.30/0.9148 32.08/0.8985 31.27/0.9196 − DBPN[50] 38.09/0.9600 33.85/0.9190 32.27/0.9000 32.55/0.9324 38.89/0.9775 表 7 各个网络模型在Set5、Set14、BSD100、Urban100和Manga109测试集上×3倍数重构结果(单位: dB/-)

Table 7 Quantitative results of the SR models on Set5, Set14, BSD100, Urban100 and Manga109 with scale factor ×3 (Unit: dB/-)

放大尺度 网络模型 Set5 (PSNR/SSIM) Set14 (PSNR/SSIM) BSD100 (PSNR/SSIM) Urban100 (PSNR/SSIM) Manga109 (PSNR/SSIM) SRCNN[5] 32.75/0.9090 29.30/0.8215 28.41/0.7863 26.24/0.7989 30.48/0.9117 FSRCNN[26] 33.18/0.9140 29.37/0.8240 28.53/0.7910 26.43/0.8080 31.10/0.9210 ESPCN[31] 33.02/0.9135 29.49/0.8271 28.50/0.7937 26.41/0.8161 30.79/0.9181 VDSR[33] 33.68/0.9201 29.86/0.8312 28.83/0.7966 27.15/0.8315 32.01/0.9310 DRCN[34] 33.85/0.9215 29.89/0.8317 28.81/0.7954 27.16/0.8311 32.31/0.9328 DRRN[35] 34.03/0.9244 29.96/0.8349 28.95/0.8004 27.53/0.8378 32.42/0.9359 ×3 RED[36] 33.82/0.9230 29.61/0.8341 28.93/0.7994 − − EDSR[38] 34.65/0.9280 30.52/0.8462 29.25/0.8093 28.80/0.8653 34.17/0.9476 CARN-M[40] 33.99/0.9236 30.08/0.8367 28.91/0.8000 26.86/0.8263 − MSRN[32] 34.38/0.9262 30.34/0.8395 29.08/0.8041 28.08/0.5554 33.44/0.9427 RCAN[42] 34.85/0.9305 30.76/0.8494 29.39/0.8122 29.31/0.8736 34.76/0.9513 MemNet[47] 34.09/0.9248 30.00/0.8350 28.96/0.8001 27.56/0.8376 32.51/0.9369 RDN[48] 34.71/0.9296 30.57/0.8468 29.26/0.8093 28.80/0.8653 34.13/0.9484 IDN[49] 34.11/0.9253 29.99/0.8354 28.95/0.8031 27.42/0.8359 − 表 8 各个网络模型在Set5、Set14、BSD100、Urban100和Manga109测试集上×4倍数重构结果(单位: dB/-)

Table 8 Quantitative results of the SR models on Set5, Set14, BSD100, Urban100 and Manga109 with scale factor ×4 (Unit: dB/-)

放大尺度 网络模型 Set5 (PSNR/SSIM) Set14 (PSNR/SSIM) BSD100 (PSNR/SSIM) Urban100 (PSNR/SSIM) Manga109 (PSNR/SSIM) SRCNN[5] 30.48/0.8628 27.50/0.7513 26.90/0.7101 24.52/0.7221 27.58/0.8555 FSRCNN[26] 30.72/0.8660 27.61/0.7550 26.98/0.7150 24.62/0.7280 27.90/0.8610 ESPCN[31] 30.66/0.8646 27.71/0.7562 26.98/0.7124 24.60/0.7360 27.70/0.8560 VDSR[33] 31.35/0.8830 28.02/0.7680 27.29/0.7251 25.18/0.7540 28.83/0.8870 DRCN[34] 31.56/0.8810 28.15/0.7627 27.24/0.7150 25.15/0.7530 28.98/0.8816 DRRN[35] 31.68/0.8888 28.21/0.7721 27.38/0.7284 25.44/0.7638 29.19/0.8914 RED[36] 31.51/0.8869 27.86/0.7718 27.40/0.7290 − − LapSRN[37] 31.54/0.8850 28.19/0.7720 27.32/0.7270 25.27/0.7560 29.09/0.8900 ×4 EDSR[38] 32.46/0.8968 28.80/0.7876 27.71/0.7420 26.64/0.8033 31.02/0.9148 CARN-M[40] 31.92/0.8903 28.42/0.7762 27.44/0.7304 25.63/0.7688 − MSRN[32] 32.07/0.8903 28.60/0.7751 27.52/0.7273 26.04/0.7896 30.17/0.9034 RCAN[42] 32.73/0.9013 28.98/0.7910 27.85/0.7455 27.10/0.8142 31.65/0.9208 SRDenseNet[46] 32.02/0.8934 28.50/0.7782 27.53/0.7337 26.05/0.7819 − MemNet[47] 31.74/0.8893 29.26/0.7723 27.40/0.7281 25.50/0.7630 29.42/0.8942 RDN[48] 32.47/0.8990 28.81/0.7871 27.72/0.7419 26.61/0.8028 31.00/0.9151 IDN[49] 31.82/0.8930 28.25/0.7730 27.41/0.7297 25.41/0.7632 − DBPN[50] 32.47/0.8980 28.82/0.7860 27.72/0.7400 26.38/0.7946 30.91/0.9137 表 9 各个网络模型在Set5、Set14、BSD100、Urban100和Manga109测试集上×8倍数重构结果(单位: dB/-)

Table 9 Quantitative results of the SR models on Set5, Set14, BSD100, Urban100 and Manga109 with scale factor ×8 (Unit: dB/-)

放大尺度 网络模型 Set5 (PSNR/SSIM) Set14 (PSNR/SSIM) BSD100 (PSNR/SSIM) Urban100 (PSNR/SSIM) Manga109 (PSNR/SSIM) LapSRN[37] 26.14/0.7380 24.44/0.6230 24.54/0.5860 21.81/0.5810 23.39/0.7350 ×8 MSRN[32] 26.59/0.7254 24.88/0.5961 24.70/0.5410 22.37/0.5977 24.28/0.7517 RCAN[49] 27.47/0.7913 25.40/0.6553 25.05/0.6077 23.22/0.6524 25.58/0.8092 DBPN[50] 27.12/0.7840 25.13/0.6480 24.88/0.6010 22.73/0.6312 25.14/0.7987 表 10 各个网络模型的网络基础、模型框架、网络设计、实验平台及运行时间总结

Table 10 Summary of the SR models in network basics, frameworks, network design, platform and training/testing time

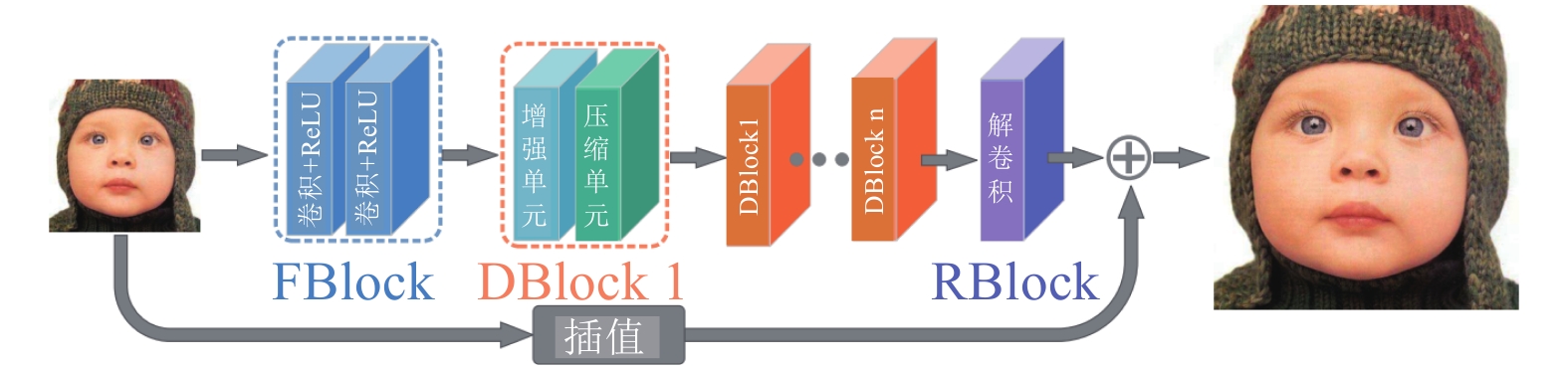

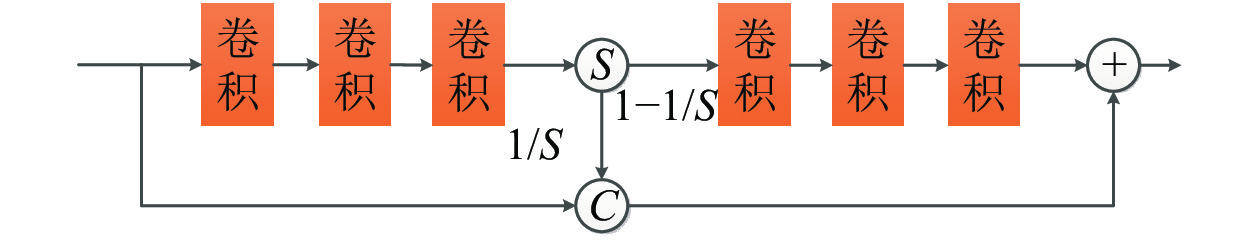

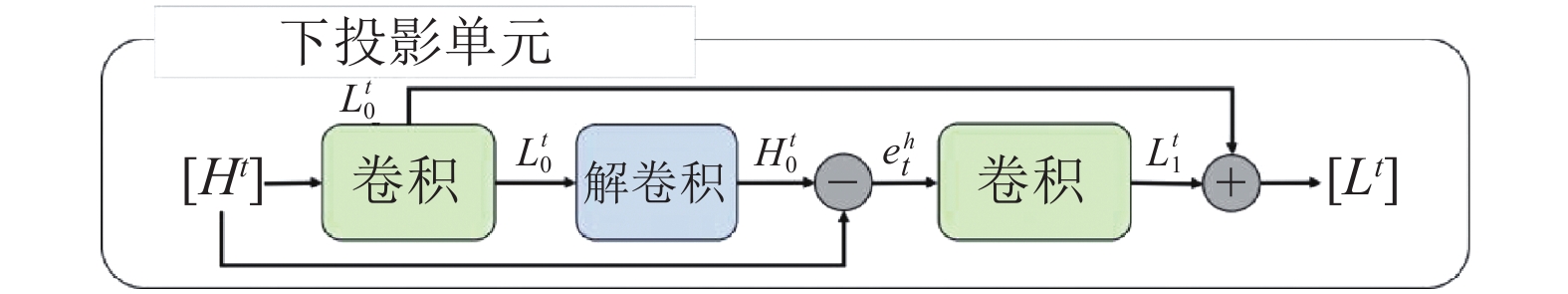

网络模型 网络基础 模型框架 结构设计特点 实验平台 训练/测试时间 SRCNN[5] CNN 预插值 经典 CNN 结构 CPU − FSRCNN[26] CNN 后插值 (解卷积) 压缩模块 i7 CPU 0.4 s (测试) ESPCN[31] CNN 后插值 (亚像素卷积) 亚像素卷积 K2 GPU 4.7 ms (测试) VDSR[33] ResNet 预插值 残差学习, 自适应梯度裁剪 Titan Z GPU 4 h (训练) DRCN[34] ResNet 预插值 递归结构, 跳跃连接 Titan X GPU 6 d (训练) DRRN[35] ResNet 预插值 递归结构, 残差学习 Titan X GPU$\times $2 4 d/0.25 s RED[36] ResNet 逐步插值 解卷积−反卷积, 跳跃连接 Titan X GPU 3.17 s (测试) LapSRN[37] ResNet 逐步插值 金字塔结构, 特征−图像双通道 Titan X GPU 0.02 s (测试) EDSR[38] ResNet 后插值 (亚像素卷积) 去 BN 层, Self-ensemble Titan X GPU 8 d (训练) CARN[40] ResNet 后插值 (亚像素卷积) 递归结构, 残差学习, 分组卷积 − − MSRN[32] ResNet 后插值 (亚像素卷积) 多尺度特征提取, 残差学习 Titan Xp GPU − RCAN[42] ResNet 后插值 (亚像素卷积) 递归结构, 残差学习, 通道注意机制 Titan Xp GPU − SRGAN[43] GAN 后插值 (亚像素卷积) 生成器预训练 Telsa M40 GPU − SRFeat[44] GAN 后插值 (亚像素卷积) 特征判别器, 图像判别器 Titan Xp GPU − 双GAN[45] GAN − 两个 GAN 网络构成图像降质与重构闭合回路 − − SRDenseNet[46] 其他 后插值 (解卷积) 密集连接, 跳跃连接 Titan X GPU 36.8 ms (测试) MemNet[47] 其他 预插值 记忆单元, 跳跃连接 Telsa P40 GPU 5 d/0.85 s RDN[48] 其他 后插值 (解卷积) 密集连接, 残差学习 Titan Xp GPU 1 d (训练) IDN[49] 其他 后插值 (解卷积) 蒸馏机制 Titan X GPU 1 d (训练) DBPN[50] 其他 迭代插值 上、下投影单元 Titan X GPU 4 d (训练) 表 11 常用图像质量评价指标的计算方法和优缺点总结

Table 11 Summary of evaluation metrics

评价指标 计算方法 优点 缺点 PSNR $10{\lg}\frac{MAX_{f} }{MSE}$ 能够衡量像素间损失, 是图像

最常用的客观评价指标之一.不能全面评价图像质量, 如PSNR值高

不代表图像的视觉质量高.SSIM $\frac{(2\mu_{x}\mu_{x_{0}}+C_{1})\times (2\sigma_{xx_{0}}+C_{2})}{(\mu_{x}^{2}+\mu_{x_{0}}^{2}+C_{1})\times (\sigma_{x}^{2}+\sigma_{x_{0}}^{2}+C_{2})}$ 能够衡量图片间的统计关系, 是图

像最常用的客观评价指标之一.不适用于整个图像评价, 只适用于图像

的局部结构相似度评价.MOS 由多位评价者对于重构结果进行评价, 分数

从 1 到 5 代表由坏到好.评价结果更符合人的视觉效果且随着评

价者数目增加, 评价结果更加可靠.耗时耗力, 成本较高, 评价者数量不多的

情况下易受评价者主观影响, 且评分不

连续易造成较大的误差. -

[1] 苏衡, 周杰, 张志浩. 超分辨率图像重建方法综述. 自动化学报, 2013, 39(8): 1202−1213Su Heng, Zhou Jie, Zhang Zhi-Hao. Survey of super-resolution image reconstruction methods. Acta Automatica Sinica, 2013, 39(8): 1202−1213 [2] Harris J L. Diffraction and resolving power. Journal of the Optical Society of America, 1964, 54(7): 931−936 [3] Goodman J W. Introduction to Fourier Optics. New York: McGraw-Hill, 1968 [4] Tsai R Y, Huang T S. Multiframe image restoration and registration. In: Advances in Computer Vision and Image Processing. Greenwich, CT, England: JAI Press, 1984. 317−339 [5] Dong C, Loy C C, He K M, Tang X O. Learning a deep convolutional network for image super-resolution. In: Proceedings of ECCV 2014 European Conference on Computer Vision. Cham, Switzerland: Springer, 2014. 184−199 [6] 何阳, 黄玮, 王新华, 郝建坤. 稀疏阈值的超分辨率图像重建. 中国光学, 2016, 9(5): 532−539 doi: 10.3788/co.20160905.0532He Yang, Huang Wei, Wang Xin-Hua, Hao Jian-Kun. Super-resolution image reconstruction based on sparse threshold. Chinese Optics, 2016, 9(5): 532−539 doi: 10.3788/co.20160905.0532 [7] 李方彪. 红外成像系统超分辨率重建技术研究[博士学位论文], 中国科学院大学, 中国, 2018Li Fang-Biao. Research on Super-Resolution Reconstruction of Infrared Imaging System [Ph.D. dissertation], University of Chinese Academy of Sciences, China, 2018 [8] Irani M, Peleg S. Improving resolution by image registration. Graphical Models and Image Processing, 1991, 53(3): 231−239 [9] Kim K I, Kwon Y. Single-image super-resolution using sparse regression and natural image prior. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2010, 32(6): 1127−1133 [10] Aly H A, Dubois E. Image up-sampling using total-variation regularization with a new observation model. IEEE Transactions on Image Processing, 2005, 14(10): 1647−1659 [11] Shan Q, Li Z R, Jia J Y, Tang C K. Fast image/video upsampling. ACM Transactions on Graphics, 2008, 27(5): 153 [12] Hayat K. Super-resolution via deep learning. arXiv: 1706.09077, 2017 [13] 孙旭, 李晓光, 李嘉锋, 卓力. 基于深度学习的图像超分辨率复原研究进展. 自动化学报, 2017, 43(5): 697−709Sun Xu, Li Xiao-Guang, Li Jia-Feng, Zhuo Li. Review on deep learning based image super-resolution restoration algorithms. Acta Automatica Sinica, 2017, 43(5): 697−709 [14] He H, Siu W C. Single image super-resolution using Gaussian process regression. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Providence, RI, USA: IEEE, 2011. 449−456 [15] Zhang K B, Gao X B, Tao D C, Li X L. Single image super-resolution with non-local means and steering kernel regression. IEEE Transactions on Image Processing, 2012, 21(11): 4544−4556 [16] Chan T M, Zhang J P, Pu J, Huang H. Neighbor embedding based super-resolution algorithm through edge detection and feature selection. Pattern Recognition Letters, 2009, 30(5): 494−502 [17] Yang J C, Wright J, Huang T S, Ma Y. Image super-resolution via sparse representation. IEEE Transactions on Image Processing, 2010, 19(11): 2861−2873 doi: 10.1109/TIP.2010.2050625 [18] Timofte R, Agustsson E, van Gool L, Yang M H, Zhang L, Lim B, et al. NTIRE 2017 challenge on single image super-resolution: Methods and results. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Hawaii, USA: IEEE, 2017. 1110−1121 [19] Yue L W, Shen H F, Li J, Yuan Q Q, Zhang H Y, Zhang L P. Image super-resolution: The techniques, applications, and future. Signal Processing, 2016, 128: 389−408 doi: 10.1016/j.sigpro.2016.05.002 [20] Yang C Y, Ma C, Yang M H. Single-image super-resolution: A benchmark. In: Proceedings of ECCV 2014 Conference on Computer Vision. Switzerland: Springer, 2014. 372−386 [21] He K M, Zhang X Y, Ren S Q, Sun J. Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA: IEEE, 2016. 770−778 [22] Goodfellow I J, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial networks. In: Advances in Neural Information Processing Systems. Montreal, Quebec, Canada: Curran Associates, Inc., 2014. 1110−1121 [23] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems. Lake Tahoe, Nevada, USA: Curran Associates, Inc., 2012. 1097−1105 [24] Huang G, Liu Z, van der Maaten L, Weinberger K Q. Densely connected convolutional networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. 2261−2269 [25] Dong C, Loy C C, He K M, Tang X O. Image super-resolution using deep convolutional networks. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2016, 38(2): 295−307 [26] Dong C, Chen C L, Tang X O. Accelerating the super-resolution convolutional neural network. In: Proceedings of European Conference on Computer Vision. Amsterdam, Netherlands: Springer, 2016. 391−407 [27] Luo Y M, Zhou L G, Wang S, Wang Z Y. Video satellite imagery super resolution via convolutional neural networks. IEEE Geoscience & Remote Sensing Letters, 2017, 14(12): 2398−2402 [28] Ducournau A, Fablet R. Deep learning for ocean remote sensing: An application of convolutional neural networks for super-resolution on satellite-derived SST data. In: Proceedings of the 9th IAPR Workshop on Pattern Recognition in Remote Sensing. Cancun, Mexico: IEEE, 2016. 1−6 [29] Rasti P, Uiboupin T, Escalera S, Anbarjafari G. Convolutional neural network super resolution for face recognition in surveillance monitoring. In: Proceedings of the International Conference on Articulated Motion & Deformable Objects. Switzerland: Springer, 2016. 175−184 [30] Zhang H Z, Casaseca-de-la-Higuera P, Luo C B, Wang Q, Kitchin M, Parmley A, et al. Systematic infrared image quality improvement using deep learning based techniques. In: Proceedings of the SPIE 10008, Remote Sensing Technologies and Applications in Urban Environments. SPIE, 2016. [31] Shi W Z, Caballero J, Huszar F, Totz J, Aitken A P, Bishop R, et al. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA: IEEE, 2016. 1874−1883 [32] Li J C, Fang F M, Mei K F, Zhang G X. Multi-scale residual network for image super-resolution. In: Proceedings of 2018 ECCV European Conference on Computer Vision. Munich, Germany: Springer, 2018. 527−542 [33] Kim J, Lee J K, Lee K M. Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA: IEEE, 2016. 1646−1654 [34] Kim J, Lee J K, Lee K M. Deeply-recursive convolutional network for image super-resolution. In: Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA: IEEE, 2016. 1637−1645 [35] Tai Y, Yang J, Liu X M. Image super-resolution via deep recursive residual network. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. 2790−2798 [36] Mao X J, Shen C H, Yang Y B. Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Red Hook, NY, United States: Curran Associates Inc., 2016. 2810−2818 [37] Lai W S, Huang J B, Ahuja N, Yang M H. Deep laplacian pyramid networks for fast and accurate super-resolution. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. 5835−5843 [38] Lim B, Son S, Kim H, Nah S, Lee K M. Enhanced deep residual networks for single image super-resolution. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops. Honolulu, HI, USA: IEEE, 2017. 1132−1140 [39] Howard A G, Zhu M L, Chen B, Kalenichenko D, Wang W J, Weyand T, et al. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv:1704.04861, 2017 [40] Ahn N, Kang B, Sohn K A. Fast, accurate, and lightweight super-resolution with cascading residual network. In: Proceedings of 2018 ECCV European Conference on Computer Vision. Munich, Germany: Springer, 2018. 256−272 [41] Szegedy C, Liu W, Jia Y Q, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, NA, USA: IEEE, 2015. 1−9 [42] Zhang Y L, Li K P, Li K, Wang L C, Zhong B N, Fu Y. Image super-resolution using very deep residual channel attention networks. In: Proceedings of 2018 ECCV European Conference on Computer Vision. Munich, Germany: Springer, 2018. 294−310 [43] Ledig C, Theis L, Huszar F, Caballero J, Cunningham A, Acosta A, et al. Photo-realistic single image super-resolution using a generative adversarial network. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, HI, USA: IEEE, 2017. 105−114 [44] Park S J, Son H, Cho S, Hong K S, Lee S. SRFeat: Single image super-resolution with feature discrimination. In: Proceedings of 2018 ECCV European Conference on Computer Vision. Munich, Germany: Springer, 2018. 455−471 [45] Bulat A, Yang J, Georgios T. To learn image super-resolution, use a GAN to learn how to do image degradation first. In: Proceedings of 2018 ECCV European Conference on Computer Vision. Munich, Germany: Springer, 2018. 187−202 [46] Tong T, Li G, Liu X J, Gao Q Q. Image super-resolution using dense skip connections. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 4809−4817 [47] Tai Y, Yang J, Liu X M, Xu C Y. MemNet: A persistent memory network for image restoration. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017. 4549−4557 [48] Zhang Y L, Tian Y P, Kong Y, Zhong B N, Fu Y. Residual dense network for image super-resolution. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 2472−2481 [49] Hui Z, Wang X M, Gao X B. Fast and accurate single image super-resolution via information distillation network. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 723−731 [50] Haris M, Shakhnarovich G, Ukita N. Deep back-projection networks for super-resolution. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 1664−1673 [51] 潘宗序, 禹晶, 肖创柏, 孙卫东. 基于多尺度非局部约束的单幅图像超分辨率算法. 自动化学报, 2014, 40(10): 2233−2244Pan Zong-Xu, Yu Jing, Xiao Chuang-Bai, Sun Wei-Dong. Single-image super-resolution algorithm based on multi-scale nonlocal regularization. Acta Automatica Sinica, 2014, 40(10): 2233−2244 [52] Sajjadi M S M, Scholkopf B, Hirsch M. EnhanceNet: Single image super-resolution through automated texture synthesis. In: Proceedings of the 2016 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2016. 4501−4510 [53] Bei Y J, Damian A, Hu S J, Menon S, Ravi N, Rudin C. New techniques for preserving global structure and denoising with low information loss in single-image super-resolution. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. Salt Lake City, USA: IEEE, 2018. 987−994 [54] Wang Z H, Chen J, Hoi S C H. Deep learning for image super-resolution: A survey. arXiv:1902.06068, 2019 [55] Haut J M, Fernandez-Beltran R, Paoletti M E, Plaza J, Plaza A, Pla F. A new deep generative network for unsupervised remote sensing single-image super-resolution. IEEE Transactions on Geoscience and Remote sensing, 2018, 56(11): 6792−6810 doi: 10.1109/TGRS.2018.2843525 [56] Liu H, Fu Z L, Han J G, Shao L, Liu H S. Single satellite Imagery simultaneous super-resolution and colorization using multi-task deep neural networks. Journal of Visual Communication & Image Representation, 2018, 53: 20−30 [57] Chen Y, Tai Y, Liu X M, Shen C H, Yang J. FSRNet: End-to-end learning face super-resolution with facial priors. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: CVPR, 2018. 2492−2501 [58] Bulat A, Tzimiropoulos G. Super-FAN: Integrated facial landmark localization and super-resolution of real-world low resolution faces in arbitrary poses with GANs. In: Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, USA: IEEE, 2018. 109−117 [59] Yu X, Fernando B, Ghanem B, Poriklt F, Hartley R. Face super-resolution guided by facial component heatmaps. In: Proceedings of 2018 ECCV European Conference on Computer Vision. Munich, Germany: Springer, 2018. 219−235 -

下载:

下载: