
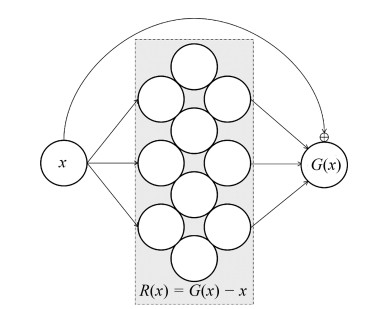

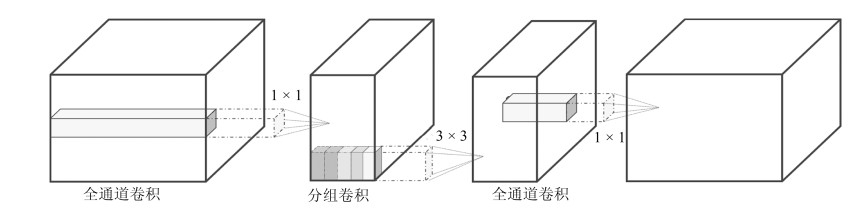

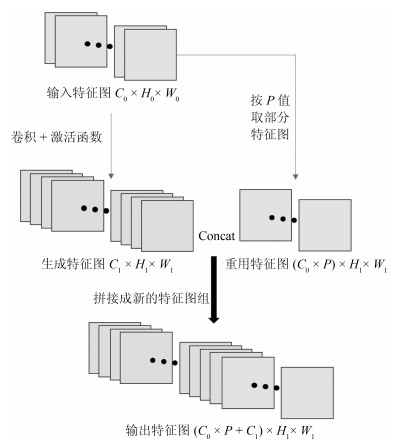

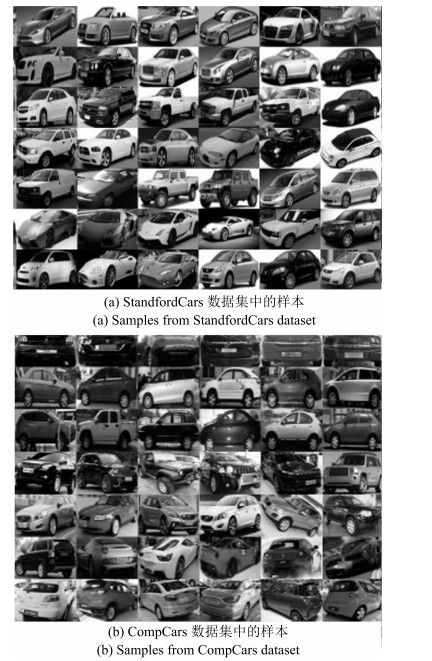

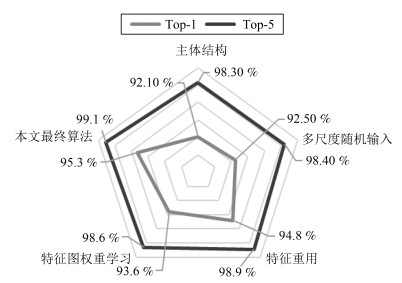

Abstract: The key to fine-grained car model recognition is extracting discriminative detail features. Centered on "feature reuse" and aimed at extracting detail features from car images effectively and using them efficiently, this paper proposes FR-ResNet (improved ResNet focusing on feature reuse), a deep convolutional neural network model based on feature reuse in residual networks. Built on the ResNet residual structure, FR-ResNet realizes feature reuse through three strategies: multi-scale input, reuse of low-level features in high-level layers, and weight learning of feature maps. Multi-scale input can prevent the performance degradation caused by an overly deep network and can keep training from falling into a local optimum. Applying different degrees of feature reuse to the different parts of the network strengthens feature propagation, uses features efficiently, and reduces the number of parameters. Applying feature-map weight learning in the middle and low-level parts of the network effectively suppresses the proportion of redundant features. Experiments on the public vehicle datasets CompCars and StanfordCars, with comparisons against other network models, show that FR-ResNet is robust to vehicle pose changes and complex background interference in fine-grained car model recognition and achieves high recognition accuracy.

Key words:

- Fine-grained classification of car models

- convolutional neural network (CNN)

- residual structure

- feature reuse

1) Recommended by Associate Editor BAI Xiang

Table 1 Comparison of classification results on the StanfordCars dataset (%)

Table 2 Comparison of classification results on the CompCars dataset (%)

Table 3 Comparison of classification results on small training sample sets from the CompCars dataset (%)

| Model | Small sample set $A$, Top-1 accuracy | Small sample set $A$, Top-5 accuracy | Small sample set $B$, Top-1 accuracy | Small sample set $B$, Top-5 accuracy |
| --- | --- | --- | --- | --- |
| GoogLeNet | 65.8 | 87.9 | 52.3 | 76.7 |
| ResNet | 78.3 | 93.5 | 69.7 | 82.3 |
| DenseNet | 90.6 | 98.0 | 81.5 | 90.1 |
| FR-ResNet | 92.5 | 98.4 | 85.2 | 93.8 |
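The Top-1 and Top-5 accuracies reported in Table 3 follow the usual definition: a prediction counts as correct when the true class is among the k highest-scoring classes. The following is a minimal sketch of that metric in plain Python; the function name `top_k_accuracy` and the toy scores are illustrative assumptions and are not taken from the paper.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]],
                   labels: Sequence[int], k: int = 1) -> float:
    """Percentage of samples whose true label is among the k top-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not scores:
        raise ValueError("at least one sample is required")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the best k.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in ranked
    return 100.0 * hits / len(labels)


# Toy scores for 4 images over 3 hypothetical car-model classes.
toy_scores = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.5, 0.4, 0.1],
    [0.2, 0.5, 0.3],
]
toy_labels = [0, 1, 1, 2]

print(top_k_accuracy(toy_scores, toy_labels, k=1))
print(top_k_accuracy(toy_scores, toy_labels, k=2))
```

With 3 toy classes, k=2 stands in for the Top-5 setting used on the real datasets.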

Table 4 Effect of feature reuse ratio $P$ on recognition accuracy

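| Stage | $P$ | Accuracy (%) |
| --- | --- | --- |
| Stage 1 | 1/64 | 93.6 |
| Stage 1 | 1/16 | 93.7 |
| Stage 1 | 1/8 | 93.4 |
| Stage 2 | 1/64 | 93.6 |
| Stage 2 | 1/16 | 94.1 |
| Stage 2 | 1/8 | 93.9 |
| Stage 3 | 1/64 | 94.3 |
| Stage 3 | 1/32 | 94.6 |
| Stage 3 | 3/64 | 94.2 |
| Stage 3 | 1/16 | 94.2 |
| Stage 3 | 1/8 | 94.0 |
| Stage 4 | 1/64 | 94.6 |
| Stage 4 | 1/16 | 94.8 |
| Stage 4 | 1/8 | 94.5 |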
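To make the ratios in Table 4 concrete, the sketch below converts a reuse ratio $P$ into a channel count. It rests on two assumptions that this page does not confirm: that $P$ is the fraction of a stage's feature-map channels carried forward for reuse, and that a stage has 256 channels (`STAGE_CHANNELS` is a hypothetical value). The helper `reused_channels` is likewise illustrative only.

```python
from fractions import Fraction


def reused_channels(total_channels: int, p: Fraction) -> int:
    """Number of channels forwarded for reuse when a fraction p is kept (assumed meaning of P)."""
    if not 0 < p <= 1:
        raise ValueError("p must lie in (0, 1]")
    # Truncate to a whole channel count, but always keep at least one channel.
    return max(1, int(total_channels * p))


# Hypothetical stage width; the real channel counts are not listed on this page.
STAGE_CHANNELS = 256

for p in (Fraction(1, 64), Fraction(1, 16), Fraction(1, 8)):
    kept = reused_channels(STAGE_CHANNELS, p)
    print(f"P = {p}: {kept} of {STAGE_CHANNELS} channels reused")
```

Under these assumptions, even the largest ratio in the table forwards only a small share of the channels, which is consistent with the abstract's claim that feature reuse reduces the parameter count.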

Table 5 Comparison results of pooling strategies in weight learning (%)

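| Pooling strategy | Top-1 accuracy |
| --- | --- |
| Global average pooling | 93.3 |
| Global max pooling | 92.3 |
| Local average pooling | 92.5 |
| Local max pooling | 92.6 |
| Global average + local average | 93.4 |
| Global average + local max | 93.6 |
| Global max + local average | 93.1 |
| Global max + local max | 92.6 |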
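The four basic pooling operators compared in Table 5 can be sketched on a single 2-D feature map as follows. This is a plain-Python illustration, not the authors' implementation: the window size of 2, the non-overlapping stride, and all function names are assumptions, and the way the paper combines a global descriptor with a local one is not described on this page, so it is deliberately left out.

```python
from typing import Callable, List

FeatureMap = List[List[float]]


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def global_avg_pool(fmap: FeatureMap) -> float:
    """Average over every position of the feature map."""
    return mean([v for row in fmap for v in row])


def global_max_pool(fmap: FeatureMap) -> float:
    """Maximum over every position of the feature map."""
    return max(v for row in fmap for v in row)


def local_pool(fmap: FeatureMap, size: int,
               reduce_fn: Callable[[List[float]], float]) -> FeatureMap:
    """Reduce each non-overlapping size x size window with reduce_fn."""
    rows, cols = len(fmap), len(fmap[0])
    pooled = []
    for r in range(0, rows - size + 1, size):
        pooled_row = []
        for c in range(0, cols - size + 1, size):
            window = [fmap[r + i][c + j] for i in range(size) for j in range(size)]
            pooled_row.append(reduce_fn(window))
        pooled.append(pooled_row)
    return pooled


toy_map = [
    [1.0, 2.0, 0.0, 4.0],
    [3.0, 4.0, 2.0, 2.0],
    [0.0, 0.0, 8.0, 6.0],
    [2.0, 2.0, 6.0, 4.0],
]

print(global_avg_pool(toy_map))
print(global_max_pool(toy_map))
print(local_pool(toy_map, 2, mean))
print(local_pool(toy_map, 2, max))
```

Global pooling collapses a feature map to one number, while local pooling keeps a coarse spatial layout, which may be why Table 5 also evaluates pairs of one global and one local operator.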

