
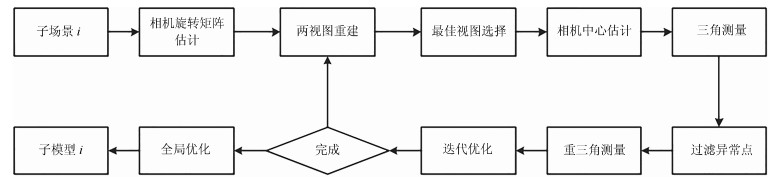

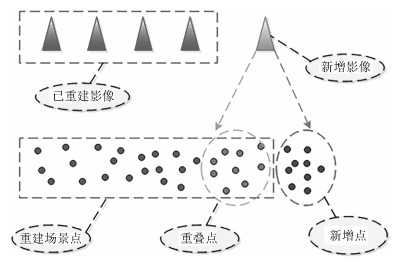

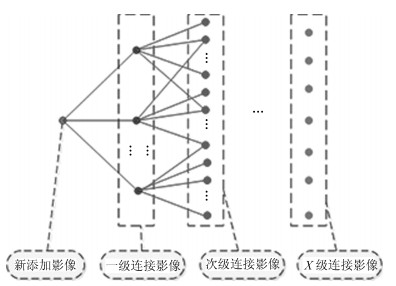

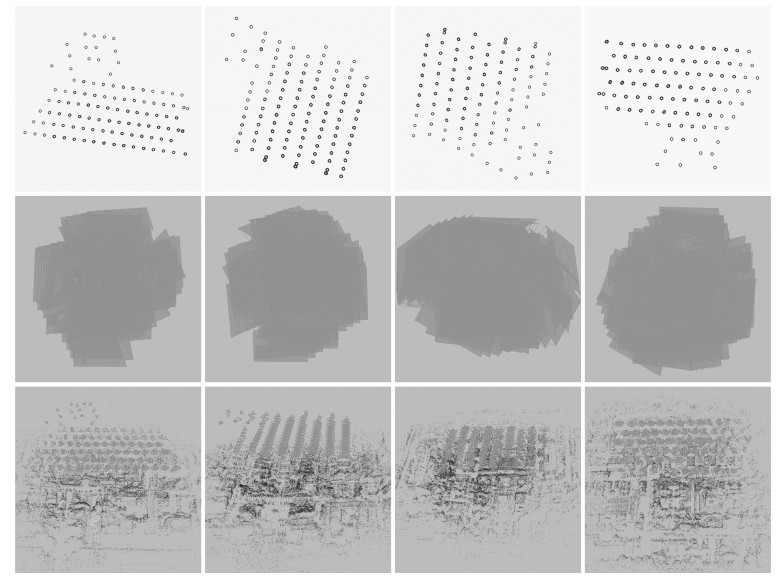

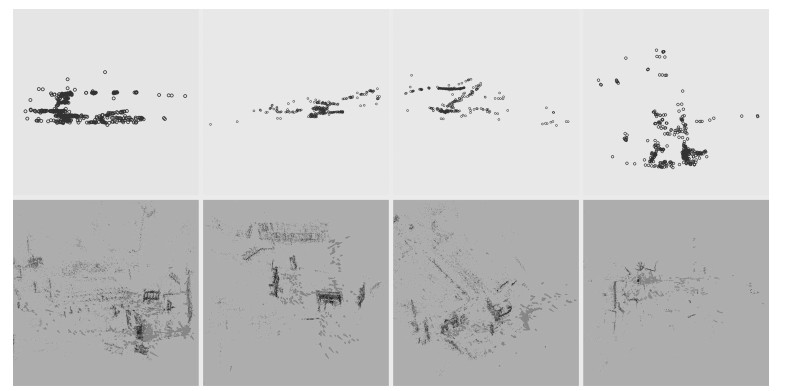

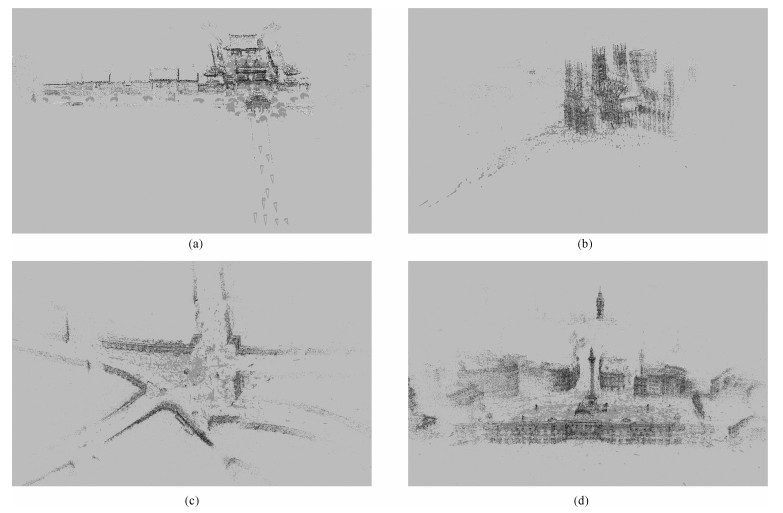

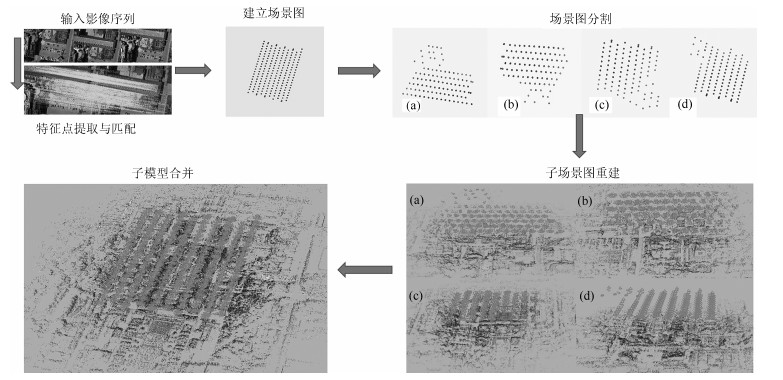

Abstract: Large-scale 3D reconstruction suffers from low efficiency and from poor stability and accuracy. To address these problems, a hybrid multi-view 3D reconstruction method for large scenes, based on scene graph partitioning, is proposed. First, the scene graph is partitioned with a multi-level weighted kernel K-means algorithm. Then, each sub-scene graph is reconstructed with a hybrid scheme to produce the corresponding sub-model. Scene graph partitioning, hybrid reconstruction, and local bundle adjustment (BA) improve efficiency and reduce the consumption of computing resources, while an improved best-image selection criterion, robust triangulation, and iterative optimization improve accuracy and robustness. Finally, all sub-models are merged to complete the large-scale reconstruction. The method was validated on images collected from the Internet and on UAV aerial images, and was compared with 1DSFM and HSFM in terms of accuracy and efficiency. The experimental results show that the proposed algorithm considerably improves computational efficiency and accuracy, preserves the completeness of the reconstructed model, and can reconstruct large-scale scenes on a single machine.
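The partitioning step named in the abstract can be illustrated with a minimal, single-level sketch of weighted kernel K-means on a graph. It is not the authors' implementation: the multi-level coarsening and refinement stages are omitted, and the choice of node weights (degrees), the kernel $\sigma D^{-1} + D^{-1} A D^{-1}$ (the normalized-cut setting), the function name `weighted_kernel_kmeans`, and its parameters are all assumptions made for illustration. Nodes stand for images and edge weights for some pairwise match strength.

```python
import numpy as np

def weighted_kernel_kmeans(affinity, init_labels, sigma=1.0, max_iter=50):
    """Partition graph nodes by weighted kernel k-means (normalized-cut setting).

    affinity    : symmetric (n, n) array of non-negative edge weights, zero diagonal
    init_labels : initial cluster index per node, values in 0..k-1
    sigma       : diagonal shift; a large enough value makes the kernel positive definite
    """
    A = np.asarray(affinity, dtype=float)
    labels = np.asarray(init_labels).copy()
    k = int(labels.max()) + 1
    w = A.sum(axis=1)  # node weight = degree (assumes no isolated nodes)
    K = sigma * np.diag(1.0 / w) + A / np.outer(w, w)  # sigma*D^-1 + D^-1 A D^-1
    diag_K = np.diag(K)
    for _ in range(max_iter):
        dist = np.full((A.shape[0], k), np.inf)
        for c in range(k):
            idx = np.flatnonzero(labels == c)
            if idx.size == 0:
                continue  # an emptied cluster stays empty in this sketch
            wc = w[idx]
            sc = wc.sum()
            cross = K[:, idx] @ wc / sc                       # <phi(i), m_c>
            within = wc @ K[np.ix_(idx, idx)] @ wc / sc ** 2  # <m_c, m_c>
            dist[:, c] = diag_K - 2.0 * cross + within        # squared distance to centroid
        new_labels = dist.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # assignments are stable
        labels = new_labels
    return labels

# Toy scene graph: two 4-image cliques joined by one weak edge (3-4).
A = np.zeros((8, 8))
for block in (range(0, 4), range(4, 8)):
    for i in block:
        for j in block:
            if i != j:
                A[i, j] = 1.0
A[3, 4] = A[4, 3] = 0.1

# Node 3 starts in the wrong cluster and is moved back by the first iteration.
labels = weighted_kernel_kmeans(A, init_labels=[0, 0, 0, 1, 1, 1, 1, 1])
print(labels)
```

In this toy case the weak edge is the one that gets cut, so each clique ends up as its own sub-scene. A practical partitioner would also need an initialization strategy and some overlap between sub-scenes so that the sub-models can be merged later.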


Key words:

- Machine vision
- 3D reconstruction
- scene graph partition
- kernel K-means
- iterative optimization
- hybrid reconstruction

1) Editor in charge of this paper: Wu Yi-Hong

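Table 1 Comparison of reconstruction results before and after merging

| Dataset | Sub-scene No. | $N_R$ (before merging) | $A_{RE}$ (before merging) | $N_R$ (after merging) | $A_{RE}$ (after merging) |
| --- | --- | --- | --- | --- | --- |
| Dayan Pagoda (大雁塔) | 1 | 296 | 0.416 | 1 045 | 0.455 |
| | 2 | 323 | 0.463 | | |
| | 3 | 268 | 0.451 | | |
| | 4 | 253 | 0.437 | | |
| Rome Forum | 1 | 470 | 0.577 | 1 475 | 0.572 |
| | 2 | 386 | 0.556 | | |
| | 3 | 355 | 0.583 | | |
| | 4 | 431 | 0.572 | | |

In the table, $N_R$ is the number of reconstructed images and $A_{RE}$ is the reprojection error (pixel).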

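Table 2 Comparison of 3D reconstruction results on different datasets using 1DSFM, HSFM, and the proposed algorithm (Ours)

| Dataset | $N_D$ | 1DSFM $N_R$ | 1DSFM $T_{A}$ | 1DSFM $A_{RE}$ | HSFM $N_R$ | HSFM $T_{A}$ | HSFM $A_{RE}$ | Ours $N_R$ | Ours $T_{A}$ | Ours $A_{RE}$ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Longquan Temple (龙泉寺) | 443 | 406 | 25.386 | 0.841 | 417 | 19.661 | 0.717 | 413 | 16.815 | 0.711 |
| Yorkminster | 3 368 | 1 176 | 93.910 | 0.736 | 1 472 | 64.726 | 0.628 | 1 712 | 65.803 | 0.607 |
| Piccadilly | 7 351 | 6 445 | 476.781 | 1.194 | 6 791 | 269.561 | 0.865 | 6 979 | 231.794 | 0.822 |
| Trafalgar | 15 683 | 9 384 | 765.685 | 1.173 | 11 943 | 481.857 | 0.837 | 12 741 | 438.443 | 0.816 |
| Xi'an Dayan Pagoda (西安大雁塔) | 1 045 | 1 043 | 58.357 | 0.617 | 1 045 | 31.637 | 0.510 | 1 045 | 26.946 | 0.455 |
| Dalian urban area (大连市区) | 4 900 | 4 900 | 327.625 | 1.397 | 4 900 | 231.394 | 1.478 | 4 900 | 197.872 | 1.353 |
| Urban area of an unnamed city (某城市市区) | 15 750 | NA | NA | NA | 15 745 | 845.632 | 1.359 | 15 750 | 785.451 | 1.211 |

In the table, $N_D$ is the number of images in the dataset, $N_R$ is the number of reconstructed images, $T_{A}$ is the reconstruction time (min), and $A_{RE}$ is the reprojection error (pixel).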
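The efficiency and completeness figures in Table 2 are easier to compare as ratios. The short script below is an illustrative calculation only: it copies $N_D$, the proposed method's $N_R$, and the HSFM and proposed-method $T_A$ values from the table, and derives the fraction of registered images and the relative time saving over HSFM. The dataset names are English renderings, and `TABLE2` and `summarize` are hypothetical names introduced here.

```python
# Values copied from Table 2: (N_D, N_R of Ours, T_A of HSFM in min, T_A of Ours in min).
TABLE2 = {
    "Longquan Temple": (443, 413, 19.661, 16.815),        # 龙泉寺
    "Yorkminster": (3368, 1712, 64.726, 65.803),
    "Piccadilly": (7351, 6979, 269.561, 231.794),
    "Trafalgar": (15683, 12741, 481.857, 438.443),
    "Xi'an Dayan Pagoda": (1045, 1045, 31.637, 26.946),   # 西安大雁塔
    "Dalian urban area": (4900, 4900, 231.394, 197.872),  # 大连市区
    "Unnamed city": (15750, 15750, 845.632, 785.451),     # 某城市市区
}

def summarize(table):
    """Return the registered-image fraction and time saving vs. HSFM per dataset."""
    out = {}
    for name, (n_d, n_r, t_hsfm, t_ours) in table.items():
        out[name] = {
            "registered": n_r / n_d,                    # share of images reconstructed
            "time_saving": (t_hsfm - t_ours) / t_hsfm,  # positive means faster than HSFM
        }
    return out

summary = summarize(TABLE2)
for name, row in summary.items():
    print(f"{name:20s} registered={row['registered']:.3f} "
          f"saving_vs_HSFM={row['time_saving']:+.1%}")
```

On these numbers the proposed method is faster than HSFM on every dataset except Yorkminster, where it is slightly slower while registering more images.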

Table 3 Partial error results for the Songshan area

| No. | $\Delta X$ | $\Delta Y$ | $\Delta Z$ | RMS |
| --- | --- | --- | --- | --- |
| 1 | 0.2727 | 0.2337 | 0.2512 | 0.4383 |
| 2 | 0.1222 | 0.1053 | 0.1597 | 0.3370 |
| 3 | 0.1725 | 0.3045 | 0.3623 | 0.5037 |
| 4 | 0.2045 | 0.3643 | 0.5239 | 0.6703 |
| 5 | 0.1380 | 0.1419 | 0.4578 | 0.4987 |

Table 4 Partial error results for the Quad dataset

| No. | $\Delta X$ | $\Delta Y$ | $\Delta Z$ | RMS |
| --- | --- | --- | --- | --- |
| 1 | 0.2727 | 0.2337 | 0.2512 | 0.4383 |
| 2 | 0.1222 | 0.1053 | 0.1597 | 0.3370 |
| 3 | 0.1725 | 0.3045 | 0.3623 | 0.5037 |
| 4 | 0.2045 | 0.3643 | 0.5239 | 0.6703 |
| 5 | 0.1380 | 0.1419 | 0.4578 | 0.4987 |
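The error tables do not define the RMS column. One plausible reading, stated here only as an assumption, is that it is the Euclidean norm of the three coordinate errors of each check point:

$$
\mathrm{RMS} = \sqrt{\Delta X^{2} + \Delta Y^{2} + \Delta Z^{2}}
$$

For row 1 this gives

$$
\sqrt{0.2727^{2} + 0.2337^{2} + 0.2512^{2}} = \sqrt{0.1921} \approx 0.4383,
$$

which matches the listed value. Rows 3, 4, and 5 agree with the same relation to within about 0.0002. Row 2 does not: the relation would give about 0.2270 rather than the listed 0.3370, so the definition should be treated as unconfirmed.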

