-

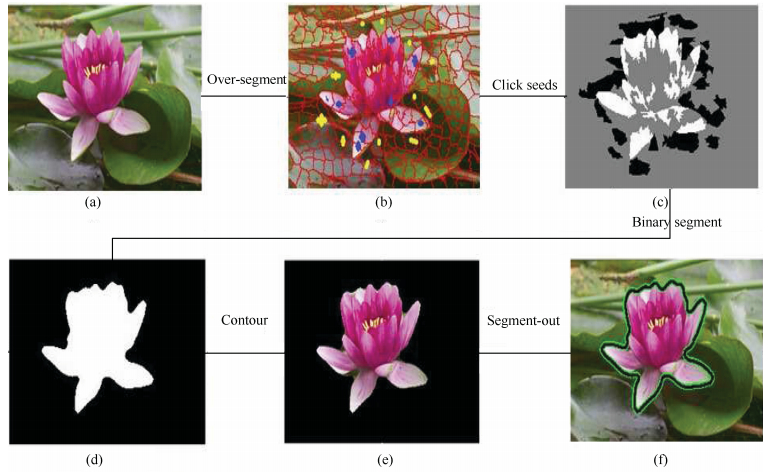

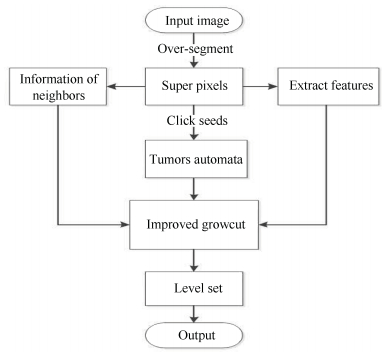

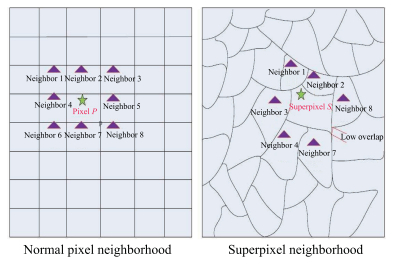

摘要: 交互式分割对于选取图像中感兴趣的对象很有意义.在图像处理领域中有着很重要的地位,具有广泛的应用,至今仍然是一个研究的热点问题.然而,逐像素执行交互式分割通常是耗时的.本文提出了一种新的分割方法.在Growcut算法框架下,提出基于超像素的肿瘤自动攻击(TA)分割.其中,超像素可以提供强大的边界信息来引导分割,且可以由过分割算法很容易来获取.TA与细胞自动攻击算法(CA)有着相似的原理,给定少量的用户标记目标的超像素,可以由TA完成目标分割任务,处理速度比Growcut算法快.此外,为获得最佳效果,应用了水平集和多层TA方法来进行边界的调整.在VOC挑战分割数据集上进行的实验表明,所提出的分割算法性能表现优异,高效和精准,且能处理多目标分割任务.Abstract: Interactive segmentation is useful for selecting object of interest in an image and it continues to be a popular topic. It plays an increasingly important role in image processing and has a wide range of applications. However, performing interactive segmentation pixel by pixel is normally time consuming. This paper presents a new method to improve the segmentation efficiency. The proposed method improves the growcut algorithm by utilizing the super-pixel-level tumors automata (TA), since the super-pixels can supply powerful boundary clues to guide segmentation and can be gathered easily by over-segmentation algorithm. The TA has the similar principle as cellular automata. Given a small number of user-tagged super-pixels, the rest of the image can be automatically segmented by TA. Due to the iterative strategy, user can observe that the processing is faster than the growcut. To obtain the best result, both level set and multi-layer TA approaches are applied. Experiments carried out on the VOC challenge segmentation dataset show that the proposed method achieves state-of-the-art performance.

-

Key words:

- Growcut /

- interactive segmentation /

- Super-pixel /

- tumors automata(TA)

-

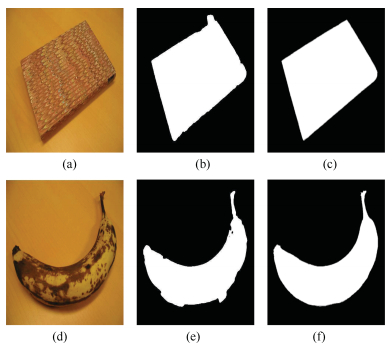

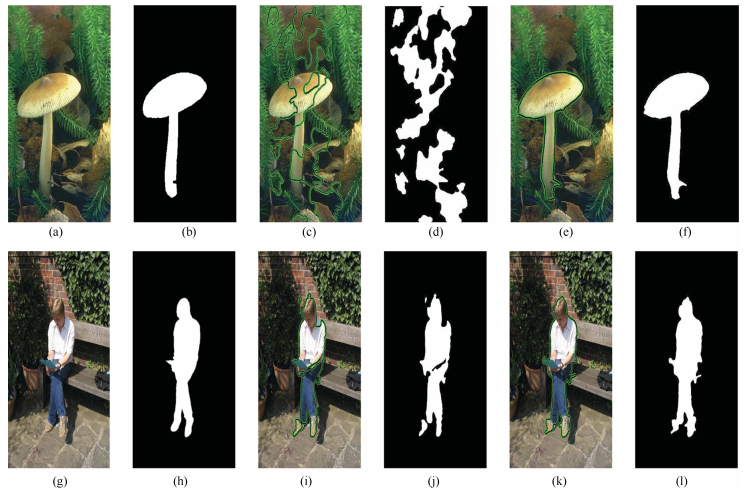

Fig. 10 The segmentation results with different neighborhood measure. (a), (g) and (b), (h) are the original images and the ground truth. (c), (i) and (d), (j) are the results obtained by using the feature space neighborhood measure. (e), (k) and (f), (l) are our results by using the new neighborhood measure.

Algorithm 1. Search super-pixel neighborhoods algorithm $//$ For each tumor, $ s$ is the super-pixel; $p$ is the pixel on the overlap boundary.

$//$ Neighborhood $S$ is the final neighborhoods information of each super-pixel.

for $\forall\, s\in{S}$ do

$//$ Search the nearest super-pixel

$Temp\_S \leftarrow {L}(s)$ $//$ record the number of the neighbor

$Temp\_P \leftarrow P(boundary)$ $//$ record the overlap pixel between

super-pixels

for $\forall\, s\in {L(s)}$ do

if $Temp\_P > threshold $ then

$Neighborhood\_S \leftarrow s$

end if

end for

end forAlgorithm 2. Tumors automata evolution rule $//$ For each tumor

for $\forall \, p\in P$ do

$//$ Copy previous state

$l^{t+1}=l^t$

$\theta_p^{t+1}=\theta_p^t$

$//$ Neighbors try to attack current tumor

for $\forall q\in N(p)$ do

if $g(\|\vec C_p-\vec C_q\|_2)\cdot \theta_q^t > \theta_p^t$ then

$l_p^{t+1}=l_q^t$

$\theta_p^{t+1}=g(\|\vec C_p - \vec C_q\|_2)\cdot \theta_q^t$

end if

end for

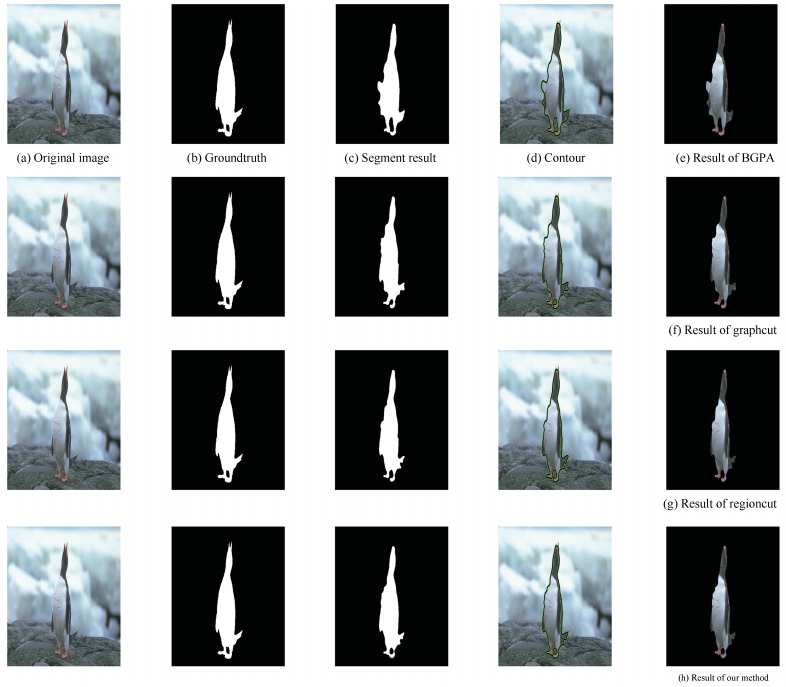

end forTable Ⅰ Accuracy Rates on the Harder Dataset [23] Based on Different Methods

Method Mean accuracy rate (%) Pixel-based Growcut 83.55 RW 85.23 Graph-cut 84.34 Super-pixel based BGPA 87.38 Regioncut 89.26 Our method 89.85 Table Ⅱ Comparison of Segmentation Efficiency

Methods Time of convergence (s) Iteration Loop number of single iteration Total time (s) Graph-cut 2.97 345 number of pixels of image ($180\times 271$) 3.28 Regioncut 1.45 169 number of pixels of image ($180\times 271$) 5.82 Proposed algorithm 1.03 14 number of pixels of image ($300$) 4.75 -

[1] V. Kolmogorov and R. Zabin, "What energy functions can be minimized via graph cuts, " IEEE Trans. Patt. Anal. Mach. Intell., vol. 26, no. 2, pp. 147-159, Feb. 2004. doi: 10.1109/TPAMI.2004.1262177 [2] M. A. G. Carvalho and A. L. Costa, "Combining hierarchical structures on graphs and normalized cut for image segmentation, " New Frontiers in Graph Theory, Y. G. Zhang, Ed. Rijeka, Yugoslavia: InTech Open Access Publisher, 2012. [3] J. Carreira and C. Sminchisescu, "Constrained parametric min-cuts for automatic object segmentation, " in Proc. 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 2010, pp. 3241-3248. [4] D. Kuettel and V. Ferrari, "Figure-ground segmentation by transferring window masks, " in Proc. 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 2012, pp. 558-565. [5] C. Rother, V. Kolmogorov, and A. Blake, "'grabcut':Interactive foreground extraction using iterated graph cuts, " ACM Trans. Graph., vol. 23, no. 3, pp. 309-314, Aug. 2004. doi: 10.1145/1015706 [6] X. Bai and G. Sapiro, "A geodesic framework for fast interactive image and video segmentation and matting, " University of Minnesota, Minnesota, USA, Tech. Rep. 2171, 2007. [7] L. Yu and C. S. Li, "Low depth of field image automatic segmentation based on graph cut, " J. Autom., no. 10, pp. 1471-1481, 2014. [8] O. Sener, K. Ugur, and A. A. Alatan, "Error-tolerant interactive image segmentation using dynamic and iterated graph-cuts, " in Proc. 2nd ACM International Workshop on Interactive Multimedia on Mobile and Portable Devices, New York, NY, USA, 2012, pp. 9-16. [9] L. Grady, "Random walks for image segmentation, " IEEE Trans. Patt. Anal. Mach. Intell., vol. 28, no. 11, pp. 1768-1783, Nov. 2006. doi: 10.1109/TPAMI.2006.233 [10] O. J. Arndt, B. Scheuermann, and B. Rosenhahn, "'Regioncut'-interactive multi-label segmentation utilizing cellular automaton, " in Proc. 2013 IEEE Workshop on Applications of Computer Vision (WACV), Tampa, FL, USA, 2013, pp. 309-316. [11] V. Vezhnevets and V. Konouchine, ""GrowCut": Interactive multi-label N-D image segmentation by cellular automata, " in Proc. Graphicon, Novosibirsk Akademgorodok, Russia, 2005, pp. 150-156. [12] J. Von Neumann and A. W. Burks, Theory of Selfreproducing Automata. Champaign, IL, USA:University of Illinois Press, 1966. [13] A. Blake, C. C. E. Rother, and P. Anandan, "Foreground extraction using iterated graph cuts, " U. S. Patent 7 660 463, Feb. 9, 2010. [14] R. Dondera, V. Morariu, Y. L. Wang, and L. Davis, "Interactive video segmentation using occlusion boundaries and temporally coherent superpixels, " in Proc. 2014 IEEE Winter Conference on Applications of Computer Vision (WACV), Steamboat Springs, CO, USA, 2014, pp. 784-791. [15] M. Ghafarianzadeh, M. B. Blaschko, and G. Sibley, "Unsupervised spatio-temporal segmentation with sparse spectral clustering, " in Proc. British Machine Vision Conference (BMVC), Nottingham, UK, 2014. [16] I. Gallo, A. Zamberletti, and L. Noce, "Interactive object class segmentation for mobile devices, " in Proc. 27th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Rio de Janeiro, Brazil, 2014, pp. 73-79. [17] Z. G. Li, X. M. Wu, and S. F. Chang, "Segmentation using superpixels: A bipartite graph partitioning approach, " in Proc. 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 2012, pp. 789-796. [18] R. Achanta, A. Shaji, K. Smith, A. Lucchi, P. Fua, and S. Süsstrunk, "Slic superpixels compared to state-of-the-art superpixel methods, " IEEE Trans. Patt. Anal. Mach. Intell., vol. 34, no. 11, pp. 2274-2282, Nov. 2012. doi: 10.1109/TPAMI.2012.120 [19] C. M. Li, C. Y. Xu, C. F. Gui, and M. D. Fox, "Distance regularized level set evolution and its application to image segmentation, " IEEE Trans. Image Process., vol. 19, no. 12, pp. 3243-3254, Dec. 2010. doi: 10.1109/TIP.2010.2069690 [20] P. L. Rosin, "Image processing using 3-state cellular automata, " Comp. Vision Image Understand., vol. 114, no. 7, pp. 790-802, Jul. 2010. doi: 10.1016/j.cviu.2010.02.005 [21] Y. Qin, H. C. Lu, Y. Q. Xu, and H. Wang, "Saliency detection via cellular automata, " in Proc. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 2015, pp. 110-119. [22] M. Everingham, L. Van Gool, C. K. I. Williams, J. Winn, and A. Zisserman, "The Pascal visual object classes challenge 2009 (VOC2009), " in Summary Presentation at the 2009 PASCAL VOC Workshop, 2009. [23] V. Gulshan, C. Rother, A. Criminisi, A. Blake, and A. Zisserman, "Geodesic star convexity for interactive image segmentation, " in Proc. 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 2010, pp. 3129-3136. [24] J. Santner, T. Pock, and H. Bischof, "Interactive multi-label segmentation, " in Asian Conference on Computer Vision, R. Kimmel, R. Klette, and A. Sugimoto, Eds. Berlin, Heidelberg, Germany: Springer, 2010, pp. 397-410. [25] Y. Y. Boykov and M. P. Jolly, "Interactive graph cuts for optimal boundary & region segmentation of objects in ND images, " in Proc. 8th IEEE International Conference on Computer Vision, Vancouver, BC, USA, vol. 1, pp. 105-112, Jul. 2001. [26] P. A. V. de Miranda, A. X. Falcáo, and J. K. Udupa, "Synergistic arc-weight estimation for interactive image segmentation using graphs, " Comp. Vision Image Understand., vol. 114, no. 1, pp. 85-99, Jan. 2010. doi: 10.1016/j.cviu.2009.08.001 -

下载:

下载: