Abstract:

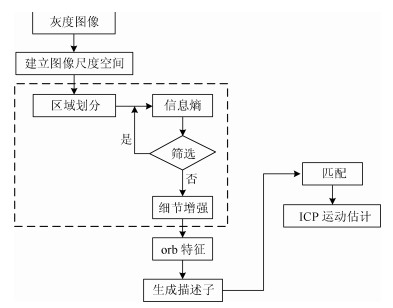

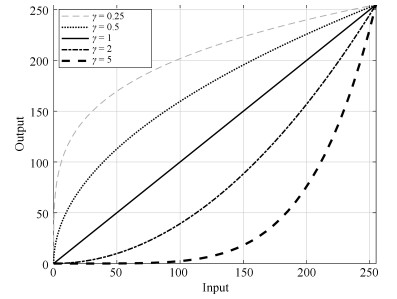

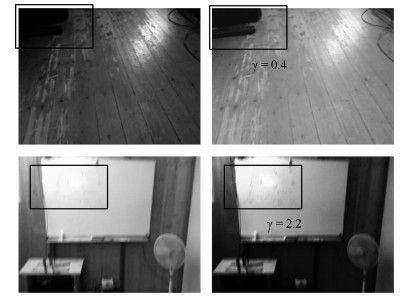

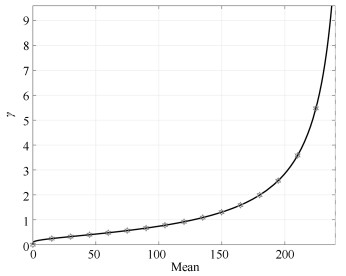

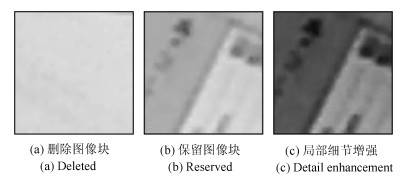

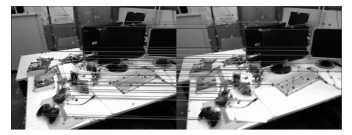

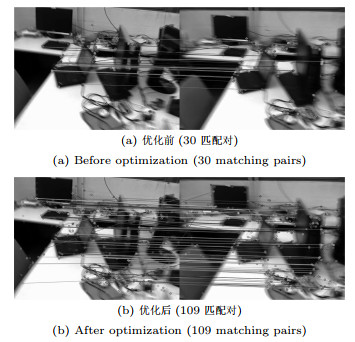

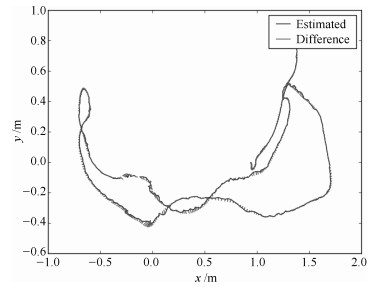

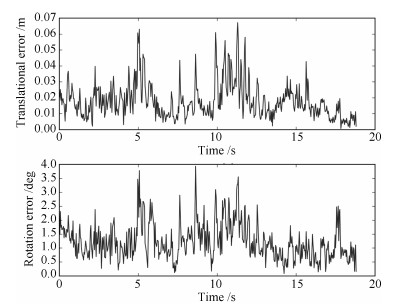

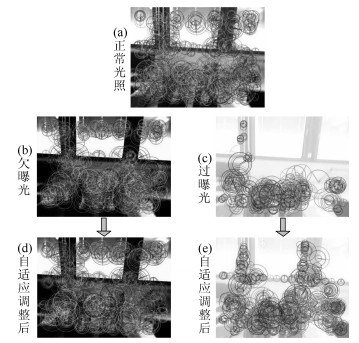

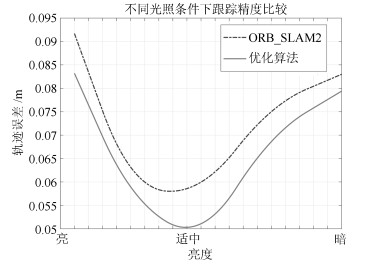

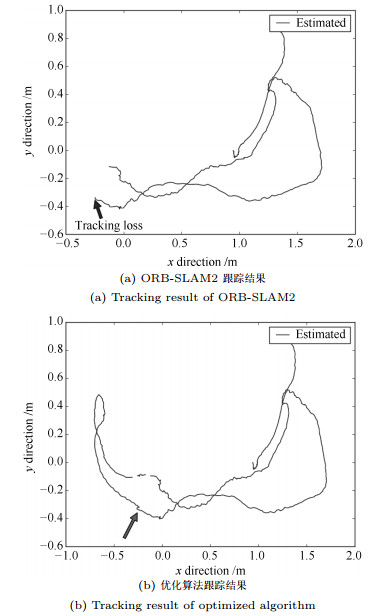

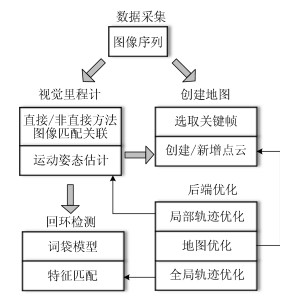

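To address inter-frame matching failure and tracking loss caused by large camera rotations in visual simultaneous localization and mapping for mobile robots, a detail-enhancing visual odometry optimization algorithm based on local image entropy is proposed. An image pyramid is built and each level is divided into image blocks for uniform feature extraction. The information content of each block is judged from its information entropy, and blocks with low contrast and small gradient variation are deleted, which reduces the cost of computing image feature points. Brightness-adaptive adjustment is applied to the retained blocks to enhance local image detail, so that as many local feature points representative of the image as possible are extracted and used as the association basis for adjacent-frame matching and keyframe matching. Pose graph optimization is then used to optimize the accumulated pose error locally and globally, further improving the performance of the mobile robot system. In tests on the TUM dataset, because the extracted features better reflect object texture and shape, the motion tracking success rate of the proposed algorithm rises to over 60 % at best, and the measured trajectory error, translation error, and rotation error are all reduced. Compared with the current ORB-SLAM2 system, the proposed algorithm improves the visual localization accuracy of the mobile robot and also meets the requirements of real-time SLAM applications.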

Abstract: To address matching failures and tracking loss caused by large camera rotations in simultaneous localization and mapping (SLAM) for mobile robots, an optimized detail-enhancement algorithm for visual odometry based on local image entropy is proposed. An image pyramid is built and each level is divided into blocks so that features are extracted uniformly. The information content of each image block is determined from its entropy value, and blocks with low contrast and small intensity gradients are discarded to reduce feature computation. Nonlinear, adaptive illumination adjustment is applied to each retained block to enhance local image detail. Local features that represent the image information are preserved as far as possible and serve as the correspondences between adjacent frames and between keyframes. Pose graph optimization is then used to correct the accumulated error locally and globally, further improving the performance of the mobile robot system. The proposed method is verified on the TUM dataset. Because the extracted features better reflect texture and shape, the maximum success rate of motion tracking rises to over 60 %. The results also show that the trajectory error, translational error, and rotational error all decrease. Compared with the original ORB-SLAM2 system, the method improves the visual positioning accuracy of the mobile robot while still meeting the requirements of real-time SLAM.
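The block-selection and brightness-adjustment steps summarized above can be illustrated with a minimal sketch. It is not the authors' implementation: the block size, the rule that keeps a block when its entropy is at least `mu` times the mean block entropy, and the gamma rule that moves a block's mean grey level to mid-grey are all assumptions made for illustration, as are the function names.

```python
import math
from collections import Counter


def block_entropy(block):
    """Shannon entropy (in bits) of the grey-level distribution of one block."""
    pixels = [p for row in block for p in row]
    n = len(pixels)
    return sum((c / n) * math.log2(n / c) for c in Counter(pixels).values())


def split_blocks(image, size):
    """Yield ((row, col), block) for each non-overlapping size x size block."""
    height, width = len(image), len(image[0])
    for r in range(0, height - size + 1, size):
        for c in range(0, width - size + 1, size):
            yield (r, c), [row[c:c + size] for row in image[r:r + size]]


def select_blocks(image, size, mu):
    """Keep blocks whose entropy is at least mu times the mean block entropy.

    Assumption: the abstract does not define the rule; this relative
    threshold is only one plausible reading.
    """
    scored = [(pos, block_entropy(b)) for pos, b in split_blocks(image, size)]
    mean_entropy = sum(h for _, h in scored) / len(scored)
    return [pos for pos, h in scored if h >= mu * mean_entropy]


def adaptive_gamma(block):
    """Gamma-correct a block so its mean grey level moves to mid-grey.

    Assumption: a hypothetical stand-in for the adaptive adjustment.
    """
    pixels = [p for row in block for p in row]
    mean = min(max(sum(pixels) / len(pixels), 1.0), 254.0)
    gamma = math.log(0.5) / math.log(mean / 255.0)
    return [[round(255.0 * (p / 255.0) ** gamma) for p in row] for row in block]


# Toy 4 x 4 image: three flat blocks and one textured block (top right).
image = [
    [10, 10, 0, 255],
    [10, 10, 128, 64],
    [10, 10, 5, 5],
    [10, 10, 5, 5],
]
kept = select_blocks(image, size=2, mu=0.5)
enhanced = {pos: adaptive_gamma(b)
            for pos, b in split_blocks(image, 2) if pos in kept}
```

In this toy image only the textured block survives the entropy test, so feature extraction would run on one block instead of four. A real pipeline would apply the same idea per pyramid level before running the feature detector.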

Key words:

- SLAM
- visual odometry
- sparse feature
- information entropy
- pose-graph optimization

1) Associate editor responsible for this paper: Wu Yi-Hong

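Table 1 Threshold selection

| fr1_desk | μ = 0.3 | μ = 0.4 | μ = 0.5 | μ = 0.6 | μ = 0.7 |
| --- | --- | --- | --- | --- | --- |
| Average processing time | 0.063 s | 0.064 s | 0.062 s | 0.063 s | 0.06 s |
| Absolute trajectory error | 0.0156 m | 0.0156 m | 0.0153 m | 0.0163 m | 0.0165 m |
| Relative translation error | 0.0214 m | 0.0215 m | 0.0209 m | 0.0216 m | 0.0218 m |
| Relative rotation error | 1.455° | 1.426° | 1.412° | 1.414° | 1.39° |
| Tracking success probability | 50 % | 40 % | 62 % | 30 % | 25 % |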

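Table 2 Trajectory analysis results

| Image sequence | Method | Average processing time | Absolute trajectory error | Relative translation error | Relative rotation error |
| --- | --- | --- | --- | --- | --- |
| fr1_desk | ORB-SLAM2 | 0.036 s | 0.0176 m | 0.0241 m | 1.532° |
| fr1_desk | Optimized algorithm | 0.062 s | 0.0153 m | 0.0209 m | 1.412° |
| fr1_360 | ORB-SLAM2 | 0.030 s | 0.2031 m | 0.1496 m | 3.806° |
| fr1_360 | Optimized algorithm | 0.048 s | 0.1851 m | 0.1313 m | 3.635° |
| fr1_floor | ORB-SLAM2 | 0.028 s | 0.0159 m | 0.0133 m | 0.955° |
| fr1_floor | Optimized algorithm | 0.051 s | 0.0138 m | 0.0126 m | 0.979° |
| fr1_room | ORB-SLAM2 | 0.037 s | 0.0574 m | 0.0444 m | 1.859° |
| fr1_room | Optimized algorithm | 0.057 s | 0.047 m | 0.0441 m | 1.797° |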
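To make the trade-off in Table 2 easier to read, the following sketch computes the relative reduction in absolute trajectory error and the processing-time ratio for each sequence. The numbers are copied from Table 2; the helper name `reduction_percent` and the choice of these two summary quantities are illustrative assumptions rather than metrics reported by the authors.

```python
# Per sequence: (ORB-SLAM2, optimized algorithm), values copied from Table 2.
absolute_trajectory_error_m = {
    "fr1_desk": (0.0176, 0.0153),
    "fr1_360": (0.2031, 0.1851),
    "fr1_floor": (0.0159, 0.0138),
    "fr1_room": (0.0574, 0.047),
}
processing_time_s = {
    "fr1_desk": (0.036, 0.062),
    "fr1_360": (0.030, 0.048),
    "fr1_floor": (0.028, 0.051),
    "fr1_room": (0.037, 0.057),
}


def reduction_percent(baseline, improved):
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


for seq, (base_err, opt_err) in absolute_trajectory_error_m.items():
    base_t, opt_t = processing_time_s[seq]
    print(f"{seq}: trajectory error reduced by "
          f"{reduction_percent(base_err, opt_err):.1f} %, "
          f"processing time x{opt_t / base_t:.2f}")
```

On these figures the trajectory error falls by roughly 9 % to 18 % depending on the sequence, while the average processing time per frame rises by a factor of about 1.5 to 1.8.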

