
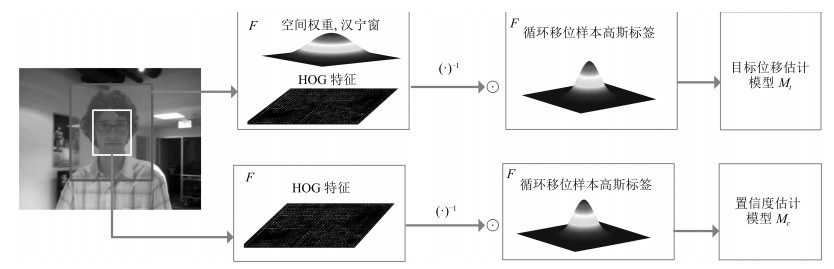

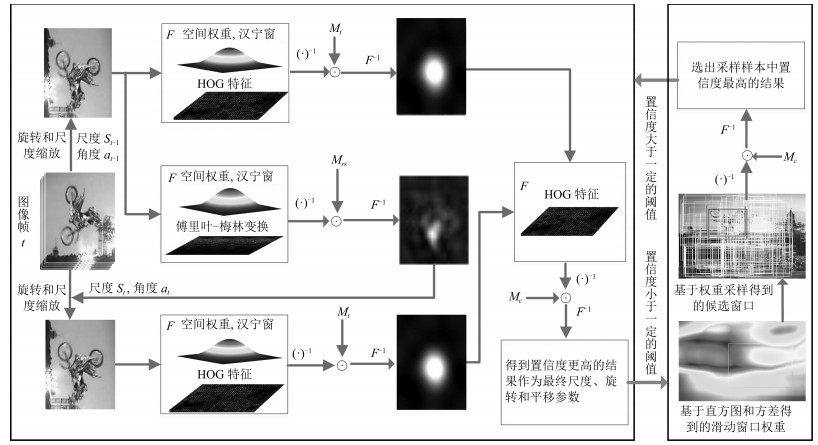

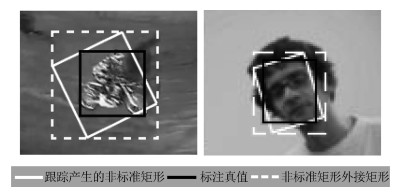

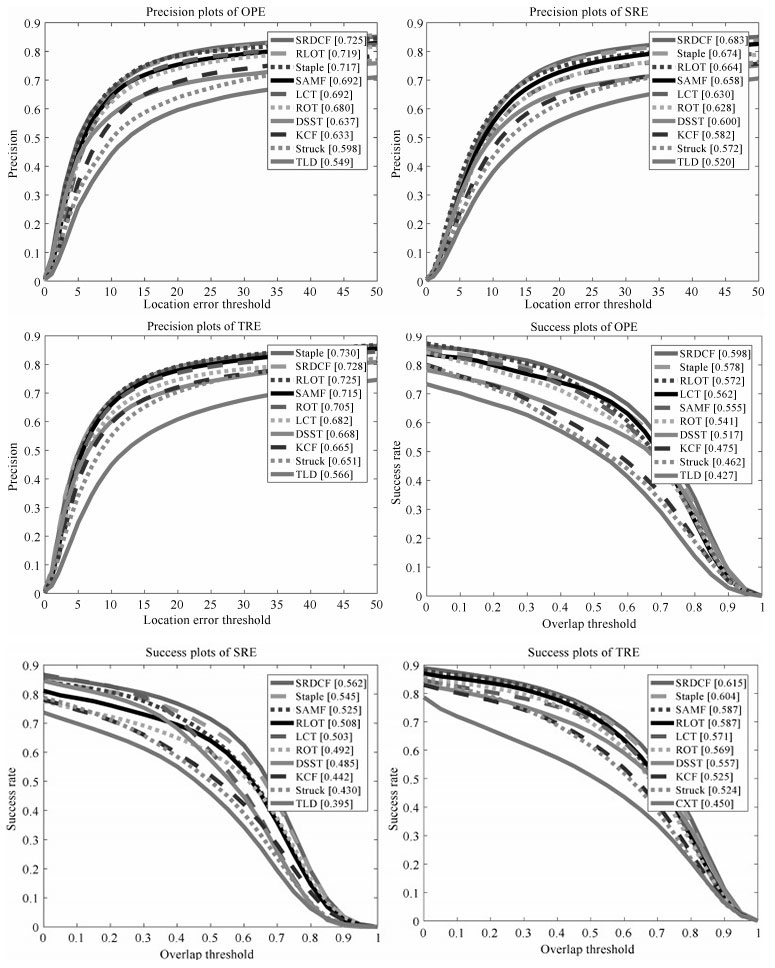

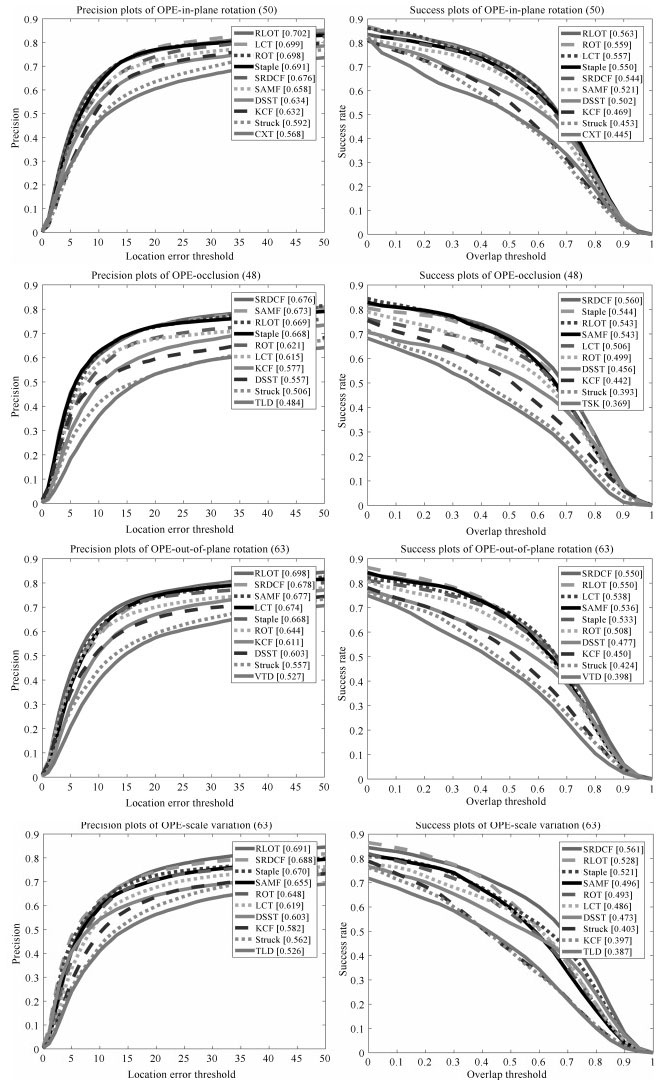

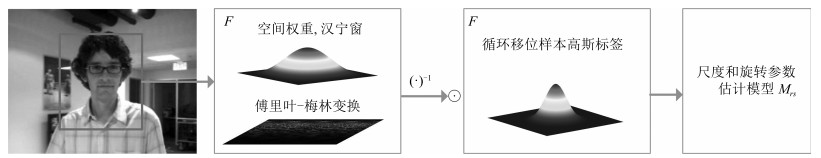

Abstract: Scale and rotation changes of the target pose a major challenge for long-term object tracking. To address this problem, this paper proposes a robust long-term object tracking algorithm that adapts to scale and rotation. First, to handle the scale changes and rotational motion of the target during tracking, a scale and rotation parameter estimation method is proposed based on the Fourier-Mellin transform and the kernelized correlation filter. The method estimates the scale and rotation parameters in continuous space, and the kernelized correlation filter improves the robustness and accuracy of the estimate. Second, because tracking failures are sometimes unavoidable in long-term tracking (for example, due to prolonged partial or full occlusion), a target search method based on histogram and variance weighting is proposed. When the target is lost, the search method quickly identifies the image regions where the target may be located, so the tracker can recover from failure. In addition, two further kernelized correlation filters are trained to estimate the target translation and the confidence of the tracking result. Using a dedicated kernelized correlation filter makes the confidence estimate more accurate and robust. The confidence is used to activate the histogram- and variance-weighted search module and to decide whether a search window contains the target. The proposed algorithm is compared with state-of-the-art tracking algorithms on the online object tracking benchmark (OTB), and the experimental results validate its effectiveness and superiority.

1) Recommended by Associate Editor Zuo Wang-Meng
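As background for the scale and rotation estimation step named in the abstract, the textbook Fourier-Mellin property can be written out as follows. This is a sketch of the standard relation used in FFT-based image registration, not necessarily the exact formulation of the paper; the symbols $f$, $g$, $a$, $\theta_0$, $(x_0, y_0)$, $\rho$, $\theta$ and $\lambda$ are notation assumed here for illustration.

Let $g$ be a scaled, rotated and translated copy of $f$:

$$
g(x, y) = f\big(a(x\cos\theta_0 + y\sin\theta_0) - x_0,\; a(-x\sin\theta_0 + y\cos\theta_0) - y_0\big).
$$

Translation only affects the phase of the Fourier transform, so the magnitude spectra $|F|$ and $|G|$, written in polar frequency coordinates $(\rho, \theta)$, satisfy

$$
|G(\rho, \theta)| = a^{-2}\,\big|F(\rho / a,\; \theta - \theta_0)\big|.
$$

With the logarithmic radius $\lambda = \log\rho$, this becomes a pure shift:

$$
|G(\lambda, \theta)| = a^{-2}\,\big|F(\lambda - \log a,\; \theta - \theta_0)\big|.
$$

Under these assumptions, the scale factor $a$ and the rotation angle $\theta_0$ appear as a translation $(\log a, \theta_0)$ in log-polar coordinates, which is the kind of displacement a correlation filter can estimate.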


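Table 1 Parameters of the three kernelized correlation filters

| Parameter | Translation estimation filter | Confidence estimation filter | Scale and rotation estimation filter |
| --- | --- | --- | --- |
| Gaussian kernel width $\sigma$ | 0.6 | 0.6 | 0.4 |
| Learning rate $\beta$ | 0.012 | 0.012 | 0.075 |
| Gaussian label width $s$ | $0.125\sqrt{mn}$ | $0.125\sqrt{mn}$ | $0.075(d, a)$ |
| Regularization parameter $\alpha$ | $10^{-4}$ | $10^{-4}$ | $5^{-5}$ |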
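To show where the parameters in Table 1 would enter a kernelized correlation filter, here is a minimal sketch using the standard single-channel KCF formulation with a Gaussian kernel. It is an illustration under assumptions, not the paper's implementation: the function names are hypothetical, $m \times n$ is assumed to be the patch size in pixels, and the label peak is placed at the patch center for readability.

```python
import numpy as np

# Translation-filter values from Table 1; the dictionary keys are hypothetical names.
TRANSLATION_PARAMS = {"sigma": 0.6, "beta": 0.012, "label_factor": 0.125, "alpha": 1e-4}


def gaussian_label(m, n, label_factor):
    """Gaussian regression target of width s = label_factor * sqrt(m * n).

    Assumption: m and n are the patch height and width; the peak sits at
    index (m // 2, n // 2).
    """
    s = label_factor * np.sqrt(m * n)
    rows = np.arange(m) - m // 2
    cols = np.arange(n) - n // 2
    rr, cc = np.meshgrid(rows, cols, indexing="ij")
    return np.exp(-0.5 * (rr ** 2 + cc ** 2) / s ** 2)


def gaussian_kernel_correlation(x, z, sigma):
    """Gaussian kernel evaluated between x and every cyclic shift of z."""
    # Circular cross-correlation for all shifts, computed via the FFT.
    cross = np.real(np.fft.ifft2(np.fft.fft2(x) * np.conj(np.fft.fft2(z))))
    # Squared distance per shift, normalized by the number of elements.
    d2 = np.maximum(np.sum(x ** 2) + np.sum(z ** 2) - 2.0 * cross, 0.0) / x.size
    return np.exp(-d2 / sigma ** 2)


def train(x, y, sigma, alpha):
    """Ridge-regression solution in the Fourier domain (alpha = regularization)."""
    k = gaussian_kernel_correlation(x, x, sigma)
    return np.fft.fft2(y) / (np.fft.fft2(k) + alpha)


def update(old, new, beta):
    """Linear-interpolation model update with learning rate beta."""
    return (1.0 - beta) * old + beta * new
```

In this sketch, a larger learning rate such as the 0.075 listed for the scale and rotation filter makes the model follow recent frames more closely, while 0.012 keeps the translation and confidence models more conservative.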

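Table 2 Visual tracking challenges included in the 11 image sequences selected from the OTB dataset (1 = present, 0 = absent)

| Image sequence | Illumination variation | Out-of-plane rotation | Scale variation | Partial occlusion | Deformation | Motion blur | Fast motion | In-plane rotation | Out of view | Background clutter | Low resolution |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| David | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 0 | 0 |
| CarScale | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 |
| Dog1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| FaceOcc2 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| Jogging-2 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| Lemming | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 |
| MotorRolling | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 0 | 1 | 1 |
| Shaking | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |
| Singer2 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 |
| Tiger1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 |
| Soccer | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 1 | 0 |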

Table 3 Average frame rates of different object tracking algorithms

| Tracking algorithm | Frame rate (frames per second) |
| --- | --- |
| RLOT | 36 |
| SRDCF | 4 |
| LCT | 27.4 |
| KCF | 167 |
| DSST | 27 |
| TLD | 20 |
| Struck | 28 |

